Abstract

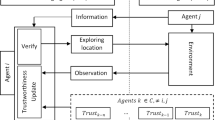

Computational trust and reputation models are key elements in the design of open multi-agent systems. They offer a means of evaluating and reducing the risks of cooperation in the presence of uncertainty. However, the models proposed in the literature do not consider the costs they introduce or how they are affected by environmental aspects. In this paper, a cognitive meta-model for adaptive trust and reputation in open multi-agent systems is presented. It acts as a complement to a non-adaptive model by allowing the agent to reason about it and react to changes in the environment. We demonstrate how the meta-model can be applied to existing models proposed in the literature by adjusting the model’s parameters. Finally, we propose evaluation criteria to drive meta-level reasoning, considering the costs involved when employing trust and reputation models in dynamic environments.

References

Bordini, R. H., Hübner, J. F., & Wooldridge, M. (2007). Programming multi-agent systems in AgentSpeak using Jason. Hoboken: Wiley.

Burnett, C., Norman, T., & Sycara, K. (2010). Bootstrapping trust evaluations through stereotypes. In Proceedings of the 9th International Conference on Autonomous Agents and Multiagent Systems (Vol. 1, no. 1, pp. 241–248).

Castelfranchi, C., & Falcone, R. (2001). Social trust: A cognitive approach. In C. Castelfranchi & Y. H. Tan (Eds.), Trust and deception in virtual societies (pp. 55–90). Dordrecht: Kluwer Academic Publishers.

Dignum, V., Vázquez-Salceda, J., & Dignum, F. (2005). Omni: Introducing social structure, norms and ontologies into agent organizations. In Programming Multi-Agent Systems (pp. 181–198). Berlin: Springer.

Esfandiari, B., & Chandrasekharan, S. (2001). On how agents make friends: Mechanisms for trust acquisition. In Proceedings of the Fourth Workshop on Deception, Fraud and Trust in Agent Societies (Vol. 222).

Ferber, J., & Gutknecht, O. (1998). A meta-model for the analysis and design of organizations in multi-agent systems. In International Conference on Multi Agent Systems (pp. 128–135). IEEE Comput. Soc.

Fullam, K.K. (2007). Adaptive trust modeling in multi-agent systems: Utilizing experience and reputation. Doctoral thesis, University of Texas.

Fullam, K.K., & Barber, K.S. (2007). Dynamically Learning Sources of Trust Information: Experience vs. Reputation. In Proceedings of the 6th International Conference on Autonomous Agents and Multiagent Systems (Vol. 5, pp. 1055–1062).

Griffiths, N. (2005). Task delegation using experience-based multi-dimensional trust. In Proceedings of the Fourth International Joint Conference on Autonomous Agents and Multiagent Systems—AAMAS’05, pp. 489–496. New York, NY: ACM.

Griffiths, N. (2006). A fuzzy approach to reasoning with trust, distrust and insufficient trust. In M. Klusch, M. Rovatsos, & T. R. Payne (Eds.), Cooperative Information Agents X (Lecture Notes in Computer Science, Vol. 4149, pp. 360–374). Berlin: Springer.

Hermoso, R., Billhardt, H., & Ossowski, S. (2010). Role evolution in open multi-agent systems as an information source for trust. In Proceedings of the 9th International Conference on Autonomous Agents and Multiagent Systems (Vol. 1, pp. 217–224).

Huynh, T.D. (2006). Trust and reputation in open multi-agent systems. Doctoral thesis, University of Southampton.

Huynh, T., & Jennings, N. (2006). Certified reputation: How an agent can trust a stranger. In The Fifth International Joint Conference on Autonomous Agents and Multiagent Systems (pp. 1217–1224).

Huynh, T. D., Jennings, N. R., & Shadbolt, N. R. (2006). An integrated trust and reputation model for open multi-agent systems. Autonomous Agents and Multi-Agent Systems, 13(2), 119–154.

Jøsang, A., & Ismail, R. (2002). The beta reputation system. In Proceedings of the 15th Bled Electronic Commerce Conference. Bled, Slovenia.

Jøsang, A., Ismail, R., & Boyd, C. (2007). A survey of trust and reputation systems for online service provision. Decision Support Systems, 43(2), 618–644.

Keung, S. N. L. C., & Griffiths, N. (2010). Trust and reputation. In N. Griffiths & K.-M. Chao (Eds.), Agent-Based Service-Oriented Computing (Chap. 8, pp. 189–224). London: Springer.

Kinateder, M., Baschny, E., & Rothermel, K. (2005). Towards a generic trust model—Comparison of various trust update algorithms. In Proceedings of the Third International Conference on Trust Management (iTrust2005). Berlin: Springer.

Koster, A., Sabater-Mir, J., & Schorlemmer, M. (2010). Engineering trust alignment: A first approach. In 13th Workshop on Trust in Agents Societies at AAMAS 2010 (pp. 111–122).

Koster, A., Schorlemmer, M., & Sabater-Mir, J. (2012). Opening the black box of trust: Reasoning about trust models in a BDI agent. Journal of Logic and Computation, 23(1), 25–58. doi:10.1093/logcom/exs003.

Marsh, S.P. (1994). Formalising trust as a computational concept. Doctoral thesis, University of Stirling, UK.

Nardin, L. G., Brandão, A. A. F., Sichman, J. S., & Vercouter, L. (2008). SOARI: a service-oriented architecture to support agent reputation models interoperability. In R. Falcone, S. K. Barber, J. Sabater-Mir, & M. P. Singh (Eds.), Trust in Agent Societies (Lecture Notes in Computer Science, Vol. 5396). Berlin: Springer.

Pinyol, I., & Sabater-Mir, J. (2011). Computational trust and reputation models for open multi-agent systems: A review. Artificial Intelligence Review, 40, 1–25.

Raja, A., & Lesser, V. (2007). A framework for meta-level control in multi-agent systems. Autonomous Agents and Multi-Agent Systems, 15(2), 147–196.

Rao, A.S. (1996). AgentSpeak (L): BDI agents speak out in a logical computable language. In 7th European Workshop on Modelling Autonomous Agents in a Multi-Agent World (pp. 42–55).

Rao, A.S., & Georgeff, M.P. (1995). BDI agents: From theory to practice. In First International Conference on Multi-Agent Systems (ICMAS-95).

Regan, K., Poupart, P., & Cohen, R. (2006). Bayesian reputation modeling in e-marketplaces sensitive to subjectivity, deception and change. In Proceedings of the 21st National Conference on Artificial Intelligence (pp. 1206–1212).

Russell, S., & Norvig, P. (eds.). (2002). Artificial Intelligence: A Modern Approach, 2nd edn. Englewood Cliffs, NJ: Prentice Hall.

Sabater, J. (2002). Trust and reputation for agent societies. Doctoral thesis, Universitat Autònoma de Barcelona, Spain.

Sabater, J., & Sierra, C. (2005). Review on computational trust and reputation models. Artificial Intelligence Review, 24(1), 33–60.

Schillo, M., Funk, P., & Rovatsos, M. (2000). Using Trust for Detecting Deceitful Agents in Artificial Societies. Applied Artificial Intelligence (Special Issue on Trust, Deception and Fraud in Agent Societies).

Seidewitz, E. (2003). What models mean. IEEE Software, 20(5), 26–32.

Şensoy, M., Zhang, J., Yolum, P., & Cohen, R. (2009). Poyraz: context-aware service selection under deception. Computational Intelligence, 25(4), 335–366.

Staab, E., & Muller, G. (2012). MITRA: A Meta-Model for Information Flow in Trust and Reputation Architectures (p. 19). arXiv:1207.0405.

Teacy, W. T. L., Patel, J., Jennings, N. R., & Luck, M. (2006). TRAVOS: trust and reputation in the context of inaccurate information sources. Autonomous Agents and Multi-Agent Systems, 12(2), 183–198.

Teacy, W. T. L., Luck, M., Rogers, A., & Jennings, N. R. (2012). An efficient and versatile approach to trust and reputation using hierarchical Bayesian modelling. Artificial Intelligence, 193, 149–185.

Vercouter, L., & Muller, G. (2010). LIAR: achieving social control in open and decentralised multi-agent systems. Applied Artificial Intelligence, 24(8), 723–768.

Wang, Y., & Hang, C. (2011). A probabilistic approach for maintaining trust based on evidence. Journal of Artificial Intelligence Research, 40, 221–267.

Yu, B., & Singh, M.P. (2003). Searching Social Networks. In Proceedings of the Second International Joint Conference in Autonomous Agents and Multiagent Systems—AAMAS’03, (p. 65). New York, NY: ACM.

Zacharia, G., & Maes, P. (2000). Trust management through reputation mechanisms. Applied Artificial Intelligence, 14(9), 881–907.

Appendix 1: Trust and reputation models

Many trust and reputation models (TRM) with different characteristics and objectives have been proposed in the literature. In this paper, we study how trust and reputation work in dynamic environments. For this purpose, elements that are common or generalizable are more relevant than the specifics of a particular model. In this section, four models are used to illustrate these elements. This is not intended as a review of existing models, as extensive reviews can be found in [16, 17, 23, 30].

1.1 Marsh’s model

Marsh’s model [21] is one of the first computational trust models in the literature. It is described here to illustrate a trust model that is based solely on the agent’s direct experience, as it makes no use of third-party reputation information. The model considers three aspects of trust:

(1) basic trust: a general disposition to trust that is based on the agent’s previous experiences;

(2) general trust: trust in a specific agent without specifying the situation;

(3) situational trust: trust in an agent in a specific situation.

Situational trust (\(T_{x}(y,\alpha )^{t}\)), given by Eq. 2, is the trust that agent \(x\) has in agent \(y\) in a situation \(\alpha \) at time \(t\). The utility expected by \(x\) is represented by \(U_{x}(\alpha )^{t}\), while \(I_{x}(\alpha )^{t}\) is the importance of the situation, and \(\widehat{T_{x}(y)}^{t}\) is an estimate of general trust based on previous interactions with agent \(y\). To calculate this estimate, the author suggests optimistic, pessimistic or realistic dispositions, considering respectively the maximum, minimum, and mean of previous interactions.
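Eq. 2 is not reproduced in this appendix. As a minimal sketch, assuming the multiplicative form \(T_{x}(y,\alpha )^{t} = U_{x}(\alpha )^{t} \times I_{x}(\alpha )^{t} \times \widehat{T_{x}(y)}^{t}\) described in Marsh’s thesis [21], the three dispositions could be illustrated as follows. The function names and sample values are hypothetical and serve only as an illustration.

```python
from statistics import mean


def estimate_general_trust(past_trust, disposition="realistic"):
    """Estimate general trust in a partner from earlier trust values.

    past_trust: non-empty list of trust values from previous interactions.
    disposition: 'optimistic' (maximum), 'pessimistic' (minimum)
                 or 'realistic' (mean).
    """
    if not past_trust:
        raise ValueError("no past interactions with this partner")
    if disposition == "optimistic":
        return max(past_trust)
    if disposition == "pessimistic":
        return min(past_trust)
    if disposition == "realistic":
        return mean(past_trust)
    raise ValueError(f"unknown disposition: {disposition}")


def situational_trust(utility, importance, general_trust_estimate):
    # Assumed multiplicative form: T = U * I * T_hat.
    return utility * importance * general_trust_estimate


# Hypothetical history of trust values for one partner.
history = [0.2, 0.8, 0.5]
for disposition in ("optimistic", "pessimistic", "realistic"):
    t_hat = estimate_general_trust(history, disposition)
    print(disposition, situational_trust(0.5, 1.0, t_hat))
```

With the same history, the optimistic agent obtains the highest situational trust and the pessimistic agent the lowest, which is the behavioural difference discussed in the text.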

This disposition to trust is what defines the agent’s behavior in the system. Agents that are more optimistic will be more forgiving of failures, with smaller decreases in trust. In the case of pessimistic agents, cooperation failures can lead to a stronger reduction of trust. The realistic agent would sit between these two extremes [21].

After calculating the situational trust in a partner, the agent must decide whether to trust it. Marsh defines a cooperation threshold that determines the level of trust that is sufficient for cooperation. If trust is above this threshold, then cooperation occurs. The cooperation threshold presented in Eq. 3 is the threshold used by \(x\) to trust \(y\) in situation \(\alpha \). This depends on how \(x\) perceives the risk and the competence of \(y\) in that situation.

The optimal threshold varies not only because of individual predispositions, but also due to objective circumstances such as the costs of misplacing trust [21]. Higher risk reduces the chance of cooperation, while higher trust increases it.
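Eq. 3 is likewise not reproduced here. The sketch below assumes the threshold form given in Marsh’s thesis [21], in which perceived risk is divided by the sum of perceived competence and the general trust estimate and then scaled by the importance of the situation. Returning an infinite threshold when that sum is not positive is our own assumption, not part of the model.

```python
def cooperation_threshold(perceived_risk, perceived_competence,
                          general_trust_estimate, importance):
    """Assumed form: risk / (competence + general trust) * importance."""
    denominator = perceived_competence + general_trust_estimate
    if denominator <= 0:
        # Assumption: without positive competence or trust, never cooperate.
        return float("inf")
    return perceived_risk / denominator * importance


def should_cooperate(situational_trust, threshold):
    # Cooperation occurs only if trust is above the threshold.
    return situational_trust > threshold


low_risk = cooperation_threshold(0.2, 0.6, 0.4, 1.0)
high_risk = cooperation_threshold(0.4, 0.6, 0.4, 1.0)
print(should_cooperate(0.3, low_risk), should_cooperate(0.3, high_risk))
```

Doubling the perceived risk doubles the threshold, so the same situational trust value that allowed cooperation in the low-risk case no longer does in the high-risk case.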

Since Marsh’s model relies only on direct experience to establish trust, the absence of past experiences severely limits the reliability of the trust estimates. In this case, cooperation is based only on the perceived competence of the potential partner, which is obtained by considering the competence observed in the past, in similar or distinct situations. If the agent is unknown, the basic disposition to trust and the importance of the situation can be used to make an estimate.

Note that the use of static dispositions, as proposed in the model, is not suitable for dynamic environments. As an example, it is easy to see that a static, optimistic stance is not suitable for a system with increasing failure rates. In this case, the agent will consider the maximum value of past interactions, even though it does not reflect the current performance. In order to avoid losing utility due to changing environments, agents must be able to adjust this disposition dynamically.
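The effect can be made concrete with a small numerical illustration. The outcome values below are invented, and the three-interaction window is an arbitrary choice that is not part of Marsh’s model; it only stands in for an estimate that follows current performance.

```python
from statistics import mean

# Hypothetical outcomes of successive interactions with one provider whose
# performance degrades over time (values in [0, 1], oldest first).
outcomes = [0.9, 0.8, 0.6, 0.4, 0.2, 0.1]

optimistic = max(outcomes)    # static optimistic estimate
realistic = mean(outcomes)    # static realistic estimate
recent = mean(outcomes[-3:])  # mean of the three latest outcomes

print(f"optimistic={optimistic:.2f} realistic={realistic:.2f} recent={recent:.2f}")
# prints: optimistic=0.90 realistic=0.50 recent=0.23
```

The optimistic estimate remains at the best value ever observed, although the latest interactions indicate a much lower level of performance.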

1.2 SPORAS

SPORAS [40] is a reputation model for loosely connected communities, such as electronic marketplaces. In contrast with Marsh’s model, SPORAS is a reputation-only model, where users submit ratings about each other to a centralized system. Only the most recent rating given by a user about another is considered. These evaluations are then combined to calculate the reputation. New users start with zero reputation (the minimum value), so it is not advantageous for a user to switch identities [40].

The user’s reputation value at time \(t = i\) is given by Eq. 4, where \(\theta \) is the number of ratings considered in the evaluation, \(\Phi \) is the damping function, \(\sigma \) is the acceleration factor of \(\Phi \), and \(R_{i}^{other}\) is the reputation of the user giving the rating \(W_{i}\). The damping function adjusts how the reputation is updated, such that users who are very reputable experience smaller changes in reputation [40].
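Eq. 4 is not reproduced in this appendix. The following sketch assumes the recursive form in which the SPORAS update is usually presented [40]: the new reputation is the old one plus a damped correction proportional to the rater’s reputation and to the difference between the rating and the expected rating \(R_{i}/D\). The maximum reputation \(D = 3000\), the rating range, and all sample values are assumptions made for illustration.

```python
import math

D = 3000.0  # assumed maximum reputation value


def damping(reputation, sigma, d=D):
    """Damping function Phi(R) = 1 - 1 / (1 + exp(-(R - D) / sigma)).

    Written in the algebraically equivalent form 1 / (1 + exp((R - D) / sigma)),
    which cannot overflow for reputations between 0 and D.
    """
    return 1.0 / (1.0 + math.exp((reputation - d) / sigma))


def update_reputation(reputation, rating, rater_reputation, theta, sigma, d=D):
    """One assumed update step; rating is expected on a [0, 1] scale."""
    expected_rating = reputation / d
    correction = rater_reputation * (rating - expected_rating)
    return reputation + damping(reputation, sigma, d) * correction / theta


# A newcomer (reputation 0) rated positively by a highly reputable user.
print(update_reputation(0.0, 1.0, 3000.0, theta=10, sigma=300.0))
```

In this form the damping term approaches 0.5 as the reputation approaches \(D\), so highly reputable users see smaller changes, and a rating from a low-reputation user moves the value less than the same rating from a reputable one.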

SPORAS also calculates the reputation deviation as a measurement of the reliability of the reputation value. This deviation, at time \(t = i\), uses a recursive least squares computation, given by Eq. 5, where \(\lambda < 1\) is a forgetting factor of the reputation deviation \(RD^{2}_{i-1}\) and \(T_{O}\) is the effective number of observations.

When used in an open MAS, the centralized nature of SPORAS requires agents entering the environment to determine how reliable the reputation system’s evaluations are and whether they are willing to participate by sending their ratings. Also, the model does not consider the possibility of information acquisition costs. It assumes that ratings are submitted without any form of compensation for the submitters.

1.3 ReGreT

The ReGreT model [29] exemplifies models that take into account both direct experiences and information from third party sources. ReGreT uses knowledge about the social structure of the MAS as a way to overcome the lack of direct experiences and to evaluate the credibility of information sources. The model also provides a degree of reliability for trust, reputation and credibility values.

Direct trust, based on the agent’s direct experiences, is calculated as a weighted mean of the interactions’ results (called the impression of the outcome). It is always associated with a behavioural aspect (\(\varphi \)), which specifies the context of such trust.

Equation 6 shows how to calculate the direct trust of agent \(a\) in agent \(b\). For the sake of conciseness, we simplify the notation used in the model’s equations. Agent \(a\) calculates \(DT\) based on all the previous outcomes involving \(b\) (\(O^{a,b}\)) related to aspect \(\varphi \).

The function \(\rho (t, t_{i})\) is used to weight outcomes as a function of time, such that more relevance is given to recent outcomes. The impression of the outcome, \(Imp(o_{i},\varphi )\), represents the evaluation of an outcome using the utility function associated with \(\varphi \), modelled by the agent’s disposition to trust.
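Eq. 6 is not reproduced here. As a minimal sketch, assuming that direct trust is the sum of the impressions weighted by normalized recency weights \(\rho (t, t_{i})\), and assuming the simple time function \(f(t_{i}, t) = t_{i}/t\) for those weights, the calculation could look as follows. The function names and sample data are hypothetical.

```python
def recency_weights(timestamps, now):
    """Normalized weights rho(t, t_i), assuming f(t_i, t) = t_i / t.

    timestamps: positive times of the past outcomes; now: current time (> 0).
    """
    raw = [t_i / now for t_i in timestamps]
    total = sum(raw)
    return [r / total for r in raw]


def direct_trust(impressions, timestamps, now):
    """Recency-weighted mean of the impressions (assumed to lie in [-1, 1])."""
    weights = recency_weights(timestamps, now)
    return sum(w * imp for w, imp in zip(weights, impressions))


# An old negative outcome (t = 1) and a recent positive one (t = 3).
print(direct_trust([-1.0, 1.0], [1, 3], now=4))
```

An unweighted mean of the two impressions would be 0, whereas the recency weighting pulls the result towards the more recent, positive outcome.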

The reliability of the direct trust value is similar to the one used in SPORAS, as it considers the number of outcomes used to calculate the trust value and their variability. To simplify the following equations, let \(O^{a,b}_{\varphi } = \alpha \) and \(|\alpha |\) be the number of outcomes involving \(a\) and \(b\) regarding the \(\varphi \) aspect. The reliability of the direct trust relationship value (\(DTRL\)) is given by Eq. 7. It depends on the number of outcomes factor (\(No\)) and the outcome deviation (\(Dv\)).

The number of outcomes factor \(No\) defines a level of intimacy based on the number of interactions (\(itm\)) between the agents. After this number is reached, the \(No\) factor is always 1. The idea is that initial interactions are not enough to establish a reliable measure of trust. In Marsh’s model (Sect. 1.1), the number of interactions between agents is not considered as a discriminating factor. Until \(itm\) is reached, a function is used to obtain an increasing level of intimacy, with \(No(\alpha ) = 0\) if \(|\alpha | = 0\) and \(No(\alpha ) = 1\) when \(|\alpha | = itm\). The value of \(itm\) depends mainly on the frequency of transactions between the agents [29]. The outcome deviation, \(Dv \in [0,1]\), is given by Eq. 8, as the difference between the direct trust value and the impression of the outcome, considering the recency factor defined by \(\rho \).
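Eqs. 7 and 8 are not reproduced here. The sketch below rests on three assumptions that should be checked against [29]: the intimacy function is a sine-shaped ramp (one function that satisfies the boundary conditions stated above), the outcome deviation is the recency-weighted mean absolute difference between each impression and the direct trust value, and the two factors are combined as \(No \cdot (1 - Dv)\).

```python
import math


def number_of_outcomes_factor(n_outcomes, itm):
    """Assumed ramp: 0 with no outcomes, 1 once itm outcomes are available."""
    if n_outcomes >= itm:
        return 1.0
    return math.sin(math.pi * n_outcomes / (2 * itm))


def outcome_deviation(impressions, weights, direct_trust):
    """Recency-weighted mean absolute deviation from the direct trust value.

    weights are the normalized recency weights (summing to 1).
    """
    return sum(w * abs(imp - direct_trust)
               for w, imp in zip(weights, impressions))


def direct_trust_reliability(impressions, weights, direct_trust, itm):
    no = number_of_outcomes_factor(len(impressions), itm)
    dv = outcome_deviation(impressions, weights, direct_trust)
    return no * (1.0 - dv)  # assumed combination of the two factors


# Consistent outcomes are reliable; contradictory ones are not.
print(direct_trust_reliability([0.5, 0.5], [0.5, 0.5], 0.5, itm=2))
print(direct_trust_reliability([-1.0, 1.0], [0.5, 0.5], 0.0, itm=2))
```

When the direct trust value is the weighted mean of impressions in \([-1, 1]\), this deviation cannot exceed 1, which agrees with the range \(Dv \in [0,1]\) stated above.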

The model defines three types of reputation: witness, neighbourhood, and system reputation. In this section, we will describe only witness reputation, as it illustrates a common element shared by most models.

Witness reputation is based on information provided by third party agents that have interacted directly with the target agent. This information is subject to bias, omissions, and inaccuracy. Consequently, calculating the reliability of such information is very important. ReGreT assumes that the witness \(w\) provides both its trust (\(Trust_{w\rightarrow b}(\varphi )\)) and how confident it is about this value (\(TrustRL_{w\rightarrow b}(\varphi )\)).

Based on the information gathered from the witnesses, the agent can calculate the actual reputation value. An agent \(a\) can calculate the accuracy of a witness \(w\) regarding an agent \(b\) by comparing the information provided by \(w\) about \(b\) with the actual result of the direct interaction of \(a\) and \(b\). As in SPORAS, only the most recent information provided by \(w\) about \(b\) is stored by \(a\).

Equation 9 is used to obtain the credibility of a witness \(w\). First, \(\sigma _{i}\) defines the relevance of a piece of information \(i\) provided by \(w\). The restriction \(\sigma _{i} > 0.5\) is used to eliminate lower quality information (that neither \(w\) nor \(a\) is certain about). Second, \(\zeta \in [0,1]\) indicates how closely the witness information matches the agent’s direct trust. The lower the difference, the higher the value of \(\zeta \). If both values coincide, \(\zeta = 1\).

The calculation of the reliability measure of the witness credibility (\(infoCrRL\)) is analogous to Eq. 7. If \(infoCrRL(a,w) > 0.5\), the witness credibility, \(witnessCr(a, w_{i}, b)\), is equal to \(infoCr(a,w)\). Otherwise, there is not enough information to make a proper evaluation of the credibility of \(w\). In this case, \(witnessCr\) is set to 0.5, or another value in \([0,1]\) indicating how credulous the agent is.
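The fallback rule described above can be written directly. In this sketch, the parameter name `default_credibility` is our own label for the value that expresses how credulous the agent is.

```python
def witness_credibility(info_cr, info_cr_rl, default_credibility=0.5):
    """Return infoCr when it is reliable enough, else the agent's default.

    info_cr: credibility computed from past information (infoCr).
    info_cr_rl: reliability of that credibility value (infoCrRL).
    default_credibility: value in [0, 1] used when evidence is insufficient.
    """
    if not 0.0 <= default_credibility <= 1.0:
        raise ValueError("default_credibility must lie in [0, 1]")
    return info_cr if info_cr_rl > 0.5 else default_credibility


print(witness_credibility(0.9, 0.8))  # reliable: uses infoCr
print(witness_credibility(0.9, 0.3))  # unreliable: falls back to 0.5
```

A more sceptical agent could pass a lower default, for example `witness_credibility(0.9, 0.3, default_credibility=0.2)`.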

To obtain the witness reputation \(R_{a \overset{W}{\rightarrow } b}(\varphi )\), the witnesses’ opinions are averaged, with each opinion weighted by the credibility \(\omega ^{w_{i}b}\) of the witness that provided it. The same weights are used in the reliability calculation of the reputation value. Thus, witness reputation is obtained using Eq. 10, and the reliability of the reputation value is given by Eq. 11.
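Eqs. 10 and 11 are not reproduced here. The sketch below assumes that each weight is the witness credibility normalized over all witnesses, and that the reliability term of each witness is the minimum of its credibility and its self-reported reliability; the latter is our reading of ReGreT [29] and should be treated as an assumption. The tuple layout and sample values are hypothetical.

```python
def witness_reputation(reports):
    """Combine witness opinions into a reputation value and its reliability.

    reports: list of (witness_cr, trust, trust_rl) tuples, one per witness,
             where trust and trust_rl are the values the witness reported.
    """
    total_cr = sum(cr for cr, _, _ in reports)
    if total_cr == 0:
        raise ValueError("no credible witnesses")
    reputation = 0.0
    reliability = 0.0
    for cr, trust, trust_rl in reports:
        weight = cr / total_cr  # normalized credibility
        reputation += weight * trust
        reliability += weight * min(cr, trust_rl)  # assumed reliability term
    return reputation, reliability


# A credible witness reports +1.0; a less credible one reports -1.0.
print(witness_reputation([(0.75, 1.0, 0.5), (0.25, -1.0, 1.0)]))
# prints: (0.5, 0.4375)
```

The opinion of the more credible witness dominates the reputation value, while the reliability stays moderate because neither witness is both credible and confident.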

To combine and associate trust and reputation values related to various behavioral aspects, the model proposes an ontological dimension using a graph structure. In this graph, a more general aspect, such as good seller, is evaluated through related aspects such as delivers quickly and offers good prices. They are combined by calculating the weighted average of the related aspects, using the weights specified on the edges that connect the aspects.
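A minimal sketch of this aggregation is given below. The dictionary-based graph representation, the aspect names and the weights are hypothetical; only the weighted-average rule is taken from the description above.

```python
def aggregate_aspect(aspect, ontology, leaf_values):
    """Evaluate an aspect as the weighted average of its related aspects.

    ontology: dict mapping an aspect to a list of (related_aspect, weight).
    leaf_values: trust values of the aspects that have no related aspects.
    """
    related = ontology.get(aspect)
    if not related:
        return leaf_values[aspect]
    total_weight = sum(weight for _, weight in related)
    weighted_sum = sum(weight * aggregate_aspect(child, ontology, leaf_values)
                       for child, weight in related)
    return weighted_sum / total_weight


ontology = {
    "good_seller": [("delivers_quickly", 0.5), ("offers_good_prices", 0.5)],
}
leaf_values = {"delivers_quickly": 0.5, "offers_good_prices": -0.25}
print(aggregate_aspect("good_seller", ontology, leaf_values))  # prints: 0.125
```

Because the function is recursive, a related aspect may itself be defined through further aspects, as long as the graph contains no cycles.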

1.4 FIRE

FIRE [12] is a TRM designed for open MAS and, as such, assumes that agents are self-interested and therefore are not completely reliable in sharing information. In addition to direct trust and witness information, the model defines two new components:

- role-based trust, which considers role-based relationships between the agents;

- certified reputation, where the target agent provides third-party references about itself.

The direct trust and credibility models are very similar to ReGreT’s equations, presented in Sect. 1.3. To obtain reputation information from witnesses, FIRE uses the referral system proposed by Yu and Singh [39]. In this system, an agent queries a number of its closest acquaintances, called neighbors, for information about the target agent. The response may include the information sought, if the agent is confident of its answer, or a referral, if the agent is confident in the relevance of the agent being referred [39]. To use this system, the agent must decide how many neighbors to query and the number of referrals to seek.

In this section, we focus on the concept of certified reputation (CR) introduced by FIRE. After a transaction, agent \(a\) receives a certified reference from agent \(b\), with \(b\)’s evaluation of \(a\)’s performance. Later, agent \(a\) can present this and other references to agent \(c\) as proof of its performance as viewed by previous partners. It is important to note that agent \(a\) can choose to omit negative ratings in order to boost its reputation.

The CR component is an interesting alternative to other information sources, especially in settings where obtaining reputation information is costly. Without the need to look for witnesses, the process of CR is faster [12]. Certified reputation requires that after every transaction, agents exchange these references. The model assumes that these references cannot be tampered with. A security mechanism to guarantee this, such as digital signatures, would add the cost of verifying the authenticity and integrity of the references.

To obtain the final value of trust, FIRE considers the weighted average of the values given by each component. The weights consider the reliability of each measure and a component coefficient that is set by the end user according to a particular application. This composite trust also has a reliability value, calculated as the weighted average of the reliability of each component, using the component coefficients as weights.
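The combination described above can be sketched as follows, assuming that the weight of each component is the product of its coefficient and its reliability. The component names, the tuple layout and the sample numbers are hypothetical.

```python
def composite_trust(components):
    """Combine component values into a composite trust and its reliability.

    components: dict mapping a component name to a tuple
                (value, reliability, coefficient).
    Returns (trust, reliability); trust is None if no component is reliable.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    coefficient_total = 0.0
    for value, reliability, coefficient in components.values():
        weight = coefficient * reliability  # assumed weight of the component
        weighted_sum += weight * value
        weight_total += weight
        coefficient_total += coefficient
    if weight_total == 0:
        return None, 0.0
    # Reliability: average of component reliabilities weighted by coefficient.
    return weighted_sum / weight_total, weight_total / coefficient_total


trust, reliability = composite_trust({
    "direct": (1.0, 0.5, 2.0),
    "witness": (0.0, 1.0, 1.0),
})
print(trust, reliability)
```

In this example the direct component has twice the coefficient of the witness component but only half its reliability, so both contribute equally to the composite value.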

In the evaluation methodology of FIRE, some dynamism factors found in open MAS are considered, such as changes in the population (agents entering and leaving the system), in the location of agents (agents moving in a spherical world), and in the performance of the providers. According to the authors of [38], the experiments performed in [14] consider “only minor changes with extremely low probabilities”.

Unlike ReGreT, FIRE explicitly parameterizes elements of the model to allow it to be configured to a particular environment. An empirical evaluation of parameter learning is shown for some of the parameters, including the weight of each component used in reputation calculation [14].

Cite this article

Hoelz, B.W.P., Ralha, C.G. Towards a cognitive meta-model for adaptive trust and reputation in open multi-agent systems. Auton Agent Multi-Agent Syst 29, 1125–1156 (2015). https://doi.org/10.1007/s10458-014-9278-9

DOI: https://doi.org/10.1007/s10458-014-9278-9