Abstract

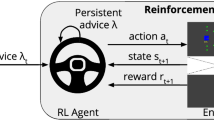

Reinforcement Learning (RL) has been widely used to solve sequential decision-making problems. However, it often learns slowly in complex scenarios. Teacher–student frameworks address this issue by enabling agents to ask for and give advice, so that a student agent can leverage the knowledge of a teacher agent to speed up its own learning. In this paper, we consider the effect of reusing previous advice and propose a novel memory-based teacher–student framework in which student agents memorize and reuse the advice previously given by teacher agents. In particular, we propose two methods to decide whether previous advice should be reused: Q-Change per Step, which reuses the advice if it leads to an increase in Q-values, and Decay Reusing Probability, which reuses the advice with a decaying probability. Experiments on diverse RL tasks (Mario, Predator–Prey and Half Field Offense) confirm that our proposed framework significantly outperforms existing frameworks in which previous advice is not reused.
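The two reuse rules named above can be illustrated with a minimal Python sketch. It is not the paper's implementation: the abstract gives only the one-line description of each rule, so the `AdviceMemory` class, the function names, the per-state probability table, the multiplicative `decay` factor, and the reading of "an increase in Q-values" as "the Q-value of the advised state-action pair rose after the update" are all assumptions made for illustration.

```python
import random
from dataclasses import dataclass, field


@dataclass
class AdviceMemory:
    """Hypothetical store of teacher advice, keyed by state."""
    advice: dict = field(default_factory=dict)      # state -> advised action
    reuse_prob: dict = field(default_factory=dict)  # state -> current reuse probability

    def remember(self, state, action, initial_prob=1.0):
        # Record the teacher's advice and reset its reuse probability.
        self.advice[state] = action
        self.reuse_prob[state] = initial_prob


def reuse_by_q_change(q_before, q_after):
    """Q-Change per Step (sketch): reuse the advice only if following it
    raised the Q-value. Comparing one state-action value before and after
    an update is an assumed interpretation of the rule."""
    return q_after > q_before


def reuse_by_decay(memory, state, decay=0.9, rng=random):
    """Decay Reusing Probability (sketch): reuse remembered advice with a
    probability that shrinks every time the state is consulted.
    Multiplicative decay is an assumption; the abstract does not say how
    the probability decays."""
    if state not in memory.advice:
        return False  # nothing remembered for this state
    p = memory.reuse_prob[state]
    memory.reuse_prob[state] = p * decay
    return rng.random() < p
```

In a training loop under these assumptions, the student would call `remember` whenever the teacher gives advice, and on later visits to the same state would call one of the two predicates to choose between the remembered action and its own policy.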

Acknowledgements

This work was supported by the National Natural Science Foundation of China (62076100), the Fundamental Research Funds for the Central Universities, SCUT (D2210010, D2200150, and D2201300), and the Science and Technology Planning Project of Guangdong Province (2020B0101100002).

About this article

Cite this article

Zhu, C., Cai, Y., Hu, S. et al. Learning by reusing previous advice: a memory-based teacher–student framework. Auton Agent Multi-Agent Syst 37, 14 (2023). https://doi.org/10.1007/s10458-022-09595-1

DOI: https://doi.org/10.1007/s10458-022-09595-1