Abstract

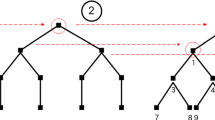

The paper proves the convergence of the (Approximate) Iterated Successive Approximations Algorithm for solving infinite-horizon sequential decision processes that satisfy the monotone contraction assumption. At every stage of this algorithm, the value function at hand is used as a terminal reward to determine an (approximately) optimal policy for the one-period problem. This policy is then iterated a (finite or infinite) number of times, and the resulting return function is used as the starting value function for the next stage of the scheme. This method generalizes standard successive approximations, policy iteration, and Denardo’s generalization of the latter.
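The scheme described in the abstract can be illustrated with a minimal sketch for one special case: a finite-state, finite-action discounted Markov decision process. Everything concrete here is an assumption made for illustration and is not taken from the paper: the function names, the nested-list layout of `P` (transition probabilities) and `r` (one-period rewards), the discount factor `beta`, the exact (rather than approximate) choice of the one-period policy, and a finite iteration count `m`. With `m = 1` the sketch reduces to standard successive approximations, and large `m` approaches policy iteration.

```python
def one_period_returns(P, r, beta, v, s):
    """One-period return of each action in state s, with v as terminal reward."""
    return [
        r[s][a] + beta * sum(p * vt for p, vt in zip(P[s][a], v))
        for a in range(len(r[s]))
    ]


def greedy_policy(P, r, beta, v):
    """Pick, in every state, an action maximizing the one-period return."""
    policy = []
    for s in range(len(v)):
        q = one_period_returns(P, r, beta, v, s)
        policy.append(max(range(len(q)), key=q.__getitem__))
    return policy


def iterate_policy(P, r, beta, v, policy, m):
    """Apply the fixed policy's one-period operator m times, starting from v."""
    for _ in range(m):
        v = [
            r[s][policy[s]]
            + beta * sum(p * vt for p, vt in zip(P[s][policy[s]], v))
            for s in range(len(v))
        ]
    return v


def iterated_successive_approximations(P, r, beta, m, stages, v0=None):
    """Run a fixed number of stages; return the final value function and policy."""
    v = list(v0) if v0 is not None else [0.0] * len(r)
    policy = None
    for _ in range(stages):
        policy = greedy_policy(P, r, beta, v)            # one-period problem
        v = iterate_policy(P, r, beta, v, policy, m)     # iterate the policy m times
    return v, policy


# Hypothetical two-state example (illustrative data only).
# State 0: action 0 stays in state 0 (reward 1); action 1 moves to state 1 (reward 0).
# State 1: a single action that stays in state 1 (reward 3).
P = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0]]]
r = [[1.0, 0.0], [3.0]]
v, policy = iterated_successive_approximations(P, r, beta=0.5, m=3, stages=60)
print(policy, v)
```

In this toy example, moving from state 0 to state 1 is worth 0.5 × 6 = 3, which exceeds the value 2 of staying, so the computed values should approach 3 and 6. The fixed stage count is a simplification; a practical implementation would more likely stop once successive value functions differ by less than a tolerance.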

References

Bertsekas, D. P., & Yu, H. (2010). Distributed asynchronous policy iteration in dynamic programming. In Communication, control, and computing, 2010 annual Allerton conference (pp. 1368–1375).

Bertsekas, D. P., & Yu, H. (2012). Q-learning and enhanced policy iteration in discounted dynamic programming. Mathematics of Operations Research, 37(1), 66–94.

Dembo, R. S., & Haviv, M. (1984). Truncated policy iteration methods. Operations Research Letters, 3(5), 243–246.

Denardo, E. V. (1967). Contraction mappings in the theory underlying dynamic programming. SIAM Review, 9(2), 165–177.

Denardo, E. V., & Rothblum, U. G. (1983). Affine structure and invariant policies for dynamic programming. Mathematics of Operations Research, 8(3), 342–365.

Haviv, M. (1985). Block-successive approximation for a discounted Markov decision model. Stochastic Processes and Their Applications, 19(1), 151–160.

Heyman, D. P., & Sobel, M. J. (1984). Stochastic optimization: Vol. II. Stochastic models in operations research. New York: McGraw-Hill.

Howard, R. A. (1960). Dynamic programming and Markov processes. Cambridge: MIT Press.

Kallenberg, L. (2002). Finite state and action MDPs. In E. A. Feinberg & A. Shwartz (Eds.), Handbook of Markov decision processes: Methods and applications. Norwell: Kluwer Academic.

Porteus, E. L. (1971). Some bounds for discounted sequential decision processes. Management Science, 18(1), 7–11.

Porteus, E. L. (1980). Improved iterative computation of the expected discounted return in Markov and semi-Markov chains. Zeitschrift für Operations-Research, 24, 155–170.

Puterman, M. L. (1994). Markov decision processes: Discrete stochastic dynamic programming. New York: Wiley.

Puterman, M. L., & Brumelle, S. L. (1979). On the convergence of policy iteration in stationary dynamic programming. Mathematics of Operations Research, 4(1), 60–69.

Puterman, M. L., & Shin, M. C. (1978). Modified policy iteration algorithms for discounted Markov decision problems. Management Science, 24(11), 1127–1137.

Puterman, M. L., & Shin, M. C. (1982). Action elimination procedures for modified policy iteration algorithms. Operations Research, 30(2), 301–318.

Rothblum, U. G. (1979). Iterated successive approximation for sequential decision processes. In J. W. B. van Overhagen & H. C. Tijms (Eds.), Stochastic control and optimization, Amsterdam (pp. 30–32).

Sutton, R. S. (1988). Learning to predict by the methods of temporal differences. Machine Learning, 3(1), 9–44.

Van der Wal, J. (1978). Discounted Markov games: generalized policy iteration method. Journal of Optimization Theory and Applications, 25(1), 125–138.

Van Nunen, J. A. E. E. (1976a). A set of successive approximation methods for discounted Markovian decision problems. Zeitschrift für Operations-Research, 20, 203–208.

Van Nunen, J. A. E. E. (1976b). Contracting Markov decision processes. Mathematical Centre Tract No. 71, Amsterdam, Holland.

Watkins, C. J. C. H. (1989). Learning from delayed rewards. Ph.D. Thesis, University of Cambridge, England.

Whitt, W. (1978). Approximations of dynamic programs, I. Mathematics of Operations Research, 3(3), 231–243.

Acknowledgements

The conjecture of convergence of the Iterated Successive Approximations Algorithm in the context of arbitrary sequential decision processes was raised in discussions with Jo van Nunen in 1979. The paper benefited greatly from detailed comments on Rothblum (1979) by Jo van Nunen and Jan van der Wal, who suggested the strengthening of an earlier form of Theorem 1 to its present form. The authors also thank Martin Puterman for a thorough discussion of this work. This work was partially supported by the Daniel Rose Yale University-Technion Initiative for Research on Homeland Security and Counter-Terrorism.

About this article

Cite this article

Canbolat, P.G., Rothblum, U.G. (Approximate) iterated successive approximations algorithm for sequential decision processes. Ann Oper Res 208, 309–320 (2013). https://doi.org/10.1007/s10479-012-1073-x

DOI: https://doi.org/10.1007/s10479-012-1073-x