Abstract

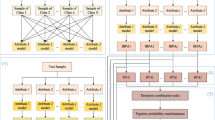

Classifying imprecise data is difficult in general because the classes can partially overlap, and the attributes available for classification are often insufficient to discriminate precisely between objects lying in the overlapping zones. A credal partition (classification) based on belief functions has already been proposed in the literature for data clustering. It allows an object to belong, with different masses of belief, not only to specific classes but also to sets of classes called meta-classes, each of which corresponds to the disjunction of several specific classes. In this paper, we propose a new belief classification rule (BCR) for the credal classification of uncertain and imprecise data. By introducing meta-classes, BCR reduces the misclassification of objects that conventional methods find difficult to classify. Objects that lie too far from the others are treated as outliers. The basic belief assignment (bba) of an object is computed from the Mahalanobis distance between the object and the center of each specific class, and the credal classification of the object is then obtained by combining the bbas associated with the different classes. The approach has a relatively low computational burden. Experiments on both artificial and real data sets are presented at the end of the paper to evaluate BCR and compare its performance with that of other classification methods.

Notes

In this work, the classification method is a supervised learning technique, since it classifies objects using a given training data set. An unsupervised learning technique that performs cluster analysis without training data is called a clustering method.

I.e. the set of the specific classes that the objects are close to.

The center of a specific class is computed as the arithmetic mean of the training data belonging to this class.
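As a minimal sketch of this averaging step, the following Python snippet computes one center per class as the per-attribute arithmetic mean of its training samples. The function name `class_centers` and the toy data are hypothetical and serve only as illustration; they do not come from the paper.

```python
from collections import defaultdict


def class_centers(samples, labels):
    """Return {label: center}, where each center is the per-attribute
    arithmetic mean of the training samples carrying that label."""
    grouped = defaultdict(list)
    for x, y in zip(samples, labels):
        grouped[y].append(x)
    centers = {}
    for y, rows in grouped.items():
        n = len(rows)
        # zip(*rows) iterates over the attributes (columns) of the class samples
        centers[y] = [sum(col) / n for col in zip(*rows)]
    return centers


# Hypothetical toy training set: two attributes, two classes.
X = [[1.0, 2.0], [3.0, 4.0], [10.0, 0.0]]
y = ["w1", "w1", "w2"]
centers = class_centers(X, y)
```

Each center is a plain list with one mean per attribute, so it can be passed directly to whatever distance computation follows.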

This rule is mathematically defined only if the denominator is strictly positive, i.e. the sources are not totally conflicting.
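The condition on the denominator can be illustrated with a short sketch. It assumes that the rule in question is a normalized conjunctive combination of Dempster's type, which the note itself does not name. A bba is represented here as a dictionary mapping `frozenset` subsets of the frame to masses. The function name `dempster_combine` and the numeric tolerance are illustrative choices, not taken from the paper.

```python
def dempster_combine(m1, m2, tol=1e-12):
    """Combine two bbas (dict: frozenset -> mass) with a Dempster-type rule.

    Raises ValueError when the sources are totally conflicting, i.e. when
    the normalization denominator 1 - K is not strictly positive.
    """
    combined = {}
    conflict = 0.0  # K: total mass assigned to empty intersections
    for a, wa in m1.items():
        for b, wb in m2.items():
            inter = a & b
            if inter:
                combined[inter] = combined.get(inter, 0.0) + wa * wb
            else:
                conflict += wa * wb
    denom = 1.0 - conflict
    if denom <= tol:
        raise ValueError("totally conflicting sources: rule undefined")
    return {s: w / denom for s, w in combined.items()}


# Hypothetical two-class frame {w1, w2}.
A, B = frozenset({"w1"}), frozenset({"w2"})
AB = A | B
m = dempster_combine({A: 0.6, AB: 0.4}, {B: 0.5, AB: 0.5})
```

In this example the conflict is 0.6 × 0.5 = 0.3, so the remaining products are divided by 0.7. Combining `{A: 1.0}` with `{B: 1.0}` instead gives a conflict of 1 and raises the error, which is the totally conflicting case excluded by the note.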

The subsets A of Θ such that m(A)>0 are called the focal elements of m(⋅).

The focal elements of each bba are nested.
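The two preceding notes can be made concrete with a small sketch, again assuming a bba stored as a dictionary from `frozenset` subsets of Θ to masses. The helper names `focal_elements` and `is_nested` are hypothetical. The nestedness check sorts the focal elements by size and verifies that each one is contained in the next.

```python
def focal_elements(m):
    """Return the subsets A with m(A) > 0."""
    return [a for a, w in m.items() if w > 0]


def is_nested(m):
    """True if the focal elements form a chain A1 <= A2 <= ... <= An."""
    focal = sorted(focal_elements(m), key=len)
    # Containment of each set in its successor implies the whole chain.
    return all(a <= b for a, b in zip(focal, focal[1:]))


# Hypothetical bba on the frame {w1, w2, w3}; the zero-mass subset is not focal.
nested_bba = {
    frozenset({"w1"}): 0.5,
    frozenset({"w1", "w2"}): 0.3,
    frozenset({"w1", "w2", "w3"}): 0.2,
    frozenset({"w3"}): 0.0,
}
flat_bba = {frozenset({"w1"}): 0.5, frozenset({"w2"}): 0.5}
```

Here `is_nested(nested_bba)` holds because {w1} ⊂ {w1, w2} ⊂ {w1, w2, w3}, while `flat_bba` has two disjoint singleton focal elements and is not nested.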

Acknowledgements

This work has been partially supported by the National Natural Science Foundation of China (Nos. 61075029, 61135001) and the Ph.D. Thesis Innovation Fund of Northwestern Polytechnical University (No. cx201015).

Cite this article

Liu, Zg., Pan, Q. & Dezert, J. A belief classification rule for imprecise data. Appl Intell 40, 214–228 (2014). https://doi.org/10.1007/s10489-013-0453-5

DOI: https://doi.org/10.1007/s10489-013-0453-5