Abstract

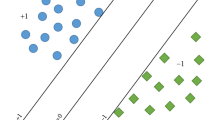

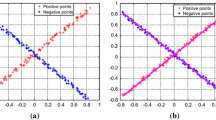

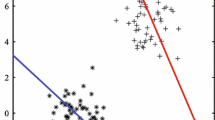

The twin support vector machine (TWSVM) is regarded as a milestone in the development of powerful SVMs. However, TWSVM contains some inconsistencies, and these have led to many reasonable modifications that produce different outputs. To obtain better performance, we propose a novel combined outputs framework that combines these rational outputs. Based on this framework, we present an optimal output model called the linearly combined twin bounded support vector machine (LCTBSVM). LCTBSVM builds on the outputs of several TWSVMs and produces the optimal output by solving an optimization problem. Two heuristic algorithms are suggested for solving this optimization problem. Comprehensive experiments show that LCTBSVM achieves better generalization performance than SVM, PSVM, GEPSVM, and several current TWSVMs, which supports our theoretical analysis.

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Nos. 11201426, 11371365, 11426202 and 11426200), the Zhejiang Provincial Natural Science Foundation of China (Nos. LQ12A01020, LQ13F030010, and LQ14G010004), the Ministry of Education, Humanities and Social Sciences Research Project of China (No. 13YJC910011), and the Zhejiang Provincial University Students’ Science and Technology Innovation Activities Program (Xinmiao Talents Program) (No. 2014R403063).

Cite this article

Shao, YH., Hua, XY., Liu, LM. et al. Combined outputs framework for twin support vector machines. Appl Intell 43, 424–438 (2015). https://doi.org/10.1007/s10489-015-0655-0

DOI: https://doi.org/10.1007/s10489-015-0655-0