Abstract

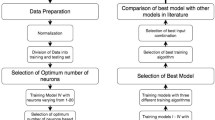

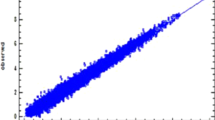

This paper analyzes various earlier approaches to selecting the number of hidden neurons in artificial neural networks and proposes a novel criterion for selecting the number of hidden neurons in improved back propagation networks for wind speed forecasting. Selecting the number of hidden neurons at random causes either overfitting or underfitting in an artificial neural network, and this paper presents a solution to both problems. To select the number of hidden neurons, 151 different criteria are tested by means of statistical errors. Simulations are performed on collected real-time wind data, and the results show that the proposed approach reduces the error to a minimal value and enhances forecasting accuracy. The soundness of improved back propagation networks built with the fixation criterion is substantiated by the convergence theorem. Comparative analyses show that the proposed selection of hidden neuron numbers in improved back propagation networks is highly effective.
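The selection procedure summarized above (compute a hidden-neuron count from each candidate criterion, then keep the criterion with the lowest statistical error) can be sketched as follows. This is a minimal sketch under stated assumptions: the three candidate formulas, the `evaluate` callback, and the use of mean squared error as the error measure are hypothetical placeholders, not the 151 criteria or the error statistics used in the paper.

```python
from typing import Callable, Dict, Optional, Tuple

# Hypothetical candidate criteria mapping the number of input parameters n
# to a (possibly fractional) hidden-neuron count. These are illustrative
# placeholders, NOT the 151 criteria tested in the paper.
CANDIDATES: Dict[str, Callable[[int], float]] = {
    "2n": lambda n: 2 * n,
    "(n + 1) / 2": lambda n: (n + 1) / 2,
    "sqrt(n) + 4": lambda n: n ** 0.5 + 4,
}


def mse(actual, predicted) -> float:
    """Mean squared error, one possible 'statistical error' (an assumption)."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("sequences must be non-empty and of equal length")
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def select_criterion(
    criteria: Dict[str, Callable[[int], float]],
    n_inputs: int,
    evaluate: Callable[[int], float],
) -> Tuple[str, int, float]:
    """Return (name, hidden neurons, error) for the lowest-error criterion.

    `evaluate` is a hypothetical user-supplied callback that trains a network
    with the given hidden-neuron count and returns its validation error.
    Counts are rounded with Python's round() and clamped to at least 1;
    ties keep the criterion listed first.
    """
    best: Optional[Tuple[str, int, float]] = None
    for name, rule in criteria.items():
        hidden = max(1, round(rule(n_inputs)))
        error = evaluate(hidden)
        if best is None or error < best[2]:
            best = (name, hidden, error)
    if best is None:
        raise ValueError("no criteria given")
    return best
```

With a toy error function that is smallest at six hidden neurons, `select_criterion(CANDIDATES, 3, lambda h: (h - 6) ** 2)` returns `("2n", 6, 0)`. In practice `evaluate` would train and validate the network, for example scoring its wind speed forecasts with `mse`.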

References

1. Sivanandam SN, Sumathi S, Deepa SN (2008) Introduction to Neural Networks using Matlab 6.0, 1st edn. Tata McGraw Hill, India

2. Panchal G, Ganatra A, Kosta YP, Panchal D (2011) Behaviour analysis of multilayer perceptrons with multiple hidden neurons and hidden layers. Int J Comput Theory Eng 3(2):332–337

3. Hunter D, Hao Y, Pukish III MS, Kolbusz J, Wilamowski BM (2012) Selection of proper neural network sizes and architectures: a comparative study. IEEE Trans Indust Inf 8(2):228–240

4. Shuxiang X, Chen L (2008) A novel approach for determining the optimal number of hidden layer neurons for FNN’s and its application in data mining. In: 5th International Conference on Information Technology and Application (ICITA), pp 683–686

5. Ke J, Liu X (2008) Empirical analysis of optimal hidden neurons in neural network modeling for stock prediction. Pac Asia Work Comput Intell Indust Appl 2:828–832

6. Karsoliya S (2012) Approximating number of hidden layer neuron in multiple hidden layer BPNN architecture. Int J Eng Trends Technol 31(6):714–717

7. Huang S-C, Huang Y-F (1991) Bounds on the number of hidden neurons in multilayer perceptrons. IEEE Trans Neural Netw 2(1):47–55

8. Arai M (1993) Bounds on the number of hidden units in binary-valued three-layer neural networks. Neural Netw 6:855–860

9. Hagiwara M (1994) A simple and effective method for removal of hidden units and weights. Neurocomputing 6(2):207–218

10. Murata N, Yoshizawa S, Amari S-I (1994) Network Information Criterion determining the number of hidden units for an artificial neural network model. IEEE Trans Neural Netw 5(6):865–872

11. Li J-Y, Chow TWS, Ying-Lin Y (1995) The estimation theory and optimization algorithm for the number of hidden units in the higher-order feed forward neural network. Proc IEEE Int Conf Neural Netw 3:1229–1233

12. Onoda T (1995) Neural network information criterion for the optimal number of hidden units. Proc IEEE Int Conf Neural Netw 1:275–280

13. Tamura S, Tateishi M (1997) Capabilities of a four-layered feed forward neural network: four layer versus three. IEEE Trans Neural Netw 8(2):251–255

14. Fujita O (1998) Statistical estimation of the number of hidden units for feed forward neural network. Neural Netw 11:851–859

15. Keeni K, Nakayama K, Shimodaira H (1999) Estimation of initial weights and hidden units for fast learning of multilayer neural networks for pattern classification. Int Joint Conf Neural Netw 3:1652–1656

16. Huang G-B (2003) Learning capability and storage capacity of two-hidden layer feed forward networks. IEEE Trans Neural Netw 14(2):274–281

17. Yuan HC, Xiong FL, Huai XY (2003) A method for estimating the number of hidden neurons in feed-forward neural networks based on information entropy. Comput Electron Agric 40:57–64

18. Zhang Z, Ma X, Yang Y (2003) Bounds on the number of hidden neurons in three-layer binary neural networks. Neural Netw 16:995–1002

19. Mao KZ, Huang G-B (2005) Neuron selection for RBF neural network classifier based on data structure preserving criterion. IEEE Trans Neural Netw 16(6):1531–1540

20. Teoh EJ, Tan KC, Xiang C (2006) Estimating the number of hidden neurons in a feed forward network using the singular value decomposition. IEEE Trans Neural Netw 17(6):1623–1629

21. Zeng X, Yeung DS (2006) Hidden neuron pruning of multilayer perceptrons using a quantified sensitivity measure. Neurocomputing 69:825–837

22. Choi B, Lee J-H, Kim D-H (2008) Solving local minima problem with large number of hidden nodes on two layered feed forward artificial neural networks. Neurocomputing 71(16–18):3640–3643

23. Han M, Yin J (2008) The hidden neurons selection of the wavelet networks using support vector machines and ridge regression. Neurocomputing 72(1–3):471–479

24. Jiang N, Zhang Z, Ma X, Wang J (2008) The lower bound on the number of hidden neurons in multi-valued multi threshold neural networks. Second Int Symp Intell Inf Technol Appl 1:103–107

25. Trenn S (2008) Multilayer perceptrons: approximation order and necessary number of hidden units. IEEE Trans Neural Netw 19(5):836–844

26. Shibata K, Ikeda Y (2009) Effect of number of hidden neurons on learning in large-scale layered neural networks. In: ICROS-SICE International Joint Conference, pp 5008–5013

27. Doukin CA, Dargham JA, Chekima A (2010) Finding the number of hidden neurons for an MLP neural network using coarse to fine search technique. In: 10th International Conference on Information Sciences Signal Processing and their Applications (ISSPA), pp 606–609

28. Li J, Zhang B, Mao C, Xie GL, Li Y, Jiming L (2010) Wind speed prediction based on the Elman recursion neural networks. In: International Conference on Modelling, pp 728–732

29. Sun J (2012) Learning algorithm and hidden node selection scheme for local coupled feed forward neural network classifier. Neurocomputing 79:158–163

30. Ramadevi R, Sheela Rani B, Prakash V (2012) Role of hidden neurons in an Elman recurrent neural network in classification of cavitation signals. Int J Comput Appl 37(7):9–13

31. Gnana Sheela K, Deepa SN (2013) Review on methods to fix number of hidden neurons in neural networks. Math Probl Eng 2013:1–11

32. Qian Guo, Yong H (2013) Forecasting the rural per capita living consumption based on Matlab BP neural network. Int J Bus Soc Sci 4(17):131–137

33. Vora K, Yagnik S (2014) A new technique to solve local minima problem with large number of hidden nodes on feed forward neural network. Int J Eng Dev Res 2(2):1978–1981

34. Urolagin S, Prema KV, Subba Reddy NV (2012) Generalization capability of artificial neural network incorporated with pruning method. Lect Notes Comput Sci 7135:171–178

35. Morris AJ, Zhang J (1998) A sequential learning approach for single hidden layer neural network. Neural Netw 11(1):65–80

36. Dass HK (2009) Advanced Engineering Mathematics (first edition 1988). S. Chand & Company Ltd, India

Acknowledgments

The authors thank the National Oceanic and Atmospheric Administration, United States, for providing the real-time data used in this research work.

Appendix

Consider the different criteria as functions of n, the number of input parameters. All considered criteria satisfy the convergence theorem. If the limit of a sequence is finite, the sequence is called a convergent sequence. If the limit of the sequence does not tend to a finite number, the sequence is called divergent [36].
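In symbols, the two definitions can be written as follows. The example sequences are standard textbook illustrations chosen here for clarity; they are not sequences taken from the paper.

```latex
\lim_{n \to \infty} u_n = \ell,\; \ell \in \mathbb{R}
  \;\Rightarrow\; \{u_n\} \text{ is convergent},
\qquad
\lim_{n \to \infty} u_n = \pm\infty
  \;\Rightarrow\; \{u_n\} \text{ is divergent}.

\text{Example: } u_n = \frac{2n + 1}{n} = 2 + \frac{1}{n} \to 2 \;(\text{convergent}),
\qquad
v_n = n^2 \to \infty \;(\text{divergent}).
```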

The characteristics of the convergence theorem are as follows.

1. A convergent sequence has a finite limit.

2. Every convergent sequence is bounded.

3. Every bounded sequence has a finite limit point.

4. A convergent sequence must have a finite limit and be bounded.

5. An oscillatory sequence does not tend to a unique limit.
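Properties 1, 2, and 5 can be illustrated numerically. The sketch below samples the first terms of a sequence and applies a heuristic test: the sample must stay bounded and its tail must be nearly constant. The thresholds `count`, `tail`, `tol`, and `bound` are arbitrary assumptions, and a finite sample can only suggest convergence, never prove it.

```python
from typing import Callable, List


def first_terms(u: Callable[[int], float], count: int, start: int = 1) -> List[float]:
    """Return the terms u(start), ..., u(start + count - 1)."""
    return [u(n) for n in range(start, start + count)]


def looks_convergent(
    u: Callable[[int], float],
    count: int = 10_000,
    tail: int = 100,
    tol: float = 1e-3,
    bound: float = 1e6,
) -> bool:
    """Heuristic only, not a proof.

    Rejects the sequence if any sampled term exceeds `bound` in absolute
    value (a convergent sequence is bounded, property 2), then accepts it
    only if the last `tail` sampled terms lie within `tol` of one another,
    which suggests a unique finite limit (properties 1 and 5).
    """
    terms = first_terms(u, count)
    if max(abs(t) for t in terms) > bound:
        return False
    last = terms[-tail:]
    return max(last) - min(last) < tol
```

For example, `looks_convergent(lambda n: 2 + 1 / n)` accepts a convergent sequence, `lambda n: n ** 2` is rejected as unbounded (divergent), and `lambda n: (-1) ** n` is rejected because it is bounded but oscillatory.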

A network is called stable when its state no longer changes, regardless of further operation. The most important property of the neural network model is that it always converges to a stable state. Convergence plays a major role in real-time optimization problems, because it prevents the network from getting stuck in local minima. The convergence of infinite sequences has been established in the convergence theorem because of the discontinuities in the model. Real-time neural optimization solvers are designed using these convergence properties.
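The idea of a stable state, a state that no further update changes, can be illustrated with a small Hopfield-style network. This is a swapped-in textbook example, not the improved back propagation network studied in the paper: with symmetric weights and a zero diagonal, asynchronous sign updates are known to reach a state that a full sweep leaves unchanged. The stored pattern and the starting state below are arbitrary assumptions.

```python
from typing import List

Matrix = List[List[float]]
State = List[int]


def hebbian_weights(pattern: State) -> Matrix:
    """Symmetric weights with zero diagonal that store one +/-1 pattern."""
    n = len(pattern)
    return [[0.0 if i == j else pattern[i] * pattern[j] for j in range(n)]
            for i in range(n)]


def run_until_stable(w: Matrix, state: State, max_sweeps: int = 100) -> State:
    """Update units one at a time (asynchronously) until a full sweep
    changes nothing, i.e. the network has reached a stable state."""
    s = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(s)):
            field = sum(w[i][j] * s[j] for j in range(len(s)))
            new = 1 if field >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            return s  # no unit changed during the sweep: stable state
    raise RuntimeError("no stable state within max_sweeps")
```

Starting from `[1, 1, 1, -1]`, one bit away from the stored pattern `[1, -1, 1, -1]`, the network settles on the stored pattern after one corrective sweep, and a further sweep confirms that nothing changes.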

The convergence of the considered sequences is discussed as follows. Take the sequence

Apply the convergence theorem.

Hence, the sequence has a finite limit and is bounded, so the considered sequence is convergent.
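As a hedged illustration of how the convergence theorem applies to a criterion of rational form, consider the hypothetical sequence u_n = (a n^2 + b)/(n^2 - c) with constants a, b, c > 0, defined for n ≥ n_0, where n_0 is the smallest integer with n_0^2 > c. The form and the constants are assumptions for illustration, not necessarily the sequence analyzed here.

```latex
u_n = \frac{a n^2 + b}{n^2 - c} = \frac{a + b/n^2}{1 - c/n^2},
\qquad
\lim_{n \to \infty} u_n = \frac{a + 0}{1 - 0} = a \quad (\text{a finite limit}),

u_n - a = \frac{(a n^2 + b) - a (n^2 - c)}{n^2 - c} = \frac{b + a c}{n^2 - c} > 0
\quad (n \ge n_0),

\text{so } a < u_n \le u_{n_0} \text{ for all } n \ge n_0 .
```

Because (b + ac)/(n^2 - c) shrinks as n grows, the terms decrease toward a. The sequence is therefore bounded and has the finite limit a, so by the definition of convergence it is convergent.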

Take the sequence

Apply the convergence theorem.

Hence, the sequence has a finite limit and is bounded, so the considered sequence is convergent.
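Claims of the form "finite limit and bounded" can be spot-checked with exact rational arithmetic. The sketch below uses a hypothetical sequence u_n = (2n^2 + 1)/(n^2 - 3), defined for n ≥ 2, whose limit is 2; the formula and constants are assumptions chosen for illustration, not values from the paper. It confirms on a finite range that the terms decrease and stay above the limit, which supports but does not replace an analytic proof.

```python
from fractions import Fraction
from typing import List


def u(n: int) -> Fraction:
    """Hypothetical sequence u_n = (2n^2 + 1) / (n^2 - 3), defined for n >= 2."""
    if n * n <= 3:
        raise ValueError("u_n is only defined here for n >= 2")
    return Fraction(2 * n * n + 1, n * n - 3)


def check_bounded_decreasing(limit: Fraction, upto: int) -> bool:
    """Exact check on n = 2..upto that limit < u_{n+1} < u_n <= u_2."""
    terms: List[Fraction] = [u(n) for n in range(2, upto + 1)]
    decreasing = all(x > y for x, y in zip(terms, terms[1:]))
    above_limit = all(t > limit for t in terms)
    return decreasing and above_limit and max(terms) == u(2)
```

Here `u(2)` is 9 and `u(3)` is 19/6, and `check_bounded_decreasing(Fraction(2), 500)` holds, so on this range the sequence is bounded between 2 and 9 while approaching its limit 2.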

Cite this article

Madhiarasan, M., Deepa, S.N. A novel criterion to select hidden neuron numbers in improved back propagation networks for wind speed forecasting. Appl Intell 44, 878–893 (2016). https://doi.org/10.1007/s10489-015-0737-z

DOI: https://doi.org/10.1007/s10489-015-0737-z