Abstract

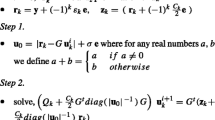

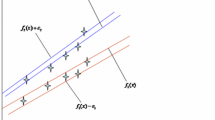

Xu and Wang (Appl Intell 41(1):92–101, 2014) recently proposed K-nearest neighbor based weighted twin support vector regression (KNNWTSVR), which improves prediction accuracy by using the local information of the samples. This paper proposes a new variant, K-nearest neighbor based weighted twin support vector regression in primal as a pair of unconstrained minimization problems (KNNUPWTSVR), which also reduces the effect of outliers. The proposed method is solved in the primal space, where an approximate solution is obtained. It is well known that an approximate solution of an optimization problem in the primal is generally superior to an approximate solution of its dual. The objective functions of KNNUPWTSVR are continuous and piecewise quadratic, and they are minimized by computing the zeros of their gradients. Because the objective functions contain the non-smooth ‘plus’ function, two approaches are suggested for solving the problems: (i) replacing the ‘plus’ function with a smooth approximation function; and (ii) a generalized derivative approach. To assess the effectiveness of the proposed method, KNNUPWTSVR is compared with support vector regression (SVR), twin SVR (TSVR) and ε-twin SVR (ε-TSVR) on a number of synthetic and real-world datasets. The proposed method gives generalization performance similar to or better than that of SVR, TSVR and ε-TSVR and requires less computational time, which indicates its effectiveness and applicability.
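The two ways of handling the non-smooth ‘plus’ function can be illustrated with a minimal sketch. The abstract does not say which smooth approximation is used, so the function below is an assumption: it is the form p(x, α) = x + (1/α) log(1 + exp(−αx)) that is common in the smooth SVM literature (for example Lee and Mangasarian 2001). The names `plus`, `smooth_plus` and `generalized_derivative_plus`, and the default `alpha`, are hypothetical and chosen only for illustration.

```python
import math


def plus(x):
    """The non-smooth 'plus' function: max(x, 0)."""
    return max(x, 0.0)


def smooth_plus(x, alpha=5.0):
    """Assumed smooth approximation p(x, alpha) = x + log(1 + exp(-alpha*x)) / alpha.

    Evaluated in the equivalent, numerically stable form
    max(x, 0) + log1p(exp(-alpha * |x|)) / alpha.
    """
    return max(x, 0.0) + math.log1p(math.exp(-alpha * abs(x))) / alpha


def generalized_derivative_plus(x):
    """One element of the generalized derivative of plus(x): a step function.

    At x == 0 any value in [0, 1] is admissible; 0 is picked here by assumption.
    """
    return 1.0 if x > 0 else 0.0


if __name__ == "__main__":
    for x in (-2.0, 0.0, 2.0):
        print(x, plus(x), smooth_plus(x), generalized_derivative_plus(x))
```

The gap between `smooth_plus` and `plus` is largest at x = 0, where it equals log(2)/α, so a larger `alpha` gives a tighter but more sharply curved approximation.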

References

Achlioptas D, McSherry F, Schölkopf B (2002) Sampling techniques for kernel methods. In: Dietterich TG, Becker S, Ghahramani Z (eds) Advances in neural information processing systems 14. MIT Press, Cambridge, MA

Balasundaram S, Gupta D (2014) Training Lagrangian twin support vector regression via unconstrained convex minimization. Knowl-Based Syst 59:85–96

Balasundaram S, Gupta D, Kapil (2014) Lagrangian support vector regression via unconstrained convex minimization. Neural Netw 51:67–79

Balasundaram S, Gupta D (2014) On implicit Lagrangian twin support vector regression by Newton method. Int J Comput Intell Syst 7(1):50–62

Balasundaram S, Tanveer M (2013) On Lagrangian twin support vector regression. Neural Comput & Applic 22(1):257–267

Bao Y-K, Liu Z-T, Guo L, Wang W (2005) Forecasting stock composite index by fuzzy support vector machines regression. In: Proceedings of international conference on machine learning and cybernetics, vol 6, pp 3535–3540

Box GEP, Jenkins GM (1976) Time series analysis: forecasting and control. Holden-Day, San Francisco

Chapelle O (2007) Training a support vector machine in primal. Neural Comput 19(5):1155–1178

Chen X, Yang J, Liang J, Ye Q (2012) Smooth twin support vector regression. Neural Comput & Applic 21:505–513

Cortes C, Vapnik V (1995) Support-vector networks. Mach Learn 20(3):273–297

Cristianini N, Shawe-Taylor J (2000) An introduction to support vector machines and other kernel based learning methods. Cambridge University Press, Cambridge

DELVE (2005) Data for evaluating learning in valid experiments. http://www.cs.toronto.edu/~delve/data

Demšar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res 7:1–30

Fung G, Mangasarian OL (2003) Finite Newton method for Lagrangian support vector machine. Neurocomputing 55:39–55

Garcia S, Herrera F (2008) An extension on "Statistical comparisons of classifiers over multiple data sets" for all pairwise comparisons. J Mach Learn Res 9:2677–2694

Gretton A, Doucet A, Herbrich R, Rayner PJW, Schölkopf B (2001) Support vector regression for black-box system identification. In: Proceedings of the 11th IEEE workshop on statistical signal processing

Han X, Clemmensen L (2014) On weighted support vector regression. Qual Reliab Eng Int 30(3):891–903

Hiriart-Urruty J-B, Strodiot JJ, Nguyen H (1984) Generalized Hessian matrix and second order optimality conditions for problems with C^{1,1} data. Appl Math Optim 11:43–56

Jayadeva, Khemchandani R, Chandra S (2007) Twin support vector machines for pattern classification. IEEE Trans Pattern Anal Mach Intell 29(5):905–910

Kumar MA, Gopal M (2009) Least squares twin support vector machines for pattern classification. Expert Syst Appl 36:7535–7543

Kumar MA, Gopal M (2008) Application of smoothing technique on twin support vector machines. Pattern Recogn Lett 29:1842–1848

Lee YJ, Hsieh W-F, Huang C-M (2005) ε - SSVR: a smooth support vector machine for ε - insensitive regression. IEEE Trans Knowl Data Eng 17(5):678–685

Lee YJ, Mangasarian OL (2001) SSVM: a smooth support vector machine for classification. Comput Optim Appl 20(1):5–22

Malhotra R, Malhotra DK (2003) Evaluating consumer loans using neural networks. Omega 31:83–96

Mangasarian OL (2002) A finite Newton method for classification. Optimization Methods and Software 17:913–929

Mangasarian OL, Wild EW (2006) Multisurface proximal support vector classification via generalized eigenvalues. IEEE Trans Pattern Anal Mach Intell 28(1):69–74

Mukherjee S, Osuna E, Girosi F (1997) Nonlinear prediction of chaotic time series using support vector machines. In: NNSP’97: neural networks for signal processing VII: proceedings of IEEE signal processing society workshop Amelia Island, FL USA, pp 511–520

Müller KR, Smola AJ, Rätsch G, Schölkopf B, Kohlmorgen J (1999) Using support vector machines for time series prediction. In: Schölkopf B, Burges CJC, Smola AJ (eds) Advances in kernel methods: support vector learning. MIT Press, Cambridge, MA, pp 243–254

Murphy PM, Aha DW (1992) UCI repository of machine learning databases. University of California, Irvine. http://www.ics.uci.edu/~mlearn

Osuna E, Freund R, Girosi F (1997) Training support vector machines: an application to face detection. In: Proceedings of computer vision and pattern recognition, pp 130–136

Peng X (2010a) TSVR: an efficient twin support vector machine for regression. Neural Netw 23(3):365–372

Peng X (2010b) Primal twin support vector regression and its sparse approximation. Neurocomputing 73:2846–2858

Platt JC (1998) Sequential minimal optimization: a fast algorithm for training support vector machines. In: Schölkopf B, Burges CJC, Smola AJ (eds) Advances in kernel methods: support vector learning. MIT Press, Cambridge, MA

Shao Y, Chen W, Zhang J, Wang Z, Deng N (2014) An efficient weighted Lagrangian twin support vector machine for imbalanced data classification. Pattern Recogn 47(6):3158–3167

Shao Y, Chen W, Wang Z, Deng N (2015) Weighted linear loss twin support vector machine for large-scale classification. Knowl-Based Syst 73:276–288

Shao Y, Zhang C, Wang X, Deng N (2011) Improvements on twin support vector machines. IEEE Trans Neural Netw 22(3):962–968

Shao Y, Zhang C, Yang Z, Jing L, Deng N (2013) An ε - twin support vector machine for regression. Neural Comput & Applic 23(1):175–185

Sjöberg J, Zhang Q, Ljung L, Benveniste A, Delyon B, Glorennec P, Hjalmarsson H, Juditsky A (1995) Nonlinear black-box modeling in system identification: a unified overview. Automatica 31:1691–1724

Souza LGM, Barreto GA (2006) Nonlinear system identification using local ARX models based on the self-organizing map. Learning and Nonlinear Models-Revista da Sociedade Brasileira de Redes Neurais (SBRN) 4(2):112–123

Suykens JAK, Vandewalle J (1999) Least squares support vector machine classifiers. Neural Process Lett 9(3):293–300

Tanveer M, Shubham K, Aldhaifallah M, Ho SS (2016) An efficient regularized K-nearest neighbor based weighted twin support vector regression. Knowl-Based Syst 94:70–87

Tong Q, Zheng H, Wang X (2005) Gene prediction algorithm based on the statistical combination and the classification in terms of gene characteristics. In: International conference on neural networks and brain, vol 2, pp 673–677

Xu Y, Wang L (2012) A weighted twin support vector regression. Knowl-Based Syst 33:92–101

Xu Y, Wang L (2014) K-nearest neighbor-based weighted twin support vector regression. Appl Intell 41(1):92–101

Xu Y, Yu J, Zhang Y (2014) KNN-based weighted rough ν–twin support vector machine. Knowl-Based Syst 71:303–313

Ye Q, Zhao C, Gao S, Zhang H (2012) Weighted twin support vector machines with local information and its application. Neural Netw 35:31–39

Ye Y, Bai L, Hua X, Shao Y, Wang Z, Deng N (2016) Weighted Lagrange ε-twin support vector regression. Neurocomputing 197:53–68

Zhong P, Xu Y, Zhao Y (2012) Training twin support vector regression via linear programming. Neural Comput & Applic 21:399–407

Vapnik VN (2000) The nature of statistical learning theory, 2nd edn. Springer, New York

Acknowledgments

The author is extremely thankful to the anonymous reviewers for their comments and suggestions, which have improved the quality of the paper.

Cite this article

Gupta, D. Training primal K-nearest neighbor based weighted twin support vector regression via unconstrained convex minimization. Appl Intell 47, 962–991 (2017). https://doi.org/10.1007/s10489-017-0913-4

DOI: https://doi.org/10.1007/s10489-017-0913-4