Abstract

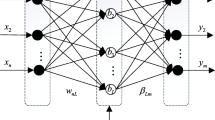

In this paper, a novel dual weights optimization Incremental Learning (w2IL) algorithm is developed to solve the Time Series Forecasting (TSF) problem. The w2IL algorithm uses the Incremental Extreme Learning Machine (IELM) as its base learner and implements its incremental learning scheme through a newly designed Adaptively Weighted Predictors Aggregation (AdaWPA) subalgorithm, which aggregates the existing base predictors with those generated from the new data. w2IL contains two major innovations: the Adaptive Samples Weights Initialization (AdaSWI) and AdaWPA subalgorithms. The AdaSWI subalgorithm initializes the sample weights adaptively based on the prediction errors of the generated base models, and then fine-tunes them based on the distances from the samples to the cluster centers of the base models' training datasets, yielding a more appropriate initialization of the sample weights. The AdaWPA subalgorithm adaptively adjusts the base predictors' weights according to each prediction instance and integrates the base predictors using these adjusted weights. In addition, the AdaWPA subalgorithm uses the Fuzzy C-Means (FCM) clustering algorithm for distance measurement, which further reduces the computational complexity and storage requirements of the algorithm. Experimental results on six real-world benchmark TSF datasets show that the w2IL algorithm achieves significantly better prediction performance than the existing algorithms it is compared with.
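The instance-dependent aggregation idea can be illustrated with a minimal sketch. It is not the AdaWPA subalgorithm itself: the abstract does not give the weighting formula, so the inverse-distance rule below is an assumption, as are the names `instance_weights` and `aggregate`. The cluster centers are assumed to have been computed beforehand (for example by FCM) for each base predictor's training data, so only the centers need to be stored rather than the full training sets.

```python
import numpy as np

def instance_weights(x, centers_per_model, eps=1e-12):
    """Weight each base predictor by how close x lies to its nearest cluster center.

    Assumed rule (not from the paper): weight is proportional to 1 / distance.
    """
    x = np.asarray(x, dtype=float)
    # Distance from x to the nearest stored center of each model's training data.
    nearest = np.array([
        np.linalg.norm(np.asarray(c, dtype=float) - x, axis=1).min()
        for c in centers_per_model
    ])
    inv = 1.0 / (nearest + eps)   # closer training data gives a larger weight
    return inv / inv.sum()        # normalize so the weights sum to 1

def aggregate(x, centers_per_model, predictors):
    """Combine the base predictors' outputs using the instance-specific weights."""
    x = np.asarray(x, dtype=float)
    w = instance_weights(x, centers_per_model)
    preds = np.array([p(x) for p in predictors], dtype=float)
    return float(w @ preds)

# Hypothetical toy setup: two base predictors with one stored center each.
centers = [np.array([[0.0, 0.0]]), np.array([[10.0, 10.0]])]
predictors = [lambda x: 1.0, lambda x: 5.0]

near_first = aggregate([0.0, 0.0], centers, predictors)   # dominated by predictor 1
midpoint = aggregate([5.0, 5.0], centers, predictors)     # equal weights
```

In this toy setup, a query that coincides with the first predictor's center is answered almost entirely by that predictor, while a query equidistant from both centers receives the plain average of the two outputs.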

Acknowledgements

This work is supported by the National Natural Science Foundation of China under Grant no. 61473150.

Ethics declarations

Conflict of interests

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Li, J., Dai, Q. A new dual weights optimization incremental learning algorithm for time series forecasting. Appl Intell 49, 3668–3693 (2019). https://doi.org/10.1007/s10489-019-01471-y

DOI: https://doi.org/10.1007/s10489-019-01471-y