Abstract

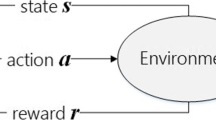

As an important machine learning method, reinforcement learning plays an increasingly important role in practical applications. In recent years, many researchers have studied parallel reinforcement learning algorithms and achieved remarkable results in many applications. However, because the search scope of each agent is limited, existing parallel reinforcement learning methods often fail to reduce the number of episodes the algorithm needs to run. In addition, traditional model-free reinforcement learning algorithms do not necessarily converge to the optimal solution, which can waste resources in practical applications. To address these problems, we apply the particle swarm optimization (PSO) algorithm to asynchronous reinforcement learning to search for the optimal solution. First, we propose a new asynchronous variant of the PSO algorithm. We then apply it to asynchronous reinforcement learning and propose a new asynchronous reinforcement learning algorithm, the Sarsa algorithm based on backward Q-learning and asynchronous particle swarm optimization (APSO-BQSA). Finally, we verify the effectiveness of the proposed asynchronous PSO and APSO-BQSA algorithms through experiments.
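The abstract does not state the update rules of the proposed asynchronous PSO. For orientation only, here is a minimal sketch of standard inertia-weight PSO with one common form of asynchronous update: the swarm's global best is refreshed as soon as any single particle improves, rather than once after the whole swarm has been evaluated. This is not the authors' variant. The function name `async_pso`, the toy objective `sphere`, and every parameter value (inertia weight `w`, acceleration coefficients `c1` and `c2`, swarm size, bounds, seed) are illustrative assumptions.

```python
import random
from typing import Callable, List, Tuple


def sphere(x: List[float]) -> float:
    """Hypothetical toy objective: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def async_pso(
    f: Callable[[List[float]], float],
    dim: int,
    n_particles: int = 20,
    iters: int = 200,
    w: float = 0.7,    # inertia weight (assumed value)
    c1: float = 1.5,   # cognitive coefficient (assumed value)
    c2: float = 1.5,   # social coefficient (assumed value)
    bound: float = 5.0,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Minimise f with PSO, updating the global best asynchronously."""
    rng = random.Random(seed)
    pos = [[rng.uniform(-bound, bound) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]            # personal best positions
    pbest_val = [f(p) for p in pos]        # personal best objective values
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(iters):
        for i in range(n_particles):
            # Standard inertia-weight velocity and position update.
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            val = f(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                # Asynchronous step: publish the improvement immediately, so
                # particles later in this same sweep already follow the new best.
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


if __name__ == "__main__":
    best, val = async_pso(sphere, dim=2)
    print(best, val)
```

In a synchronous PSO, the global best would be recomputed only after the inner loop over all particles finishes; moving that update inside the loop is what makes this sketch asynchronous. How APSO-BQSA couples PSO with backward Q-learning and Sarsa is not described in the abstract, and this toy optimizer does not attempt to model it.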

Acknowledgments

This work is supported by the Fundamental Research Funds for the Central Universities (No. 2017XKZD03).

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Ding, S., Du, W., Zhao, X. et al. A new asynchronous reinforcement learning algorithm based on improved parallel PSO. Appl Intell 49, 4211–4222 (2019). https://doi.org/10.1007/s10489-019-01487-4

DOI: https://doi.org/10.1007/s10489-019-01487-4