Abstract

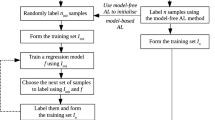

Active learning methods have been introduced to reduce the cost of acquiring labeled data. For regression, several expected model change maximization strategies have been developed. They select the samples that are likely to change the current model the most. However, some of the selected samples may be outliers, which can degrade estimation performance. To address this limitation, this study proposes an active learning framework based on a robust expected model change criterion that applies to both linear and nonlinear regression problems. By incorporating the local outlier probability, the framework aims to avoid outliers while selecting the samples that would change the current model the most. Experiments on synthetic and benchmark data compare the proposed method with existing methods, and the results show that the proposed active learning algorithm outperforms the compared methods.
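The abstract describes the approach only at a high level, so the following is a minimal sketch under stated assumptions, not the authors' implementation. It shows two ingredients for the linear case. The first is the expected model change score for linear regression as we understand the EMCM formulation: the unknown label is approximated by a small bootstrap ensemble, and a candidate x is scored by the average norm of the approximate gradient, (1/K) Σ_k ‖(f(x) − f_k(x)) x‖. The second is Kriegel et al.'s local outlier probability (LoOP). How the paper actually combines the two is not stated here; discounting the change score by (1 − LoOP) is a hypothetical choice for illustration, as are the function names and the defaults k = 10, λ = 3 and four bootstrap models.

```python
import math
import numpy as np


def loop_scores(X, k=10, lam=3.0):
    """Local Outlier Probability (Kriegel et al.) for every row of X."""
    X = np.asarray(X, dtype=float)
    d = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1))
    np.fill_diagonal(d, np.inf)                    # a point is not its own neighbour
    nn = np.argsort(d, axis=1)[:, :k]              # k nearest neighbours (context set)
    knn_d = np.take_along_axis(d, nn, axis=1)
    sigma = np.sqrt((knn_d ** 2).mean(axis=1))     # standard distance to the context set
    plof = sigma / sigma[nn].mean(axis=1) - 1.0    # lambda cancels in this ratio
    nplof = max(lam * math.sqrt((plof ** 2).mean()), 1e-12)
    z = plof / (nplof * math.sqrt(2.0))
    return np.maximum(0.0, np.array([math.erf(v) for v in z]))


def _design(X):
    # Append a constant column so the intercept is part of theta
    return np.hstack([X, np.ones((X.shape[0], 1))])


def fit_linear(X, y, ridge=1e-6):
    # Least squares with a tiny ridge term so bootstrap fits stay well-posed
    A = _design(X)
    return np.linalg.solve(A.T @ A + ridge * np.eye(A.shape[1]), A.T @ y)


def emcm_scores(X_lab, y_lab, X_pool, n_boot=4, seed=0):
    """Mean over bootstrap models of ||(f(x) - f_k(x)) x|| for each pool point."""
    rng = np.random.default_rng(seed)
    A_pool = _design(X_pool)
    f = A_pool @ fit_linear(X_lab, y_lab)          # current model's predictions
    x_norm = np.linalg.norm(A_pool, axis=1)
    scores = np.zeros(len(X_pool))
    for _ in range(n_boot):
        idx = rng.integers(0, len(X_lab), size=len(X_lab))
        f_k = A_pool @ fit_linear(X_lab[idx], y_lab[idx])
        scores += np.abs(f - f_k) * x_norm         # norm of the approximate gradient
    return scores / n_boot


def robust_emcm_select(X_lab, y_lab, X_pool, k=10, lam=3.0, n_boot=4, seed=0):
    # Hypothetical combination: discount the expected change by (1 - LoOP)
    change = emcm_scores(X_lab, y_lab, X_pool, n_boot, seed)
    p_out = loop_scores(np.vstack([X_lab, X_pool]), k, lam)[len(X_lab):]
    return int(np.argmax(change * (1.0 - p_out)))


# Usage: a pool of inliers plus one far-away point at index 180
rng = np.random.default_rng(1)
X_lab = rng.uniform(-1, 1, size=(20, 2))
y_lab = X_lab @ np.array([1.0, -2.0]) + 0.1 * rng.standard_normal(20)
X_pool = np.vstack([rng.uniform(-1, 1, size=(180, 2)), [[15.0, 15.0]]])
print(int(np.argmax(emcm_scores(X_lab, y_lab, X_pool))))  # plain EMCM choice
print(robust_emcm_select(X_lab, y_lab, X_pool))           # LoOP-discounted choice
```

In this toy setting, plain EMCM tends to pick the distant point, because both |f(x) − f_k(x)| and ‖x‖ grow with distance from the labeled data. The LoOP discount drives that point's score toward zero, so an inlier is chosen instead. LoOP is computed here on the labeled and pool inputs together; restricting it to the pool would be an equally plausible variant.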



Acknowledgements

This work was supported by Brain Korea PLUS, the Basic Science Research Program through the National Research Foundation of Korea funded by the Ministry of Science, ICT, and Future Planning (NRF-2016R1A2B1008994) and the Ministry of Trade, Industry & Energy under the Industrial Technology Innovation Program (R1623371).


Cite this article

Park, S.H., Kim, S.B. Robust expected model change for active learning in regression. Appl Intell 50, 296–313 (2020). https://doi.org/10.1007/s10489-019-01519-z


DOI: https://doi.org/10.1007/s10489-019-01519-z