Abstract

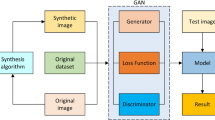

Underwater image analysis is crucial for many applications, such as seafloor surveys, biological and environmental monitoring, underwater vehicle navigation, and the inspection and maintenance of underwater infrastructure. However, because of light absorption and scattering, images acquired underwater are typically blurry and color-distorted. Most existing image enhancement algorithms focus on only a few features of the imaging environment, so their results depend on the characteristics of the original images. In this study, a local cycle-consistent generative adversarial network (Local-CycleGAN) is proposed to enhance images acquired in complex deep-water environments. The proposed network combines a local discriminator with a global discriminator. Additionally, a quality-monitor loss is adopted to evaluate the quality of the generated images. Experimental results show that the network is robust and generalizes to a range of image enhancement tasks in different types of complex deep-water environments with varied turbidity.
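As a rough illustration of how a global discriminator, a local discriminator, and a cycle-consistency term might be combined into one generator objective, the sketch below uses plain NumPy with callables standing in for the networks. It is not the authors' implementation: the least-squares adversarial form, the random-crop strategy for the local discriminator, the patch size, and the weights `lam_cyc` and `lam_local` are all assumptions, and the quality-monitor loss is omitted because the abstract does not define it.

```python
import numpy as np

def random_patch(img, size, rng):
    """Crop a random size x size patch (hypothetical local-discriminator input)."""
    h, w = img.shape[:2]
    top = rng.integers(0, h - size + 1)
    left = rng.integers(0, w - size + 1)
    return img[top:top + size, left:left + size]

def lsgan_generator_loss(score):
    """Least-squares adversarial loss for the generator (assumed form)."""
    return float(np.mean((np.asarray(score) - 1.0) ** 2))

def cycle_loss(x, x_rec):
    """L1 cycle-consistency loss between an input and its reconstruction."""
    return float(np.mean(np.abs(x - x_rec)))

def generator_objective(x, g, f, d_global, d_local,
                        patch=32, lam_cyc=10.0, lam_local=1.0, rng=None):
    """Combine global and local adversarial terms with cycle consistency.

    g: degraded -> enhanced mapping, f: enhanced -> degraded mapping.
    d_global scores the whole image; d_local scores one random patch.
    All weights and the patch size are illustrative assumptions.
    """
    if rng is None:
        rng = np.random.default_rng(0)
    y_fake = g(x)                      # enhanced image
    x_rec = f(y_fake)                  # mapped back to the input domain
    adv_global = lsgan_generator_loss(d_global(y_fake))
    adv_local = lsgan_generator_loss(d_local(random_patch(y_fake, patch, rng)))
    return adv_global + lam_local * adv_local + lam_cyc * cycle_loss(x, x_rec)
```

In a real training loop the callables would be neural networks and the objective would be differentiated with an autodiff framework; the sketch only shows how the terms fit together.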

Ethics declarations

Declarations

This research was funded by the National Key R&D Program of China (Grant No. 2018YFC0810500), the Fundamental Research Funds for the Central Universities (Grant No. RF-TP-20-009A3), and the Scientific and Technological Innovation Foundation of Shunde Graduate School, USTB (Grant No. BK19BE003).

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Zong, X., Chen, Z. & Wang, D. Local-CycleGAN: a general end-to-end network for visual enhancement in complex deep-water environment. Appl Intell 51, 1947–1958 (2021). https://doi.org/10.1007/s10489-020-01931-w
