Abstract

In machine learning, feature selection is an important dimensionality reduction technique that aims to choose the features with the best discriminative ability, thereby mitigating the curse of dimensionality in subsequent processing. As a supervised feature selection method, Fisher score (FS) provides a feature evaluation criterion and has been widely used. However, FS ignores associations between features because it assesses every feature independently, and it loses local information because it fully connects all within-class samples. To address these issues, this paper proposes a novel feature evaluation criterion based on FS, named iteratively local Fisher score (ILFS). Compared with FS, the new criterion pays more attention to the local structure of the data by using K nearest neighbours instead of all samples when calculating the within-class and between-class scatters. To take the relationships between features into account, we calculate local Fisher scores of feature subsets instead of scores of single features, and iteratively select the current optimal feature in the manner of sequential forward selection (SFS). Experimental results on UCI and TEP data sets show that the improved algorithm performs well on classification tasks compared with several other state-of-the-art methods.
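The two ingredients described above, local scatters computed from K nearest neighbours and SFS-style selection driven by the score of the whole candidate subset, can be illustrated with the following minimal Python sketch. It is not the authors' implementation, and several choices are our own assumptions: features are standardized, neighbours are found once in the full feature space, the local within-class (between-class) scatter of a feature sums the squared differences between each sample and its K nearest same-class (other-class) neighbours, and the score of a subset is the ratio of the summed scatters. The function names and the `eps` guard are hypothetical; the classical per-feature Fisher score is included for contrast.

```python
import numpy as np


def fisher_score(X, y):
    """Classical Fisher score: each feature is scored on its own, using the
    class means and the spread of all samples in each class."""
    mu = X.mean(axis=0)
    num = np.zeros(X.shape[1])
    den = np.zeros(X.shape[1])
    for c in np.unique(y):
        Xc = X[y == c]
        num += len(Xc) * (Xc.mean(axis=0) - mu) ** 2  # between-class spread
        den += len(Xc) * Xc.var(axis=0)               # within-class spread
    return num / np.maximum(den, 1e-12)


def local_scatters(X, y, k=5):
    """Per-feature local scatters (assumed form): for every sample, only its
    k nearest same-class and k nearest other-class neighbours contribute.
    Neighbours are found once, in the full feature space."""
    n, m = X.shape
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)  # pairwise squared distances
    s_b = np.zeros(m)
    s_w = np.zeros(m)
    for i in range(n):
        same = np.flatnonzero((y == y[i]) & (np.arange(n) != i))
        diff = np.flatnonzero(y != y[i])
        nn_w = same[np.argsort(d2[i, same])[:k]]  # k nearest within-class neighbours
        nn_b = diff[np.argsort(d2[i, diff])[:k]]  # k nearest between-class neighbours
        s_w += ((X[nn_w] - X[i]) ** 2).sum(axis=0)
        s_b += ((X[nn_b] - X[i]) ** 2).sum(axis=0)
    return s_b, s_w


def iterative_local_fisher(X, y, n_select, k=5, eps=1e-12):
    """SFS-style selection: each step adds the feature that maximizes the
    local score of the enlarged subset, sum(s_b) / sum(s_w) over the subset."""
    X = (X - X.mean(axis=0)) / np.maximum(X.std(axis=0), eps)  # standardize features
    s_b, s_w = local_scatters(X, y, k)
    B, W = 0.0, eps  # empty subset; eps only guards against division by zero
    remaining = list(range(X.shape[1]))
    selected, scores = [], []
    for _ in range(n_select):
        cand = [(B + s_b[j]) / (W + s_w[j]) for j in remaining]
        best = remaining[int(np.argmax(cand))]
        B, W = B + s_b[best], W + s_w[best]
        selected.append(best)
        scores.append(B / W)
        remaining.remove(best)
    return selected, scores
```

For example, `selected, scores = iterative_local_fisher(X, y, n_select=10, k=5)` returns feature indices in the order they were added, together with the subset score after each addition. Because the scatters are precomputed per feature and simply summed, each greedy step is cheap; a variant that recomputes neighbours in every candidate subspace is possible but would be much slower.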

References

Abualigah LM, Khader AT, Hanandeh ES (2018) Hybrid clustering analysis using improved krill herd algorithm. Appl Intell 48(11):4047–4071

Abualigah LMQ (2019) Feature selection and enhanced krill herd algorithm for text document clustering. Springer, Berlin

Appice A, Ceci M, Rawles S, Flach P (2004) Redundant feature elimination for multi-class problems. In: Proceedings of the twenty-first international conference on machine learning, p 5

Bishop CM et al (1995) Neural networks for pattern recognition. Oxford University Press, Oxford

Bugata P, Drotár P (2019) Weighted nearest neighbors feature selection. Knowl-Based Syst 163:749–761

Chandrashekar G, Sahin F (2014) A survey on feature selection methods. Comput Elec Eng 40(1):16–28

Chen L, Man H, Nefian AV (2005) Face recognition based on multi-class mapping of fisher scores. Pattern Recogn 38(6):799–811

Dixit M, Li Y, Vasconcelos N (2019) Semantic fisher scores for task transfer: using objects to classify scenes. IEEE Trans Pattern Anal Mach Intell

Downs JJ, Vogel EF (1993) A plant-wide industrial process control problem. Comput Chem Eng 17(3):245–255

Dua D, Graff C (2017) UCI machine learning repository. http://archive.ics.uci.edu/ml

Gu Q, Li Z, Han J (2012) Generalized fisher score for feature selection. arXiv:1202.3725

Guyon I, Elisseeff A (2003) An introduction to variable and feature selection. J Mach Learn Res 3:1157–1182

He B, Shah S, Maung C, Arnold G, Wan G, Schweitzer H (2019) Heuristic search algorithm for dimensionality reduction optimally combining feature selection and feature extraction. In: Proceedings of the AAAI conference on artificial intelligence, vol 33, pp 2280–2287

He X, Cai D, Niyogi P (2006) Laplacian score for feature selection. In: Advances in neural information processing systems, pp 507–514

He X, Niyogi P (2004) Locality preserving projections. In: Advances in neural information processing systems, pp 153–160

Huang SH (2015) Supervised feature selection: a tutorial. Artif Intell Res 4(2):22–37

Johnson BA, Iizuka K (2016) Integrating openstreetmap crowdsourced data and landsat time-series imagery for rapid land use/land cover (lulc) mapping: Case study of the laguna de bay area of the philippines. Appl Geogr 67:140–149

Keogh EJ, Mueen A (2010) Curse of dimensionality

Lai H, Tang Y, Luo H, Pan Y (2011) Greedy feature selection for ranking. In: Proceedings of the 2011 15th international conference on computer supported cooperative work in design (CSCWD). IEEE, pp 42–46

Liu H, Setiono R (1995) Chi2: feature selection and discretization of numeric attributes. In: Proceedings of 7th IEEE international conference on tools with artificial intelligence. IEEE, pp 388–391

Liu K, Yang X, Fujita H, Liu D, Yang X, Qian Y (2019) An efficient selector for multi-granularity attribute reduction. Inf Sci 505:457–472

Liu K, Yang X, Yu H, Mi J, Wang P, Chen X (2019) Rough set based semi-supervised feature selection via ensemble selector. Knowl-Based Syst 165:282–296

Moran M, Gordon G (2019) Curious feature selection. Inf Sci 485:42–54

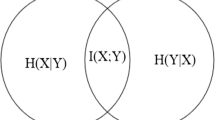

Peng H, Long F, Ding C (2005) Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans Pattern Anal Mach Intell 27(8):1226–1238

Qi X, Liu X, Boumaraf S (2019) A new feature selection method based on monarch butterfly optimization and fisher criterion. In: 2019 international joint conference on neural networks (IJCNN). IEEE, pp 1–6

Robnik-Šikonja M, Kononenko I (2003) Theoretical and empirical analysis of relieff and rrelieff. Mach Learn 53(1-2):23–69

Sakar CO, Polat SO, Katircioglu M, Kastro Y (2019) Real-time prediction of online shoppers’ purchasing intention using multilayer perceptron and lstm recurrent neural networks. Neural Comput Appl 31 (10):6893–6908

Sheikhpour R, Sarram MA, Gharaghani S, Chahooki MAZ (2017) A survey on semi-supervised feature selection methods. Pattern Recogn 64:141–158

Solorio-Fernández S, Carrasco-Ochoa JA, Martínez-trinidad JF (2020) A review of unsupervised feature selection methods. Artif Intell Rev 53(2):907–948

Stańczyk U (2015) Feature evaluation by filter, wrapper, and embedded approaches. In: Feature selection for data and pattern recognition. Springer, pp 29–44

Ververidis D, Kotropoulos C (2005) Sequential forward feature selection with low computational cost. In: 2005 13Th european signal processing conference. IEEE, pp 1–4

Xue Y, Zhang L, Wang B, Zhang Z, Li F (2018) Nonlinear feature selection using gaussian kernel svm-rfe for fault diagnosis. Appl Intell 48(10):3306–3331

Yu L, Liu H (2004) Efficient feature selection via analysis of relevance and redundancy. J Mach Learn Res 5:1205–1224

Zhang R, Zhang Z (2020) Feature selection with symmetrical complementary coefficient for quantifying feature interactions. Appl Intell 50(1):101–118

Zhou H, Zhang Y, Zhang Y, Liu H (2019) Feature selection based on conditional mutual information: minimum conditional relevance and minimum conditional redundancy. Appl Intell 49(3):883–896

Acknowledgments

This work was supported in part by the Natural Science Foundation of the Jiangsu Higher Education Institutions of China under Grant No. 19KJA550002, by the Six Talent Peak Project of Jiangsu Province of China under Grant No. XYDXX-054, by the Priority Academic Program Development of Jiangsu Higher Education Institutions, and by the Collaborative Innovation Center of Novel Software Technology and Industrialization.

Appendix A: Proof of Theorem 1

Proof

We prove Theorem 1 by mathematical induction. First, we generate the series {J(G(t))}, t = 1, ⋯, m, using rule (5).
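The series that the induction works on can be generated and checked numerically. The sketch below is hypothetical in two respects: it assumes that rule (5) adds, at step t, the feature k that maximizes \((s_{b}^{G(t-1)}+s_{b}^{\{k\}})/(s_{w}^{G(t-1)}+s_{w}^{\{k\}})\), i.e. that subset scatters are sums of per-feature scatters, and it omits δ by drawing strictly positive within-class scatters. It also reads Theorem 1, based on the proof that follows, as the claim \(J(G(t+1)) \leq J(G(t))\); the theorem's statement itself is not reproduced in this section.

```python
import random


def greedy_series(s_b, s_w):
    """Generate J(G(1)), ..., J(G(m)) by greedy forward selection, assuming
    J(G) = (sum of s_b over G) / (sum of s_w over G) (hypothetical form)."""
    remaining = set(range(len(s_b)))
    B = W = 0.0  # scatters of the empty subset G(0)
    series = []
    while remaining:
        # assumed rule (5): add the feature that maximizes the enlarged subset's score
        best = max(remaining, key=lambda k: (B + s_b[k]) / (W + s_w[k]))
        B, W = B + s_b[best], W + s_w[best]
        remaining.remove(best)
        series.append(B / W)
    return series


def is_non_increasing(series, tol=1e-9):
    """True if every term is at most the previous one (up to rounding)."""
    return all(nxt <= cur + tol for cur, nxt in zip(series, series[1:]))


random.seed(0)
trials = [greedy_series([random.uniform(0, 10) for _ in range(8)],
                        [random.uniform(0.1, 10) for _ in range(8)])
          for _ in range(1000)]
print(all(is_non_increasing(s) for s in trials))  # expected: True
```

Random draws are only a sanity check under the stated assumptions; the induction argument is what establishes the property.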

For t = 1, G(0) = ∅ and \(\overline {G(0)}=\{1,\cdots ,m\}\). The highest score can be obtained by

where \(s_{b}^{G(0)}=0\) and \(s_{w}^{G(0)}=\delta \). In other words, J(G(1)) is obtained by maximizing the local Fisher score of a single feature. Then \(G(1)=\{k_{1}^{*}\}\) according to (5), and \(\overline {G(1)}=\{1,\cdots ,m\}-G(1)\), where \(k_{1}^{*}\) is the optimal feature selected in the first iteration. From now on, \(k_{t}^{*}\) denotes the optimal feature selected in the t-th iteration.
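One reading of the first iteration that is consistent with \(s_{b}^{G(0)}=0\) and \(s_{w}^{G(0)}=\delta\) is given below. It assumes that rule (5) forms the scatters of \(G(t-1)\cup\{k\}\) by adding the scatters of feature k to those of \(G(t-1)\); this additive form is our assumption, and the display is not the paper's own equation.

```latex
J(G(1)) = \max_{k \in \overline{G(0)}}
  \frac{s_{b}^{G(0)} + s_{b}^{\{k\}}}{s_{w}^{G(0)} + s_{w}^{\{k\}}}
  = \max_{k \in \{1,\cdots,m\}} \frac{s_{b}^{\{k\}}}{\delta + s_{w}^{\{k\}}},
\qquad
k_{1}^{*} = \arg\max_{k \in \{1,\cdots,m\}} \frac{s_{b}^{\{k\}}}{\delta + s_{w}^{\{k\}}}.
```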

For t = 2, J(G(2)) can be represented by

Because \(k_{1}^{*}\) maximizes the single-feature score in the first iteration, the score of the feature subset \(\{k_{2}^{*}\}\) is less than or equal to that of the feature subset \(\{k_{1}^{*}\}\). Namely

By comparing (12) and (13), we have

Note that \(s_{w}^{G(1)}>0\) and \(s^{\{k_{2}^{*}\}}_{w}\geq 0\). Substituting (14) into (15), we have

which means that Theorem 1 holds in the first two iterations.
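The comparison of the one-feature and two-feature subsets relies on an elementary property of ratios (the mediant inequality). It is stated here for reference, not as a reconstruction of equations (12) to (16): for \(a \geq 0\), \(b > 0\), \(c \geq 0\) and \(d \geq 0\),

```latex
\frac{a+c}{b+d} - \frac{a}{b} = \frac{bc - ad}{b\,(b+d)},
\qquad\text{hence}\qquad
bc \leq ad \;\Longrightarrow\; \frac{a+c}{b+d} \leq \frac{a}{b}.
```

If, as we assume, the scatters of G(2) are \(s_{b}^{G(1)}+s_{b}^{\{k_{2}^{*}\}}\) and \(s_{w}^{G(1)}+s_{w}^{\{k_{2}^{*}\}}\), then taking \(a=s_{b}^{G(1)}\), \(b=s_{w}^{G(1)}>0\), \(c=s_{b}^{\{k_{2}^{*}\}}\) and \(d=s_{w}^{\{k_{2}^{*}\}}\geq 0\) matches the positivity conditions noted above, and the cross-multiplied condition \(bc \leq ad\) says that the ratio \(c/d\) of \(k_{2}^{*}\) on its own (when \(d>0\)) must not exceed \(a/b\).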

Now, we assume that Theorem 1 holds in the (t − 1)-th and t-th iterations. Namely,

For any t, J(G(t)) has the form

The difference between J(G(t)) and J(G(t + 1)) can be written as

Because the denominator of (19) is positive, it suffices to consider its numerator, which can be further written as

Because (17) holds, we have the following inequalities

where \(k,k^{*}_{t}\in \overline {G(t-1)}\) and \(k\neq k^{*}_{t}\). Deriving each of the three inequalities separately, we obtain

and

Because (24) holds for every \(k\in \overline {G(t-1)}\), and \(k_{t+1}^{*} \in \overline {G(t)} \subset \overline {G(t-1)}\) in (20), we can rewrite (20) as

Substituting (25) into (19), we have

By mathematical induction, this completes the proof of Theorem 1. □
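Under the same additive assumption, and reading Theorem 1 as the claim \(J(G(t+1)) \leq J(G(t))\), the inductive step can be condensed as follows; this is an independent sketch, not a reconstruction of (17) to (26). Write \(a=s_{b}^{G(t-1)}\), \(c=s_{w}^{G(t-1)}>0\), \(r=J(G(t))=s_{b}^{G(t)}/s_{w}^{G(t)}\), and \(u=s_{b}^{\{k\}}\), \(v=s_{w}^{\{k\}}\) with \(k=k_{t+1}^{*}\). Since \(k_{t}^{*}\) was optimal over \(\overline{G(t-1)}\), which contains \(k_{t+1}^{*}\), we have \((a+u)/(c+v)\leq r\), and the induction hypothesis gives \(r \leq J(G(t-1)) = a/c\), so \(a - rc \geq 0\). Therefore

```latex
\begin{aligned}
&u \leq r(c+v) - a = rv - (a - rc) \leq rv, \\
&J(G(t+1)) = \frac{s_{b}^{G(t)} + u}{s_{w}^{G(t)} + v}
  \leq \frac{r\,s_{w}^{G(t)} + r\,v}{s_{w}^{G(t)} + v} = r = J(G(t)),
\end{aligned}
```

where the second line uses \(s_{b}^{G(t)} = r\,s_{w}^{G(t)}\).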

About this article

Cite this article

Gan, M., Zhang, L. Iteratively local fisher score for feature selection. Appl Intell 51, 6167–6181 (2021). https://doi.org/10.1007/s10489-020-02141-0
