Abstract

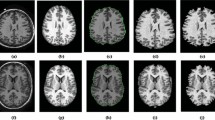

Sparsity is crucial for deep neural networks: it can improve their learning ability, especially on high-dimensional data with small sample sizes. Commonly used regularization terms for enforcing sparsity in deep neural networks are based on the L1-norm or L2-norm; however, these are not the most reasonable substitutes for the L0-norm. In this paper, based on the fact that minimizing a log-sum function is an effective approximation to minimizing the L0-norm, a sparse penalty term on the connection weights that uses the log-sum function is introduced. By embedding the corresponding iterative re-weighted L1 minimization algorithm in k-step contrastive divergence, the connections of deep belief networks can be updated in a sparse, self-adaptive way. Experiments on two kinds of biomedical data, brain functional magnetic resonance imaging (fMRI) data and single nucleotide polymorphism (SNP) data, both typical small-sample datasets with a large number of variables, show that the proposed deep belief networks with self-adaptive sparsity learn layer-wise sparse features effectively. The results also show better performance, in both identification accuracy and sparsity, than several typical learning machines.
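As a rough illustration of the idea summarized above, the following NumPy sketch trains a single Bernoulli restricted Boltzmann machine with k-step contrastive divergence and applies a re-weighted L1 shrinkage step derived from the log-sum penalty `sum(log(1 + |w| / eps))`. It is a minimal sketch under stated assumptions, not the authors' algorithm: the abstract does not give the exact update rule, so the proximal (soft-thresholding) form, the function names, and the hyperparameters `lam`, `eps`, `lr`, and `epochs` are all hypothetical choices made for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def log_sum_penalty(W, eps):
    """Log-sum surrogate for the L0-norm: sum of log(1 + |w| / eps)."""
    return np.sum(np.log1p(np.abs(W) / eps))

def soft_threshold(W, thresh):
    """Proximal operator of a weighted L1 penalty; thresh may be per-entry."""
    return np.sign(W) * np.maximum(np.abs(W) - thresh, 0.0)

def cd_k_gradient(W, b, c, v0, k, rng):
    """Estimate the log-likelihood gradient of a Bernoulli RBM with CD-k."""
    ph0 = sigmoid(v0 @ W + c)              # P(h = 1 | data)
    vk, phk = v0, ph0
    for _ in range(k):                     # k steps of Gibbs sampling
        h = (rng.random(phk.shape) < phk).astype(float)
        pv = sigmoid(h @ W.T + b)
        vk = (rng.random(pv.shape) < pv).astype(float)
        phk = sigmoid(vk @ W + c)
    n = v0.shape[0]
    dW = (v0.T @ ph0 - vk.T @ phk) / n     # positive minus negative phase
    db = (v0 - vk).mean(axis=0)
    dc = (ph0 - phk).mean(axis=0)
    return dW, db, dc

def train_sparse_rbm(V, n_hidden, epochs=50, k=1, lr=0.1,
                     lam=1e-3, eps=0.1, seed=0):
    """Assumed training loop: CD-k ascent plus re-weighted L1 shrinkage."""
    rng = np.random.default_rng(seed)
    n_visible = V.shape[1]
    W = 0.01 * rng.standard_normal((n_visible, n_hidden))
    b = np.zeros(n_visible)
    c = np.zeros(n_hidden)
    for _ in range(epochs):
        dW, db, dc = cd_k_gradient(W, b, c, V, k, rng)
        # Re-weighting: the L1 coefficient 1 / (|w| + eps) is the magnitude
        # of the log-sum gradient, so small weights are penalized harder.
        reweight = 1.0 / (np.abs(W) + eps)
        # Gradient ascent on the CD estimate, then weighted soft-thresholding.
        W = soft_threshold(W + lr * dW, lr * lam * reweight)
        b += lr * db
        c += lr * dc
    return W, b, c
```

Calling `train_sparse_rbm(V, n_hidden=4)` on a binary data matrix `V` of shape `(n_samples, n_visible)` returns the weight matrix and both bias vectors. Because the per-weight threshold grows as a weight shrinks, connections that the data do not support tend to be driven to exactly zero, which is one way to read the "self-adaptive" sparsity described above.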




This research was supported by the National Natural Science Foundation of China (No. 12090020 and No. 12090021), the National Key Research and Development Program of China (No. 2020AAA0106302), and the Science and Technology Innovation Plan of Xi’an (No. 2019421315KYPT004JC006), and was partly supported by the HPC Platform, Xi’an Jiaotong University.


Cite this article

Qiao, C., Yang, L., Shi, Y. et al. Deep belief networks with self-adaptive sparsity. Appl Intell 52, 237–253 (2022). https://doi.org/10.1007/s10489-021-02361-y


DOI: https://doi.org/10.1007/s10489-021-02361-y