Abstract

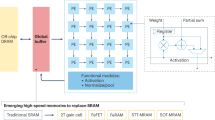

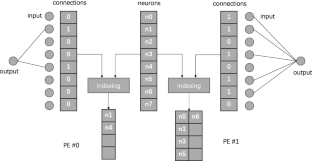

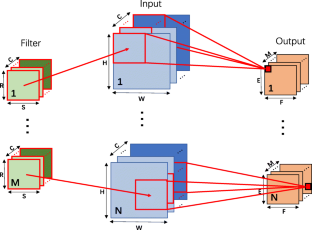

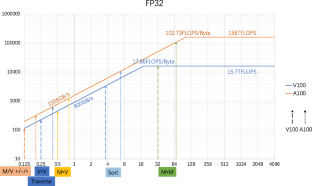

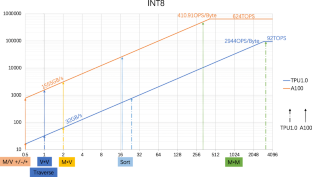

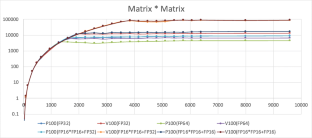

Artificial intelligence (AI) algorithms have shown performance advantages in a wide range of application domains. However, their growing demand for hardware resources poses challenges for AI accelerator design. The academic community has produced a large body of research to address this issue, and evaluating the performance of AI algorithms on accelerators is an active topic. Such work, however, usually requires an intricate experimental setup and may involve repetitive tests. Rather than repeating experiments from prior research, in this paper we present a comprehensive evaluation of AI operators, rather than AI algorithms, in an easy-to-operate manner. We first explore the common AI operators across a variety of AI algorithms with an in-depth analysis and identify six representative operator categories. We then analyze their performance using the roofline model. To verify our analysis, we conduct simple evaluation experiments in which several AI operators are evaluated on two NVIDIA GPUs. The evaluation results show that AI operators benefit from low precision, a large on-chip cache, high-bandwidth off-chip memory, and sparsity processing. Based on these observations, we propose three optimization opportunities for AI accelerator design: multiple-precision support, an efficient memory system, and sparsity processing.
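As a rough illustration of the roofline analysis mentioned above, the following minimal sketch bounds an operator's attainable throughput by the smaller of peak compute and arithmetic intensity times memory bandwidth. The hardware figures (`PEAK_GFLOPS`, `BANDWIDTH_GBS`), the helper names, and the idealized traffic count for a square matrix multiplication are assumptions made for illustration, not values or methods taken from the paper. Peak compute is also held constant across precisions, which real devices generally do not do.

```python
def attainable_gflops(peak_gflops, bandwidth_gbs, flops, bytes_moved):
    """Roofline bound: min(peak compute, arithmetic intensity * bandwidth)."""
    intensity = flops / bytes_moved  # FLOPs per byte of off-chip traffic
    return min(peak_gflops, intensity * bandwidth_gbs)


def gemm_cost(n, bytes_per_element):
    """Idealized cost of an n x n x n matrix multiply.

    Assumes each of the three matrices crosses the off-chip
    memory interface exactly once (hypothetical best case).
    """
    flops = 2 * n ** 3  # one multiply and one add per inner-product term
    bytes_moved = 3 * n * n * bytes_per_element
    return flops, bytes_moved


# Hypothetical accelerator, not a measured device.
PEAK_GFLOPS = 10_000.0
BANDWIDTH_GBS = 500.0

for label, bytes_per_element in (("FP32", 4), ("FP16", 2)):
    flops, bytes_moved = gemm_cost(64, bytes_per_element)
    bound = attainable_gflops(PEAK_GFLOPS, BANDWIDTH_GBS, flops, bytes_moved)
    regime = "compute-bound" if bound >= PEAK_GFLOPS else "memory-bound"
    print(f"{label}: {flops / bytes_moved:.2f} FLOP/byte, "
          f"{bound:.0f} GFLOP/s ({regime})")
```

Under these assumed numbers, halving the element size doubles the arithmetic intensity of the same operator, which is one way to read the abstract's observation that operators benefit from low precision and higher memory bandwidth.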

Acknowledgements

We thank the anonymous reviewers for their feedback. This work was supported by the National Major Science and Technology Projects of China under Grant 2018ZX01028-102.

Cite this article

Chen, Z., Zheng, F., Yu, Q. et al. Evaluating performance of AI operators using roofline model. Appl Intell 52, 7054–7069 (2022). https://doi.org/10.1007/s10489-021-02794-5

DOI: https://doi.org/10.1007/s10489-021-02794-5