Abstract

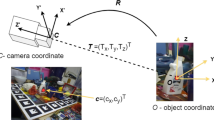

Recently, pose estimation algorithms have been widely discussed. Although it has been investigated in many prior works, pose estimation for heavily stacked workpieces, such as those piled in bins, remains a challenge for industrial applications. Stacked-workpiece scenes are also much harder than general scenes for robots carrying out routine tasks such as object detection and picking. This paper addresses pose estimation for stacked symmetrical workpieces in the presence of partial occlusion and cluttered backgrounds. To tackle these problems, we propose a novel pose estimation method for industrial stacking scenes based on RGB images. Specifically, because industrial workpieces are commonly symmetrical, we first present a new standardized spatial representation that can auto-encode the 2D-3D correspondences of symmetrical workpieces. Next, we introduce a novel GAN-based deep neural network that reconstructs this representation for stacked workpieces. The pose of each target workpiece is then estimated from the reconstructed representation with an improved RANSAC-PnP algorithm. Finally, comprehensive experiments demonstrate that the proposed method outperforms state-of-the-art methods, especially in complex stacking scenes.
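The abstract does not define the symmetry-aware representation, so the following is only an illustrative sketch of one common way to make 2D-3D correspondence targets unambiguous, not the paper's method. It assumes (1) a workpiece with discrete n-fold rotational symmetry about its model z axis and (2) NOCS-style normalized coordinates in [0, 1]^3 derived from the model's bounding box. Both assumptions are hypothetical. Poses that differ by a symmetry rotation produce identical images, so the per-pixel target is computed under a canonical member of the equivalent-pose set (the one closest to the identity). As a result, every equivalent pose yields the same label. All function names below are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Mat3 = List[List[float]]
Vec3 = Tuple[float, ...]


def rot_z(theta: float) -> Mat3:
    """Rotation by theta radians about the z axis (the assumed symmetry axis)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a: Mat3, b: Mat3) -> Mat3:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(a: Mat3) -> Mat3:
    # For a rotation matrix, the transpose is its inverse.
    return [[a[j][i] for j in range(3)] for i in range(3)]


def apply(m: Mat3, p: Sequence[float]) -> Vec3:
    return tuple(sum(m[i][k] * p[k] for k in range(3)) for i in range(3))


def canonical_symmetric_pose(rot: Mat3, n_fold: int) -> Tuple[Mat3, int]:
    """Hypothetical helper: among the n equivalent rotations R @ S_k, with
    S_k = rot_z(2*pi*k/n), return the one closest to the identity
    (largest trace means smallest rotation angle) and its index k."""
    best_k, best_trace, best_rot = 0, -math.inf, rot
    for k in range(n_fold):
        candidate = matmul(rot, rot_z(2.0 * math.pi * k / n_fold))
        trace = candidate[0][0] + candidate[1][1] + candidate[2][2]
        if trace > best_trace + 1e-9:
            best_k, best_trace, best_rot = k, trace, candidate
    return best_rot, best_k


def normalized_coords(p: Sequence[float], bbox_min: Sequence[float],
                      bbox_max: Sequence[float]) -> Vec3:
    """Map a model-frame point into [0, 1]^3 (assumes a non-degenerate box)."""
    return tuple((p[i] - bbox_min[i]) / (bbox_max[i] - bbox_min[i]) for i in range(3))


def correspondence_target(p_model: Sequence[float], rot: Mat3, n_fold: int,
                          bbox_min: Sequence[float], bbox_max: Sequence[float]) -> Vec3:
    """Hypothetical per-pixel target for the pixel showing p_model under pose rot.
    If R @ S_k is the canonical equivalent pose, that pixel shows model point
    S_k^{-1} p under the canonical pose, so that point is encoded instead.
    S_k^{-1} p is still a model point, so it stays inside the model's bounding box."""
    _, k = canonical_symmetric_pose(rot, n_fold)
    s_inv = transpose(rot_z(2.0 * math.pi * k / n_fold))
    return normalized_coords(apply(s_inv, p_model), bbox_min, bbox_max)
```

Under the same assumptions, a predicted code could be mapped back to a model point with p = bbox_min + code · (bbox_max − bbox_min). The resulting pixel/point pairs could then be passed to a robust PnP solver such as OpenCV's `solvePnPRansac`. The abstract does not say how the paper's improved RANSAC-PnP differs from that baseline.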


Acknowledgment

This work was supported by the National Natural Science Foundation of China (NSFC) under Grant No. 51575186; the Major Program of the National Natural Science Foundation of China under Grant No. 61690214; the Shanghai Science and Technology Action Plan under Grant Nos. 18DZ1204000, 18510745500, 18510750100 and 18510730600; the Shanghai Aerospace Science and Technology Innovation Fund (SAST) under Grant Nos. 2019-080 and 2019-116; and the Shanghai Sailing Program under Grant No. 20YF1417300.

Ethics declarations

Conflict of interest

Jianjun Yi has received research grants from the Shanghai Economic and Information Commission, the Shanghai Science and Technology Action Plan project, the National Natural Science Foundation of China, the Fundamental Research Funds for the Central Universities, the Shanghai Pujiang Program and the Shanghai Software and IC Industry Development Special Fund.

Cite this article

Zhang, Y., Yi, J., Chen, Y. et al. Pose estimation for workpieces in complex stacking industrial scene based on RGB images. Appl Intell 52, 8757–8769 (2022). https://doi.org/10.1007/s10489-021-02857-7