Abstract

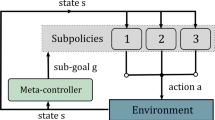

Hierarchical reinforcement learning (HRL) was proposed primarily to address problems with sparse reward signals and long time horizons. Many existing HRL algorithms use neural networks to generate goals automatically, without taking into account that not all goals advance the task. Some methods have addressed the optimization of goal generation, but they represent each goal by specific state values. In this paper, we propose a novel HRL algorithm for automatically discovering goals, which optimizes goal generation in the latent state space by screening goals and selecting policies. We compare our approach with state-of-the-art algorithms on Atari 2600 games, and the results show that it speeds up learning and improves performance.

Cite this article

Zhou, J., Chen, J., Tong, Y. et al. Screening goals and selecting policies in hierarchical reinforcement learning. Appl Intell 52, 18049–18060 (2022). https://doi.org/10.1007/s10489-021-03093-9

DOI: https://doi.org/10.1007/s10489-021-03093-9