Abstract

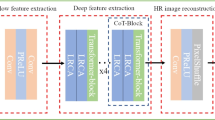

In this paper, we propose TC-Net, a novel and effective transformer-based network architecture for image denoising. The architecture is composed of several transformer blocks and convolutions. Four core designs make TC-Net suitable for image denoising: (1) An extra skip connection for feature fusion ensures more effective transmission and utilization of low-level and high-level features. (2) Window multi-head self-attention greatly reduces the amount of computation and captures long-range dependencies. (3) A convolution-based feed-forward network further improves the ability to capture local information. (4) A deep residual shrinkage network added to the transformer block improves the network's ability to deal with noise and its robustness in complex scenes. TC-Net is not limited to denoising: to handle similar low-level vision tasks, only the dataset needs to be changed. The model architecture remains the same and is trained separately on each task, which yields pretrained models for the other tasks. Extensive experiments demonstrate the image restoration ability of TC-Net and its efficiency and effectiveness in various scenes.
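As a rough illustration of designs (2) and (4), the sketch below counts how many token pairs attention has to score with and without windows, and applies the soft-thresholding operation that deep residual shrinkage networks are built around. It is not the authors' implementation: the function names, the 64 x 64 feature map, the window size of 8, and the fixed threshold of 0.5 are all assumptions chosen for the example. In a residual shrinkage network the threshold is learned by a small sub-network rather than fixed.

```python
def soft_threshold(x, tau):
    """Shrink x toward zero by tau; values with |x| <= tau become 0."""
    if x > tau:
        return x - tau
    if x < -tau:
        return x + tau
    return 0.0


def attention_pairs_global(height, width):
    """Token pairs scored by global self-attention on an H x W feature map."""
    tokens = height * width
    return tokens * tokens


def attention_pairs_windowed(height, width, window):
    """Token pairs scored when attention is restricted to non-overlapping
    window x window patches (H and W are assumed divisible by window)."""
    if height % window or width % window:
        raise ValueError("height and width must be divisible by window")
    num_windows = (height // window) * (width // window)
    tokens_per_window = window * window
    return num_windows * tokens_per_window * tokens_per_window


if __name__ == "__main__":
    # Hypothetical feature values; tau = 0.5 is an illustrative constant.
    features = [-1.5, -0.2, 0.0, 0.3, 2.0]
    print([soft_threshold(v, 0.5) for v in features])
    # -> [-1.0, 0.0, 0.0, 0.0, 1.5]

    # Cost ratio of global vs. windowed attention on a 64 x 64 map.
    print(attention_pairs_global(64, 64) // attention_pairs_windowed(64, 64, 8))
    # -> 64
```

Small activations, which are more likely to be noise, are zeroed while larger ones are only shrunk. For a fixed window size, the windowed pair count grows linearly with the number of pixels instead of quadratically.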

Acknowledgements

This research was supported by the Shaanxi Provincial Technical Innovation Guidance Special (Fund) Plan in 2020 (2020CGXNG-012). We thank Professor Tao Xue for his guidance and mentorship throughout the course of this research.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Xue, T., Ma, P. TC-net: transformer combined with cnn for image denoising. Appl Intell 53, 6753–6762 (2023). https://doi.org/10.1007/s10489-022-03785-w

DOI: https://doi.org/10.1007/s10489-022-03785-w