Abstract

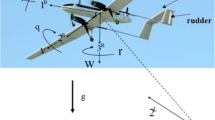

Successful operation of a miniature rotorcraft relies on capabilities including automated guidance, trajectory following, and teleoperation, all of which require accurate estimates of the vehicle’s body velocities and Euler angles. For larger rotorcraft that operate outdoors, the traditional approach is to combine a highly accurate IMU with GPS measurements. However, small-scale rotorcraft that operate indoors carry lower-quality MEMS IMUs because of their limited payload. In indoor applications, GPS is usually not available, and state estimates based on IMU measurements alone drift over time. In this paper, we propose a novel framework for state estimation that combines a dynamic flight model, IMU measurements, and 3D velocity estimates computed from an onboard monocular camera using computer vision. Our work differs from existing approaches in that, rather than using a single vision algorithm to update the vehicle’s state, we capitalize on the strengths of multiple vision algorithms by integrating them into a meta-algorithm for 3D motion estimation. Experiments on two real helicopter platforms in a laboratory environment, covering different motion types, demonstrate and evaluate the effectiveness of our approach.

Acknowledgments

This material is based in part upon work supported by the National Science Foundation through grants #IIP-0934327, #IIS-1017344, #CNS-1061489, #CNS-1138020, #IIP-1332133, #IIS-1427014, and #IIP-1432957.

Appendices

Appendix 1: Complementary filter

The basic equations for the roll (\(\phi \)), pitch (\(\theta \)), and yaw (\(\psi \)) angles of the complementary filter are given in (28). The complementary filter has time constant \(\tau = 0.99\,\text{s}\) and sampling time \(dt = 0.01\,\text{s}\).
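As an illustration only, a common first-order form of a complementary filter can be sketched as follows. This is not necessarily the form of (28): the blending weight \(\alpha = \tau /(\tau + dt)\), the function name `complementary_step`, and the use of a single reference angle per axis (for example, roll or pitch derived from the accelerometer) are assumptions. Only \(\tau \) and \(dt\) are taken from the text. The sketch also treats the gyro rate as the Euler-angle rate, which holds only for small angles.

```python
TAU = 0.99  # filter time constant in seconds (value from the text)
DT = 0.01   # sampling time in seconds (value from the text)

# Assumed blending weight for a first-order complementary filter.
# For the values above this evaluates to 0.99.
ALPHA = TAU / (TAU + DT)


def complementary_step(angle_prev, gyro_rate, angle_ref, alpha=ALPHA, dt=DT):
    """One filter step for a single Euler angle (hypothetical helper).

    angle_prev: previous angle estimate [rad]
    gyro_rate:  angular rate from the gyro [rad/s], used as the angle rate
                (small-angle assumption)
    angle_ref:  low-frequency reference angle [rad], e.g. from the accelerometer
    """
    # High-pass the integrated gyro rate, low-pass the reference angle.
    gyro_prediction = angle_prev + gyro_rate * dt
    return alpha * gyro_prediction + (1.0 - alpha) * angle_ref


if __name__ == "__main__":
    angle = 0.0
    for _ in range(500):
        angle = complementary_step(angle, gyro_rate=0.0, angle_ref=0.1)
    print(f"estimate after 5 s: {angle:.4f} rad")
```

With these values, the gyro dominates over short time scales while the reference angle corrects the slow drift.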

Using the Euler angles, the acceleration due to gravity can be removed from the IMU’s accelerometer measurements to get the accelerations in the body frame using (29).
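The gravity compensation step can be sketched as below. The conventions here are assumptions and may differ from (29): a ZYX Euler sequence, a body frame with the z-axis pointing down, and an accelerometer that measures specific force (acceleration minus gravity). The value of `G0` and the function names are hypothetical.

```python
import math

G0 = 9.81  # assumed gravitational acceleration [m/s^2]


def gravity_in_body(phi, theta, g=G0):
    """Gravity vector expressed in the body frame (z-down, ZYX Euler angles).

    Yaw does not appear because rotating about the vertical axis leaves
    the gravity direction unchanged.
    """
    return (
        -g * math.sin(theta),
        g * math.sin(phi) * math.cos(theta),
        g * math.cos(phi) * math.cos(theta),
    )


def remove_gravity(f_meas, phi, theta, g=G0):
    """Body-frame acceleration from a specific-force measurement.

    Assumes f_meas = a_body - g_body, so a_body = f_meas + g_body.
    """
    g_body = gravity_in_body(phi, theta, g)
    return tuple(f + gb for f, gb in zip(f_meas, g_body))


if __name__ == "__main__":
    # A level, stationary vehicle: the accelerometer reads -g on the z-axis.
    print(remove_gravity((0.0, 0.0, -G0), phi=0.0, theta=0.0))
```

Under these assumptions, a stationary vehicle yields zero body-frame acceleration at any roll and pitch, which is a quick sanity check on the sign convention.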

Appendix 2: EKF details for AR drone

The EKF used for estimation follows the standard approach. The state vector and its covariance estimate are denoted by \(\hat{x}\) and \(P\), respectively; the state transition Jacobians by \(\varPhi \) and \(G\); the observation vector by \(\hat{z}\); and the observation matrix by \(H\). After discretizing (2) with \(dt=0.01\), the \(\varPhi \) and \(G\) matrices for the AR Drone are:

and \(G_{2,1} = G_{4,2} = G_{6,3} = -dt\), with all other elements of \(G\) equal to zero. The matrix \(Q = 25\,I_{3\times 3}\) captures the uncertainty in the model and control inputs.
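A minimal sketch of how \(G\) and \(Q\) enter the covariance prediction of a standard EKF, \(P \leftarrow \varPhi P \varPhi ^T + G Q G^T\). The six-state dimension is an assumption inferred from the largest row index of \(G\) given above, and the identity matrix used for \(\varPhi \) in the usage example is a placeholder, not the AR Drone matrix. The function names are hypothetical.

```python
import numpy as np

DT = 0.01      # discretization step (value from the text)
N_STATES = 6   # assumption: inferred from the largest row index of G
N_INPUTS = 3   # Q is 3x3 in the text


def build_G(n_states=N_STATES, dt=DT):
    """Input matrix with G[2,1] = G[4,2] = G[6,3] = -dt (1-based indices)."""
    G = np.zeros((n_states, N_INPUTS))
    for row, col in ((2, 1), (4, 2), (6, 3)):
        G[row - 1, col - 1] = -dt  # convert to 0-based indexing
    return G


Q = 25.0 * np.eye(N_INPUTS)  # process noise from the text


def predict_covariance(P, Phi, G, Q):
    """Standard EKF covariance prediction."""
    return Phi @ P @ Phi.T + G @ Q @ G.T


if __name__ == "__main__":
    Phi_placeholder = np.eye(N_STATES)  # placeholder only
    P_next = predict_covariance(np.eye(N_STATES), Phi_placeholder, build_G(), Q)
    print(np.diag(P_next))
```

Each affected state gains a variance of \(25\,dt^2 = 0.0025\) per step from the input noise.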

The observation vector for updates using measurements from the accelerometer is

with an estimate of the measurement covariance \(R = 0.05\,I_{3\times 3}\) and observation matrix \(H\) given by:

The vision algorithms can determine when the rotorcraft is in a stationary hover. The measurement update for the stationary condition is a measurement of zero velocity \((z_m = \left[ \begin{array}{lll} 0&0&0 \end{array} \right] ^T)\) and uses the observation vector \(h(\hat{x}) = \left[ \begin{array}{lll} \hat{u}&\hat{v}&\hat{w} \end{array}\right] \) with \(R = 0.01\,I_{3\times 3}\) and

The last measurement update uses the velocity direction obtained from the vision algorithms. The estimate is found by normalizing the vector containing the velocity state estimates \(\hat{u}, \, \hat{v}\), and \(\hat{w}\). The observation vector is defined as

with observation matrix (calculated by taking the partial derivatives of \(h(\hat{x})\) with respect to the states):

The \(R\) matrix is provided by the vision algorithms.
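The observation model of the velocity-direction update can be sketched as follows, assuming \(h(\hat{x})\) is the unit vector \(\hat{\mathbf {v}}/\Vert \hat{\mathbf {v}}\Vert \) with \(\hat{\mathbf {v}} = [\hat{u},\hat{v},\hat{w}]^T\). Differentiating this unit vector with respect to the velocity states gives the \(3\times 3\) block \((I - \mathbf {n}\mathbf {n}^T)/\Vert \hat{\mathbf {v}}\Vert \), where \(\mathbf {n}\) is the unit vector itself. The function names are hypothetical, and where this block sits inside the full \(H\) depends on the state ordering, which is not assumed here.

```python
import numpy as np


def direction_observation(v):
    """Predicted velocity direction: the velocity estimate scaled to unit length."""
    v = np.asarray(v, dtype=float)
    speed = np.linalg.norm(v)
    if speed < 1e-9:
        # The direction is undefined near zero velocity.
        raise ValueError("velocity too small to define a direction")
    return v / speed


def direction_jacobian(v):
    """Partial derivatives of v/||v|| with respect to (u, v, w)."""
    v = np.asarray(v, dtype=float)
    speed = np.linalg.norm(v)
    n = v / speed
    # Projection onto the plane orthogonal to n, scaled by 1/speed.
    return (np.eye(3) - np.outer(n, n)) / speed


if __name__ == "__main__":
    v_hat = np.array([1.0, 2.0, -0.5])
    print(direction_observation(v_hat))
    print(direction_jacobian(v_hat))
```

The Jacobian maps the velocity vector itself to zero, so a direction measurement carries no information about speed. The update constrains only the heading of the velocity estimate.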

Appendix 3: EKF details for coaxial helicopter

The non-zero elements of the \(14\times 14\) state transition matrix \(\varPhi \) are defined as

with \(G\) matrix

The matrix \(Q=\left[ \begin{array}{cc} I_{3\times 3} & 0_{3\times 7}\\ 0_{7\times 3} & 0.5\,I_{7\times 7}\end{array}\right] \) captures the uncertainty due to modeling error and input noise. The measurement update based on the Euler angles (calculated from the IMU using the complementary filter (28)) uses observation vector \(h(\hat{x}) = [\hat{\phi },\hat{\theta },\hat{\psi }]^T\), observation matrix \(H = \left[ \begin{array}{ll} I_{3\times 3} & 0_{3\times 11} \end{array} \right] \), and an \(R\) matrix based on the accelerometer noise \((R = 0.01\,I_{3\times 3})\).
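A minimal sketch of the Euler-angle measurement update, using the textbook EKF update equations with the \(H\) and \(R\) given above for the 14-state coaxial helicopter model. The function name `ekf_update` and the numerical values in the usage example are hypothetical, and angle wrapping of the innovation is not handled.

```python
import numpy as np

N_STATES = 14  # state dimension from the text

# Observation matrix H = [I_3x3  0_3x11]: the Euler angles are the first three states.
H_EULER = np.hstack([np.eye(3), np.zeros((3, N_STATES - 3))])
R_EULER = 0.01 * np.eye(3)  # measurement covariance from the text


def ekf_update(x, P, z, H, R):
    """Textbook EKF measurement update for a linear observation h(x) = H x."""
    innovation = z - H @ x
    S = H @ P @ H.T + R                # innovation covariance
    # K = P H^T S^{-1}; S and P are symmetric, so solve instead of inverting.
    K = np.linalg.solve(S, H @ P).T
    x_new = x + K @ innovation
    P_new = (np.eye(len(x)) - K @ H) @ P
    return x_new, P_new


if __name__ == "__main__":
    x0 = np.zeros(N_STATES)
    P0 = np.eye(N_STATES)
    z_euler = np.array([0.1, 0.0, 0.0])  # illustrative roll measurement [rad]
    x1, P1 = ekf_update(x0, P0, z_euler, H_EULER, R_EULER)
    print(x1[:3], np.diag(P1)[:4])
```

With an identity prior covariance, the update moves the roll estimate almost all the way to the measurement and leaves the unobserved states untouched, because \(R\) is small relative to \(P\).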

The measurement estimate for a stationary hover (zero velocity) is given by \(h(\hat{x}) = \left[ \begin{array}{lll} \hat{u}&\hat{v}&\hat{w}\end{array}\right] \) with observation matrix \(H = \left[ \begin{array}{ll} 0_{3\times 11} & I_{3\times 3} \end{array} \right] \) and measurement covariance matrix \(R = 0.01\,I_{3\times 3}\). The final measurement update uses the velocity direction calculated from the vision algorithms, given by (34), and the \(R\) matrix provided as part of the vision update. The \(H\) matrix is (refer to (35) for \(H_{ij}\)):

Andersh, J., Cherian, A., Mettler, B. et al. A vision based ensemble approach to velocity estimation for miniature rotorcraft. Auton Robot 39, 123–138 (2015). https://doi.org/10.1007/s10514-015-9430-7

DOI: https://doi.org/10.1007/s10514-015-9430-7