Abstract

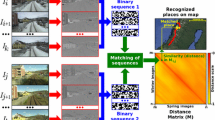

This paper presents an alternative approach to the problem of outdoor, persistent visual localisation against a known map. Instead of blindly applying a feature detector/descriptor combination over all images of all places, we leverage prior experiences of a place to learn place-dependent feature detectors (i.e., features that are unique to each place in our map and used for localisation). Furthermore, as these features do not represent low-level structure, such as edges or corners, but are in fact mid-level patches representing distinctive visual elements (e.g., windows, buildings, or silhouettes), we are able to localise across extreme appearance changes. Note that there is no requirement that the features possess semantic meaning, only that they are optimal for the task of localisation. This work extends previous work (McManus et al. 2014b) in the following ways: (i) we have included a landmark refinement and outlier rejection step during the learning phase, (ii) we have implemented an asynchronous pipeline design, (iii) we have tested on data collected in an urban environment, and (iv) we have implemented a purely monocular system. Using over 100 km of data for training, we present localisation results from Begbroke Science Park and central Oxford.

Notes

In their earlier work, Valgren and Lilienthal (2007) concluded that it was not possible to perform localisation across seasons with point features. Their later work incorporated epipolar geometry constraints to make this possible over a limited set of images.

As was done in Doersch et al. (2012).

We set \(K=5\) as done in Doersch et al. (2012).

In our experiments, the window was taken to be the distance between places, which is 10 m.

Maddern et al. (2014) demonstrated improved robustness to LAPS by using an illumination-invariant colour space.

References

Anati, R., Scaramuzza, D., Derpanis, K., & Daniilidis, K. (2012). Robot localization using soft object detection. In Proceedings of the IEEE international conference on robotics and automation (ICRA), St. Paul.

Atanasov, N., Zhu, M., Daniilidis, K., & Pappas, G. J. (2014). Semantic localisation via the matrix permanent. In Proceedings of robotics science and systems (RSS), Berkeley.

Bao, S. Y., & Savarese, S. (2011). Semantic structure from motion. In Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp. 2025–2032.

Bay, H., Ess, A., Tuytelaars, T., & Van Gool, L. (2008). SURF: Speeded up robust features. Computer Vision and Image Understanding (CVIU), 110(3), 346–359.

Castle, R. O., Gawley, D. J., Klein, G., & Murray, D. W. (2007). Towards simultaneous recognition, localization and mapping for hand-held and wearable cameras. In Proceedings of the IEEE international conference on robotics and automation (ICRA), Rome.

Churchill, W., & Newman, P. (2012). Practice makes perfect? Managing and leveraging visual experiences for lifelong navigation. In Proceedings of the international conference on robotics and automation, Saint Paul.

Dalal, N., & Triggs, B. (2005). Histograms of oriented gradients for human detection. In Proceedings of the conference on computer vision and pattern recognition (pp. 886–893), San Diego.

Davison, A., & Murray, D. (2002). Simultaneous localization and map-building using active vision. IEEE Transactions on Pattern Analysis and Machine Intelligence, 24(7), 865–880.

Davison, A., Reid, I., Molton, N., & Stasse, O. (2007). MonoSLAM: Real-time single camera SLAM. IEEE Transactions on Pattern Analysis and Machine Intelligence, 29(6), 1052–1067.

Doersch, C., Singh, S., Gupta, A., Sivic, J., & Efros, A. (2012). What makes Paris look like Paris? ACM Transactions on Graphics, 31(4), 101.

Furgale, P., & Barfoot, T. (2010). Visual teach and repeat for long-range rover autonomy. Journal of Field Robotics, Special Issue on “Visual Mapping and Navigation Outdoors”, 27(5), 534–560.

Hartley, R., & Zisserman, A. (2004). Multiple view geometry in computer vision (2nd ed.). Cambridge: Cambridge University Press, ISBN: 0521540518.

Johns, E., & Yang, G.-Z. (2013). Feature co-occurrence maps: Appearance-based localisation throughout the day. In Proceedings of the international conference on robotics and automation.

Kaess, M., Johannsson, H., Roberts, R., Ila, V., Leonard, J., & Dellaert, F. (2012). iSAM2: Incremental smoothing and mapping using the Bayes tree. International Journal of Robotics Research, 31(2), 216–235.

Ko, D. W., Yi, C., & Suh, I. H. (2013). Semantic mapping and navigation: A Bayesian approach. In Proceedings of the IEEE/RSJ international conference on intelligent robotics and systems (IROS), pp. 2630–2636.

Konolige, K., Bowman, J., Chen, J., Mihelich, P., Calonder, M., Lepetit, V., et al. (2010). View-based maps. The International Journal of Robotics Research, 29(8), 941–957.

Lategahn, H., Beck, J., Kitt, B., & Stiller, C. (2013). How to learn an illumination robust image feature for place recognition. IEEE intelligent vehicles symposium, Gold Coast.

Levenberg, K. (1944). A method for the solution of certain non-linear problems in least squares. The Quarterly of Applied Mathematics, 2, 164–168.

Linegar, C., Churchill, W., & Newman, P. (2015). Work smart, not hard: Recalling relevant experiences for vast-scale but time-constrained localisation. In IEEE international conference on robotics and automation (ICRA), Seattle.

Lowe, D. (2004). Distinctive image features from scale-invariant keypoints. International Journal of Computer Vision, 60(2), 91–110.

Maddern, W., Stewart, A., McManus, C., Upcroft, B., Churchill, W., & Newman, P. (2014). Illumination invariant imaging: Applications in robust vision-based localisation, mapping and classification for autonomous vehicles. In Proceedings of the visual place recognition in changing environments workshop, IEEE international conference on robotics and automation, Hong Kong.

McKinnon, D., Smith, R., & Upcroft, B. (2012). A semi-local method for iterative depth-map refinement. In Proceedings of the IEEE international conference on robotics and automation (ICRA).

McManus, C. (2010). The unscented Kalman filter for state estimation. Presented at the simultaneous localization and mapping (SLAM) workshop, 7th Canadian conference on computer vision (CRV).

McManus, C., Churchill, W., Maddern, W., Stewart, A., & Newman, P. (2014a). Shady dealings: Robust, long-term visual localisation using illumination invariance. In Proceedings of the IEEE international conference on robotics and automation (ICRA), Hong Kong.

McManus, C., Churchill, W., Napier, A., Davis, B., & Newman, P. (2013). Distraction suppression for vision-based pose estimation at city scales. In Proceedings of the IEEE international conference on robotics and automation, Karlsruhe.

McManus, C., Upcroft, B., & Newman, P. (2014b). Scene signatures: Localised and point-less features for localisation. In Proceedings of robotics science and systems (RSS), Berkeley.

Milford, M. (2013). Vision-based place recognition: How low can you go? The International Journal of Robotics Research, 32(7), 766–789.

Milford, M., & Wyeth, G. (2012). SeqSLAM: Visual route-based navigation for sunny summer days and stormy winter nights. In Proceedings of the IEEE international conference on robotics and automation (ICRA), Saint Paul.

Naseer, T., Spinello, L., Burgard, W., & Stachniss, C. (2014). Robust visual robot localization across seasons using network flows. In AAAI conference on artificial intelligence (AAAI), Quebec.

Neubert, P., Sünderhauf, N., & Protzel, P. (2013). Appearance change prediction for long-term navigation across seasons. In European conference on mobile robotics (ECMR).

Piniés, P., Paz, L. M., Gálvez-López, D., & Tardós, J. D. (2010). CI-Graph simultaneous localisation and mapping for three-dimensional reconstruction of large and complex environments using a multicamera system. Journal of Field Robotics, 27(5), 561–586.

Ranganathan, A., Matsumoto, S., & Ilstrup, D. (2013). Towards illumination invariance for visual localization. In Proceedings of the IEEE international conference on robotics and automation (ICRA) (pp. 3791–3798), Karlsruhe.

Salas-Moreno, R. F., Newcombe, R. A., Strasdat, H., Kelly, P. H. J., & Davison, A. J. (2013). SLAM++: Simultaneous localisation and mapping at the level of objects. In Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR).

Richardson, A., & Olson, E. (2013). Learning convolutional filters for interest point detection. In Proceedings of the IEEE international conference on robotics and automation (ICRA).

Sibley, G., Mei, C., Reid, I., & Newman, P. (2010). Vast-scale outdoor navigation using adaptive relative bundle adjustment. The International Journal of Robotics Research, 29(8), 958–980.

Singh, S., Gupta, A., & Efros, A. A. (2012). Unsupervised discovery of mid-level discriminative patches. In Proceedings of the European conference on computer vision (ECCV).

Stewart, A., & Newman, P. (2012). LAPS - localisation using appearance of prior structure: 6-DoF monocular camera localisation using prior pointclouds. In Proceedings of the international conference on robotics and automation, Saint Paul.

Stewart, A. D. (2015). Localisation using the appearance of prior structure. PhD thesis, University of Oxford.

Valgren, C., & Lilienthal, A. (2007). SIFT, SURF & seasons: Long-term outdoor localization using local features. In Proceedings of the 3rd European conference on mobile robotics (ECMR).

Valgren, C., & Lilienthal, A. (2010). SIFT, SURF and seasons: Appearance-based long-term localization in outdoor environments. Robotics and Autonomous Systems, 58(2), 149–156.

Yi, C., Suh, I. H., Lim, G. H., & Choi, B.-U. (2009). Active-semantic localization with a single consumer-grade camera. In Proceedings of the IEEE international conference on systems, man and cybernetics (SMC), pp. 2161–2166.

Acknowledgments

This work would not have been possible without the financial support from the Nissan Motor Company, the EPSRC Leadership Fellowship Grant (EP/J012017/1), and V-CHARGE (Grant Agreement Number 269916).

Additional information

This is one of several papers published in Autonomous Robots comprising the “Special Issue on Robotics Science and Systems”.

Cite this article

McManus, C., Upcroft, B. & Newman, P. Learning place-dependant features for long-term vision-based localisation. Auton Robot 39, 363–387 (2015). https://doi.org/10.1007/s10514-015-9463-y

DOI: https://doi.org/10.1007/s10514-015-9463-y