Abstract

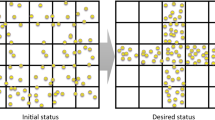

This paper presents a Markov chain based approach for the probabilistic density control of a large number of autonomous agents, i.e., a swarm. The proposed approach specifies the time evolution of the probabilistic density distribution by using a Markov chain, which guides the swarm to a desired steady-state distribution while satisfying the prescribed ergodicity, motion, and safety constraints. This paper generalizes our previous results on density upper bound constraints and captures a general class of linear safety constraints that bound the flow of agents. Our first contribution is to formulate these safety constraints as equivalent linear inequality conditions on the Markov chain matrices by using the duality theory of convex optimization. The safety constraints facilitate low-level conflict avoidance policies that compute and execute the detailed agent state trajectories. Our second contribution is to develop (i) linear matrix inequality based offline methods and (ii) quadratic programming based online methods that incorporate these constraints into the Markov chain synthesis. The offline method provides a feasible solution for the Markov matrix when there is no density feedback. The online method uses real-time estimates of the swarm density distribution to continuously update the Markov matrices so as to maximize the convergence rate within the problem constraints. The paper also introduces a decentralized method to compute the density estimates needed for the online synthesis method.
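To make the basic density-evolution mechanism concrete, here is a minimal Python sketch. It assumes a column-stochastic Markov matrix M, where M[i, j] is the probability that an agent in bin j moves to bin i, builds M with the Metropolis-Hastings rule so that a chosen distribution v is stationary and moves follow a motion graph, and then propagates the density as x(t+1) = M x(t). The Metropolis-Hastings construction is a swapped-in stand-in for illustration, not the paper's LMI or QP synthesis, and it enforces no safety constraints; the helper names, the 4-bin path graph, and v are hypothetical.

```python
import numpy as np


def metropolis_hastings_matrix(adj: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Hypothetical helper: column-stochastic M with M @ v = v.

    M[i, j] is the probability of moving from bin j to bin i; moves are only
    allowed along edges of the symmetric motion graph `adj`. Requires v > 0.
    """
    m = len(v)
    nbr = adj.astype(bool) & ~np.eye(m, dtype=bool)  # neighbors, self excluded
    deg = nbr.sum(axis=0)                             # neighbor count per bin
    M = np.zeros((m, m))
    for j in range(m):                                # column j: agents in bin j
        for i in np.flatnonzero(nbr[:, j]):
            k_ij = 1.0 / deg[j]                       # propose move j -> i
            k_ji = 1.0 / deg[i]                       # reverse proposal i -> j
            # Metropolis-Hastings acceptance yields detailed balance:
            # M[i, j] * v[j] == M[j, i] * v[i], hence M @ v == v.
            M[i, j] = k_ij * min(1.0, (v[i] * k_ji) / (v[j] * k_ij))
        M[j, j] = 1.0 - M[:, j].sum()                 # leftover mass: stay in bin j
    return M


def propagate_density(M: np.ndarray, x0: np.ndarray, steps: int) -> np.ndarray:
    """Return the trajectory x(0), ..., x(steps) of x(t+1) = M x(t)."""
    xs = [np.asarray(x0, dtype=float)]
    for _ in range(steps):
        xs.append(M @ xs[-1])
    return np.array(xs)


# Hypothetical example: 4 bins on a line (bin k may move only to k-1 or k+1).
adj = np.array([[1, 1, 0, 0],
                [1, 1, 1, 0],
                [0, 1, 1, 1],
                [0, 0, 1, 1]])
v = np.array([0.1, 0.2, 0.3, 0.4])      # desired steady-state distribution
M = metropolis_hastings_matrix(adj, v)
xs = propagate_density(M, np.array([1.0, 0.0, 0.0, 0.0]), steps=200)

assert np.allclose(M.sum(axis=0), 1.0)  # every column is a probability vector
assert np.allclose(M @ v, v)            # v is a stationary distribution
assert np.all(M[adj == 0] == 0)         # no moves between non-adjacent bins
print(np.round(xs[-1], 4))              # final density, close to v
```

This construction only guarantees stationarity of v and respect for the motion graph; it gives no control over transient densities or agent flows, which is the role of the constrained synthesis described above.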

References

Açıkmeşe, B., & Bayard, D. S. (2012). A Markov chain approach to probabilistic swarm guidance. In American control conference, Montreal, Canada (pp. 6300–6307).

Açıkmeşe, B., & Bayard, D. S. (2015). Markov chain approach to probabilistic guidance for swarms of autonomous agents. Asian Journal of Control, 17(4), 1–20.

Açıkmeşe, B., Demir, N., & Harris, M. (2014). Convex necessary and sufficient conditions for density safety constraints in Markov chain synthesis. IEEE Transactions on Automatic Control (Accepted).

Açıkmeşe, B., Hadaegh, F. Y., Scharf, D. P., & Ploen, S. R. (2007). Formulation and analysis of stability for spacecraft formations. IET Control Theory & Applications, 1(2), 461–474.

Arapostathis, A., Kumar, R., & Tangirala, S. (2003). Controlled Markov chains with safety upper bound. IEEE Transactions on Automatic Control, 48(7), 1230–1234.

Arslan, G., Marden, J. R., & Shamma, J. S. (2007). Autonomous vehicle-target assignment: A game-theoretical formulation. ASME Journal of Dynamic Systems, Measurement, and Control, 129(5), 197–584.

Bai, H., Arcak, M., & Wen, J. T. (2008). Adaptive design for reference velocity recovery in motion coordination. Systems & Control Letters, 57(8), 602–610.

Berkovitz, L. D. (2002). Convexity and optimization in \(R^{n}\). New York: Wiley.

Berman, A., & Plemmons, R. J. (1994). Nonnegative matrices in the mathematical sciences. SIAM.

Bertsekas, D. P., & Tsitsiklis, J. N. (2007). Comments on "Coordination of groups of mobile autonomous agents using nearest neighbor rules". IEEE Transactions on Automatic Control, 52(5), 968–969.

Boyd, S., Diaconis, P., Parrilo, P., & Xiao, L. (2009). Fastest mixing Markov chain on graphs with symmetries. SIAM Journal on Optimization, 20(2), 792–819.

Boyd, S., Diaconis, P., & Xiao, L. (2004). Fastest mixing Markov chain on a graph. SIAM Review, 46, 667–689.

Boyd, S., El Ghaoui, L., Feron, E., & Balakrishnan, V. (1994). Linear matrix inequalities in system and control theory. SIAM.

Boyd, S., & Vandenberghe, L. (2004). Convex optimization. Cambridge, MA: Cambridge University Press.

Chattopadhyay, I., & Ray, A. (2009). Supervised self-organization of homogeneous swarms using ergodic projections of Markov chains. IEEE Transactions on Systems, Man, and Cybernetics, 39(6), 1505–1515.

Chung, K. L. (2001). A course in probability theory. London: Academic Press.

Corless, M., & Frazho, A. E. (2003). Linear systems and control: An operator perspective. New York: Marcel Dekker.

Cortés, J., Martínez, S., Karatas, T., & Bullo, F. (2004). Coverage control for mobile sensing networks. IEEE Transactions on Robotics and Automation, 20(2), 243–255.

de Oliveira, M. C., Bernussou, J., & Geromel, J. C. (1999). A new discrete-time robust stability condition. Systems & Control Letters, 261–265.

Demir, N., Açıkmeşe, B., & Harris, M. (2014). Convex optimization formulation of density upper bound constraints in Markov chain synthesis. In American control conference (pp. 483–488). June 4-6 2014.

Demir, N., Açıkmeşe, B., & Pehlivanturk, C. (2014). Density control for decentralized autonomous agents with conflict avoidance. In IFAC world congress (pp. 11715–11721).

Deo, N. (1974). Graph theory with applications to engineering and computer science. Englewood Cliffs, NJ: Prentice-Hall.

Domahidi, A., Chu, E., & Boyd, S. (2013). ECOS: An SOCP solver for embedded systems. In European control conference (ECC) (pp. 3071–3076).

Dueri, D., Açıkmeşe, B., Baldwin, M., & Erwin, R. S. (2014). Finite-horizon controllability and reachability for deterministic and stochastic linear control systems with convex constraints. In American control conference (ACC), 2014 (pp. 5016–5023). IEEE.

Dueri, D., Zhang, J., & Açıkmeşe, B. (2014). Automated custom code generation for embedded, real-time second order cone programming. In IFAC world congress.

Fiedler, M. (1973). Algebraic connectivity of graphs. Czechoslovak Mathematical Journal, 23(2), 298–305.

Fiedler, M. (2008). Special matrices and their applications in numerical mathematics. New York: Dover.

Girard, A., & Le Guernic, C. (2008). Zonotope/hyperplane intersection for hybrid systems reachability analysis. In M. Egerstedt & B. Mishra (Eds.), Hybrid systems: Computation and control. Lecture notes in computer science (Vol. 4981, pp. 215–228). Berlin, Heidelberg: Springer.

Grant, M., & Boyd, S. (2013). CVX: Matlab software for disciplined convex programming, version 2.0 beta.

Hadaegh, F. Y., Açıkmeşe, B., Bayard, D. S., Singh, G., Mandic, M., Chung, S., & Morgan, D. (2013). Guidance and control of formation flying spacecraft: From two to thousands. Festschrift honoring John L. Junkins, Tech Science Press (pp. 327–371).

Horn, R. A., & Johnson, C. R. (1985). Matrix analysis. Cambridge, MA: Cambridge University Press.

Horn, R. A., & Johnson, C. R. (1991). Topics in matrix analysis. Cambridge, MA: Cambridge University Press.

Hsieh, M. A., Halasz, A., Berman, S., & Kumar, V. (2008). Biologically inspired redistribution of a swarm of robots among multiple sites. Swarm Intelligence, 2(2–4), 121–141.

Hsieh, M. A., Kumar, V., & Chaimowicz, L. (2008). Decentralized controllers for shape generation with robotic swarms. Robotica, 26(5), 691–701.

Hsu, S., Arapostathis, A., & Kumar, R. (2006). On optimal control of Markov chains with safety constraint. In Proceeding of the American control conference (pp. 4516–4521).

Peng, J., Roos, C., & Terlaky, T. (2002). Self-regularity: A new paradigm for primal-dual interior-point algorithms. Princeton, NJ: Princeton University Press.

Jadbabaie, A., Lin, J., & Morse, A. S. (2003). Coordination of groups of mobile autonomous agents using nearest neighbor rules. IEEE Transactions on Automatic Control, 48(6), 988–1001.

Kalman, R. E., & Bertram, J. E. (1960). Control system analysis and design via the second method of Lyapunov, II: Discrete-time systems. Journal of Basic Engineering, 82, 394–400.

Kim, Y., Mesbahi, M., & Hadaegh, F. (2004). Multiple-spacecraft reconfiguration through collision avoidance, bouncing, and stalemate. Journal of Optimization Theory and Applications, 122(2), 323–343.

Lawford, M., & Wonham, W. (1993). Supervisory control of probabilistic discrete event systems. In Proceedings of the 36th midwest symposium on circuits and systems (pp. 327–331).

Löfberg, J. (2004). YALMIP: A toolbox for modeling and optimization in MATLAB. In Proceedings of the CACSD Conference, Taipei, Taiwan.

Lumelsky, V. J., & Harinarayan, K. R. (1997). Decentralized motion planning for multiple mobile robots: The cocktail party model. Autonomous Robots, 4(1), 121–135.

Maidens, J., Kaynama, S., Mitchell, I., Oishi, M., & Dumont, G. (2013). Lagrangian methods for approximating the viability kernel in high-dimensional systems. Automatica, 49(7), 2017–2029.

Martínez, S., Bullo, F., Cortés, J., & Frazzoli, E. (2007). On synchronous robotic networks-part II: Time complexity of rendezvous and deployment algorithms. IEEE Transactions on Automatic Control, 52(12), 2214–2226.

Martínez, S., Cortés, J., & Bullo, F. (2007). Motion coordination with distributed information. IEEE Control Systems Magazine, 75–88.

Mattingley, J., & Boyd, S. (2010). Real-time convex optimization in signal processing. IEEE Signal Processing Magazine, 27(3), 50–61.

Mattingley, J., & Boyd, S. (2012). Cvxgen: A code generator for embedded convex optimization. Optimization and Engineering, 13(1), 1–27.

Mesbahi, M., & Egerstedt, M. (2010). Graph theoretic methods in multiagent networks. Princeton, NJ: Princeton University Press.

Mesquita, A. R., Hespanha, J. P., & Astrom, K. (2008). Optimotaxis: A stochastic multi-agent on site optimization procedure. Hybrid systems: Computation and control. Lecture notes in computer science, 4981, 358–371.

Metropolis, N., & Ulam, S. (1949). The Monte-Carlo method. Journal of the American Statistical Association, 44, 335–341.

Nesterov, Y., & Nemirovsky, A. (1994). Interior-point polynomial methods in convex programming. SIAM.

Pallottino, L., Scordio, V., Bicchi, A., & Frazzoli, E. (2007). Decentralized cooperative policy for conflict resolution in multivehicle systems. IEEE Transactions on Robotics, 23(6), 1170–1183.

Pavone, M., & Frazzoli, E. (2007). Decentralized policies for geometric pattern formation and path coverage. ASME Journal of Dynamic Systems, Measurement, and Control, 129(5), 633–643.

Pavone, M., Frazzoli, E., & Bullo, F. (2011). Adaptive and distributed algorithms for vehicle routing in a stochastic and dynamic environment. IEEE Transactions on Automatic Control, 56(6), 1259–1274.

Ramirez, J., Pavone, M., Frazzoli, E., & Miller, D. W. (2010). Distributed control of spacecraft formations via cyclic pursuit: Theory and experiments. AIAA Journal of Guidance, Control, and Dynamics, 33(5), 1655–1669.

Richards, A., Schouwenaars, T., How, J., & Feron, E. (2002). Spacecraft trajectory planning with avoidance constraints using mixed-integer linear programming. AIAA Journal of Guidance, Control, and Dynamics, 25(4), 755–764.

Scharf, D. P., Hadaegh, F., & Ploen, S. R. (2003). A survey of spacecraft formation flying guidance and control (part I): Guidance. In Proceedings of the American control conference.

Tillerson, M., Inalhan, G., & How, J. (2002). Coordination and control of distributed spacecraft systems using convex optimization techniques. International Journal of Robust and Nonlinear Control, 12(1), 207–242.

Tutuncu, R. H., Toh, K. C., & Todd, M. J. (2003). Solving semidefinite-quadratic-linear programs using SDPT3. Mathematical Programming, 95(2), 189–217.

Acknowledgments

This research was supported by the Defense Advanced Research Projects Agency (DARPA) under Grant No. D14AP00084.

Appendix: Two interpretations of the probabilistic swarm distribution

The following lemma provides two interpretations of the swarm distribution x(t): (i) x(t) is the vector of expected fractions of agents in each bin; (ii) the empirical distribution of the ensemble of agent states, \(\{r_k(t)\}_{k=1}^N\), converges to x(t) with probability one as N tends to infinity.

Lemma 2

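Consider N agents with \(x_1(0)=\cdots =x_N(0) = x_0 \in {\mathbb {P}}^m\), where \(x_k, \ k= 1,\ldots ,N,\) is defined as in (1). Further, suppose that each agent uses the PDC algorithm with the same Markov matrix M(t), that is, \(M_1(t) = \cdots =M_N(t)=M(t)\). Then, \(x_1(t) = \cdots = x_N(t) = x(t) \) for \(t=0,1,\ldots ,\) where

\[ x(t+1) = M(t)\,x(t), \qquad x[i](t) = \mathrm{prob}(r(t) \in R_i), \quad i=1,\ldots ,m, \tag{33} \]

\[ x(t) = {\mathbb {E}}\left( \mathbf{n}(t)/N \right), \tag{34} \]

\( \mathrm{prob}(r(t) \in R_i )\) is the probability of finding an agent in the ith bin, and \(\mathbf{n}(t)\) is the vector of the number of agent states in each bin at t. Furthermore,

\[ \mathrm{prob}\left( \lim _{N \rightarrow \infty } \frac{\mathbf{n}(t)}{N} = x(t) \right) = 1. \tag{35} \]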

Proof

It is straightforward to show that (3) implies (33) by using the total probability theorem (Chung 2001) and noting that \( \mathrm{prob}(r(t+1) \in R_i) = \sum _{j=1}^m \mathrm{prob}(r(t+1) \in R_i \mid r(t) \in R_j)\, \mathrm{prob}(r(t)\in R_j).\) Since the PDC algorithm uses the same Markov matrix for every agent at each time, \(M_1(t)=\cdots =M_N (t)=M(t)\), and all agents start from \(x_1(0)=\cdots =x_N(0)=x(0)\), the probability distribution of every agent evolves according to (33). Hence \( \mathrm{prob}(r(t) \in R_i )\) is the probability of finding any given agent in the ith bin. Since \({\mathbb {E}}(\mathbf{n}[i](t)) = \sum _{k=1}^N x_k[i](t)= N x[i](t)\), we have \(x(t)={\mathbb {E}}(\mathbf{n}(t)/N)\).

Next, we prove (35) by using standard arguments as in Theorem 5.4.2 of Chung (2001). Consider x[i](t), the probability of finding any given agent in bin i at time t. Define a new random variable (RV), \(Z_k[i](t)\), such that \(Z_k[i](t) =1\) if agent k is in bin i at time t, and \(Z_k[i](t) =0\) otherwise. Clearly, \({\mathbb {E}}(Z_k[i](t)) = x_k[i](t)=x[i](t)\), and since each agent realizes its random transitions independently of the others, \(Z_k[i](t), \ k=1,\ldots ,N,\) are independent and identically distributed (iid) RVs. Then \(\mathbf{n}[i](t) =Z_1[i](t) +\cdots + Z_N[i](t)\). Since \(Z_k[i](t), \ k=1,\ldots ,N\), are iid RVs with \({\mathbb {E}}[| Z_k[i](t)|] < \infty \), the strong law of large numbers (Chung 2001, Theorem 5.4.2) gives \( \mathrm{prob}\left( \lim _{N \rightarrow \infty } \frac{\mathbf{n}[i](t)}{N} = x[i] (t) \right) = 1 \) for each \(i=1,\ldots ,m\). Since there are finitely many bins, these limits hold simultaneously with probability one, which implies (35). \(\square \)
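Both interpretations in Lemma 2 can be checked numerically. The sketch below assumes a column-stochastic M with M[i, j] the probability of moving from bin j to bin i, and agents that draw their initial bins and transitions independently. It simulates N agents, records the bin fractions n(t)/N, and compares them with x(t) from the total-probability recursion (33). The helper simulate_swarm and the 3-bin matrix are hypothetical.

```python
import numpy as np


def simulate_swarm(M, x0, n_agents, steps, seed=0):
    """Hypothetical helper: every agent applies the same column-stochastic M.

    Returns (empirical, exact), each of shape (steps + 1, m): the bin
    fractions n(t)/N from simulation and x(t) from x(t+1) = M x(t).
    """
    rng = np.random.default_rng(seed)
    M = np.asarray(M, dtype=float)
    m = M.shape[0]
    x = np.asarray(x0, dtype=float)
    cdf = np.cumsum(M, axis=0)                  # cdf[:, j] is the CDF of column j
    bins = rng.choice(m, size=n_agents, p=x)    # iid initial bins r_k(0) ~ x(0)
    empirical, exact = [], []
    for _ in range(steps + 1):
        empirical.append(np.bincount(bins, minlength=m) / n_agents)  # n(t)/N
        exact.append(x)
        # Each agent in bin j independently jumps to bin i with prob. M[i, j]:
        # inverse-CDF sampling, counting CDF entries below its uniform draw.
        u = rng.random(n_agents)
        bins = np.minimum((u > cdf[:, bins]).sum(axis=0), m - 1)
        x = M @ x                               # total probability theorem
    return np.array(empirical), np.array(exact)


M = np.array([[0.5, 0.2, 0.0],
              [0.5, 0.6, 0.3],
              [0.0, 0.2, 0.7]])                 # columns sum to 1
x0 = np.array([1.0, 0.0, 0.0])
for n in (100, 10_000, 100_000):
    emp, ex = simulate_swarm(M, x0, n, steps=10)
    # Largest gap between n(t)/N and x(t) over all bins and times.
    print(n, np.abs(emp - ex).max())
```

The printed gap should shrink as N grows (roughly like 1/sqrt(N), by the central limit theorem), which is consistent with the almost-sure convergence in (35).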

About this article

Cite this article

Demir, N., Eren, U. & Açıkmeşe, B. Decentralized probabilistic density control of autonomous swarms with safety constraints. Auton Robot 39, 537–554 (2015). https://doi.org/10.1007/s10514-015-9470-z

DOI: https://doi.org/10.1007/s10514-015-9470-z