Abstract

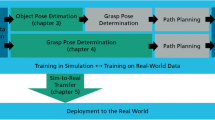

We consider the problem of path planning in an initially unknown environment, where a robot has no a priori map but has access to information it accumulated while navigating similar, but not identical, environments. To address this problem, we propose a novel machine learning-based algorithm called Semi-Markov Decision Process with Unawareness and Transfer (SMDPU-T). In SMDPU-T, a robot records the sequences of actions it takes around obstacles as options, and later reuses these options within a framework called Markov Decision Process with Unawareness (MDPU) to learn suitable, collision-free maneuvers around more complex obstacles. We analytically derive cost bounds for the option selected by SMDPU-T and the worst-case time complexity of the algorithm. Experiments with simulated robots in the Webots simulator show that SMDPU-T takes \(24\%\) of the planning time and \(39\%\) of the total time that a recent sampling-based path planner needs to solve the same navigation tasks; hardware experiments on a Turtlebot robot show that, on average, SMDPU-T takes \(53\%\) of the planning time and \(60\%\) of the total time of that planner.

Notes

SLAM-based techniques could also be used for localization; to keep the focus on the learning problem, we do not discuss localization issues further.

The fitness score is the Jaccard Index (JI) between the detected obstacle pattern and each obstacle pattern in P, as described in Saha and Dasgupta (2017a).

If the fitness score is still below the fitness threshold, a local motion planner is called to construct a trajectory around the detected obstacle pattern.
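The fitness test in the two notes above can be sketched as follows. This is only an illustration, not the authors' implementation: it assumes that an obstacle pattern is represented as a set of occupied grid cells, and the names `jaccard_index`, `best_match`, `library`, and `fitness_threshold` are hypothetical. It also collapses the matching into a single pass, so any intermediate step implied by "still below" is not modeled.

```python
from typing import Dict, FrozenSet, Optional, Tuple

# Assumption: an obstacle pattern is a set of occupied (row, col) grid cells.
Cell = Tuple[int, int]
Pattern = FrozenSet[Cell]


def jaccard_index(a: Pattern, b: Pattern) -> float:
    """Return |a & b| / |a | b|; two empty patterns count as identical."""
    union = a | b
    if not union:
        return 1.0
    return len(a & b) / len(union)


def best_match(
    detected: Pattern,
    library: Dict[str, Pattern],
    fitness_threshold: float,
) -> Tuple[Optional[str], float]:
    """Pick the stored pattern with the highest Jaccard Index.

    Returns (None, score) when even the best score is below the threshold,
    which is the case where a local motion planner would be called instead.
    """
    best_name: Optional[str] = None
    best_score = 0.0
    for name, pattern in library.items():
        score = jaccard_index(detected, pattern)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < fitness_threshold:
        return None, best_score
    return best_name, best_score


if __name__ == "__main__":
    # Hypothetical pattern library P with two stored patterns.
    library = {
        "L": frozenset({(0, 0), (0, 1), (0, 2), (1, 0)}),
        "wall": frozenset({(0, 0), (0, 1), (0, 2)}),
    }
    detected = frozenset({(0, 0), (0, 1), (0, 2), (1, 0), (2, 0)})
    print(best_match(detected, library, fitness_threshold=0.7))
    print(best_match(detected, library, fitness_threshold=0.9))
```

With the lower threshold the detected pattern is matched to a stored one, and with the higher threshold the function returns `None` as the name, signalling the fallback to a local planner.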

If \(Dis_{thresh}\ne 0\), our analysis gives \(D_{opt}=CH/2+3Dis_{thresh}\).

References

Andreas, J., Klein, D., & Levine, S. (2017). Modular multitask reinforcement learning with policy sketches. In ICML (Vol. 70, pp. 166–175). PMLR.

Argall, B., Chernova, S., Veloso, M. M., & Browning, B. (2009). A survey of robot learning from demonstration. Robotics and Autonomous Systems, 57(5), 469–483.

Bacon, P., Harb, J., & Precup, D. (2017). The option-critic architecture. In AAAI (pp. 1726–1734). Menlo Park: AAAI Press.

Bajracharya, M., Tang, B., Howard, A., Turmon, M. J., & Matthies, L. H. (2008). Learning long-range terrain classification for autonomous navigation. In ICRA (pp. 4018–4024). IEEE.

Berenson, D., Abbeel, P., & Goldberg, K. (2012). A robot path planning framework that learns from experience. In ICRA (pp. 3671–3678). IEEE.

Bischoff, B., Nguyen-Tuong, D., Lee, I., Streichert, F., & Knoll, A. (2013). Hierarchical reinforcement learning for robot navigation. In ESANN.

Boularias, A., Duvallet, F., Oh, J., & Stentz, A. (2016). Learning qualitative spatial relations for robotic navigation. In IJCAI (pp. 4130–4134). IJCAI/AAAI Press.

Bowen, C., Ye, G., & Alterovitz, R. (2015). Asymptotically optimal motion planning for learned tasks using time-dependent cost maps. IEEE Transactions on Automation Science and Engineering, 12(1), 171–182.

Bruin, T. de, Kober, J., Tuyls, K., & Babuska, R. (2016). Improved deep reinforcement learning for robotics through distribution-based experience retention. In IROS (pp. 3947–3952). IEEE.

Brys, T., Harutyunyan, A., Taylor, M. E., & Nowé, A. (2015). Policy transfer using reward shaping. In AAMAS (pp. 181–188). ACM.

Burchfiel, B., Tomasi, C., & Parr, R. (2016). Distance minimization for reward learning from scored trajectories. In Proceedings of the thirtieth AAAI conference on artificial intelligence (pp. 3330–3336). AAAI Press.

Calinon, S. (2009). Robot programming by demonstration—a probabilistic approach. Lausanne: EPFL Press.

Chernova, S., & Veloso, M. M. (2009). Interactive policy learning through confidence-based autonomy. Journal of Artificial Intelligence Research, 34, 1–25.

Choset, H. M. (2005). Principles of robot motion: Theory, algorithms, and implementation. Cambridge: MIT Press.

Cutler, M., & How, J. P. (2015). Efficient reinforcement learning for robots using informative simulated priors. In ICRA (pp. 2605–2612). IEEE.

Deisenroth, M. P., & Rasmussen, C. E. (2011). PILCO: A model-based and data-efficient approach to policy search. In ICML (pp. 465–472). Omnipress.

Elbanhawi, M., & Simic, M. (2014). Sampling-based robot motion planning: A review. IEEE Access, 2, 56–77.

Erkan, A., Hadsell, R., Sermanet, P., Ben, J., Muller, U., & LeCun, Y. (2007). Adaptive long range vision in unstructured terrain. In IROS (pp. 2421–2426). IEEE.

Fachantidis, A., Partalas, I., Taylor, M. E., & Vlahavas, I. (2015). Transfer learning with probabilistic mapping selection. Adaptive Behavior, 23(1), 3–19.

Fachantidis, A., Partalas, I., Taylor, M. E., & Vlahavas, I. P. (2011). Transfer learning via multiple inter-task mappings. In EWRL (Vol. 7188, pp. 225–236). Springer.

Fernández, F., García, J., & Veloso, M. M. (2010). Probabilistic policy reuse for inter-task transfer learning. Robotics and Autonomous Systems, 58(7), 866–871.

Galceran, E., & Carreras, M. (2013). A survey on coverage path planning for robotics. Robotics and Autonomous Systems, 61(12), 1258–1276.

Gammell, J. D., Srinivasa, S. S., & Barfoot, T. D. (2014). Informed RRT*: Optimal sampling-based path planning focused via direct sampling of an admissible ellipsoidal heuristic. In IROS (pp. 2997–3004). IEEE.

Gopalan, N., desJardins, M., Littman, M. L., MacGlashan, J., Squire, S., Tellex, S., et al. (2017). Planning with abstract Markov decision processes. In ICAPS (pp. 480–488). AAAI Press.

Gu, S., Holly, E., Lillicrap, T. P., & Levine, S. (2017). Deep reinforcement learning for robotic manipulation with asynchronous off-policy updates. In ICRA (pp. 3389–3396). IEEE.

Happold, M., Ollis, M., & Johnson, N. (2006). Enhancing supervised terrain classification with predictive unsupervised learning. In G. S. Sukhatme, S. Schaal, W. Burgard, & D. Fox (Eds.), Robotics: Science and systems. Cambridge: The MIT Press.

Hester, T., & Stone, P. (2013). TEXPLORE: Real-time sample-efficient reinforcement learning for robots. Machine Learning, 90(3), 385–429.

Hussein, A., Gaber, M. M., Elyan, E., & Jayne, C. (2017). Imitation learning: A survey of learning methods. ACM Computing Surveys, 50(2), 21:1–21:35.

Jeni, L. A., Istenes, Z., Korondi, P., & Hashimoto, H. (2007). Hierarchical reinforcement learning for robot navigation using the intelligent space concept. In ICIES (pp. 149–153).

Kim, B., Farahmand, A., Pineau, J., & Precup, D. (2013). Learning from limited demonstrations. In NIPS (pp. 2859–2867).

Kim, D., Sun, J., Oh, S. M., Rehg, J. M., & Bobick, A. F. (2006). Traversability classification using unsupervised on-line visual learning for outdoor robot navigation. In ICRA (pp. 518–525). IEEE.

Kober, J., Bagnell, J. A., & Peters, J. (2013). Reinforcement learning in robotics: A survey. The International Journal of Robotics Research, 32(11), 1238–1274.

Kober, J., & Peters, J. (2011). Policy search for motor primitives in robotics. Machine Learning, 84(1–2), 171–203.

Kollar, T., & Roy, N. (2008). Trajectory optimization using reinforcement learning for map exploration. The International Journal of Robotics Research, 27(2), 175–196.

Konidaris, G., & Barto, A. G. (2007). Building portable options: Skill transfer in reinforcement learning. In IJCAI (pp. 895–900).

Levine, S., Finn, C., Darrell, T., & Abbeel, P. (2016). End-to-end training of deep visuomotor policies. The Journal of Machine Learning Research, 17(1), 1334–1373.

Lien, J., & Lu, Y. (2009). Planning motion in environments with similar obstacles. In J. Trinkle, Y. Matsuoka, & J. A. Castellanos (Eds.), Robotics: Science and systems. Cambridge: The MIT Press.

Lillicrap, T. P., Hunt, J. J., Pritzel, A., Heess, N., Erez, T., Tassa, Y., et al. (2016). Continuous control with deep reinforcement learning. In ICLR.

Mahler, J., & Goldberg, K. (2017). Learning deep policies for robot bin picking by simulating robust grasping sequences. In CoRL (Vol. 78, pp. 515–524). PMLR.

Mahler, J., Liang, J., Niyaz, S., Laskey, M., Doan, R., Liu, X., et al. (2017). Dex-net 2.0: Deep learning to plan robust grasps with synthetic point clouds and analytic grasp metrics. In N. M. Amato, S. S. Srinivasa, N. Ayanian & S. Kuindersma (Eds.), Robotics: Science and systems XIII. Cambridge, MA: Massachusetts Institute of Technology.

Manschitz, S., Kober, J., Gienger, M., & Peters, J. (2015). Learning movement primitive attractor goals and sequential skills from kinesthetic demonstrations. Robotics and Autonomous Systems, 74, 97–107.

Mendoza, J. P., Veloso, M. M., & Simmons, R. G. (2015). Plan execution monitoring through detection of unmet expectations about action outcomes. In ICRA (pp. 3247–3252). IEEE.

Mnih, V., Kavukcuoglu, K., Silver, D., Graves, A., Antonoglou, I., Wierstra, D., et al. (2013). Playing Atari with deep reinforcement learning. arXiv:1312.5602

Montgomery, W., Ajay, A., Finn, C., Abbeel, P., & Levine, S. (2017). Reset-free guided policy search: Efficient deep reinforcement learning with stochastic initial states. In ICRA (pp. 3373–3380). IEEE.

Moore, A. W., & Atkeson, C. G. (1993). Prioritized sweeping: Reinforcement learning with less data and less time. Machine Learning, 13(1), 103–130.

Nehaniv, C. L., & Dautenhahn, K. (2002). Imitation in animals and artifacts. In K. Dautenhahn & C. L. Nehaniv (Eds.), (pp. 41–61). Cambridge, MA: MIT Press.

Ng, A. Y., Coates, A., Diel, M., Ganapathi, V., Schulte, J., Tse, B., et al. (2006). Autonomous inverted helicopter flight via reinforcement learning. In Experimental robotics IX (pp. 363–372). Springer.

Paden, B., Čáp, M., Yong, S. Z., Yershov, D. S., & Frazzoli, E. (2016). A survey of motion planning and control techniques for self-driving urban vehicles. IEEE Transactions on Intelligent Vehicles, 1(1), 33–55.

Ratliff, N. D., Silver, D., & Bagnell, J. A. (2009). Learning to search: Functional gradient techniques for imitation learning. Autonomous Robots, 27(1), 25–53.

Rong, N., Halpern, J. Y., & Saxena, A. (2016). MDPs with unawareness in robotics. In UAI (pp. 627–636). AUAI Press.

Russell, S., & Norvig, P. (2009). Artificial intelligence: A modern approach. Upper Saddle River: Prentice Hall.

Rusu, R. B., Blodow, N., & Beetz, M. (2009). Fast point feature histograms (FPFH) for 3D registration. In ICRA (pp. 3212–3217). IEEE.

Saha, O., & Dasgupta, P. (2017a). Experience learning from basic patterns for efficient robot navigation in indoor environments. Journal of Intelligent and Robotic Systems, 92, 545–564.

Saha, O., & Dasgupta, P. (2017b). Improved reward estimation for efficient robot navigation using inverse reinforcement learning. In AHS (pp. 245–252). IEEE.

Saha, O., & Dasgupta, P. (2017c). Real-time robot path planning around complex obstacle patterns through learning and transferring options. In 2017 IEEE international conference on autonomous robot systems and competitions, ICARSC 2017, Coimbra, Portugal, April 26–28, 2017 (pp. 278–283).

Silver, D., Bagnell, J. A., & Stentz, A. (2010). Learning from demonstration for autonomous navigation in complex unstructured terrain. The International Journal of Robotics Research, 29(12), 1565–1592.

Silver, D., Lever, G., Heess, N., Degris, T., Wierstra, D., & Riedmiller, M. A. (2014). Deterministic policy gradient algorithms. In ICML (Vol. 32, pp. 387–395). JMLR Org.

Simsek, Ö., Wolfe, A. P., & Barto, A. G. (2005). Identifying useful subgoals in reinforcement learning by local graph partitioning. In ICML (Vol. 119, pp. 816–823). ACM.

Sofman, B., Lin, E., Bagnell, J. A., Cole, J., Vandapel, N., & Stentz, A. (2006). Improving robot navigation through self-supervised online learning. Journal of Field Robotics, 23(11–12), 1059–1075.

Sutton, R. S., Precup, D., & Singh, S. P. (1999). Between MDPs and semi-MDPs: A framework for temporal abstraction in reinforcement learning. Artificial Intelligence, 112(1–2), 181–211.

Takeuchi, J., & Tsujino, H. (2010). One-shot supervised reinforcement learning for multi-targeted tasks: RL-SAS. In ICANN (2) (Vol. 6353, pp. 204–209). Springer.

Taylor, M. E., & Stone, P. (2007). Cross-domain transfer for reinforcement learning. In ICML (Vol. 227, pp. 879–886). ACM.

Taylor, M. E., & Stone, P. (2009). Transfer learning for reinforcement learning domains: A survey. Journal of Machine Learning Research, 10, 1633–1685.

Torrey, L., Walker, T., Shavlik, J., & Maclin, R. (2005). Using advice to transfer knowledge acquired in one reinforcement learning task to another. In ECML (pp. 412–424).

Wulfmeier, M., Rao, D., Wang, D. Z., Ondruska, P., & Posner, I. (2017). Large-scale cost function learning for path planning using deep inverse reinforcement learning. The International Journal of Robotics Research, 36(10), 1073–1087.

Zhu, Y., Mottaghi, R., Kolve, E., Lim, J. J., Gupta, A., Fei-Fei, L., et al. (2017). Target-driven visual navigation in indoor scenes using deep reinforcement learning. In ICRA (pp. 3357–3364). IEEE.

Cite this article

Saha, O., Dasgupta, P. & Woosley, B. Real-time robot path planning from simple to complex obstacle patterns via transfer learning of options. Auton Robot 43, 2071–2093 (2019). https://doi.org/10.1007/s10514-019-09852-5

DOI: https://doi.org/10.1007/s10514-019-09852-5