Abstract

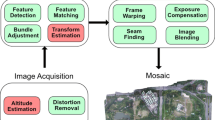

Estimating the geolocation of aerial images is important for tasks such as map creation and autonomous navigation of unmanned aerial vehicles (UAVs). We propose a novel geolocalization method for UAVs that uses only aerial images and a reference road map. The road maps detected in the aerial images are first merged into a single mosaic image using our newly designed aerial image mosaicking algorithm, in which the relative homography transformations between road images are initially estimated by keypoint tracking in the RGB aerial images and then refined by registering the detected roads. Geolocalization of the aerial mosaic image is then cast as the problem of registering the roads observed in the aerial images to the reference road map under a homography transformation. The registration problem is solved with our fast search algorithm based on a novel projective-invariant feature, which consists of two road intersections augmented with their tangents. Experiments demonstrate that the proposed method can localize an aerial image sequence over an area larger than 1000 km\(^2\) within a few seconds.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 61673017).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Appendices

7 Appendix A

We derive the homography matrix that transforms points from the world coordinate frame to the image coordinate frame.

Let \({\mathbf {p}}_{w}=\left( x_{w}, y_{w}, z_{w}\right) ^{T}\) be a point expressed in the world coordinate frame, \({\mathbf {K}}\) be the internal camera parameters, and \({\mathbf {R}}\) and \({\mathbf {t}}\) be the rotation matrix and translation vector of the camera, respectively. Then the pixel point \({\mathbf {p}}_{c}\) corresponding to \({\mathbf {p}}_{w}\), written in homogeneous coordinates and therefore defined up to scale, can be computed as:

\[ {\mathbf {p}}_{c}={\mathbf {K}}\left( {\mathbf {R}}{\mathbf {p}}_{w}+{\mathbf {t}}\right) \tag{20} \]

When the world coordinate frame is set as in Fig. 6, points on the ground satisfy \(z_{w}=0\).

The rotation matrix \({\mathbf {R}}\) can be expressed in the form \({\mathbf {R}}=\left[ \begin{array}{ccc}{{\mathbf {r}}_{1}^{T}}&{{\mathbf {r}}_{2}^{T}}&{{\mathbf {r}}_{3}^{T}}\end{array}\right] \), where \({\mathbf {r}}_{i}^{T}, i=1,2,3\), is the \(i\)th column of \({\mathbf {R}}\), so Eq. 20 becomes

\[ {\mathbf {p}}_{c}={\mathbf {K}}\left( x_{w}{\mathbf {r}}_{1}^{T}+y_{w}{\mathbf {r}}_{2}^{T}+{\mathbf {t}}\right) ={\mathbf {K}}\left[ \begin{array}{ccc}{{\mathbf {r}}_{1}^{T}}&{{\mathbf {r}}_{2}^{T}}&{{\mathbf {t}}}\end{array}\right] \left( x_{w}, y_{w}, 1\right) ^{T} \]

Defining \({\mathbf {H}}={\mathbf {K}}\left[ \begin{array}{ccc}{{\mathbf {r}}_{1}^{T}}&{{\mathbf {r}}_{2}^{T}}&{{\mathbf {t}}}\end{array}\right] \) yields

\[ {\mathbf {p}}_{c}={\mathbf {H}}\left( x_{w}, y_{w}, 1\right) ^{T} \]

Here \(\left( x_{w}, y_{w}, 1\right) ^{T}\) can be treated as the homogeneous coordinates of a point on the ground expressed in the world coordinate frame. The homography matrix that transforms a point on the ground to the image plane can therefore be computed as:

\[ {\mathbf {H}}={\mathbf {K}}\left[ \begin{array}{ccc}{{\mathbf {r}}_{1}^{T}}&{{\mathbf {r}}_{2}^{T}}&{{\mathbf {t}}}\end{array}\right] \]
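The derivation above can be checked numerically. The following is a minimal sketch, not the authors' implementation: it assumes a hypothetical pinhole camera (the values of `K`, `R`, and `t` are made up for illustration), and the helper names `ground_homography`, `project_full`, and `project_ground` are illustrative only. It builds \({\mathbf {H}}\) from \({\mathbf {K}}\), the first two columns of \({\mathbf {R}}\), and \({\mathbf {t}}\), and compares it against the full projection for points with \(z_{w}=0\).

```python
import math


def matvec(a, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a_ij * v_j for a_ij, v_j in zip(row, v)) for row in a]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def rotation_x(angle):
    """Rotation about the x-axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def ground_homography(K, R, t):
    """H = K [r1 r2 t], where r1 and r2 are the first two columns of R."""
    r1_r2_t = [[R[i][0], R[i][1], t[i]] for i in range(3)]
    return matmul(K, r1_r2_t)


def project_full(K, R, t, p_w):
    """Full pinhole projection K (R p_w + t), dehomogenized to pixels."""
    cam = [c + t_i for c, t_i in zip(matvec(R, p_w), t)]
    u, v, w = matvec(K, cam)
    return (u / w, v / w)


def project_ground(H, x_w, y_w):
    """Project a ground point (z_w = 0) with the homography H."""
    u, v, w = matvec(H, [x_w, y_w, 1.0])
    return (u / w, v / w)


# Hypothetical camera: values chosen only for illustration.
K = [[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]]
R = rotation_x(math.pi + 0.1)   # roughly downward-looking, slightly tilted
t = [0.0, 0.0, 100.0]
H = ground_homography(K, R, t)

for x_w, y_w in [(0.0, 0.0), (10.0, -5.0), (-42.5, 17.0)]:
    print(project_full(K, R, t, [x_w, y_w, 0.0]), project_ground(H, x_w, y_w))
```

For every ground point the two printed pixel coordinates should agree up to floating-point error, which is exactly the statement that the third column of \({\mathbf {R}}\) drops out when \(z_{w}=0\).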

8 Appendix B

Here we provide details of the areas in which we performed geolocalization on synthetic aerial image sequences. The distribution of the 20 areas is shown in Fig. 14, and the total surface area, the total road length, and the number of road intersections are presented in Table 1.

About this article

Cite this article

Li, Y., He, H., Yang, D. et al. Geolocalization with aerial image sequence for UAVs. Auton Robot 44, 1199–1215 (2020). https://doi.org/10.1007/s10514-020-09927-8

DOI: https://doi.org/10.1007/s10514-020-09927-8