Abstract

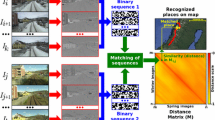

In the field of visual Place Recognition (vPR), sequence-based techniques have received close attention because they combine visual information from multiple measurements to improve recognition. This paper addresses the task of identifying sequence boundaries that correspond to physical scene limits along the robot’s trajectory and can be re-encountered during an autonomous mission. In contrast to other vPR techniques, which use a single predefined length for all image sequences, our approach segments the trajectory dynamically, so that visual information is grouped consistently across different visits to the same area. To achieve this, we compute similarity measurements between consecutively acquired frames to incrementally build a similarity signal. Local extrema of this signal are then detected in the scale-space domain, regardless of the velocity at which the camera travels and perceives the world. To account for detection inconsistencies, we explore asynchronous sequence-based techniques and a novel weighted temporal consistency scheme that further improves performance. Our dynamically computed sequence segmentation is evaluated on two different vPR methods, improving the accuracy of both systems.
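The first step described above, turning a stream of frames into a similarity signal one measurement at a time, can be sketched as follows. This is a minimal illustration, not the paper's implementation: the per-frame global descriptor, the cosine similarity, and its mapping to [0, 1] are assumptions chosen only to keep values in the normalized range used throughout the paper. The class name `SimilaritySignal` is hypothetical.

```python
import numpy as np

class SimilaritySignal:
    """Incrementally built signal of similarities between consecutive frames.

    Assumptions (not from the paper): each frame is summarized by a global
    descriptor vector, similarity is the cosine of consecutive descriptors,
    and it is mapped from [-1, 1] to [0, 1] via (cos + 1) / 2.
    """

    def __init__(self):
        self._prev = None   # unit-length descriptor of the previous frame
        self.values = []    # values[t] compares frames t and t + 1

    def push(self, descriptor) -> None:
        d = np.asarray(descriptor, dtype=float)
        d = d / max(float(np.linalg.norm(d)), 1e-12)  # unit length, guarding against zero vectors
        if self._prev is not None:
            cos = float(np.dot(self._prev, d))
            # Map to [0, 1] and clip tiny floating-point overshoots.
            self.values.append(min(max((cos + 1.0) / 2.0, 0.0), 1.0))
        self._prev = d

signal = SimilaritySignal()
for frame_descriptor in ([1, 0], [1, 0], [0, 1], [-1, 0], [1, 0]):
    signal.push(frame_descriptor)
# signal.values == [1.0, 0.5, 0.5, 0.0]
```

Each new frame costs a single dot product, so the signal can grow online as the robot moves, and a boundary detector can then operate on `signal.values`.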

Notes

Throughout this paper, all similarity measurements are normalized to the range \(\left[ 0, 1\right] \) for consistency.

The notation \(\lceil y \rfloor \) denotes the integer closest to y (rounding operation). Similarly, \(\lfloor y\rfloor \) and \(\lceil y\rceil \) denote the largest integer not greater than y and the smallest integer not less than y (flooring and ceiling operations), respectively.
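To make these definitions concrete, the following Python sketch contrasts the three operators. The helper name `round_nearest` is hypothetical, and the tie rule it uses (halves rounded up) is an assumption, since the notation \(\lceil y \rfloor \) does not fix one.

```python
import math

def round_nearest(y: float) -> int:
    """Integer closest to y, i.e. the rounding operation written as ⌈y⌋.

    Assumption: ties (y = k + 0.5) are rounded up. Python's built-in round()
    would instead send ties to the nearest even integer (round(2.5) == 2).
    """
    return math.floor(y + 0.5)

# Flooring and ceiling come straight from the standard library:
# math.floor(y) is the largest integer <= y, math.ceil(y) the smallest integer >= y.
```

For y = -2.3, `math.floor` gives -3, `math.ceil` gives -2, and `round_nearest` gives -2; negative inputs are where the three operations are most easily confused.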

The size of each kernel is set to \(s{=}2\lceil 3 \sigma \rceil {+}1\), so that the kernel spans \(\lceil 3\sigma \rceil \) samples on each side of its center, which sufficiently describes the filter’s structure, and has an odd length with a single middle value.
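The kernel-size rule can be illustrated with a short Python sketch that builds a scale-space of a 1-D similarity signal and lists its local extrema at each scale. It assumes a sampled Gaussian kernel (a discrete scale-space kernel, as in Lindeberg's formulation, may be used instead), edge-replicated borders, and plain neighbor comparisons for extrema. The function names are hypothetical, and the authors' detector, including how extrema are selected across scales and how inconsistencies are handled, is not reproduced here.

```python
import math
import numpy as np

def gaussian_kernel(sigma: float) -> np.ndarray:
    """Sampled Gaussian of odd length s = 2*ceil(3*sigma) + 1, normalized to sum to 1."""
    half = math.ceil(3.0 * sigma)                # samples on each side of the center
    x = np.arange(-half, half + 1, dtype=float)  # length 2*half + 1, single middle sample at x = 0
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def smooth(signal, sigma: float) -> np.ndarray:
    """Convolve a 1-D signal with the kernel; edge replication keeps the length unchanged."""
    k = gaussian_kernel(sigma)
    half = len(k) // 2
    padded = np.pad(np.asarray(signal, dtype=float), half, mode="edge")
    return np.convolve(padded, k, mode="valid")

def local_extrema(values):
    """Indices of strict local minima and maxima (endpoints excluded)."""
    v = np.asarray(values)
    inner = range(1, len(v) - 1)
    minima = [i for i in inner if v[i] < v[i - 1] and v[i] < v[i + 1]]
    maxima = [i for i in inner if v[i] > v[i - 1] and v[i] > v[i + 1]]
    return minima, maxima

def scale_space_extrema(signal, sigmas):
    """Map each scale sigma to the (minima, maxima) of the signal smoothed at that scale."""
    return {s: local_extrema(smooth(signal, s)) for s in sigmas}

# A toy similarity signal with one sharp drop between otherwise similar frames.
similarity = [0.9] * 10 + [0.2] + [0.9] * 10
extrema = scale_space_extrema(similarity, sigmas=[1.0, 2.0, 4.0])
```

Because the output of `smooth` has the same length as its input, extrema found at different scales index the same frames, which makes it straightforward to compare detections across σ.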

SeqSLAM does not include the normalization factor \(d_s\) in Eq. 7; it is included here to ensure a common value range for D across sequences of different sizes.
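The effect of \(d_s\) can be seen in a small Python sketch. Eq. 7 itself is not reproduced in this excerpt, so the sketch assumes, as in SeqSLAM's sequence search, that D accumulates \(d_s\) per-frame differences along a constant-velocity line through a difference matrix; dividing by \(d_s\) turns that sum into a mean, so sequences of different lengths share the same value range. The function and parameter names are hypothetical.

```python
import math
import numpy as np

def sequence_difference(diff_matrix: np.ndarray, db_start: int, query_start: int,
                        d_s: int, velocity: float = 1.0, normalize: bool = True) -> float:
    """Accumulate differences along a straight trajectory of d_s frames.

    diff_matrix[i, j] is the (assumed [0, 1]-valued) difference between
    database frame i and query frame j. Query frame query_start + t is paired
    with database frame db_start + round(velocity * t), for t = 0 .. d_s - 1.
    """
    total = 0.0
    for t in range(d_s):
        i = db_start + math.floor(velocity * t + 0.5)  # nearest database index on the line
        j = query_start + t
        total += float(diff_matrix[i, j])
    # Without the 1/d_s factor, longer sequences would receive larger scores.
    return total / d_s if normalize else total

M = np.full((20, 20), 0.5)
sequence_difference(M, 0, 0, d_s=5, normalize=False)   # 2.5
sequence_difference(M, 0, 0, d_s=10, normalize=False)  # 5.0
sequence_difference(M, 0, 0, d_s=10)                   # 0.5
```

With uniform per-frame differences, the unnormalized score doubles when the sequence length doubles, whereas the normalized score stays at the per-frame value.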

We used an open-source implementation of SeqSLAM, available at http://openslam.org/openseqslam.html.

References

Angeli, A., Filliat, D., Doncieux, S., & Meyer, J. A. (2008). Fast and incremental method for loop-closure detection using bags of visual words. IEEE Transactions on Robotics, 24(5), 1027–1037.

Ansari, A., & Mohammed, M. H. (2015). Content based video retrieval systems: Methods, techniques, trends and challenges. International Journal of Computer Applications, 112(7).

Arroyo, R., Alcantarilla, P. F., Bergasa, L. M., & Romera, E. (2015). Towards life-long visual localization using an efficient matching of binary sequences from images. In Proceedings of the IEEE international conference on robotics and automation (pp. 6328–6335).

Arroyo, R., Alcantarilla, P. F., Bergasa, L. M., & Romera, E. (2016). Fusion and binarization of CNN features for robust topological localization across seasons. In Proceedings of the IEEE/RSJ international conference on intelligent robots and systems (pp. 4656–4663).

Arroyo, R., Alcantarilla, P. F., Bergasa, L. M., Yebes, J. J., & Bronte, S. (2014). Fast and effective visual place recognition using binary codes and disparity information. In IEEE/RSJ international conference on intelligent robots and systems (pp. 3089–3094).

Bai, D., Wang, C., Zhang, B., Yi, X., & Yang, X. (2018). Sequence searching with CNN features for robust and fast visual place recognition. Computers & Graphics, 70, 270–280.

Bampis, L., Amanatiadis, A., & Gasteratos, A. (2016). Encoding the description of image sequences: A two-layered pipeline for loop closure detection. In Proceedings of the IEEE/RSJ international conference on intelligent robots and systems (pp. 4530–4536).

Bampis, L., Amanatiadis, A., & Gasteratos, A. (2017). High order visual words for structure-aware and viewpoint-invariant loop closure detection. In Proceedings of the IEEE/RSJ international conference on intelligent robots and systems (pp. 4898–4903).

Bansal, A., Russell, B., & Gupta, A. (2016). Marr revisited: 2D-3D alignment via surface normal prediction. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 5965–5974).

Bay, H., Tuytelaars, T., & Van Gool, L. (2006). SURF: Speeded up robust features. In Proceedings of the European conference on computer vision (pp. 404–417).

Blanco, J. L., Moreno, F. A., & Gonzalez, J. (2009). A collection of outdoor robotic datasets with centimeter-accuracy ground truth. Autonomous Robots, 27(4), 327–351.

Brown, M., & Lowe, D. G. (2002). Invariant features from interest point groups. In British machine vision conference (Vol. 4).

Burguera, A., Bonin-Font, F., & Oliver, G. (2015). Trajectory-based visual localization in underwater surveying missions. Sensors, 15(1), 1708–1735.

Calonder, M., Lepetit, V., Strecha, C., & Fua, P. (2010). BRIEF: Binary robust independent elementary features. In Proceedings of the European conference on computer vision (pp. 778–792).

Carrasco, P. L. N., Bonin-Font, F., & Oliver-Codina, G. (2016). Global image signature for visual loop-closure detection. Autonomous Robots, 40(8), 1403–1417.

Cieslewski, T., & Scaramuzza, D. (2017). Efficient decentralized visual place recognition using a distributed inverted index. IEEE Robotics and Automation Letters, 2(2), 640–647.

Cummins, M., & Newman, P. (2008). FAB-MAP: Probabilistic localization and mapping in the space of appearance. International Journal of Robotics Research, 27(6), 647–665.

Cummins, M., & Newman, P. (2011). Appearance-only SLAM at large scale with FAB-MAP 2.0. International Journal of Robotics Research, 30(9), 1100–1123.

Eustice, R. M., Pizarro, O., & Singh, H. (2008). Visually augmented navigation for autonomous underwater vehicles. IEEE Journal of Oceanic Engineering, 33(2), 103–122.

Fei, X., Tsotsos, K., & Soatto, S. (2016). A simple hierarchical pooling data structure for loop closure. In European conference on computer vision (pp. 321–337).

Gálvez-López, D., & Tardós, J. D. (2012). Bags of binary words for fast place recognition in image sequences. IEEE Transactions on Robotics, 28(5), 1188–1197.

Garcia-Fidalgo, E., & Ortiz, A. (2015). Vision-based topological mapping and localization methods: A survey. Robotics and Autonomous Systems, 64, 1–20.

Garg, S., & Milford, M. (2017). Straightening sequence-search for appearance-invariant place recognition using robust motion estimation.

Gehrig, M., Stumm, E., Hinzmann, T., & Siegwart, R. (2017). Visual place recognition with probabilistic voting. In Proceedings of the IEEE international conference on robotics and automation (pp. 3192–3199).

Geiger, A., Lenz, P., Stiller, C., & Urtasun, R. (2013). Vision meets robotics: The KITTI dataset. International Journal of Robotics Research, 32(11), 1231–1237.

Han, Z., Mo, R., Yang, H., & Hao, L. (2018). CAD assembly model retrieval based on multi-source semantics information and weighted bipartite graph. Computers in Industry, 96, 54–65.

Hess, R. (2010). An open-source SIFT library. In Proceedings of the ACM international conference on multimedia (pp. 1493–1496).

Ho, K. L., & Newman, P. (2007). Detecting loop closure with scene sequences. International Journal of Computer Vision, 74(3), 261–286.

Huang, P., Hilton, A., & Starck, J. (2010). Shape similarity for 3D video sequences of people. International Journal of Computer Vision, 89(2–3), 362–381.

Kazmi, S. A. M., & Mertsching, B. (2016). Simultaneous place learning and recognition for real-time appearance-based mapping. In Proceedings of the IEEE/RSJ international conference on intelligent robots and systems (pp. 4898–4903).

Khan, S., & Wollherr, D. (2015). IBuILD: Incremental bag of binary words for appearance based loop closure detection. In Proceedings of the IEEE international conference on robotics and automation (pp. 5441–5447).

Konolige, K., Bowman, J., Chen, J., Mihelich, P., Calonder, M., Lepetit, V., & Fua, P. (2010). View-based maps. International Journal of Robotics Research, 29(8), 941–957.

Latif, Y., Cadena, C., & Neira, J. (2013). Robust loop closing over time for pose graph SLAM. International Journal of Robotics Research, 32(14), 1611–1626.

Lindeberg, T. (1990). Scale-space for discrete signals. IEEE Transactions on Pattern Analysis and Machine Intelligence, 12(3), 234–254.

Lindeberg, T. (1993). Detecting salient blob-like image structures and their scales with a scale-space primal sketch: A method for focus-of-attention. International Journal of Computer Vision, 11(3), 283–318.

Lindeberg, T. (1994). Scale-space theory: A basic tool for analyzing structures at different scales. Journal of Applied Statistics, 21(1–2), 225–270.

Lowe, D. G. (2004). Distinctive image features from scale-invariant keypoints. International Journal of Computer Vision, 60(2), 91–110.

Lowry, S., Sünderhauf, N., Newman, P., Leonard, J. J., Cox, D., Corke, P., & Milford, M. J. (2015). Visual place recognition: A survey. IEEE Transactions on Robotics, 32(1), 1–19.

Lynen, S., Bosse, M., Furgale, P., & Siegwart, R. (2014). Placeless place-recognition. In Proceedings of the IEEE international conference on 3D vision (Vol. 1, pp. 303–310).

MacTavish, K., & Barfoot, T. D. (2014). Towards hierarchical place recognition for long-term autonomy. In Proceedings of the IEEE international conference on robotics and automation, Visual place recognition in changing environments workshop (pp. 1–6).

Mangelson, J. G., Dominic, D., Eustice, R. M., & Vasudevan, R. (2018). Pairwise consistent measurement set maximization for robust multi-robot map merging. In Proceedings of the IEEE international conference on robotics and automation (pp. 2916–2923).

McManus, C., Upcroft, B., & Newman, P. (2015). Learning place-dependant features for long-term vision-based localisation. Autonomous Robots, 39(3), 363–387.

Mei, C., Sibley, G., Cummins, M., Newman, P. M., & Reid, I. D. (2009). A constant-time efficient stereo SLAM system. In Proceedings of the British machine vision conference (pp. 1–11).

Milford, M. J., & Wyeth, G. F. (2012). SeqSLAM: Visual route-based navigation for sunny summer days and stormy winter nights. In Proceedings of the IEEE international conference on robotics and automation (pp. 1643–1649).

Mur-Artal, R., & Tardós, J. D. (2014). Fast relocalisation and loop closing in keyframe-based SLAM. In Proceedings of the IEEE international conference on robotics and automation (pp. 846–853).

Mur-Artal, R., Montiel, J. M. M., & Tardós, J. D. (2015). ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Transactions on Robotics, 31(5), 1147–1163.

Newman, P., Cole, D., & Ho, K. (2006). Outdoor SLAM using visual appearance and laser ranging. In Proceedings of the IEEE international conference on robotics and automation (pp. 1180–1187).

Pepperell, E., Corke, P., & Milford, M. (2013). Towards persistent visual navigation using SMART. In Proceedings of the Australasian conference on robotics and automation.

RAWSEEDS. (2007–2009). Robotics advancement through web-publishing of sensorial and elaborated extensive data sets (Project FP6-IST-045144). http://www.rawseeds.org/rs/datasets.

Rublee, E., Rabaud, V., Konolige, K., & Bradski, G. (2011). ORB: An efficient alternative to SIFT or SURF. In Proceedings of the IEEE international conference on computer vision (pp. 2564–2571).

Shou, Z., Pan, J., Chan, J., Miyazawa, K., Mansour, H., Vetro, A., Giro-i Nieto, X., & Chang, S. F. (2018). Online detection of action start in untrimmed, streaming videos. In Proceedings of the European conference on computer vision (pp. 534–551).

Sivic, J., & Zisserman, A. (2003). Video Google: A text retrieval approach to object matching in videos. In Proceedings of the IEEE international conference on computer vision (pp. 1470–1477).

Sizikova, E., Singh, V. K., Georgescu, B., Halber, M., Ma, K., & Chen, T. (2016). Enhancing place recognition using joint intensity-depth analysis and synthetic data. In Proceedings of the European conference on computer vision workshop (pp. 901–908).

Smith, M., Baldwin, I., Churchill, W., Paul, R., & Newman, P. (2009). The new college vision and laser data set. International Journal of Robotics Research, 28(5), 595–599.

Strasdat, H., Montiel, J., & Davison, A. J. (2010). Scale drift-aware large scale monocular SLAM. In Proceedings of the robotics: science and systems (p. 5).

Stumm, E., Mei, C., Lacroix, S., & Chli, M. (2015). Location graphs for visual place recognition. In Proceedings of the IEEE international conference on robotics and automation (pp. 5475–5480).

Sünderhauf, N., Neubert, P., & Protzel, P. (2013). Are we there yet? Challenging SeqSLAM on a 3000 Km journey across all four seasons. In Proceedings of the IEEE international conference on robotics and automation, workshop on long-term autonomy.

Sünderhauf, N., Shirazi, S., Dayoub, F., Upcroft, B., & Milford, M. J. (2015a). On the performance of ConvNet features for place recognition. In Proceedings of the IEEE/RSJ international conference on intelligent robots and systems (pp. 4297–4304).

Sünderhauf, N., Shirazi, S., Jacobson, A., Dayoub, F., Pepperell, E., Upcroft, B., & Milford, M. (2015b). Place recognition with convnet landmarks: Viewpoint-robust, condition-robust, training-free. In Proceedings of the robotics: science and systems.

Vysotska, O., Naseer, T., Spinello, L., Burgard, W., & Stachniss, C. (2015). Efficient and effective matching of image sequences under substantial appearance changes exploiting GPS priors. In Proceedings of the IEEE international conference on robotics and automation (pp. 2774–2779).

Warren, M., McKinnon, D., He, H., & Upcroft, B. (2010). Unaided stereo vision based pose estimation. In Proceedings of the Australasian conference on robotics and automation.

Witkin, A. (1984). Scale-space filtering: A new approach to multi-scale description. In Proceedings of the IEEE international conference on acoustics, speech, and signal processing (Vol. 9, pp. 150–153).

Wolf, J., Burgard, W., & Burkhardt, H. (2005). Robust vision-based localization by combining an image-retrieval system with Monte Carlo localization. IEEE Transactions on Robotics, 21(2), 208–216.

Yang, X., & Cheng, K. T. T. (2014). Local difference binary for ultrafast and distinctive feature description. IEEE Transactions on Pattern Analysis and Machine Intelligence, 36(1), 188–194.

Zhang, H., Li, B., & Yang, D. (2010). Keyframe detection for appearance-based visual SLAM. In Proceedings of the IEEE/RSJ international conference on intelligent robots and systems (pp. 2071–2076).

Zolfaghari, M., Singh, K., & Brox, T. (2018). ECO: Efficient convolutional network for online video understanding. In Proceedings of the European conference on computer vision (pp. 695–712).

Acknowledgements

This research is co-financed by Greece and the European Union (European Social Fund-ESF) through the Operational Programme “Human Resources Development, Education and Lifelong Learning” in the context of the project “Reinforcement of Postdoctoral Researchers—2nd Cycle” (MIS-5033021), implemented by the State Scholarships Foundation (IKY).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Bampis, L., Gasteratos, A. Sequence-based visual place recognition: a scale-space approach for boundary detection. Auton Robot 45, 505–518 (2021). https://doi.org/10.1007/s10514-021-09984-7

DOI: https://doi.org/10.1007/s10514-021-09984-7