Abstract

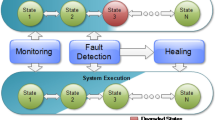

Selecting the optimum component implementation variant is sometimes difficult since it depends on the component’s usage context at runtime, e.g., on the concurrency level of the application using the component, call sequences to the component, actual parameters, the available hardware, etc. A conservative selection of implementation variants leads to suboptimal performance, e.g., if a component is conservatively implemented as thread-safe while during the actual execution it is only accessed from a single thread. In general, an optimal component implementation variant cannot be determined before runtime, and a single optimal variant might not even exist since the usage context can change significantly during execution. We introduce self-adaptive concurrent components that automatically and dynamically change not only their internal representation and operation implementation variants but also their synchronization mechanism based on a possibly changing usage context. The most suitable variant is selected at runtime rather than at compile time. The decision is revised if the usage context changes, e.g., if a single-threaded context changes to a highly contended concurrent context. As a consequence, programmers can focus on the semantics of their systems and, e.g., conservatively use thread-safe components to ensure consistency of their data, while deferring implementation and optimization decisions to context-aware runtime optimizations. We demonstrate the effect on performance with self-adaptive concurrent queues, sets, and ordered sets. In all three cases, experimental evaluation shows close to optimal performance regardless of actual contention.


Notes

The notions “context” and “context-aware” are overloaded: “context” often refers to the CPU state such as registers and stack variables or the calling context. Context-aware composition and self-adaptive components use a generalized notion of a calling context and the present paper focuses on the contention context, i.e., the number of concurrent threads calling functions of a component.
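To make the contention context concrete, the following Java sketch counts how many threads are currently executing a component's operations and records the highest count observed. `ContentionSensor`, `ContentionDemo`, and their methods are hypothetical names chosen for illustration, not the sensor design used in this paper; note also that a single shared counter updated on every call is itself a point of contention.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical contention sensor: counts threads currently inside the
// component's operations and remembers the highest count observed.
final class ContentionSensor {
    private final AtomicInteger active = new AtomicInteger();
    private final AtomicInteger maxActive = new AtomicInteger();

    /** Call on entry to a component operation. */
    void enter() {
        int now = active.incrementAndGet();
        maxActive.accumulateAndGet(now, Math::max); // keep the maximum seen so far
    }

    /** Call on exit from the operation, ideally in a finally block. */
    void exit() {
        active.decrementAndGet();
    }

    int current() { return active.get(); }

    int peak() { return maxActive.get(); }
}

final class ContentionDemo {
    // Starts `threads` threads that are all inside the sensed "operation" at
    // the same time (held there by a barrier) and returns the observed peak.
    static int peakWith(int threads) {
        ContentionSensor sensor = new ContentionSensor();
        CyclicBarrier barrier = new CyclicBarrier(threads);
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                sensor.enter();
                try {
                    barrier.await(); // wait until every thread has entered
                } catch (InterruptedException | BrokenBarrierException e) {
                    Thread.currentThread().interrupt(); // not expected in this demo
                } finally {
                    sensor.exit();
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return sensor.peak();
    }
}
```

Because every thread increments the counter before waiting at the barrier, and none decrements it until all have arrived, `ContentionDemo.peakWith(4)` observes a contention level of exactly four.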


Acknowledgements

We would also like to thank the anonymous reviewers of ASE’13 and ASE’14 for their insightful feedback that helped to improve this paper.


Additional information

This research was supported by the Swedish Research Council under Grant 2011-6185.

Appendix A: Lock-free invalidation correctness proof


A.I Invalidation termination

To prove termination of invalidation, we require three lemmata:

Lemma 1

Every node visited by the tracing is unique.

Proof

Reference cycles are broken by not pushing black referents on the stack again. That is, visited (black) nodes are not visited again. Multiple pointers to the same referent are not followed more than once, because gray referents are not pushed on the stack again. That is, each gray node occurs at most once on the stack. \(\square \)
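The two rules of this proof can be illustrated with a sequential Java sketch of stack-based tricolor tracing: a referent is pushed only while it is still white, so black referents (closing a cycle) and gray referents (already on the stack via another pointer) are never pushed again. `TraceNode` and `Tracer` are hypothetical names; the sketch omits concurrency and the actual invalidation of each node's references, and it simply colors the root gray before visiting it instead of modeling root invalidation separately.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical node of a linked data structure, colored for tracing.
final class TraceNode {
    enum Color { WHITE, GRAY, BLACK }

    final List<TraceNode> successors = new ArrayList<>();
    Color color = Color.WHITE;
    int visits = 0; // instrumentation: how often the tracer visited this node
}

final class Tracer {
    // Sequential stack-based tracing from `root`. A referent is pushed only
    // while it is WHITE: BLACK referents (cycles) and GRAY referents (already
    // on the stack via another pointer) are skipped. Returns the visit count.
    static int trace(TraceNode root) {
        Deque<TraceNode> stack = new ArrayDeque<>();
        root.color = TraceNode.Color.GRAY;
        stack.push(root);
        int visited = 0;
        while (!stack.isEmpty()) {
            TraceNode node = stack.pop();
            node.visits++;
            visited++;
            for (TraceNode s : node.successors) {
                if (s.color == TraceNode.Color.WHITE) {
                    s.color = TraceNode.Color.GRAY; // shade before pushing
                    stack.push(s);
                }
            }
            // An invalidating tracer would invalidate node's references here.
            node.color = TraceNode.Color.BLACK;
        }
        return visited;
    }
}
```

For a graph with a cycle back to the root and a node reachable via two paths, each reachable node is visited exactly once, and nodes not reachable from the root stay white.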

Lemma 2

There is a finite set of nodes to be visited.

Proof

At the point when the root node has been successfully invalidated, referred to as root invalidation time, there may be at most \(t\) concurrently executing operations that mutate the shape of the data structure, where \(t\) is the number of threads operating on the data structure. At root invalidation time, let \(N\) be the snapshot of reachable nodes, i.e., the nodes with a path from the root. Let \(L\) be the set of nodes created by the \(t\) potentially concurrently executing threads. The nodes in \(L\) may be committed by creating edges from gray and white nodes that the concurrent invalidation has not reached yet.

All operations started after root invalidation time will always fail, because all atomic references linking the data structure together are completely encapsulated in the lock-free component variant. Therefore, the only way of performing an operation is to go through the root node and its atomic references, which have been invalidated. Hence, only the finitely many nodes in \(L\) created by latent operations may be added after root invalidation time.

During concurrent tracing, the latent operations that had already started at root invalidation time could either remove nodes in \(N\) that have white or gray predecessors, so that they are no longer transitively reachable, or add nodes from \(L\) to gray or white nodes in the data structure, so that they become reachable. This is a race between the latent operations of the \(t\) mutating threads and the tracer. If the mutators are faster than the tracer, they can change a few edges, but this does not block the tracer. Conversely, if the tracer is faster at coloring nodes black, then the latent mutator operations get aborted.

Therefore, in the worst case, the set of nodes to be visited by the invalidation is at most \(N \cup L\), which is finite. Hence, the data structure cannot be extended indefinitely while invalidation is in progress. \(\square \)

Lemma 3

The tracing progresses for each visited node.

Proof

At root invalidation time, the set of black (visited) nodes \(V\) has size 1 and contains only the root node \(r\); the set of unvisited nodes \(U\) is, in the worst case, \((N \cup L) \setminus \{ r \}\). \(U\) contains white nodes and the gray nodes immediately reachable from \(V\). Each time a unique gray node from \(U\) is visited, it is moved from \(U\) to \(V\). Therefore, \(U\) monotonically decreases with each visit, until \(U\) is either empty or contains only unreachable (white) nodes. \(\square \)

Theorem 1

Invalidation of a component variant will terminate.

Proof

Since invalidation visits unique (cf. Lemma 1) and finitely many (cf. Lemma 2) nodes, and the tracing progresses through those nodes (cf. Lemma 3), the invalidation terminates when there are eventually no more nodes to visit. \(\square \)

A.II Invalidation completeness

To prove completeness of invalidation, we require three more lemmata:

Lemma 4

No edge from a black node may ever change.

Proof

References out of black nodes are invalidated and therefore immutable. Any CAS invocation, whether at a linearization point or not, would have to supply the INVALIDATED sentinel value as its expected value to succeed, which is impossible because the INVALIDATED sentinel value is privately encapsulated in the atomic reference class. \(\square \)

The invariant of Lemma 4 is strictly stronger than the strong tricoloring invariant (cf. Lemma 5), which alone would suffice to prove completeness. However, Lemma 4 makes the proof simpler and easier to follow.
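The argument of Lemma 4 relies on the sentinel being inaccessible to callers. A minimal Java sketch of such an invalidatable atomic reference, assuming it wraps `java.util.concurrent.atomic.AtomicReference`, could look as follows; `InvalidatableRef`, `InvalidatedException`, and the method names are hypothetical and need not match the paper's implementation. Because `INVALIDATED` is private, no caller can pass it as the expected value, so once a reference has been invalidated, no CAS on it can succeed.

```java
import java.util.concurrent.atomic.AtomicReference;

// Thrown when a thread reads or CASes an invalidated reference.
final class InvalidatedException extends RuntimeException {
    InvalidatedException() { super("reference invalidated"); }
}

// Hypothetical atomic reference with a one-way invalidation state.
final class InvalidatableRef<T> {
    // Private sentinel: never handed out, so no caller can expect it in a CAS.
    private static final Object INVALIDATED = new Object();
    private final AtomicReference<Object> ref;

    InvalidatableRef(T initial) { ref = new AtomicReference<>(initial); }

    @SuppressWarnings("unchecked")
    T get() {
        Object v = ref.get();
        if (v == INVALIDATED) throw new InvalidatedException();
        return (T) v;
    }

    // Returns true on success, false on an ordinary conflict (the caller may
    // retry), and throws once the reference has been invalidated.
    boolean compareAndSet(T expected, T update) {
        if (ref.compareAndSet(expected, update)) return true;
        if (ref.get() == INVALIDATED) throw new InvalidatedException();
        return false;
    }

    // Used by the tracer: atomically swaps in the sentinel and returns the
    // last valid value so that the referent can be traced and transferred.
    @SuppressWarnings("unchecked")
    T invalidate() {
        Object v = ref.getAndSet(INVALIDATED);
        if (v == INVALIDATED) throw new IllegalStateException("already invalidated");
        return (T) v;
    }
}
```

Invalidation is one-way in this sketch: once the sentinel is installed, no public method can replace it, which is what makes the edges of black nodes immutable.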

Lemma 5

[Strong tricoloring invariant] There is no edge from a black node to a white node.

Proof

A node is colored black (and moved to \(V\)) once all immediately reachable nodes in \(U\) have been shaded gray. At that point, its edges may refer only to gray or black nodes. Mutating threads cannot subsequently add new (white) referents to black nodes, as the references of black nodes are invalidated and therefore immutable (cf. Lemma 4). \(\square \)
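The strong tricoloring invariant can be checked mechanically. The following Java sketch, with the hypothetical names `ColoredNode` and `TricolorCheck`, traces a small graph sequentially and re-checks after every blackening step that no black node points to a white one. It is an illustration of the invariant only, assuming a single-threaded tracer without concurrent mutators.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical graph node with a tricolor mark.
final class ColoredNode {
    static final int WHITE = 0, GRAY = 1, BLACK = 2;
    final List<ColoredNode> out = new ArrayList<>();
    int color = WHITE;
}

final class TricolorCheck {
    // Strong tricolor invariant: no edge from a BLACK node to a WHITE node.
    static boolean holds(List<ColoredNode> nodes) {
        for (ColoredNode n : nodes) {
            if (n.color != ColoredNode.BLACK) continue;
            for (ColoredNode s : n.out) {
                if (s.color == ColoredNode.WHITE) return false;
            }
        }
        return true;
    }

    // Sequential trace from `root`; a node is blackened only after its white
    // successors were shaded gray. Returns true if the invariant held after
    // every blackening step.
    static boolean traceChecked(ColoredNode root, List<ColoredNode> all) {
        Deque<ColoredNode> stack = new ArrayDeque<>();
        root.color = ColoredNode.GRAY;
        stack.push(root);
        while (!stack.isEmpty()) {
            ColoredNode n = stack.pop();
            for (ColoredNode s : n.out) {
                if (s.color == ColoredNode.WHITE) {
                    s.color = ColoredNode.GRAY;
                    stack.push(s);
                }
            }
            n.color = ColoredNode.BLACK; // successors are now gray or black
            if (!holds(all)) return false;
        }
        return true;
    }
}
```

Conversely, appending a fresh white node to the `out` list of an already black node makes `holds` return false; that is exactly the kind of write that invalidating the references of black nodes rules out.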

Lemma 6

Every black node is part of the transitive closure \(C\) of the root \(r\).

Proof

We show by induction that from every black node there exists a path of predecessors leading all the way back to the root \(r\).

Base case. At root invalidation time, the set of black (visited) nodes \(V\) consists only of \(r\). The root \(r\) is part of its own transitive closure by definition. Also, all successor nodes of \(r\) in \(U\) are gray. Once a node has been colored black (and moved to \(V\)), its outgoing edges will not change (cf. Lemma 4), and therefore those gray nodes will permanently have \(r\) as a predecessor.

General case. A gray node \(u \in U\) being visited at some point in time has at least one black predecessor node \(v \in V\). All immediately reachable successor nodes of \(u\) in \(U\) are shaded gray during that visit; then \(u\) is colored black and moved to \(V\). Therefore, every visited node \(u\) added to \(V\) was, when colored black, directly reachable from a black predecessor node \(v \in V\). Because of Lemma 4, the edge from \(v\) to \(u\) does not change for the rest of the tracing, so \(u\) remains a successor of \(v\) throughout. By the induction hypothesis, \(v\) has a path of predecessors back to \(r\), and hence so does \(u\). Therefore, Lemma 6 is proven by induction. \(\square \)

Theorem 2

Upon termination of invalidation, the whole data structure is invalidated.

Proof

Tracing terminates when \(U\) is either empty or contains only white nodes. Because of Lemma 5, there are no edges from \(V\) (only black nodes) to any node in \(U\). Therefore, upon termination, the nodes in \(U\) are not reachable from any node in \(V\). Since \(r \in V\) and no edge leads from \(V\) into \(U\), every node reachable from \(r\) lies in \(V\), i.e., \(C \subseteq V\); together with Lemma 6, which gives \(V \subseteq C\), the nodes in \(V\) are exactly the transitive closure \(C\) of \(r\), i.e., \(C=V\). Since all nodes in \(V\) are black and, hence, invalidated, all reachable nodes are invalidated; that is, the whole data structure is invalidated. \(\square \)

A.III Component consistency

The consistency of the whole data structure instance is guaranteed by the design of the linearizable lock-free data structure types. Operations and algorithms are designed to remain consistent if reads and writes fail at linearization points; the operations then simply do not make progress, like a failed transaction. Linearizability is a consistency property that the implementors of lock-free components must prove. Once it is proven, consistency of the data structure is guaranteed: operations either succeed or fail safely. By safely, we mean that they have no side effects that compromise the consistency of the data structure. Any operation that fails safely waits for the component to transform into a new component variant and then restarts the operation there.
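The fail-safe restart described above can be sketched in Java as a wrapper that always runs an operation on the current variant and, if the operation fails safely, waits until a new variant has been installed and restarts there. `AdaptiveComponent`, `CounterVariant`, and `VariantInvalidatedException` are hypothetical names; how the transformation invalidates the source variant and builds the target variant is assumed and not shown, and busy-waiting with `Thread.yield()` is a simplification.

```java
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Function;

// Signals that an operation hit an invalidated variant before committing.
final class VariantInvalidatedException extends RuntimeException {
    VariantInvalidatedException() { super("variant invalidated"); }
}

// Hypothetical component that delegates every operation to its current variant.
final class AdaptiveComponent<V> {
    private final AtomicReference<V> current;

    AdaptiveComponent(V initial) { current = new AtomicReference<>(initial); }

    <R> R apply(Function<V, R> operation) {
        while (true) {
            V variant = current.get();
            try {
                return operation.apply(variant);
            } catch (VariantInvalidatedException e) {
                // Failed safely: nothing was committed in `variant`.
                // Wait until a transformation installs a different variant.
                while (current.get() == variant) {
                    Thread.yield();
                }
            }
        }
    }

    // Called by the transforming thread once the target variant holds the
    // state transferred from the invalidated source variant.
    boolean install(V source, V target) {
        return current.compareAndSet(source, target);
    }
}

// Tiny hypothetical variant (not thread-safe) used to exercise the wrapper.
final class CounterVariant {
    volatile boolean invalidated;
    private int value;

    CounterVariant(int value) { this.value = value; }

    int increment() {
        if (invalidated) throw new VariantInvalidatedException(); // fail before any write
        return ++value;
    }

    int value() { return value; }
}
```

For example, if `v1` is invalidated and a transforming thread later installs `v2` carrying the same state, a call `component.apply(CounterVariant::increment)` issued against `v1` fails safely, waits, and is applied to `v2` instead, leaving `v1` unchanged.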

Lemma 7

In a concurrently invalidating component variant, all operations started after root invalidation time will fail safely.

Proof

All linearizable operations that start after root invalidation time need to access the root edges of the data structure to perform any meaningful operation, as the linearization points are all defined in the atomic references. The root node is black, and therefore all its outgoing edges throw exceptions when accessed, causing any such operation to fail safely immediately. \(\square \)

Lemma 8

In a concurrently invalidating component variant, all latent operations that started before root invalidation time will either fail or succeed safely.

Proof

There could be up to \(t\) latent operations that had already started at root invalidation time, where \(t\) is the number of threads concurrently accessing the data structure. Such operations could consist of:

- Reading elements (following edges)
- Adding edges to new nodes
- Removing edges to old nodes

In all of these scenarios, an operation could encounter an invalidated reference at any point before its linearization point, in which case the operation is invalidated. Since its linearization point has not been reached, it fails safely by definition.

However, any such operation could also reach its linearization point. The linearization may succeed if performed on edges of white or gray nodes (ahead of invalidation), in which case the operation becomes completely observable at that linearization point (by the definition of linearizability).

The operation could fail at its linearization point either due to conflicts with other latent operations or due to conflicts with invalidation. If it conflicts with other latent operations, the operation is retried (without violating consistency, due to linearizability). When a linearization point fails due to accessing an invalidated reference (the invalidation tracer has caught up), the operation fails safely due to the exception thrown, without retrying in the old component variant; invalidation effectively blocks the linearization point. \(\square \)
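The distinction between retrying after a conflict and failing safely after invalidation can be sketched with a Treiber-style lock-free stack whose top reference can be invalidated. `InvalidatableStack` and `StackAbortedException` are hypothetical names. Because the `next` fields of the stack nodes are final, invalidating the single `top` reference suffices in this sketch; structures with mutable interior references would need the invalidation tracing analyzed in this appendix.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicReference;

// Thrown when an operation fails safely on an invalidated stack.
final class StackAbortedException extends RuntimeException {}

// Hypothetical lock-free (Treiber) stack whose top reference can be invalidated.
final class InvalidatableStack<E> {
    private static final class Node<E> {
        final E item;
        final Node<E> next; // final: interior links never change
        Node(E item, Node<E> next) { this.item = item; this.next = next; }
    }

    // Private sentinel: callers can never supply it as a CAS expected value.
    private static final Object INVALIDATED = new Object();
    private final AtomicReference<Object> top = new AtomicReference<>(null);

    void push(E item) {
        while (true) {
            Object t = top.get();
            if (t == INVALIDATED) throw new StackAbortedException(); // fail safely
            @SuppressWarnings("unchecked")
            Node<E> old = (Node<E>) t;
            // Linearization point: succeeds only if top is still `old`.
            if (top.compareAndSet(old, new Node<>(item, old))) return;
            // CAS failed because another operation or the invalidation changed
            // top; loop to re-read it, then retry or abort.
        }
    }

    @SuppressWarnings("unchecked")
    E pop() {
        while (true) {
            Object t = top.get();
            if (t == INVALIDATED) throw new StackAbortedException();
            Node<E> old = (Node<E>) t;
            if (old == null) return null; // empty stack
            if (top.compareAndSet(old, old.next)) return old.item;
        }
    }

    // Invalidates the stack and returns its last valid contents (top first),
    // e.g. for transfer into a new component variant.
    @SuppressWarnings("unchecked")
    List<E> invalidate() {
        Object t = top.getAndSet(INVALIDATED);
        if (t == INVALIDATED) throw new IllegalStateException("already invalidated");
        List<E> items = new ArrayList<>();
        for (Node<E> n = (Node<E>) t; n != null; n = n.next) items.add(n.item);
        return items;
    }
}
```

If several threads push concurrently while another thread calls `invalidate()`, every push that returned normally is contained in the returned list, and every push attempted afterwards throws, mirroring the behavior argued for latent operations.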

Lemma 9

In a concurrently invalidating component variant, any latent operation that succeeds at a linearization point in the source component variant \(c_1\) will have its effect reflected in the target component variant \(c_2\).

Proof

A successful linearization point during concurrent tracing can only be reached when the linearization point reads or writes edges of a white or gray node. That is, it is ahead of the invalidation tracer and operates on nodes the tracer has not yet reached. Tracing eventually reaches all transitively reachable nodes (cf. Theorem 2); therefore, the effects of the successful operations are eventually reflected in the invalidated data structure, and the corresponding state changes get transformed to \(c_2\). \(\square \)

Lemma 10

In a concurrently invalidated component variant, all operations that reach a linearization point after invalidation has terminated will fail safely.

Proof

After invalidation has terminated, the transitive closure of the root r is invalidated (cf. Theorem 2). Therefore, all atomic references transitively reachable from r are invalidated, and any subsequent linearization point will fail safely. \(\square \)

Theorem 3

All operations on a component transforming from component variant \(c_1\) to \(c_2\) will finish in \(c_1\) before invalidation and in \(c_2\) after invalidation.

Proof

We consider three cases: (i) the invalidation has not yet started, (ii) it has started but not terminated, and (iii) the invalidation has finished.

Case (i): all operations will finish in \(c_1\) because no atomic references in the component variant have been invalidated and hence will work as normal.

Case (ii): Lemma 10 proved that the remaining latent operations that started before invalidation finished, but had not yet finished when invalidation finished, will fail safely and restart their operations in \(c_2\). Transformation eventually shifts all operations to the new component variant.

Case (iii): Lemma 7 proved that all operations started after invalidation finished will fail safely and start operating on \(c_2\). \(\square \)

Theorem 4

Operations on components undergoing transformation preserve consistency: regardless of the stage of the transformation, all operations have a total ordering that is consistently observed across all threads and homomorphic to a sequential execution that preserves the component-specified semantics.

Proof

We again distinguish the cases of Theorem 3.

Case (i): all operations work as usual because no atomic reference has been invalidated yet. Their operations finish in linearization points with a total ordering that can be projected to consistent sequential executions, as required by linearizability that the lock-free component variants guarantee.

Case (ii a): all operations started after root invalidation time will fail safely and restart their operations in the target component variant \(c_2\) (cf. Lemma 7). When an operation fails safely, it has not committed at its linearization point; hence, linearizability guarantees that it has not left any traces of half-completed operations that compromise the consistency of the component, i.e., it restarts in the target component variant \(c_2\) once transformation has finished, without leaving any trace in \(c_1\). All potential target components \(c_2\) guarantee consistency, i.e., we only transform between component variants that are thread-safe on their own, disregarding transformation. Therefore, operations that fail safely in \(c_1\) and are restarted in \(c_2\) have a total order homomorphic to a consistent sequential execution with the corresponding component-specified semantics.

Case (ii b): latent operations were started before root invalidation time but have not yet finished. It was proven in Lemma 8 that those operations either complete successfully in the source component variant \(c_1\) or fail safely and restart in \(c_2\) after transformation. As already argued, operations that fail safely and restart in \(c_2\) after transformation guarantee the desired consistency. Lemma 9 proved that those operations that succeed in \(c_1\) will have their side effects observable in \(c_2\) after transformation. The latent operations may compete with each other as usual and get a total ordering at their linearization points. Once an operation succeeds at a linearization point, it is ahead of the invalidation wavefront. Once the invalidation tracing catches up, it is as if those latent operations that succeeded had already succeeded at root invalidation time, and any state change due to such successful latent operations is reflected in the invalidated component variant once the invalidation is completed. This invalidated component variant \(c_1\) is then transformed into a semantically equivalent \(c_2\); hence, the effects of the successfully completed latent operations in \(c_1\) are transferred to \(c_2\) and keep the same total ordering that they had in \(c_1\). Again, this total ordering is homomorphic to a consistent sequential execution with the intended data-structure-specified semantics, because \(c_2\) has the same cloned state as the valid execution in \(c_1\), which committed at linearization points that, according to linearizability, guarantee those properties. It is as if invalidation of the whole data structure happened instantaneously right after the last latent operation succeeded at its linearization point.

Case (iii): all new operations that start after invalidation terminated, and latent operations that did not finish before invalidation finished, will retry in the target component variant \(c_2\) (cf. Theorem 3) once transformation has finished. The total ordering of those operations can, hence, be guaranteed if such guarantees hold in \(c_2\), and, again, we only use component variants that guarantee total ordering. Therefore, those operations have such a total ordering and are homomorphic to a consistent sequential execution with the corresponding component-specified semantics. \(\square \)


About this article

Cite this article

Österlund, E., Löwe, W. Self-adaptive concurrent components. Autom Softw Eng 25, 47–99 (2018). https://doi.org/10.1007/s10515-017-0219-0


DOI: https://doi.org/10.1007/s10515-017-0219-0