Abstract

Irony is a pervasive aspect of many online texts, one made all the more difficult to recognize by the absence of face-to-face contact and vocal intonation. As our media become increasingly social, the problem of irony detection will become even more pressing. We describe here a set of textual features for recognizing irony at a linguistic level, especially in short texts created via social media such as Twitter postings or “tweets”. Our experiments concern four freely available data sets that were retrieved from Twitter using content words (e.g. “Toyota”) and user-generated tags (e.g. “#irony”). We construct a new model of irony detection that is assessed along two dimensions: representativeness and relevance. Initial results are largely positive, and provide valuable insights into the figurative issues facing tasks such as sentiment analysis, assessment of online reputations, or decision making.

Notes

Some fine-grained theoretical aspects of these concepts cannot be directly mapped to our framework, due to the idealized communicative scenarios that they presuppose. Nonetheless, we attempt to capture the core of these concepts in our model.

A manual comparison shows that a small number of tweets appear in two or more sets; the #education and #politics sets account for most of these cases: around 250 tweets (approximately 2 % of the total).

Type-level statistics are not provided because these tweets contain many typos, abbreviations, user mentions, etc. No normalization was applied to correct such misspellings, so any statistics regarding types would be biased.

Prior to computing the distance between texts, all words were stemmed using the Porter algorithm, and all stopwords were eliminated. Accordingly, the distance measure better reflects the similarity in core vocabularies rather than similarity in superficial forms.

To aid understanding, “Appendix 1” provides examples from our evaluation corpus.

The complete list with emoticons can be downloaded from http://users.dsic.upv.es/grupos/nle.

Version 3.0 was used.

It is obvious that most sequences of c-grams are neutral with respect to irony. Moreover, they are neutral with respect to any topic. For instance, “ack” or “acknowledgements” are not representative of scientific discourse. However, irony and many other figurative devices take advantage of rhetorical devices to convey their meaning accurately. Consider the work described in Mihalcea and Strapparava (2006a, b), in which the authors focused on automatically recognizing humor by means of linguistic features. One of these features is alliteration (which relies on phonological information). For example, in “Infants don’t enjoy infancy like adults do adultery”, this linguistic feature is clearly used to produce the funny effect. Rhetorical devices like this one are quite common in figurative language to guarantee the transmission of a message. In this respect, we modified the authors’ approach: instead of reproducing their phonological feature, we aimed to find underlying features based on morphological information, so that we could find sequences of patterns beyond alliteration or rhyme.
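To make the idea of morphological c-grams concrete, the following is a minimal sketch of how character n-grams might be extracted and counted. The function names `char_ngrams` and `cgram_counts`, the restriction to n-grams inside single words, and the sizes 3 to 5 are assumptions chosen for illustration; they are not the authors' implementation.

```python
from collections import Counter

def char_ngrams(text, n):
    """Return all character n-grams found inside the words of `text`."""
    grams = []
    for word in text.lower().split():
        # Keep letters only so punctuation does not leak into the grams.
        letters = "".join(ch for ch in word if ch.isalpha())
        for i in range(len(letters) - n + 1):
            grams.append(letters[i:i + n])
    return grams

def cgram_counts(texts, sizes=(3, 4, 5)):
    """Count c-grams of several sizes over a collection of texts.

    The sizes are an assumption made for this sketch.
    """
    counts = Counter()
    for text in texts:
        for n in sizes:
            counts.update(char_ngrams(text, n))
    return counts

counts = cgram_counts(["Infants don’t enjoy infancy like adults do adultery"])
print(counts["infan"], counts["adult"])  # 2 2
```

In this example the repeated sequences “infan” and “adult” each occur twice, which is the kind of recurring pattern, beyond alliteration or rhyme, that such a feature could pick up.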

All tweets underwent preprocessing, in which terms were stemmed and both hashtags and stop words were removed.

In order to observe the density function of each dimension for all four features, “Appendix 2” presents the probability density function (PDF) associated with \(\delta_{i,j}(d_k)\) prior to applying the threshold.

It is worth mentioning that we use all 11 dimensions of the four conceptual features by adding them in batches.

Each algorithm is implemented in the Weka toolkit (Witten and Frank 2005). No optimization was performed.

Only the information gain values for the balanced distribution are displayed. The imbalanced case is not considered here since the values follow a similar distribution.
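For readers unfamiliar with the measure, information gain is the reduction in class entropy obtained by knowing a feature's value, \(IG(Y; X) = H(Y) - H(Y \mid X)\). The sketch below computes it for a discrete feature. It assumes the feature has already been discretized (for example, by a threshold), and the function names are hypothetical; it does not reproduce the toolkit's own procedure.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total)
                for c in Counter(labels).values())

def information_gain(feature_values, labels):
    """Information gain of a discrete feature with respect to the labels."""
    total = len(labels)
    # Group the class labels by the value the feature takes.
    by_value = {}
    for value, label in zip(feature_values, labels):
        by_value.setdefault(value, []).append(label)
    # Conditional entropy H(Y | X): entropy of each group, weighted by size.
    conditional = sum(len(subset) / total * entropy(subset)
                      for subset in by_value.values())
    return entropy(labels) - conditional

labels = ["irony", "irony", "other", "other"]
print(information_gain([1, 1, 0, 0], labels))  # 1.0
```

A feature that separates the two classes perfectly on a balanced set reaches the maximum of 1 bit, while a feature that is independent of the class yields 0.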

This problem affected Toyota during the last months of 2009 and the beginning of 2010.

Only 55 annotators were native speakers of English, while the remaining 25 were post-graduate students with sufficient English skills.

Recall that the #toyota set is artificially balanced, and contains 250 tweets with a positive emoticon and 250 tweets with a negative emoticon, regardless of the overall frequency of these emoticons on Twitter. Each emoticon serves a different purpose in an ironic tweet. Irony is mostly used to criticize, and we expect the negative emoticon will serve to highlight the criticism, while the positive emoticon will serve to highlight the humor of the tweet.

It is important to mention that 141 tweets were tagged as ironic by only a single annotator. These tweets were excluded so as not to bias the test: it makes little sense to treat a tweet as ironic when only one annotator tagged it as ironic and 3 annotators said it was non-ironic.

References

Artstein, R., & Poesio, M. (2008). Inter-coder agreement for computational linguistics. Computational Linguistics, 34(4), 555–596.

Attardo, S. (2007). Irony as relevant inappropriateness. In R. Gibbs & H. Colston (Eds.), Irony in language and thought (pp. 135–174). London: Taylor and Francis Group.

Balog, K., Mishne, G., & Rijke, M. (2006). Why are they excited? Identifying and explaining spikes in blog mood levels. In: European chapter of the association of computational linguistics (EACL 2006).

Burfoot, C., & Baldwin, T. (2009). Automatic satire detection: Are you having a laugh? In: ACL-IJCNLP ’09: Proceedings of the ACL-IJCNLP 2009 conference short papers (pp. 161–164).

Carvalho, P., Sarmento, L., Silva, M., & de Oliveira, E. (2009). Clues for detecting irony in user-generated contents: Oh...!! it’s “so easy” ;-). In: TSA ’09: Proceedings of the 1st international CIKM workshop on topic-sentiment analysis for mass opinion (pp. 53–56). Hong Kong: ACM.

Lin, C.-Y., & Och, F. (2004). Automatic evaluation of machine translation quality using longest common subsequence and skip-bigram statistics. In: ACL ’04: Proceedings of the 42nd annual meeting on association for computational linguistics (p. 605). Morristown, NJ: Association for Computational Linguistics.

Clark, H., & Gerrig, R. (1984). On the pretense theory of irony. Journal of Experimental Psychology: General, 113(1), 121–126.

Cohen, W., Ravikumar, P., & Fienberg, S. (2003). A comparison of string distance metrics for name-matching tasks. In: Proceedings of IJCAI-03 workshop on information integration (pp. 73–78).

Colston, H. (2007). On necessary conditions for verbal irony comprehension. In R. Gibbs & H. Colston (Eds.), Irony in language and thought (pp. 97–134). London: Taylor and Francis Group.

Curcó, C. (2007). Irony: Negation, echo, and metarepresentation. In R. Gibbs & H. Colston (Eds.), Irony in language and thought (pp. 269–296). London: Taylor and Francis Group.

Davidov, D., Tsur, O., & Rappoport, A. (2010). Semi-supervised recognition of sarcastic sentences in Twitter and Amazon. In: Proceedings of the 23rd international conference on computational linguistics (COLING).

Gibbs, R. (2007). Irony in talk among friends. In R. Gibbs & H. Colston (Eds.), Irony in language and thought (pp. 339–360). London: Taylor and Francis Group.

Giora, R. (1995). On irony and negation. Discourse Processes, 19(2), 239–264.

Grice, H. (1975). Logic and conversation. In P. Cole & J. L. Morgan (Eds.), Syntax and semantics (Vol. 3, pp. 41–58). New York: Academic Press.

Guthrie, D., Allison, B., Liu, W., Guthrie, L., & Wilks, Y. (2006). A closer look at skip-gram modelling. In: Proceedings of the fifth international conference on language resources and evaluation (LREC-2006) (pp. 1222–1225).

Kreuz, R. (2001). Using figurative language to increase advertising effectiveness. In: Office of naval research military personnel research science workshop. Memphis, TN.

Kumon-Nakamura, S., Glucksberg, S., & Brown, M. (2007). How about another piece of pie: The allusional pretense theory of discourse irony. In R. Gibbs & H. Colston (Eds.), Irony in language and thought (pp. 57–96). London: Taylor and Francis Group.

Lucariello, J. (2007). Situational irony: A concept of events gone awry. In R. Gibbs & H. Colston (Eds.), Irony in language and thought (pp. 467–498). London: Taylor and Francis Group.

Mihalcea, R., & Strapparava, C. (2006a). Learning to laugh (automatically): Computational models for humor recognition. Journal of Computational Intelligence, 22(2), 126–142.

Mihalcea, R., & Strapparava, C. (2006b). Technologies that make you smile: Adding humour to text-based applications. IEEE Intelligent Systems, 21(5), 33–39.

Miller, G. (1995). Wordnet: A lexical database for English. Communications of the ACM, 38(11), 39–41.

Monge, A., & Elkan, C. (1996). The field matching problem: Algorithms and applications. In: Proceedings of the second international conference on knowledge discovery and data mining (pp. 267–270).

Pang, B., Lee, L., & Vaithyanathan, S. (2002). Thumbs up? sentiment classification using machine learning techniques. In: Proceedings of the 2002 conference on empirical methods in natural language processing (EMNLP) (pp. 79–86). Morristown, NJ: Association for Computational Linguistics.

Pedersen, T., Patwardhan, S., & Michelizzi, J. (2004). Wordnet::Similarity—Measuring the relatedness of concepts. In: Proceedings of the 9th national conference on artificial intelligence (AAAI-04) (pp. 1024–1025). Morristown, NJ: Association for Computational Linguistics.

Reyes, A., & Rosso, P. (2011). Mining subjective knowledge from customer reviews: A specific case of irony detection. In: Proceedings of the 2nd workshop on computational approaches to subjectivity and sentiment analysis (WASSA 2.011) (pp. 118–124). Association for Computational Linguistics.

Reyes, A., Rosso, P., & Buscaldi, D. (2009). Humor in the blogosphere: First clues for a verbal humor taxonomy. Journal of Intelligent Systems, 18(4), 311–331.

Mohammad, S., Dunne, C., & Dorr, B. (2009). Generating high-coverage semantic orientation lexicons from overtly marked words and a thesaurus. In: Proceedings of the 2009 conference on EMNLP (pp. 599–608). Morristown, NJ: Association for Computational Linguistics.

Sarmento, L., Carvalho, P., Silva, M., & de Oliveira, E. (2009). Automatic creation of a reference corpus for political opinion mining in user-generated content. In: TSA ’09: Proceedings of the 1st international CIKM workshop on topic-sentiment analysis for mass opinion (pp. 29–36). ACM: Hong Kong, China.

Sperber, D., & Wilson, D. (1992). On verbal irony. Lingua, 87, 53–76.

Tsur, O., Davidov, D., & Rappoport, A. (2010). ICWSM—A great catchy name: Semi-supervised recognition of sarcastic sentences in online product reviews. In W. W. Cohen & S. Gosling (Eds.), Proceedings of the fourth international AAAI conference on weblogs and social media (pp. 162–169). Washington, D.C.: The AAAI Press.

Utsumi, A. (1996). A unified theory of irony and its computational formalization. In: Proceedings of the 16th conference on computational linguistics (pp. 962–967). Morristown, NJ: Association for Computational Linguistics.

Veale, T., & Hao, Y. (2009). Support structures for linguistic creativity: A computational analysis of creative irony in similes. In: Proceedings of CogSci 2009, the 31st annual meeting of the cognitive science society (pp. 1376–1381).

Veale, T., & Hao, Y. (2010). Detecting ironic intent in creative comparisons. In: Proceedings of 19th European conference on artificial intelligence—ECAI 2010 (pp. 765–770). Amsterdam: IOS Press.

Whissell, C. (2009). Using the revised dictionary of affect in language to quantify the emotional undertones of samples of natural language. Psychological Reports, 105(2), 509–521.

Wilson, D., & Sperber, D. (2007). On verbal irony. In R. Gibbs & H. Colston (Eds.), Irony in language and thought (pp. 35–56). London: Taylor and Francis Group.

Witten, I., & Frank, E. (2005). Data mining. Practical machine learning tools and techniques. Los Altos, CA, Amsterdam: Morgan Kaufmann Publishers, Elsevier.

Acknowledgments

This work has been done in the framework of the VLC/CAMPUS Microcluster on Multimodal Interaction in Intelligent Systems and it has been partially funded by the European Commission as part of the WIQEI IRSES project (grant no. 269180) within the FP 7 Marie Curie People Framework, and by MICINN as part of the Text-Enterprise 2.0 project (TIN2009-13391-C04-03) within the Plan I+D+I. The National Council for Science and Technology (CONACyT - Mexico) has funded the research work of Antonio Reyes.

Appendices

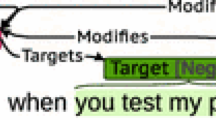

Appendix 1: Examples of the model representation

This appendix gives some examples of how the model is applied to the tweets.

1. Pointedness

   - The govt should investigate him thoroughly; do I smell IRONY

   - Irony is such a funny thing :)

   - Wow the only network working for me today is 3G on my iPhone. WHAT DID I EVER DO TO YOU INTERNET???????

2. Counter-factuality

   - My latest blog post is about how twitter is for listening. And I love the irony of telling you about it via Twitter.

   - Certainly I always feel compelled, obsessively, to write. Nonetheless I often manage to put a heap of crap between me and starting…

   - BHO talking in Copenhagen about global warming and DC is about to get 2ft. of snow dumped on it. You just gotta love it.

3. Temporal compression

   - @ryan_connolly oh the irony that will occur when they finally end movie piracy and suddenly movie and dvd sales begin to decline sharply.

   - I’m seriously really funny when nobody is around. You should see me. But then you’d be there, and I wouldn’t be funny…

   - RT @Butler_George: Suddenly, thousands of people across Ireland recall that they were abused as children by priests.

4. Temporal imbalance

   - Stop trying to find love, it will find you;…and no, he didn’t say that to me..

   - Woman on bus asked a guy to turn it down please; but his music is so loud, he didn’t hear her. Now she has her finger in her ear. The irony

5. Contextual imbalance

   - DC’s snows coinciding with a conference on global warming proves that God has a sense of humor.
     Relatedness score of 0.3233

   - I know sooooo many Haitian-Canadians but they all live in Miami.
     Relatedness score of 0

   - I nearly fall asleep when anyone starts talking about Aderall. Bullshit.
     Relatedness score of 0.2792

6. Character n-grams (c-grams)

   - WIF
     More about Tiger—Now I hear his wife saved his life w/ a golf club?

   - TRAI
     SeaWorld (Orlando) trainer killed by killer whale. or reality? oh, I’m sorry politically correct Orca whale

   - NDERS
     Because common sense isn’t so common it’s important to engage with your market to really understand it.

7. Skip-grams (s-grams)

   - 1-skip: richest … mexican
     Our president is black nd the richest man is a Mexican hahahaha lol

   - 1-skip: unemployment … state
     When unemployment is high in your state, Open a casino tcot tlot lol

   - 2-skips: love … love
     Why is it the Stockholm syndrome if a hostage falls in love with her kidnapper? I’d simply call this love. ;)

8. Polarity s-grams (ps-grams)

   - 1-skip: pos-neg
     Reading glasses(pos) have RUINED(neg) my eyes. B4, I could see some shit but I’d get a headache. Now, I can’t see shit but my head feels fine

   - 1-skip: neg-neg-pos
     Breaking(neg) News(neg): New charity(pos) offers people to adopt a banker and get photos of his new bigger house and his wife and beaming mistress.

   - 2-skips: pos-pos-neg
     Just heard the brave(pos) hearted(pos) English Defence League(neg) thugs will protest for our freedoms in Edinburgh next month. Mad, Mad, Mad

9. Activation

   - I enjoy(2.22) the fact(2.00) that I just addressed(1.63) the dogs(1.71) about their illiteracy(0) via(1.80) Twitter(0). Another victory(2.60) for me.

   - My favorite(1.83) part(1.44) of the optometrist(0) is the irony(1.63) of the fact(2.00) that I can’t see(2.00) afterwards(1.36). That and the cool(1.72) sunglasses(1.37).

   - My male(1.55) ego(2.00) so eager(2.25) to let(1.70) it be stated(2.00) that I’am THE MAN(1.8750) but won’t allow(1.00) my pride(1.90) to admit(1.66) that being egotistical(0) is a weakness(1.75)…

10. Imagery

    - Yesterday(1.6) was the official(1.4) first(1.6) day(2.6) of spring(2.8)… and there was over a foot(2.8) of snow(3.0) on the ground(2.4).

    - I think(1.4) I have(1.2) to do(1.2) the very(1.0) thing(1.8) that I work(1.8) most on changing(1.2) in order(2.0) to make(1.2) a real(1.4) difference(1.2) paradigms(0) hifts(0) zeitgeist(0)

    - Random(1.4) drug(2.6) test(3.0) today(2.0) in elkhart(0) before 4(0). Would be better(2.4) if I could drive(2.1). I will have(1.2) to drink(2.6) away(2.2) the bullshit(0) this weekend(1.2). Irony(1.2).

11. Pleasantness

    - Goodmorning(0), beauties(2.83)! 6(0) hours(1.6667) of sleep(2.7143)? Total(1.7500) score(2.0000)! I love(3.0000) you school(1.77), so so much(2.00).

    - The guy(1.9000) who(1.8889) called(2.0000) me Ricky(0) Martin(0) has(1.7778) a blind(1.0000) lunch(2.1667) date(2.33).

    - I hope(3.0000) whoever(0) organized(1.8750) this monstrosity(0) realizes(2.50) that they’re playing(2.55) the opening(1.88) music(2.57) for WWE’s(0) Monday(2.00) Night(2.28) Raw(1.00) at the Olympics(0).
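The skip-gram examples in item 7 can be approximated with a short sketch. It follows the common reading of k-skip bigrams in which up to k tokens may be skipped between the two words. The stopword list, the tokenization, and the function names `content_tokens` and `skip_bigrams` are assumptions made for illustration, not the preprocessing actually used for the corpus.

```python
# Hypothetical, deliberately tiny stopword list used only for this sketch.
STOPWORDS = {"is", "in", "your", "a", "the", "when"}

def content_tokens(text):
    """Lowercase, strip surrounding punctuation, and drop stopwords."""
    words = (w.strip(".,!?;:").lower() for w in text.split())
    return [w for w in words if w and w not in STOPWORDS]

def skip_bigrams(tokens, k):
    """Return (skips, first, second) triples for word pairs
    with 0..k tokens skipped between them."""
    pairs = []
    for i, first in enumerate(tokens):
        for gap in range(k + 1):
            j = i + gap + 1
            if j < len(tokens):
                pairs.append((gap, first, tokens[j]))
    return pairs

tokens = content_tokens("When unemployment is high in your state, Open a casino")
print(tokens)  # ['unemployment', 'high', 'state', 'open', 'casino']
print((1, "unemployment", "state") in skip_bigrams(tokens, 1))  # True
```

Under these assumptions the pair “unemployment … state” is recovered as a 1-skip bigram. The same function could in principle be run over a sequence of polarity tags instead of words, which is one way to picture the polarity s-grams of item 8.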

Appendix 2: Probability density function

This appendix shows 11 graphs depicting the probability density function associated with \(\delta_{i,j}(d_k)\) for all dimensions, according to Formula 2. These graphs are intended to provide descriptive evidence that the model is not capturing idiosyncratic features of the negative sets; rather, it is capturing some aspects of irony. All graphs use the following representation: #irony (blue line), #education (black line), #humor (green line), #politics (brown line) (Figs. 5, 6, 7, 8).

Cite this article

Reyes, A., Rosso, P. & Veale, T. A multidimensional approach for detecting irony in Twitter. Lang Resources & Evaluation 47, 239–268 (2013). https://doi.org/10.1007/s10579-012-9196-x

DOI: https://doi.org/10.1007/s10579-012-9196-x