Abstract

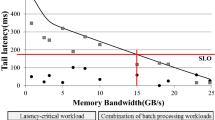

Latency-critical workloads such as web search engines, social networks, and financial market applications are sensitive to tail latency when meeting service level objectives (SLOs). Because unexpected tail latencies are caused by sharing hardware resources with co-executing workloads, service providers run latency-critical workloads alone. As a result, data centers serving latency-critical workloads have exceedingly low hardware resource utilization. To improve utilization, service providers must co-locate latency-critical workloads with batch processing workloads. However, unlike cores and cache memory, memory bandwidth cannot be provided in isolation, so latency-critical workloads experience poor performance isolation even when cores and cache memory are allocated to the workloads in isolation. To solve this problem, we propose an optimized memory bandwidth management approach for ensuring quality of service (QoS) and high server utilization. The approach provides isolated shared resources, including memory bandwidth, to the latency-critical workload and the co-executing batch processing workloads. First, it performs a small number of pre-profiling runs with a divide-and-conquer method, under the assumption that memory bandwidth contention is at its worst. Second, based on the pre-profiling results, it predicts the memory bandwidth needed to meet the SLO at every queries-per-second (QPS) level. Finally, it allocates to the latency-critical workload the amount of isolated memory bandwidth that guarantees the SLO, and gives the remaining memory bandwidth to the co-executing batch processing workloads. Experimental results show that the proposed approach achieves up to 99% SLO assurance and improves server utilization by up to 6.5×.
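The three steps in the abstract (worst-case pre-profiling by divide and conquer, per-QPS bandwidth prediction, and splitting the bandwidth) can be illustrated with a minimal Python sketch. It is not the authors' OMBM implementation. It assumes that tail latency is non-increasing in allocated bandwidth, treats the divide-and-conquer step as a binary search over discrete bandwidth levels, and uses linear interpolation between profiled QPS points as a stand-in for the paper's prediction model. The function names, the toy latency model, the bandwidth levels, and the SLO value are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple


def min_bandwidth_for_slo(
    p99_latency_at: Callable[[float], float],
    slo_ms: float,
    bw_levels: List[float],
) -> float:
    """Binary-search sorted bandwidth levels for the smallest one meeting the SLO.

    Hypothetical helper: p99_latency_at(bw) stands in for one profiling run of the
    latency-critical workload at a fixed QPS with `bw` GB/s isolated for it while
    batch co-runners saturate the rest (assumed worst-case contention). Assumes
    latency is non-increasing in bw, so only O(log n) profiling runs are needed.
    """
    lo, hi = 0, len(bw_levels) - 1
    if p99_latency_at(bw_levels[hi]) > slo_ms:
        raise ValueError("SLO not met even at the largest bandwidth level")
    while lo < hi:
        mid = (lo + hi) // 2
        if p99_latency_at(bw_levels[mid]) <= slo_ms:
            hi = mid  # mid meets the SLO: the answer is mid or a lower level
        else:
            lo = mid + 1  # mid violates the SLO: the answer is above mid
    return bw_levels[lo]


def predict_bandwidth(profiled: Dict[float, float], qps: float) -> float:
    """Linearly interpolate the SLO-safe bandwidth between profiled QPS points.

    Linear interpolation is an assumed stand-in for the paper's prediction model.
    """
    points = sorted(profiled.items())
    if qps > points[-1][0]:
        raise ValueError("QPS above the profiled range; extrapolation is unsafe")
    if qps <= points[0][0]:
        # Conservative if bandwidth demand grows with QPS: reuse the lowest profile.
        return points[0][1]
    for (q0, b0), (q1, b1) in zip(points, points[1:]):
        if q0 <= qps <= q1:
            return b0 + (b1 - b0) * (qps - q0) / (q1 - q0)
    raise AssertionError("unreachable: qps lies within the profiled range")


def split_bandwidth(total_gbps: float, lc_gbps: float) -> Tuple[float, float]:
    """Give lc_gbps to the latency-critical workload and the rest to batch jobs."""
    if not 0.0 <= lc_gbps <= total_gbps:
        raise ValueError("latency-critical share must fit within total bandwidth")
    return lc_gbps, total_gbps - lc_gbps


if __name__ == "__main__":
    # Toy latency model (hypothetical): p99 in ms rises with QPS, falls with bandwidth.
    def toy_p99(qps: float) -> Callable[[float], float]:
        return lambda bw: 2.0 + qps / (100.0 * bw)

    levels = [float(b) for b in range(1, 21)]  # hypothetical 1..20 GB/s levels
    slo_ms = 10.0
    profiled = {
        q: min_bandwidth_for_slo(toy_p99(q), slo_ms, levels) for q in (2000.0, 4000.0)
    }
    lc_share = predict_bandwidth(profiled, 3000.0)
    print(profiled, split_bandwidth(20.0, lc_share))
    # -> {2000.0: 3.0, 4000.0: 5.0} (4.0, 16.0)
```

Only two QPS points are profiled here, and each needs a handful of runs rather than one per bandwidth level, which mirrors the "few pre-profilings" goal. In a real deployment, rounding the interpolated share up to the next enforceable bandwidth level would keep the allocation on the safe side of the SLO.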

Acknowledgements

This research was supported by (1) Institute for Information & communications Technology Promotion (IITP) Grant funded by the Korea government (MSIP) (R0190-16-2012, High Performance Big Data Analytics Platform Performance Acceleration Technologies Development). It was also partly supported by (2) Institute for Information & communications Technology Promotion (IITP) Grant funded by the Korea government (MSIP) (No. 2017-0-01733, General Purpose Secure Database Platform Using a Private Blockchain), and partly supported by (3) the National Research Foundation (NRF) Grants (NRF-2016M3C4A7952587, NRF-2017M3C4A7083751, PF Class Heterogeneous High Performance Computer Development). In addition, this work was partly supported by (4) BK21 Plus for Pioneers in Innovative Computing (Dept. of Computer Science and Engineering, SNU) funded by National Research Foundation of Korea (NRF-21A20151113068).

About this article

Cite this article

Sung, H., Min, J., Ha, S. et al. OMBM: optimized memory bandwidth management for ensuring QoS and high server utilization. Cluster Comput 22, 161–174 (2019). https://doi.org/10.1007/s10586-018-2828-1
