Abstract

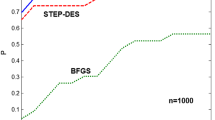

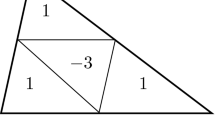

We consider Hessian approximation schemes for large-scale unconstrained optimization in the context of discretized problems. The Hessians considered typically exhibit nontrivial sparsity and partial separability structure, which allows iterative quasi-Newton methods to solve these problems despite their size. Structured finite-difference methods and updating schemes based on the secant equation are presented and compared numerically within the multilevel trust-region algorithm proposed by Gratton et al. (IMA J. Numer. Anal. 28(4):827–861, 2008).
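
To illustrate what "structured finite differences" can mean for a sparse Hessian, here is a minimal sketch. It is not the authors' implementation. It applies the classical column-grouping idea of Curtis, Powell and Reid to a tridiagonal Hessian, such as one arising from a 1D discretization. Columns whose nonzeros never share a row can be perturbed together, so a single gradient difference recovers several Hessian columns at once. The test objective and all function names (`grad`, `exact_hessian`, `estimate_tridiagonal_hessian`) are hypothetical choices made for this example.

```python
# Minimal sketch (not the paper's implementation): direct finite-difference
# estimation of a tridiagonal Hessian using Curtis-Powell-Reid column grouping.
# The test problem and all function names below are hypothetical.


def grad(x):
    """Gradient of the hypothetical discretized objective
    f(x) = sum_i x_i**4 / 4 + sum_i (x_{i+1} - x_i)**2 / 2."""
    g = [xi ** 3 for xi in x]
    for i in range(len(x) - 1):
        d = x[i + 1] - x[i]
        g[i] -= d       # derivative of the coupling term w.r.t. x_i
        g[i + 1] += d   # derivative of the coupling term w.r.t. x_{i+1}
    return g


def exact_hessian(x):
    """Analytic (tridiagonal) Hessian of the same objective, for comparison."""
    n = len(x)
    H = [[0.0] * n for _ in range(n)]
    for i in range(n):
        H[i][i] = 3.0 * x[i] ** 2
    for i in range(n - 1):
        H[i][i] += 1.0
        H[i + 1][i + 1] += 1.0
        H[i][i + 1] -= 1.0
        H[i + 1][i] -= 1.0
    return H


def estimate_tridiagonal_hessian(grad_fn, x, h=1e-6):
    """Estimate a tridiagonal Hessian with 3 extra gradient evaluations.

    Columns j and k can share one difference when no row has nonzeros in
    both; for a tridiagonal pattern this holds whenever j % 3 == k % 3.
    """
    n = len(x)
    g0 = grad_fn(x)
    H = [[0.0] * n for _ in range(n)]
    for group in range(3):
        # Perturb every column of this group simultaneously.
        xp = [xj + h if j % 3 == group else xj for j, xj in enumerate(x)]
        gp = grad_fn(xp)
        for i in range(n):
            diff = (gp[i] - g0[i]) / h  # forward difference, O(h) error
            # At most one valid column in {i-1, i, i+1} belongs to this group,
            # so row i of the difference isolates that single Hessian entry.
            for j in (i - 1, i, i + 1):
                if 0 <= j < n and j % 3 == group:
                    H[i][j] = diff
    return H


if __name__ == "__main__":
    x = [0.3 * (k - 4) for k in range(10)]
    H_fd = estimate_tridiagonal_hessian(grad, x)
    H_ex = exact_hessian(x)
    err = max(abs(H_fd[i][j] - H_ex[i][j]) for i in range(10) for j in range(10))
    print(f"max abs error: {err:.2e}")
```

Here the estimate costs 4 gradient evaluations for any n, compared with n + 1 for a column-by-column forward difference. Schemes that also exploit symmetry, such as those studied by Powell and Toint, can reduce the number of differences further.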

References

Broyden, C.G.: The convergence of a class of double-rank minimization algorithms. J. Inst. Math. Appl. 6, 76–90 (1970)

Coleman, T.F., Garbow, B., Moré, J.J.: Software for estimating sparse Hessian matrices. ACM Trans. Math. Softw. 11, 363–378 (1985)

Coleman, T.F., Moré, J.J.: Estimation of sparse Hessian matrices and graph coloring problems. Math. Program. 28, 243–270 (1984)

Conn, A.R., Gould, N.I.M., Toint, Ph.L.: LANCELOT: a Fortran package for large-scale nonlinear optimization (Release A). In: Springer Series in Computational Mathematics, vol. 17. Springer, Heidelberg (1992)

Curtis, A., Powell, M.J.D., Reid, J.: On the estimation of sparse Jacobian matrices. J. Inst. Math. Appl. 13, 117–119 (1974)

Davidon, W.C.: Variable metric method for minimization. Report ANL-5990(Rev.), Argonne National Laboratory, Research and Development (1959). Republished in SIAM J. Optim. 1, 1–17 (1991)

Dennis, J.E., Schnabel, R.B.: Numerical Methods for Unconstrained Optimization and Nonlinear Equations. Prentice-Hall, Englewood Cliffs (1983). Reprinted as Classics in Applied Mathematics 16, SIAM, Philadelphia, USA, 1996

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201–213 (2002)

Fisher, M.: Minimization algorithms for variational data assimilation. In: Recent Developments in Numerical Methods for Atmospheric Modelling, pp. 364–385. ECMWF (1998)

Fletcher, R.: A new approach to variable metric algorithms. Comput. J. 13, 317–322 (1970)

Gebremedhin, A.H., Manne, F., Pothen, A.: What color is your Jacobian? Graph coloring for computing derivatives. SIAM Rev. 47(4), 629–705 (2005)

Goldfarb, D.: A family of variable metric methods derived by variational means. Math. Comput. 24, 23–26 (1970)

Goldfarb, D., Toint, Ph.L.: Optimal estimation of Jacobian and Hessian matrices that arise in finite difference calculations. Math. Comput. 43(167), 69–88 (1984)

Gratton, S., Mouffe, M., Sartenaer, A., Toint, Ph.L., Tomanos, D.: Numerical experience with a recursive trust-region method for multilevel nonlinear optimization. Optim. Methods Softw. (2009, to appear)

Gratton, S., Mouffe, M., Toint, Ph.L., Weber-Mendonça, M.: A recursive trust-region method in infinity norm for bound-constrained nonlinear optimization. IMA J. Numer. Anal. 28(4), 827–861 (2008)

Gratton, S., Sartenaer, A., Toint, Ph.L.: Recursive trust-region methods for multiscale nonlinear optimization. SIAM J. Optim. 19(1), 414–444 (2008)

Griewank, A.: On automatic differentiation. In: Iri, M., Tanabe, K. (eds.) Mathematical Programming: Recent Developments and Applications, pp. 83–108. Kluwer, Dordrecht (1989)

Griewank, A., Toint, Ph.L.: On the unconstrained optimization of partially separable functions. In: Powell, M.J.D. (ed.) Nonlinear Optimization 1981, pp. 301–312. Academic Press, London (1982)

Griewank, A., Toint, Ph.L.: Partitioned variable metric updates for large structured optimization problems. Numer. Math. 39, 119–137 (1982)

Hossain, S., Steihaug, T.: Computing a sparse Jacobian matrix by rows and columns. Optim. Methods Softw. 10(1), 33–48 (1998)

Hossain, S., Steihaug, T.: Sparsity issues in the computation of Jacobian matrices. In: ISSAC ’02: Proceedings of the 2002 International Symposium on Symbolic and Algebraic Computation, pp. 123–130. ACM, New York (2002)

Marwil, E.: Exploiting sparsity in Newton-like methods. Ph.D. thesis, Cornell University, Ithaca, USA (1978)

Nash, S.G.: A multigrid approach to discretized optimization problems. Optim. Methods Softw. 14, 99–116 (2000)

Nash, S.G.: A survey of truncated-Newton methods. J. Comput. Appl. Math. 124, 45–59 (2000)

Nocedal, J., Wright, S.J.: Numerical Optimization. Series in Operations Research, Springer, Heidelberg (1999)

Oh, S., Milstein, A., Bouman, C., Webb, K.: Multigrid algorithms for optimization and inverse problems. In: Bouman, C., Stevenson, R. (eds.) Computational Imaging, Proceedings of the SPIE, vol. 5016, pp. 59–70. DDM (2003)

Powell, M.J.D., Toint, Ph.L.: On the estimation of sparse Hessian matrices. SIAM J. Numer. Anal. 16(6), 1060–1074 (1979)

Shanno, D.F.: Conditioning of quasi-Newton methods for function minimization. Math. Comput. 24, 647–657 (1970)

Sorensen, D.C.: An example concerning quasi-Newton estimates of a sparse Hessian. SIGNUM Newslet. 16(2), 8–10 (1981)

Toint, Ph.L.: On sparse and symmetric matrix updating subject to a linear equation. Math. Comput. 31(140), 954–961 (1977)

Toint, Ph.L.: On the superlinear convergence of an algorithm for solving a sparse minimization problem. SIAM J. Numer. Anal. 16, 1036–1045 (1979)

Toint, Ph.L.: A note on sparsity exploiting quasi-Newton methods. Math. Program. 21(2), 172–181 (1981)

Wen, Z., Goldfarb, D.: A linesearch multigrid method for large-scale convex optimization. Tech. rep., Department of Industrial Engineering and Operations Research, Columbia University, New York (2007)

Cite this article

Malmedy, V., Toint, P.L. Approximating Hessians in unconstrained optimization arising from discretized problems. Comput Optim Appl 50, 1–22 (2011). https://doi.org/10.1007/s10589-010-9317-7

DOI: https://doi.org/10.1007/s10589-010-9317-7