Abstract

In many emerging security applications, a system designer needs to ensure that a certain property of a given system (which may reveal important details about the system’s operation) is kept secret (opaque) to outside observers (eavesdroppers). Motivated by such applications, several researchers have formalized and analyzed notions of opacity in discrete event systems of interest, and have described methods to verify them. This paper introduces and analyzes a notion of opacity in systems that can be modeled as probabilistic finite automata or hidden Markov models. We consider a setting where a user needs to choose a specific hidden Markov model (HMM) out of m possible (different) HMMs, but would like to “hide” the true system from eavesdroppers by not allowing them to attain an arbitrary level of confidence as to which system has been chosen. We describe necessary and sufficient conditions (which can be checked with polynomial complexity) under which the intruder cannot distinguish the true HMM; namely, the intruder cannot achieve a level of certainty about its decision above a certain threshold that we can compute a priori.


Notes

1. A passive observer is one that does not have any decision-making authority in the system (i.e., it cannot influence the operation of the system).

2. The graph corresponding to the Markov chain \((Q, A, \pi_0)\) is the graph \(G = (Q, E)\) with vertices \(Q\) and edges \(E \subseteq Q \times Q\) such that \((q_k, q_j) \in E\) iff \(A(k, j) > 0\).

3. Usually \(P_1 + P_2 = 1\), but here we keep the priors as if the two HMMs were part of a setting with m HMMs, as described in Definition 6. This avoids the notational overhead of renormalizing the priors (namely, \(P_i' = P_i/(P_1 + P_2)\) for i = 1, 2).

4. For simplicity we describe a case with only two HMMs, but the connection with probabilistic current-state opacity can easily be generalised to the case of m HMMs.

5. The Kronecker product (Brewer 1978) \(A \otimes B\) of a \(p \times q\) matrix A with an \(m \times n\) matrix B is the \(pm \times qn\) matrix

$$A\otimes B = \left[ \begin{array}{cccc} a_{11}B & a_{12}B & \cdots & a_{1q}B\\ a_{21}B & a_{22}B & \cdots & a_{2q}B\\ \vdots & \vdots & \ddots & \vdots\\ a_{p1}B & a_{p2}B & \cdots & a_{pq}B \end{array} \right].$$

6. We can always do this since the subsequent behavior of the enhanced model does not depend on whether we start from state \(q_{h,e}\) or \(q_{h,e'}\).

7. In performing this analysis, we use the following well-known properties of the Kronecker product, for matrices A, B, C, D of appropriate dimensions (Brewer 1978): (1) \((A \otimes B)(C \otimes D) = (AC) \otimes (BD)\); (2) \((A \otimes B) \otimes C = A \otimes (B \otimes C)\).
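As a quick sanity check of the two Kronecker properties listed in this note, the following Python sketch implements the Kronecker product directly from the block definition given earlier in these notes and asserts both identities on small integer matrices. The helper names `kron` and `matmul` are illustrative (plain lists of lists, no external libraries), and the check illustrates the identities on one instance rather than proving them.

```python
def kron(A, B):
    """Kronecker product of a p x q matrix A and an m x n matrix B (lists of lists)."""
    m, n = len(B), len(B[0])
    # Entry (i, j) lies in block (i // m, j // n) and equals a_{block} * B[i % m][j % n].
    return [[A[i // m][j // n] * B[i % m][j % n]
             for j in range(len(A[0]) * n)]
            for i in range(len(A) * m)]


def matmul(X, Y):
    """Ordinary matrix product X Y."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
C = [[2, 0], [1, 1]]
D = [[1, 1], [0, 1]]

# Property (1), the mixed-product rule: (A ⊗ B)(C ⊗ D) = (AC) ⊗ (BD)
assert matmul(kron(A, B), kron(C, D)) == kron(matmul(A, C), matmul(B, D))
# Property (2), associativity: (A ⊗ B) ⊗ C = A ⊗ (B ⊗ C)
assert kron(kron(A, B), C) == kron(A, kron(B, C))
```

Integer matrices are used so that both sides can be compared exactly, without floating-point tolerances.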

8. Equivalently, \(d_{V}({\pi }^{(1)}_{e}, {\pi }^{(2)}_{e})>0\) (Lemma 6).

References

Athanasopoulou E, Hadjicostis CN (2008) Probability of error bounds for failure diagnosis and classification in hidden Markov models. In: Proceedings of IEEE conference on decision and control, pp 1477–1482

Badouel E, Bednarczyk M, Borzyszkowski A, Caillaud B, Darondeau P (2006) Concurrent secrets. In: Proceedings of the 8th international workshop on discrete event systems, pp 51–57

Bérard B, Mullins J, Sassolas M (2010) Quantifying opacity. In: Proceedings of 7th international conference on the quantitative evaluation of systems (QEST), pp 263–272

Bérard B, Mullins J, Sassolas M (2015) Quantifying opacity. Math Struct Comput Sci 25(2):361–403

Brewer J (1978) Kronecker products and matrix calculus in system theory. IEEE Trans Circuits Syst 25(9):772–781

Bryans JW, Koutny M, Ryan P (2005a) Modelling dynamic opacity using Petri nets with silent actions. In: Formal aspects in security and trust, IFIP, vol 173. Springer, pp 159–172

Bryans JW, Koutny M, Ryan P (2005b) Modelling opacity using Petri nets. Electron Notes Theor Comput Sci 121:101–115

Bryans JW, Koutny M, Mazare L, Ryan P (2005) Opacity generalised to transition systems. In: Proceedings of the 3rd international workshop on formal aspects in security and trust, pp 81–95

Cardenas AA, Baras JS, Ramezani V (2004) Distributed change detection for worms, DDoS and other network attacks. In: Proceedings of the 2004 American control conference, vol 2, pp 1008–1013

Cassandras CG, Lafortune S (2007) Introduction to discrete event systems. Springer, Berlin

Chen B, Willett P (2000) Detection of hidden Markov model transient signals. IEEE Trans Aerosp Electron Syst 36(4):1253–1268

Dembo A, Zeitouni O (1998) Large deviations techniques and applications. Springer, New York

Dubreil J, Darondeau P, Marchand H (2008) Opacity enforcing control synthesis. In: Proceedings of 9th international workshop on discrete event systems, pp 28–35

Focardi R, Gorrieri R (1994) A taxonomy of trace–based security properties for CCS. In: Proceedings of the 7th workshop on computer security foundations, pp 126–136

Fu KS (1982) Syntactic pattern recognition and applications. Prentice-Hall, Upper Saddle River

Glynn P, Ormoneit D (2002) Hoeffding’s inequality for uniformly ergodic Markov chains. Stat Prob Lett 56:143–146

Hadjicostis CN (2005) Probabilistic detection of FSM single state-transition faults based on state occupancy measurements. IEEE Trans Autom Control 50(12):2078–2083

Keroglou C, Hadjicostis CN (2013) Initial state opacity in stochastic DES. In: Proceedings of 18th conference on emerging technologies factory automation (ETFA), pp 1–8

Keroglou C, Hadjicostis CN (2014) Hidden Markov model classification based on empirical frequencies of observed symbols. In: Proceedings of 12th international workshop on discrete event systems (WODES), pp 7–12

Keroglou C, Hadjicostis CN (2016) Probabilistic system opacity in discrete event systems. In: Proceedings of 13th international workshop on discrete event systems (WODES), pp 379–384

Millen JK (1987) Covert channel capacity. In: Proceedings of IEEE symposium on security and privacy, pp 60–66

Neyman J, Pearson ES (1992) On the problem of the most efficient tests of statistical hypotheses. Springer, New York, pp 73–108

Saboori A, Hadjicostis CN (2007) Notions of security and opacity in discrete event systems. In: Proceedings of 46th IEEE conference on decision and control, pp 5056–5061

Saboori A, Hadjicostis CN (2011) Coverage analysis of mobile agent trajectory via state-based opacity formulations. Control Eng Pract 19(9):967–977 (Special issue on selected papers from the 2nd international workshop on dependable control of discrete systems)

Saboori A, Hadjicostis CN (2013) Verification of initial-state opacity in security applications of DES. Inf Sci 246:115–132

Saboori A, Hadjicostis CN (2014) Current-state opacity formulations in probabilistic finite automata. IEEE Trans Autom Control 59(1):120–133

Seneta E (2006) Non-negative matrices and Markov chains. Springer Series in Statistics, Berlin

Tzeng W-G (1989) The equivalence and learning of probabilistic automata. In: Proceedings of 30th annual symposium on foundations of computer science, pp 268–273

Wittbold JT, Johnson DM (1990) Information flow in nondeterministic systems. In: Proceedings of IEEE computer society symposium on research in security and privacy, pp 144–161

Wu Y-C, Lafortune S (2013) Comparative analysis of related notions of opacity in centralized and coordinated architectures. Discrete Event Dyn Syst 23(3):307–339


Additional information

This article belongs to the Topical Collection: Special Issue on Diagnosis, Opacity and Supervisory Control of Discrete Event Systems

Guest Editors: Christos G. Cassandras and Alessandro Giua

This work falls under the Cyprus Research Promotion Foundation (CRPF) Framework Programme for Research, Technological Development and Innovation 2009–2010 (CRPF’s FP 2009–2010), co-funded by the Republic of Cyprus and the European Regional Development Fund, and specifically under Grant ΤΠΕ/ΟΡΙΖΟ/0609(BE)/08. Any opinions, findings, and conclusions or recommendations expressed in this publication are those of the authors and do not necessarily reflect the views of CRPF.

Appendix A: Proof of Theorem 1


To simplify the notation, we present a proof tailored to a decision rule that we introduce and call the “empirical rule” (Section A.1); it is based on the total number of occurrences of single events. The empirical rule is useful only when the two HMMs can be statistically distinguished by counting single events alone. We illustrate the rule for single events, but it can also be applied to (finite) event sequences that can be produced by at least one of the two HMMs. Being able to distinguish the two HMMs in this way is equivalent to the statement “\(S^{(1)}\) and \(S^{(2)}\) are not probabilistically equivalent from steady state” in Theorem 1.

The statistical measure that we use is the distance in variation, which compares the expected frequencies of events against their measured frequencies. The expected frequency of an event is easy to compute and coincides with what we call the “steady-state emission probability” of that event. To prove the asymptotic tightness of the upper bound on the probability of misclassification, we use a generalisation of Hoeffding’s inequality to functions of Markov chains (Glynn and Ormoneit 2002). We apply this generalised inequality to distinguish the two enhanced HMM models defined in Section A.2, and we prove that these enhanced models are irreducible and aperiodic whenever the given HMMs are irreducible and aperiodic. The expected frequencies in the enhanced models coincide with those of the original HMMs, so they can be computed without the state explosion of the enhanced construction; this is established in Lemma 6. Finally, we combine the generalised Hoeffding’s inequality with the empirical rule to establish Theorem 1.

Most of the material in this appendix was presented in our previous work (Keroglou and Hadjicostis 2014), where we explored an empirical frequency rule for stochastic fault diagnosis. The new material consists of important proofs that were omitted from that work due to space limitations: specifically, we provide complete proofs of three important lemmas (Lemmas 4, 5, and 6), as well as the argument in Section A.5 that uses Hoeffding’s inequality to obtain an upper bound on the probability of misclassification.

A.1 Empirical rule

We define a suboptimal rule for HMM classification, which compares the fraction of times each event occurs (Definition 10) against the frequency expected for that event under each of the two HMMs, using the distance in variation (Definition 11).

Definition 10

(Fraction of times event \(e_i\) appears, \(m_n(e_i)\)). Suppose we are given an observation sequence \(\omega = \omega[1]\cdots\omega[n]\) of length n. We define \(m_n(e_i) = \frac{1}{n}\sum_{t=1}^{n} g_{e_i}(\omega[t])\), where

$$ g_{e_i}(\omega[t]) = \begin{cases} 1, & \text{if } \omega[t] = e_i,\\ 0, & \text{otherwise}. \end{cases} $$

In other words, \(m_n(e_i)\) is the fraction of times event \(e_i\) appears in observation sequence ω.
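A minimal Python sketch of Definition 10, assuming observations are given as a sequence of hashable symbols and the event set E is supplied as an ordered list; the function name `empirical_frequencies` is hypothetical. Events that never occur receive frequency 0.

```python
from collections import Counter


def empirical_frequencies(omega, events):
    """m_n = [m_n(e_1), ..., m_n(e_|E|)] as in Definition 10.

    omega  -- observed sequence omega[1..n] (any iterable of hashable symbols)
    events -- the event set E, in a fixed order
    """
    omega = list(omega)
    n = len(omega)
    if n == 0:
        raise ValueError("the observation sequence must be non-empty")
    counts = Counter(omega)  # counts[e_i] = sum over t of g_{e_i}(omega[t])
    return [counts[e] / n for e in events]


print(empirical_frequencies("aabab", ["a", "b"]))  # [0.6, 0.4]
```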

Definition 11

(Distance in Variation \(d_V(v, v')\) Between Two Probability Vectors \(v, v'\)). The distance in variation (Dembo and Zeitouni 1998) between two |E|-dimensional probability vectors \(v, v'\) is defined as

$$ d_V(v, v') = \frac{1}{2}\sum_{j=1}^{|E|} |v(j) - v'(j)|, $$

where \(v(j)\) (\(v'(j)\)) is the j-th entry of vector \(v\) (\(v'\)).

Let the stationary emission probabilities in Definition 6 for HMM \(S^{(1)}\) (respectively, \(S^{(2)}\)) be denoted by the |E|-dimensional vector \(\pi^{(1)}_e = [\pi^{(1)}_{e_1}, \ldots, \pi^{(1)}_{e_{|E|}}]^T\) (respectively, \(\pi^{(2)}_e = [\pi^{(2)}_{e_1}, \ldots, \pi^{(2)}_{e_{|E|}}]^T\)). Then, we have \(d_V(\pi^{(1)}_e, \pi^{(2)}_e) = \frac{1}{2}\sum_{j=1}^{|E|} |\pi^{(1)}_{e_j} - \pi^{(2)}_{e_j}|\).
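The distance in variation of Definition 11 is a one-line computation. The sketch below (hypothetical name `distance_in_variation`) uses made-up stationary emission vectors over E = {a, b}; they are illustrative values, not taken from the HMMs of Fig. 1.

```python
def distance_in_variation(v, w):
    """d_V(v, w) = (1/2) * sum_j |v(j) - w(j)| for two probability vectors."""
    if len(v) != len(w):
        raise ValueError("both vectors must have dimension |E|")
    return 0.5 * sum(abs(a - b) for a, b in zip(v, w))


# Hypothetical stationary emission vectors over E = {a, b}.
pi1_e = [0.5, 0.5]
pi2_e = [0.8, 0.2]
print(distance_in_variation(pi1_e, pi2_e))  # about 0.3 (floating point)
```

The factor 1/2 keeps \(d_V\) in [0, 1]: it equals 1 exactly when the two vectors have disjoint supports.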

Definition 12

(Empirical Rule). Given two irreducible and aperiodic HMMs, \(S^{(1)}\) and \(S^{(2)}\), and a sequence of observations \(\omega = \omega[1]\omega[2]\cdots\omega[n]\), we perform classification using the following suboptimal rule.

- We first compute \(m_n = [m_n(e_1), m_n(e_2), \cdots, m_n(e_{|E|})]^T\) as in Definition 10.
- We then set \(\theta = \frac{1}{2} d_V(\pi^{(1)}_e, \pi^{(2)}_e)\), where \(\pi^{(j)}_e\), j ∈ {1, 2}, is the stationary emission probability vector for \(S^{(j)}\), and compare
$$ d_V(m_n, \pi^{(1)}_e) \gtrless \theta . \qquad (4) $$
- We decide in favor of \(S^{(1)}\) (\(S^{(2)}\)) if the right (left) quantity is larger.
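The three steps of the empirical rule can be sketched in Python as follows. This is an illustration under stated assumptions: the stationary emission vectors \(\pi^{(1)}_e\), \(\pi^{(2)}_e\) are known and given as lists ordered like E, the numerical values are hypothetical, and since Definition 12 does not say what happens when \(d_V(m_n, \pi^{(1)}_e) = \theta\) exactly, the sketch returns `None` in that case.

```python
from collections import Counter


def d_v(v, w):
    """Distance in variation (Definition 11)."""
    return 0.5 * sum(abs(a - b) for a, b in zip(v, w))


def empirical_rule(omega, events, pi1_e, pi2_e):
    """Empirical rule of Definition 12.

    Returns 1 or 2 for the HMM that the rule favours, or None when
    d_V(m_n, pi1_e) == theta exactly (tie-breaking is left open here).
    """
    omega = list(omega)
    n = len(omega)
    if n == 0:
        raise ValueError("the observation sequence must be non-empty")
    counts = Counter(omega)
    m_n = [counts[e] / n for e in events]   # Definition 10
    theta = 0.5 * d_v(pi1_e, pi2_e)         # threshold theta
    left = d_v(m_n, pi1_e)                  # left-hand side of (4)
    if left < theta:
        return 1                            # right quantity larger: decide S^(1)
    if left > theta:
        return 2                            # left quantity larger: decide S^(2)
    return None


pi1_e, pi2_e = [0.5, 0.5], [0.8, 0.2]       # hypothetical emission vectors
print(empirical_rule("abab", ["a", "b"], pi1_e, pi2_e))   # 1
print(empirical_rule("aaaab", ["a", "b"], pi1_e, pi2_e))  # 2
```

Only \(\pi^{(1)}_e\) enters the comparison (4) directly; \(\pi^{(2)}_e\) is used solely to set the threshold θ halfway between the two vectors in distance-in-variation terms.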

A.2 Enhanced HMM model

In this section we define, on the states of the underlying Markov chains of the two HMMs \(S^{(1)}\) and \(S^{(2)}\), a function that counts the occurrences of each event \(e_i \in E\) by recording the event via which each state is entered. This is not possible in general in \(S^{(j)}\), j ∈ {1, 2}, because a state can be reached via different events. We need such a function of the states so that we can analyze the empirical rule (Definition 12) using existing techniques for Markov chain analysis.

First, for each of the given HMMs we obtain an enhanced construction that distinguishes transitions into the same state via different events. We prove that the enhanced construction inherits the properties of irreducibility and aperiodicity (the two conditions needed to apply Theorem 2) from the corresponding original HMM. The two enhanced HMM models are denoted by \(\widetilde{S}^{(j)} = \{\widetilde{Q}^{(j)}, E, \widetilde{\Delta}^{(j)}, \widetilde{\Lambda}^{(j)}, \widetilde{\pi_0}^{(j)}\}\), j ∈ {1, 2}. The enhanced construction creates replicas of each state, depending on the event via which the state is reached. Thus, for each state \(q_h \in Q^{(j)}\), we create states \(q_{h,e_i} \in \widetilde{Q}^{(j)}\), \(e_i \in E\), where \(q_{h,e_i}\) represents reaching state \(q_h\) under output symbol \(e_i\). Clearly, \(\widetilde{Q}^{(j)}\) has at most \(|Q^{(j)}| \times |E|\) states.

The following discussion applies to each original HMM and its enhanced model (we drop j, j ∈{1, 2}, to simplify notation). In the state probability vectors π[k], \(\widetilde {\pi }[t]\), where t is the current state epoch, states are indexed in the order shown below

The matrix \(\widetilde{A}_{e_i}\), \(e_i \in E\), satisfies \(\widetilde{A}_{e_i}(q_{h,e_i}, q_{h',e'}) = A_{e_i}(q_h, q_{h'})\) for all \(e' \in E\) and all \(q_h, q_{h'} \in Q\), with all other entries equal to zero. Likewise, for \(e' \in E\) and \(q_{h,e_i}, q_{h',e'} \in \widetilde{Q}\), we have \(\widetilde{\Lambda}(q_{h',e'}, e_i, q_{h,e_i}) = \widetilde{A}_{e_i}(q_{h,e_i}, q_{h',e'})\), and \(\widetilde{\Lambda}\) is zero otherwise. We observe that matrix \(\widetilde{A}_{e_i}\) is built from blocks of matrix \(A_{e_i}\). If we define the row vector \(R_{|E|} = \underbrace{[1\;1\;\cdots\;1]}_{|E|\text{ times}}\) and let

then the state transition matrix \(\widetilde{A}^{(j)}_{e_i}\) for the enhanced model \(\widetilde{S}^{(j)}\) can be written as (see Footnote 5)

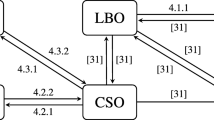
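The entrywise definition of \(\widetilde{A}_{e_i}\) given above can be turned into code directly, without committing to the Kronecker form shown in the image. The Python sketch below is an illustration under two stated assumptions: matrices are row-oriented, i.e., `A[e][h][h2]` is the probability of moving from \(q_h\) to \(q_{h2}\) while emitting e (this may be the transpose of the convention used in the paper), and the enhanced state \(q_{h,e_i}\) is stored at the hypothetical index i·|Q| + h. The function name `enhance` and the example HMM are made up.

```python
def enhance(A_by_event, events):
    """Build the enhanced matrices A~_{e_i} from the original A_{e_i}.

    Assumed (row) convention: A_by_event[e][h][h2] = Pr(q_h -> q_h2, emit e).
    Enhanced state q_{h,e_i} gets the hypothetical index i * |Q| + h.
    Rule: every copy q_{h,e'} of q_h moves to q_{h2,e_i} with probability
    A_{e_i}(q_h, q_h2); all other entries are zero.
    """
    nq, ne = len(A_by_event[events[0]]), len(events)
    size = nq * ne                                    # |Q~| = |Q| * |E|
    A_tilde = {e: [[0.0] * size for _ in range(size)] for e in events}
    for i, e in enumerate(events):
        for h in range(nq):
            for h2 in range(nq):
                for i_prev in range(ne):              # all copies of q_h behave alike
                    A_tilde[e][i_prev * nq + h][i * nq + h2] = A_by_event[e][h][h2]
    return A_tilde


# Hypothetical two-state HMM over E = {a, b}; each row of A_a + A_b sums to 1.
A = {"a": [[0.3, 0.2], [0.1, 0.4]],
     "b": [[0.4, 0.1], [0.2, 0.3]]}
A_t = enhance(A, ["a", "b"])
total = [[A_t["a"][x][y] + A_t["b"][x][y] for y in range(4)] for x in range(4)]
print([round(sum(row), 12) for row in total])  # [1.0, 1.0, 1.0, 1.0]
```

The row-sum check confirms that the enhanced chain is again stochastic: each copy \(q_{h,e'}\) simply redistributes the outgoing probability of \(q_h\) over the copies labelled by the emitted event.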

Example 2

We create the enhanced HMM models \(\widetilde{S}^{(1)}\) (shown in Fig. 3) and \(\widetilde{S}^{(2)}\) for \(S^{(1)}\) and \(S^{(2)}\) (shown in Fig. 1), respectively. We note that the underlying state transition matrix of each enhanced model is irreducible and aperiodic (as we will see, \(\widetilde{S}^{(j)}\) is irreducible and aperiodic as long as \(S^{(j)}\) is irreducible and aperiodic). The corresponding \(\widetilde{A}^{(1)}_{\alpha}, \widetilde{A}^{(1)}_{\beta}, \widetilde{A}^{(2)}_{\alpha}, \widetilde{A}^{(2)}_{\beta}\) are as follows:

Fig. 3 Enhanced model \(\widetilde{S}^{(1)}\) for HMM \(S^{(1)}\) in Fig. 1

A.3 Required conditions for using Hoeffding’s inequality on enhanced models

Proposition 1 (Hoeffding’s Inequality on Enhanced HMM Model)

Consider the enhanced HMMs \(\widetilde{S}^{(j)} = \{\widetilde{Q}^{(j)}, E, \widetilde{\Delta}^{(j)}, \widetilde{\Lambda}^{(j)}, \widetilde{\pi_0}^{(j)}\}\), j ∈ {1, 2}, with |E| events and transition matrices \(\widetilde{A}^{(j)}\). Assuming that the Markov chains corresponding to the enhanced models \(\widetilde{S}^{(j)}\), j ∈ {1, 2}, are irreducible and aperiodic, we denote their stationary distributions by \(\widetilde{\pi}^{(j)} > 0\) and their stationary emission distributions for events \(e_i \in E\) by \(\widetilde{\pi}^{(j)}_e > 0\).

Using the enhanced models (\(\widetilde{S}^{(1)}\) and \(\widetilde{S}^{(2)}\)), for each \(e_i \in E\) we define the indicator function \(f_{e_i}(q_{h,e_j})\), \(\forall q_{h,e_j} \in \widetilde{Q}\), as

$$ f_{e_i}(q_{h,e_j}) = \begin{cases} 1, & \text{if } e_j = e_i,\\ 0, & \text{otherwise}. \end{cases} $$

Let \(m_n(e_i) = \frac{1}{n}\sum_{t=1}^{n} f_{e_i}(q[t])\), so that the |E|-dimensional vector \(m_n = [m_n(e_1), m_n(e_2), \ldots, m_n(e_{|E|})]^T\) collects the empirical frequencies with which each event appears in the given observation window of length n. Let \(M_j\) be the smallest integer such that \((\widetilde{A}^{(j)})^{M_j} > 0\) element-wise, and let \(\lambda_j = \min_{l,l'} \left\{ \frac{(\widetilde{A}^{(j)})^{M_j}(l,l')}{\widetilde{\pi}^{(j)}(l)} \right\}\), where \(\widetilde{\pi}^{(j)}(l)\) is the l-th entry of the stationary distribution of \(\widetilde{S}^{(j)}\). As long as the enhanced model \(\widetilde{S}^{(j)}\) is irreducible and aperiodic, it can be shown (Glynn and Ormoneit 2002; Hadjicostis 2005) that the following holds for \(n > \frac{2M_j}{\lambda_j \epsilon}\), for each event \(e_i\) (1 ≤ i ≤ |E|), and for \(F^{(j)}(n) = \exp\left(-\frac{\lambda_j^2 \left(n\epsilon - \frac{2M_j}{\lambda_j}\right)^2}{2n M_j^2}\right)\):

$$ \Pr\left( m_n(e_i) - \widetilde{\pi}^{(j)}_e(e_i) \geq \epsilon \;\middle|\; \widetilde{S}^{(j)} \right) \leq F^{(j)}(n). \qquad (5) $$

In order to use Eq. 5, we need \(\widetilde{S}^{(j)}\) to correspond to an irreducible (Definition 3) and aperiodic (Definition 4) Markov chain. We now show that \(\widetilde{S}^{(j)}\) is irreducible and aperiodic if \(S^{(j)}\) is irreducible and aperiodic. We also establish that \(\widetilde{\pi}^{(j)}_e = \pi^{(j)}_e\).
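For a concrete chain, the quantities \(M_j\), \(\lambda_j\) and \(F^{(j)}(n)\) in Proposition 1 can be computed directly. The Python sketch below assumes a row-stochastic transition matrix (entry [x][y] is the probability of moving from state x to state y, which may be the transpose of the convention used in the paper, so λ divides by the stationary mass of the destination state), finds \(M_j\) by taking powers up to Wielandt's bound \((N-1)^2+1\) for an N-state primitive matrix, and obtains the stationary distribution by power iteration. Function names are hypothetical; for \(n \le 2M_j/(\lambda_j\epsilon)\) the sketch returns the trivial bound 1.

```python
import math


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def stationary(P, iters=100_000, tol=1e-14):
    """Stationary distribution of a primitive row-stochastic P (power iteration)."""
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(iters):
        nxt = [sum(pi[x] * P[x][y] for x in range(n)) for y in range(n)]
        if max(abs(a - b) for a, b in zip(nxt, pi)) < tol:
            return nxt
        pi = nxt
    return pi


def smallest_positive_power(P):
    """Smallest M with P^M > 0 element-wise, searched up to Wielandt's bound."""
    n = len(P)
    PM = P
    for M in range(1, (n - 1) ** 2 + 2):
        if all(p > 0 for row in PM for p in row):
            return M, PM
        PM = matmul(PM, P)
    raise ValueError("matrix is not primitive")


def hoeffding_F(P, eps, n):
    """F(n) = exp(-lam^2 (n eps - 2M/lam)^2 / (2 n M^2)), valid for n > 2M/(lam eps)."""
    M, PM = smallest_positive_power(P)
    pi = stationary(P)
    size = len(P)
    # Row convention: PM[x][y] is an M-step probability into destination y.
    lam = min(PM[x][y] / pi[y] for x in range(size) for y in range(size))
    if n <= 2 * M / (lam * eps):
        return 1.0  # inequality not applicable yet; 1 is the trivial bound
    return math.exp(-lam ** 2 * (n * eps - 2 * M / lam) ** 2 / (2 * n * M ** 2))


P = [[0.5, 0.5], [0.5, 0.5]]
print(hoeffding_F(P, 0.1, 1000))  # about 0.0082
```

For this two-state chain with all entries 0.5, M = 1 and λ = 1, so with ε = 0.1 and n = 1000 the bound is \(\exp(-98^2/2000) = \exp(-4.802)\). The bound decays exponentially in n once n exceeds \(2M/(\lambda\epsilon)\).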

Lemma 4

If HMM \(S^{(j)}=(Q^{(j)}, E^{(j)}, \Delta ^{(j)}, \Lambda ^{(j)}, \pi ^{(j)}_{0})\) is irreducible (Definition 3), then the enhanced HMM \(\widetilde {S}^{(j)}= \{\widetilde {Q}^{(j)}, E, \widetilde {\Delta }^{(j)},\allowbreak \widetilde {\Lambda }^{(j)}, \widetilde {\pi _{0}}^{(j)} \}\) is also irreducible.

Proof

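We prove irreducibility by establishing that any state \(q_{h,e_i} \in \widetilde{Q}^{(j)}\) that does not belong to the set of strongly connected states (Definition 3) may have outgoing transitions but has no incoming transitions. Consider in the enhanced model the set of states

$$ \widetilde{Q}_{ss} = \{ q_{m,e} \in \widetilde{Q} \mid \exists\, q_{m''} \in Q \text{ such that } \Lambda(q_{m''}, e, q_m) > 0 \}, $$

i.e., the enhanced states that have at least one incoming transition.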

Since the set of states Q in the original system is strongly connected, we can easily show that the states in \(\widetilde{Q}_{ss}\) are strongly connected: given \(q_{m,e}, q_{m',e'} \in \widetilde{Q}_{ss}\), we can find a path connecting them as follows. Let \(q_{m''}\) be such that \(\Lambda(q_{m''}, e', q_{m'}) > 0\). Then, we can find a path

(because the original HMM is irreducible). Therefore

is a path that connects \(q_{m,e} \in \widetilde{Q}\) to \(q_{m',e'} \in \widetilde{Q}\). We conclude that the states that do not belong to the set of strongly connected states have only outgoing transitions. Therefore, by choosing an appropriate initial distribution that excludes all these transient states (Footnote 6), we can ensure that none of them is ever visited. □
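The set \(\widetilde{Q}_{ss}\) and its strong connectivity can be checked mechanically on small examples. The Python sketch below is an illustration, not part of the proof. It assumes a row convention (`A[e][h][h2]` is the probability of moving from \(q_h\) to \(q_{h2}\) while emitting e) and the hypothetical index i·|Q| + h for \(q_{h,e_i}\), takes \(\widetilde{Q}_{ss}\) to be the enhanced states with at least one incoming transition, and checks strong connectivity by breadth-first search on the induced subgraph and on its reverse. In the made-up HMM used here, \(q_0\) is never entered via event b, so \(q_{0,b}\) is transient.

```python
from collections import deque


def enhanced_chain(A_by_event, events):
    """Total transition matrix sum_e A~_e of the enhanced model.

    Assumed row convention: A_by_event[e][h][h2] = Pr(q_h -> q_h2, emit e);
    enhanced state q_{h,e_i} has the hypothetical index i * |Q| + h.
    """
    nq, ne = len(A_by_event[events[0]]), len(events)
    P = [[0.0] * (nq * ne) for _ in range(nq * ne)]
    for i, e in enumerate(events):
        for h in range(nq):
            for h2 in range(nq):
                for i_prev in range(ne):
                    P[i_prev * nq + h][i * nq + h2] = A_by_event[e][h][h2]
    return P


def reach(adj, root):
    """Set of vertices reachable from root (breadth-first search)."""
    seen, queue = {root}, deque([root])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in seen:
                seen.add(v)
                queue.append(v)
    return seen


def q_ss_strongly_connected(P):
    """Return (Q_ss, ok): Q_ss = states with an incoming transition, ok = True
    iff the subgraph induced by Q_ss is strongly connected."""
    n = len(P)
    q_ss = {y for y in range(n) if any(P[x][y] > 0 for x in range(n))}
    fwd = {x: [y for y in q_ss if P[x][y] > 0] for x in q_ss}
    bwd = {y: [x for x in q_ss if P[x][y] > 0] for y in q_ss}
    root = min(q_ss)
    return q_ss, reach(fwd, root) == q_ss and reach(bwd, root) == q_ss


# Hypothetical HMM in which q_0 is never entered via event b.
A = {"a": [[0.5, 0.25], [0.5, 0.0]],
     "b": [[0.0, 0.25], [0.0, 0.5]]}
q_ss, ok = q_ss_strongly_connected(enhanced_chain(A, ["a", "b"]))
print(sorted(q_ss), ok)  # [0, 1, 3] True  (index 2 = q_{0,b} is transient)
```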

Lemma 5

If HMM \(S^{(j)}=(Q^{(j)}, E^{(j)}, \Delta ^{(j)}, \Lambda ^{(j)}, \pi ^{(j)}_{0})\) is aperiodic (Definition 4), then the enhanced HMM \(\widetilde {S}^{(j)}= \{\widetilde {Q}^{(j)}, E, \widetilde {\Delta }^{(j)},\allowbreak \widetilde {\Lambda }^{(j)}, \widetilde {\pi _{0}}^{(j)} \}\) is also aperiodic.

Proof

We show that if the enhanced model \(\widetilde{S}^{(j)}\) were periodic with period k > 1, this would contradict the fact that \(S^{(j)}\) is aperiodic. Suppose that \(\widetilde{S}^{(j)}\) is periodic with period k > 1 (Definition 4 and Lemma 2). Then we can partition the states of \(\widetilde{S}^{(j)}\) into k groups \(\widetilde{C}_1, \widetilde{C}_2, \ldots, \widetilde{C}_k\) such that, for a state \(q_{l,e} \in \widetilde{C}_m\), one-step transitions lead only to states in \(\widetilde{C}_{m'}\), where \(m' = (m \bmod k) + 1\).

Due to the construction of the enhanced models, the outgoing behaviour of the states \(q_{l,e}\), for every e ∈ E, is a copy of the outgoing behaviour of \(q_l \in Q\). Hence, if \(q_{l,e} \in \widetilde{Q}\) belongs to \(\widetilde{C}_m\), then \(q_{l,e'} \in \widetilde{Q}\) also belongs to \(\widetilde{C}_m\) for all e, e' ∈ E, since both states have the same successors, all of which lie in \(\widetilde{C}_{m'}\). Thus, we can also group the states q ∈ Q into classes \(C_i\), i ∈ {1, 2, ..., k}, with the same cyclic structure, so \(S^{(j)}\) is periodic with period k, which is a contradiction. □
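Aperiodicity can likewise be checked numerically on small examples. The sketch below computes the period of a strongly connected chain with a standard technique (not taken from the paper): label states by breadth-first-search depth from a root, then take the gcd of level[u] + 1 - level[v] over all edges (u, v). The matrices are hypothetical: `P_orig` is a two-state original chain and `P_enh` its enhanced chain written out by hand (row convention, hypothetical index i·|Q| + h for \(q_{h,e_i}\)), evaluated on its non-transient states {0, 1, 3}. The last call shows a period-2 chain for contrast.

```python
from collections import deque
from math import gcd


def period(P, states):
    """Period of the chain P restricted to `states` (assumed strongly connected).

    BFS levels from a root; the period is the gcd, over all edges (u, v)
    inside `states`, of level[u] + 1 - level[v].
    """
    states = sorted(states)
    level = {states[0]: 0}
    queue = deque([states[0]])
    while queue:
        u = queue.popleft()
        for v in states:
            if P[u][v] > 0 and v not in level:
                level[v] = level[u] + 1
                queue.append(v)
    g = 0
    for u in states:
        for v in states:
            if P[u][v] > 0:
                g = gcd(g, level[u] + 1 - level[v])  # math.gcd returns a non-negative value
    return g


# Hypothetical HMM with A_a = [[0.5, 0.25], [0.5, 0.0]], A_b = [[0.0, 0.25], [0.0, 0.5]].
P_orig = [[0.5, 0.5], [0.5, 0.5]]          # A_a + A_b
P_enh = [[0.5, 0.25, 0.0, 0.25],           # q_{0,a}: copy of q_0
         [0.5, 0.0, 0.0, 0.5],             # q_{1,a}: copy of q_1
         [0.5, 0.25, 0.0, 0.25],           # q_{0,b}: transient copy of q_0
         [0.5, 0.0, 0.0, 0.5]]             # q_{1,b}: copy of q_1
print(period(P_orig, {0, 1}), period(P_enh, {0, 1, 3}))  # 1 1
print(period([[0.0, 1.0], [1.0, 0.0]], {0, 1}))          # 2
```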

A.4 Consistency of stationary emission probabilities for \(S^{(j)}\) and \(\widetilde{S}^{(j)}\)

We now show that, in the enhanced model \(\widetilde{S}^{(j)}\), the stationary emission probability of each event coincides with that of the original model \(S^{(j)}\), for j = 1, 2.

Lemma 6

The stationary emission probability vector \(\widetilde{\pi}^{(j)}_e\) of the enhanced model \(\widetilde{S}^{(j)}\), j ∈ {1, 2}, is identical to the stationary emission probability vector \(\pi^{(j)}_e\) of \(S^{(j)}\).

Proof

Let \(\widetilde{\pi}^{(j)}_s\) denote the steady-state distribution vector of the enhanced model \(\widetilde{S}^{(j)}\). Then, under each model we have

For \(S^{(j)}\) and \(\forall e_i \in E\), the stationary emission probability \(\pi^{(j)}_e(e_i)\) can be expressed as

whereas for the enhanced model \(\widetilde{S}^{(j)}\) (Footnote 7) it can be expressed as

Moreover, we have

which allows us to conclude that \(\pi^{(j)}_e(e_i) = \widetilde{\pi}^{(j)}_e(e_i)\), \(\forall e_i \in E\). □
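Lemma 6 can be illustrated numerically (this is not a proof). The Python sketch below assumes that the stationary emission probability of Definition 6 takes the form \(\pi_e(e) = \sum_h \pi(h) \sum_{h'} A_e(q_h, q_{h'})\) in a row-stochastic convention, computes it for a hypothetical two-state HMM and for its enhanced model (hypothetical index i·|Q| + h for \(q_{h,e_i}\)), and shows that the two vectors coincide. Stationary distributions are obtained by power iteration, which assumes a single aperiodic recurrent class; the enhanced model here has one transient state, whose mass vanishes after one step.

```python
def stationary(P, iters=100_000, tol=1e-14):
    """Power iteration; assumes a single recurrent class that is aperiodic."""
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(iters):
        nxt = [sum(pi[x] * P[x][y] for x in range(n)) for y in range(n)]
        if max(abs(a - b) for a, b in zip(nxt, pi)) < tol:
            return nxt
        pi = nxt
    return pi


def emission_probabilities(A_by_event, events):
    """pi_e(e) = sum_h pi(h) * sum_h2 A_e[h][h2] (row convention)."""
    nq = len(A_by_event[events[0]])
    P = [[sum(A_by_event[e][h][h2] for e in events) for h2 in range(nq)]
         for h in range(nq)]
    pi = stationary(P)
    return [sum(pi[h] * sum(A_by_event[e][h]) for h in range(nq)) for e in events]


def enhance(A_by_event, events):
    """Enhanced matrices A~_e; state q_{h,e_i} has hypothetical index i*|Q| + h."""
    nq, ne = len(A_by_event[events[0]]), len(events)
    size = nq * ne
    A_t = {e: [[0.0] * size for _ in range(size)] for e in events}
    for i, e in enumerate(events):
        for h in range(nq):
            for h2 in range(nq):
                for i_prev in range(ne):
                    A_t[e][i_prev * nq + h][i * nq + h2] = A_by_event[e][h][h2]
    return A_t


A = {"a": [[0.5, 0.25], [0.5, 0.0]],   # hypothetical HMM
     "b": [[0.0, 0.25], [0.0, 0.5]]}
events = ["a", "b"]
print(emission_probabilities(A, events))                   # [0.625, 0.375]
print(emission_probabilities(enhance(A, events), events))  # [0.625, 0.375]
```

Because the enhanced model is itself a collection of event-labelled matrices, the same function `emission_probabilities` serves for both models.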

A.5 Upper bound on the probability of error

Given two HMMs \(S^{(1)}\) and \(S^{(2)}\) (each irreducible and aperiodic), we construct the corresponding enhanced HMM models \(\widetilde{S}^{(1)}\) and \(\widetilde{S}^{(2)}\), with underlying irreducible and aperiodic Markov chains \(\widetilde{MC}^{(1)} = (\widetilde{Q}^{(1)}, \widetilde{A}^{(1)}, \widetilde{\pi_0}^{(1)})\) and \(\widetilde{MC}^{(2)} = (\widetilde{Q}^{(2)}, \widetilde{A}^{(2)}, \widetilde{\pi_0}^{(2)})\) (i.e., \(\widetilde{A}^{(1)}\) and \(\widetilde{A}^{(2)}\) are primitive matrices). Suppose that \(d_V(\widetilde{\pi}^{(1)}_e, \widetilde{\pi}^{(2)}_e) > 0\) (Footnote 8). Then, applying the empirical rule together with Hoeffding’s inequality (Proposition 1), we obtain an upper bound on the probability of error of the empirical rule, where F(n) is given by

Proof

We consider two error cases:

- Case 1: decide \(S^{(1)}\) when the system is \(S^{(2)}\);
- Case 2: decide \(S^{(2)}\) when the system is \(S^{(1)}\).

Case 1:

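The decision of \(S^{(1)}\) is equivalent to the event

$$ H^{(1)}: \; d_V(m_n, \pi^{(1)}_e) < \theta, $$

which necessarily implies \(d_V(m_n, \pi^{(2)}_e) \geq \theta\) (for \(\theta = \frac{1}{2} d_V(\pi^{(1)}_e, \pi^{(2)}_e)\)). Otherwise, we reach a contradiction, because \(d_V(m_n, \pi^{(2)}_e) < \theta\) and \(d_V(m_n, \pi^{(1)}_e) < \theta\) would imply, by the triangle inequality,

$$ d_V(\pi^{(1)}_e, \pi^{(2)}_e) \leq d_V(m_n, \pi^{(1)}_e) + d_V(m_n, \pi^{(2)}_e) < 2\theta = d_V(\pi^{(1)}_e, \pi^{(2)}_e). $$

Thus, \(H^{(1)}\) implies \(d_V(m_n, \pi^{(2)}_e) \geq \theta\), i.e., \(\sum_{j=1}^{|E|} |m_n(e_j) - \pi^{(2)}_e(e_j)| \geq 2\theta\); since at least one of the |E| terms must then be at least \(2\theta/|E| > \theta/|E|\), this implies

$$ H^{(1)}_k: \; \text{there exists at least one } e_k \in E \text{ such that } |m_n(e_k) - \pi^{(2)}_e(e_k)| > \frac{\theta}{|E|}. $$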

Therefore, we have to consider two different subcases:

- a) \(m_n(e_k) - \pi^{(2)}_e(e_k) > 0\);
- b) \(m_n(e_k) - \pi^{(2)}_e(e_k) < 0\).

The probability of error for Case 1 and subcase a), after n observations, is

where \(\Pr(H^{(1)}_k \mid S^{(2)}) \equiv \Pr\left(m_n(e_k) - \pi^{(2)}_e(e_k) > \frac{\theta}{|E|} \;\middle|\; S^{(2)}\right) \leq F^{(2)}(n)\), for \(\epsilon = \frac{\theta}{|E|}\).

In Case 1a) we can apply Eq. 5 immediately. In Case 1b), in order to obtain a positive deviation, we instead count the appearances of all events in \(k^c = \{e \in E \mid e \neq e_k\}\), i.e., all possible events except \(e_k \in E\). Then \(m_n(e_{k^c}) - \pi^{(2)}_e(e_{k^c}) = (1 - m_n(e_k)) - (1 - \pi^{(2)}_e(e_k)) = \pi^{(2)}_e(e_k) - m_n(e_k) > \frac{\theta}{|E|}\), and we can apply (5), which leads to the same bound.

Case 2:

With the same reasoning as in Case 1, we establish the following inequality

where \(H^{(2)}: d_V(m_n, \pi^{(1)}_e) > \theta\), which implies \(H^{(2)}_{k'}\): {there exists at least one \(e_{k'} \in E\) such that \(|m_n(e_{k'}) - \pi^{(1)}_e(e_{k'})| > \frac{\theta}{|E|}\)}. The claim follows using similar arguments as in Case 1.

Finally, we prove that

In other words, if the two HMMs have different expected frequencies for at least one single event, the empirical rule distinguishes them asymptotically. The result extends in the same way to event sequences that have different expected frequencies. Since the expected frequency of an event sequence equals its probability of occurrence when the HMM starts from steady state, the existence of at least one event sequence with different expected frequencies is equivalent to the two HMMs not being probabilistically equivalent from steady state. This concludes the proof. □


Cite this article

Keroglou, C., Hadjicostis, C.N. Probabilistic system opacity in discrete event systems. Discrete Event Dyn Syst 28, 289–314 (2018). https://doi.org/10.1007/s10626-017-0263-8


DOI: https://doi.org/10.1007/s10626-017-0263-8