Abstract

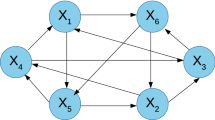

Estimation of distribution algorithms (EDAs) are a class of evolutionary algorithms used as an alternative to genetic algorithms. Such algorithms build a probabilistic model of promising solutions and sample the new generation from that model, so that the model guides exploration of the search space. In the Bayesian optimization algorithm (BOA), a Bayesian network is built from the set of promising solutions and new solutions are sampled from that network. This paper proposes a novel real-coded stochastic BOA for continuous global optimization that uses a stochastic Bayesian network. In the proposed algorithm, the Bayesian network has a stochastic structure (each edge in the network has an associated probability distribution), and the new generation is sampled from this stochastic structure. To generate a new solution, a structure, and hence a Bayesian network, is first sampled from the current stochastic structure; the new solution is then produced from the sampled Bayesian network. Because the sampling phase uses a stochastic structure, each sample can be generated from a different structure, so different dependency structures can be preserved. Before a new generation is produced, the probability distributions of the stochastic network are updated according to the fitness evaluation of the current generation. The proposed method can thus exploit different dependency structures during sampling while maintaining only a single stochastic structure. The experimental results reported in this paper show that the proposed algorithm improves solution quality on general optimization benchmark problems.
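The loop described in the abstract (sample a structure, sample a solution from it, then update the edge probabilities from fitness) can be illustrated with a minimal Python sketch. This is not the authors' implementation, and several choices are assumptions made only for illustration: each edge carries a single Bernoulli inclusion probability, a fixed variable ordering keeps every sampled graph acyclic, the sampled network is a linear Gaussian model fitted by least squares, and the update rule moves each edge probability toward the edge frequency among the fittest samples. All function names, parameters, and the sphere test function are hypothetical.

```python
import numpy as np

def sample_structure(edge_prob, rng):
    """Draw one DAG: edge i -> j (i < j) is kept with probability edge_prob[i, j]."""
    n = edge_prob.shape[0]
    draws = rng.random((n, n)) < edge_prob
    return np.triu(draws, k=1)  # upper triangle only, so the graph is acyclic

def sample_solution(structure, data, rng):
    """Ancestral sampling from a linear Gaussian network fitted to `data`."""
    n = structure.shape[0]
    x = np.zeros(n)
    for j in range(n):  # index order is a topological order by construction
        pa = np.flatnonzero(structure[:, j])
        design = np.column_stack([np.ones(len(data)), data[:, pa]])
        coef, *_ = np.linalg.lstsq(design, data[:, j], rcond=None)
        sigma = (data[:, j] - design @ coef).std() + 1e-12
        x[j] = rng.normal(coef[0] + x[pa] @ coef[1:], sigma)
    return x

def update_edge_prob(edge_prob, structures, fitness, rate=0.1, top=0.3):
    """Assumed update rule: shift probabilities toward edges used by the best samples."""
    k = max(1, int(top * len(fitness)))
    best = np.argsort(fitness)[:k]  # minimisation: smaller fitness is better
    freq = np.mean([structures[i] for i in best], axis=0)
    new_prob = (1 - rate) * edge_prob + rate * freq
    return np.triu(np.clip(new_prob, 0.05, 0.95), k=1)

def sphere(x):
    """Toy objective used only for this illustration."""
    return float(np.sum(x ** 2))

def run(dim=5, pop=60, generations=30, seed=0):
    rng = np.random.default_rng(seed)
    population = rng.uniform(-5, 5, size=(pop, dim))
    edge_prob = np.triu(np.full((dim, dim), 0.5), k=1)
    best_history = []
    for _ in range(generations):
        fit = np.array([sphere(x) for x in population])
        best_history.append(fit.min())
        selected = population[np.argsort(fit)[: pop // 2]]
        # Each offspring is generated from its own sampled structure.
        structures = [sample_structure(edge_prob, rng) for _ in range(pop)]
        offspring = np.array([sample_solution(s, selected, rng) for s in structures])
        off_fit = np.array([sphere(x) for x in offspring])
        edge_prob = update_edge_prob(edge_prob, structures, off_fit)
        # Elitist replacement: keep the best `pop` of parents and offspring.
        merged = np.vstack([population, offspring])
        merged_fit = np.concatenate([fit, off_fit])
        population = merged[np.argsort(merged_fit)[:pop]]
    return population, edge_prob, best_history
```

Calling `run()` returns the final population, the learned edge-probability matrix, and the best fitness recorded at the start of each generation. The fixed ordering is a simplification that avoids cycle checks; a fuller treatment would need to sample or learn the ordering as well.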

Cite this article

Moradabadi, B., Ebadzadeh, M.M. & Meybodi, M.R. A new real-coded stochastic Bayesian optimization algorithm for continuous global optimization. Genet Program Evolvable Mach 17, 145–167 (2016). https://doi.org/10.1007/s10710-015-9255-3

DOI: https://doi.org/10.1007/s10710-015-9255-3