Abstract

The objective of the present work is to provide a detailed review of expressive speech synthesis (ESS). Among the various approaches to ESS, this paper focuses on the development of ESS systems by explicit control. In this approach, ESS is achieved by modifying the parameters of neutral speech synthesized from text. The paper reviews work addressing the issues involved in developing ESS systems by explicit control. The review covers the main approaches to text-to-speech synthesis, studies on the analysis and estimation of expressive parameters, and studies on methods for incorporating these parameters. Finally, the review concludes by outlining the scope for future work on ESS by explicit control.
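To make "modifying the parameters of the neutral speech" concrete, the sketch below applies rule-style scaling to a neutral prosody description: it shifts the mean F0 of voiced frames, stretches the F0 range around that mean, and scales phone durations and frame energy. It is a minimal sketch under stated assumptions: prosody is assumed to be a frame-level F0 contour (0.0 for unvoiced frames), per-phone durations and frame-level energy; the class names, function name and factor values are hypothetical and are not taken from any of the reviewed systems. It only changes parameter values; a real system would still need a signal-level modification method (for example a pitch-synchronous technique such as Moulines and Charpentier 1990) to render them as speech.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ProsodyParams:
    """Prosodic parameters of one utterance (hypothetical representation)."""
    f0_hz: List[float]         # frame-level F0 contour; 0.0 marks an unvoiced frame
    durations_ms: List[float]  # per-phone durations in milliseconds
    energy: List[float]        # frame-level energy on a linear scale


@dataclass
class ExpressiveFactors:
    """Modification factors; the values used below are illustrative only."""
    f0_mean_scale: float   # multiplies the mean F0 of voiced frames
    f0_range_scale: float  # multiplies F0 excursions around that mean
    duration_scale: float  # > 1 lengthens phones (slower), < 1 shortens them (faster)
    energy_scale: float    # multiplies frame energy


def apply_explicit_control(neutral: ProsodyParams, k: ExpressiveFactors) -> ProsodyParams:
    """Map neutral prosody to target prosody by scaling (parameter level only)."""
    voiced = [f for f in neutral.f0_hz if f > 0.0]
    mean_f0 = sum(voiced) / len(voiced) if voiced else 0.0
    target_mean = mean_f0 * k.f0_mean_scale

    # Move the contour to the new mean and stretch its range; unvoiced frames stay 0.0.
    # max(..., 1.0) stops a large range scale from pushing a voiced frame to <= 0 Hz.
    new_f0 = [
        max(target_mean + k.f0_range_scale * (f - mean_f0), 1.0) if f > 0.0 else 0.0
        for f in neutral.f0_hz
    ]
    new_durations = [d * k.duration_scale for d in neutral.durations_ms]
    new_energy = [e * k.energy_scale for e in neutral.energy]
    return ProsodyParams(new_f0, new_durations, new_energy)


# Usage with made-up numbers (not measurements from any study).
neutral = ProsodyParams(
    f0_hz=[0.0, 120.0, 130.0, 110.0, 0.0],
    durations_ms=[80.0, 120.0],
    energy=[1.0, 1.0, 1.0, 1.0, 1.0],
)
factors = ExpressiveFactors(f0_mean_scale=1.25, f0_range_scale=1.5,
                            duration_scale=0.9, energy_scale=1.2)
expressive = apply_explicit_control(neutral, factors)
print(expressive.f0_hz)  # [0.0, 150.0, 165.0, 135.0, 0.0]
```

Separating mean and range scaling mirrors the common observation that emotions differ in both F0 level and F0 excursion; which factors to use, and at what granularity (utterance, word, syllable), is exactly what the analysis studies reviewed in this paper try to estimate.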


Notes

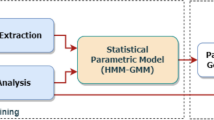

In contrast with HMM-based speech recognition, HMM-based speech synthesis uses hidden semi-Markov models (HSMMs), which model state durations explicitly, to represent the speech parameters of each sound unit (King 2011). Throughout this paper, the term HMM refers to the HSMMs used for speech synthesis.
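The difference between the two model types lies in state duration. In a conventional HMM, a state with self-transition probability a has an implicit geometric duration distribution, P(d) = a^(d-1)(1 - a), with mean 1/(1 - a) frames, so a one-frame stay is always the most probable. An HSMM instead attaches an explicit duration distribution to each state, commonly a Gaussian in HMM-based synthesis. The sketch below assumes Gaussian state durations with means m_k and variances σ_k² and uses the scheme d_k = m_k + ρσ_k², where ρ is derived from a requested total length T (ρ = 0 gives the mean durations). The function names are hypothetical, and this is an illustration rather than the implementation of any particular system cited here.

```python
from typing import List, Optional


def hmm_duration_pmf(d: int, self_loop_prob: float) -> float:
    """P(duration = d frames) for a conventional HMM state: geometric, a^(d-1) * (1 - a)."""
    return self_loop_prob ** (d - 1) * (1.0 - self_loop_prob)


def hmm_mean_duration(self_loop_prob: float) -> float:
    """Mean of that geometric distribution, 1 / (1 - a) frames."""
    return 1.0 / (1.0 - self_loop_prob)


def hsmm_state_durations(means: List[float], variances: List[float],
                         total_frames: Optional[float] = None) -> List[int]:
    """Choose per-state durations from explicit Gaussian duration models.

    d_k = m_k + rho * sigma_k^2, with rho = (T - sum m_k) / sum sigma_k^2 when a
    total length T is requested, else rho = 0 (each state takes its mean duration).
    """
    if total_frames is None:
        rho = 0.0
    else:
        rho = (total_frames - sum(means)) / sum(variances)
    # Round to whole frames and keep at least one frame per state; after rounding
    # the sum may differ slightly from total_frames.
    return [max(1, round(m + rho * v)) for m, v in zip(means, variances)]


print(hsmm_state_durations([3.0, 5.0, 2.0], [1.0, 2.0, 1.0], total_frames=14))  # [4, 7, 3]
```

Because ρ distributes extra (or fewer) frames in proportion to each state's variance, a single scalar adjusts speaking rate while stretching the most variable states most, which is one reason explicit duration models are convenient when durations must be controlled for expressive synthesis.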

References

Atal, B. S., & Hanauer, S. L. (1971). Speech analysis and synthesis by linear prediction of the speech wave. The Journal of the Acoustical Society of America, 50, 637–655.

Badin, P., & Fant, G. (1984). Notes on vocal tract computation (Tech. rep.). STL-QPSR.

Fairbanks, G., & Hoaglin, L. W. (1941). An experimental study of duration characteristics of voice during the expression of emotion. Speech Monographs, 8, 85–90.

Barra-Chicote, R., Yamagishi, J., King, S., Montero, J. M., & Macias-Guarasa, J. (2010). Analysis of statistical parametric and unit selection speech synthesis systems applied to emotional speech. Speech Communication, 52(5), 394–404.

Beautemps, D., Badin, P., & Bailly, G. (2001). Linear degrees of freedom in speech production: analysis of cineradio- and labio-films data for a reference subject, and articulatory-acoustic modeling. The Journal of the Acoustical Society of America, 109(5), 2165–2180.

Black, A. W., & Campbell, N. (1995). Optimising selection of units from speech database. In Proc. EUROSPEECH.

Bulut, M., & Narayanan, S. (2008). On the robustness of overall F0-only modifications to the perception of emotions in speech. The Journal of the Acoustical Society of America, 123, 4547–4558.

Burkhardt, F., & Sendlmeier, W. F. (2000). Verification of acoustical correlates of emotional speech using formant synthesis. In Proc. ISCA workshop on speech & emotion (pp. 151–156).

Burkhardt, F., Paeschke, A., Rolfes, M., Sendlmeier, W., & Weiss, B. (2005). A database of German emotional speech. In Proc. INTERSPEECH (pp. 1517–1520).

Cabral, J. P. (2006). Transforming prosody and voice quality to generate emotions in speech. Master’s thesis, L2F-Spoken Language Systems Lab, Lisboa, Portugal.

Cabral, J. P., & Oliveira, L. C. (2006a). Emo voice: a system to generate emotions in speech. In Proc. INTERSPEECH.

Cabral, J. P., & Oliveira, L. C. (2006b). Pitch-synchronous time-scaling for prosodic and voice quality transformations. In Proc. INTERSPEECH.

Cabral, J. P., Renals, S., Yamagishi, J., & Richmond, K. (2011). HMM-based speech synthesiser using the LF-model of the glottal source. In Proc. ICASSP.

Cahn, J. E. (1989). Generation of affect in synthesized speech. In Proc. American voice I/O society.

Campbell, N. (2004). Developments in corpus-based speech synthesis: approaching natural conversational speech. IEICE Transactions, 87, 497–500.

Campbell, N. (2006). Conversational speech synthesis and the need for some laughter. IEEE Transactions on Audio, Speech, and Language Processing, 14(4), 1171–1179.

Campbell, N., Hamza, W., Höge, H., & Tao, J. (2006). Editorial special section on expressive speech synthesis. IEEE Transactions on Audio, Speech, and Language Processing, 14, 1097–1098.

Carlson, R., Sigvardson, T., & Sjölander, A. (2002). Data-driven formant synthesis (Tech. rep.). TMH-QPSR.

Clark, R. A. J., Richmond, K., & King, S. (2007). Multisyn: open-domain unit selection for the Festival speech synthesis system. Speech Communication, 49, 317–330.

Courbon, J. L., & Emerard, F. (1982). A text to speech machine by synthesis from diphones. In Proc. ICASSP.

Deller, J. R., Proakis, J. G., & Hansen, J. H. L. (1993). Discrete-time processing of speech signals. New York: Macmillan.

Drioli, C., Tisato, G., Cosi, P., & Tesser, F. (2003). Emotions and voice quality: experiments with sinusoidal modeling. In Proc. ITRW VOQUAL’03 (pp. 127–132).

Dudley, H. (1939). The vocoder (Tech. rep.). Bell Laboratories.

Dunn, H. K. (1950). The calculation of vowel resonances, and an electrical vocal tract. The Journal of the Acoustical Society of America, 22, 740–753.

Engwall, O. (1999). Modeling of the vocal tract in three dimensions. In Proc. EUROSPEECH.

Erickson, D. (2005). Expressive speech: production, perception and application to speech synthesis. Acoustical Science and Technology, 26(4), 317–325.

Erickson, D., Shochi, T., Menezes, C., Kawahara, H., & Sakakibara, K.-I. (2008). Some non-F0 cues to emotional speech: an experiment with morphing. In Proc. speech prosody (pp. 677–680).

Fairbanks, G., & Hoaglin, L. W. (1939). An experimental study of pitch characteristics of voice during the expression of emotion. Speech Monographs, 6, 87–104.

Fant, G. (1960). Acoustic theory of speech production. 's-Gravenhage: Mouton & Co.

Fant, G., Liljencrants, J., & Lin, Q. (1985). A four parameter model of glottal flow. STL-QPSR, 26(4), 1–13.

Farrus, M., & Hernando, J. (2009). Using jitter and shimmer in speaker verification. IET Signal Processing, 3(4), 247–257.

Fernandez, R., & Ramabhadran, B. (2007). Automatic exploration of corpus specific properties for expressive text-to-speech: a case study in emphasis. In Proc. ISCA workshop on speech synthesis (pp. 34–39).

Gauffin, J., & Sundberg, J. (1978). Pharyngeal constrictions. Phonetica, 35, 157–168.

Govind, D., & Prasanna, S. R. M. (2012). Epoch extraction from emotional speech. In Proc. SPCOM.

Govind, D., Prasanna, S. R. M., & Yegnanarayana, B. (2011). Neutral to target emotion conversion using source and suprasegmental information. In Proc. INTERSPEECH.

Hardam, E. (1990). High quality time scale modification of speech signals using fast synchronized overlap add algorithms. In Proc. IEEE.

Hashizawa, Y., Hamzah, S. T. M. D., & Ohyama, G. (2004). On the differences in prosodic features of emotional expressions in Japanese speech according to the degree of the emotion. In Proc. speech prosody (pp. 655–658).

Heinz, J. M., & Stevens, K. N. (1964). On the derivation of area functions and acoustic spectra from cineradiographic films of speech. The Journal of the Acoustical Society of America, 36(5), 1037–1038.

Hess, W. (1983). Pitch determination of speech signals. Berlin: Springer.

Hofer, G., Richmond, K., & Clark, R. (2005). Informed blending of databases for emotional speech synthesis. In Proc. INTERSPEECH.

House, D., Bell, L., Gustafson, K., & Johansson, L. (1999). Child-directed speech synthesis: evaluation of prosodic variation for an educational computer program. In Proc. EUROSPEECH (pp. 1843–1846).

Hunt, A., & Black, A. (1996). Unit selection in a concatenative speech synthesis system using a large speech database. In Proc. ICASSP (pp. 373–376).

Iida, A., Campbell, N., Iga, S., Higuchi, F., & Yasumura, M. (2000). A speech synthesis system for assisting communications. In ISCA workshop on speech & emotion (pp. 167–172).

Imai, S. (1983). Cepstral analysis synthesis on the mel frequency scale. In Proc. ICASSP (pp. 93–96).

Ishi, C. T., & Campbell, N. (2002). Analysis of acoustic-prosodic features of spontaneous expressive speech. In Proc. 1st international congress of phonetics and phonology, Kobe, Japan (pp. 85–88).

Johnstone, T., & Scherer, K. R. (1999). The effects of emotions on voice quality. In Proc. int. congr. phonetic sciences, San Francisco (pp. 2029–2031).

Johnson, W. L., Narayanan, S., Whitney, R., Das, R., Bulut, M., & Labore, C. (2002). Limited domain synthesis of expressive military speech for animated characters. In Proc. IEEE speech synthesis workshop, Santa Monica, CA.

Joseph, M. A., Reddy, M. H., & Yegnanarayana, B. (2010). Speaker-dependent mapping of source and system features for enhancement of throat microphone speech. In Proc. INTERSPEECH 2010 (pp. 985–988).

Kawahara, H. (1997). Speech representation and transformation using adaptive interpolation of weighted spectrum: vocoder revisited. In Proc. ICASSP (pp. 1303–1306).

Kawahara, H., Masuda-Katsuse, I., & de Cheveigné, A. (1999). Restructuring speech representations using a pitch adaptive time-frequency smoothing and an instantaneous frequency based F0 extraction: possible role of repetitive structure in sounds. Speech Communication, 27(3–4), 187–207.

Kelly, J., & Lochbaum, C. (1962). Speech synthesis. In Proc. international congress on acoustics.

King, S. (2011). An introduction to statistical parametric speech synthesis. Sadhana, 36(5), 837–852.

Klatt, D. H. (1980). Software for a cascade/parallel formant synthesizer. The Journal of the Acoustical Society of America, 67, 971–995.

Klatt, D. H. (1987). Review of text to speech conversion for English. The Journal of the Acoustical Society of America, 82, 737–793.

Liberman, M., Davis, K., Grossman, M., Martey, N., & Bell, J. (2002). LDC emotional prosody speech transcripts database. University of Pennsylvania, Linguistic data consortium.

Ling, Z.-H., Richmond, K., & Yamagishi, J. (2011). Feature-space transform tying in unified acoustic-articulatory modelling of articulatory control of HMM-based speech synthesis. In Proc. INTERSPEECH.

Maeda, S. (1979). An articulatory model of the tongue based on a statistical analysis. The Journal of the Acoustical Society of America, 65, S22–S22.

Makhoul, J. (1975). Linear prediction: a tutorial review. Proceedings of the IEEE, 63, 561–580.

Markel, J. D. (1972). The SIFT algorithm for fundamental frequency estimation. IEEE Transactions on Audio and Electroacoustics, AU-20, 367–377.

Mermelstein, P. (1973). Articulatory model for the study of speech production. The Journal of the Acoustical Society of America, 53, 1070–1082.

Miyanaga, K., Masuko, T., & Kobayashi, T. (2004). A style control technique for HMM-based speech synthesis. In Proc. ICSLP.

Montero, J. M., Gutierrez-Arriola, J., Colas, J., Enriquez, E., & Pardo, J. M. (1999). Analysis and modelling of emotional speech in Spanish. In Proc. ICPhS (pp. 671–674).

Moulines, E., & Charpentier, F. (1990). Pitch-synchronous waveform processing techniques for text-to-speech synthesis using diphones. Speech Communication, 9, 452–467.

Moulines, E., & Laroche, J. (1995). Non-parametric techniques for pitch-scale and time-scale modification of speech. Speech Communication, 16, 175–205.

Muralishankar, R., Ramakrishnan, A. G., & Prathibha, P. (2004). Modification of pitch using DCT in the source domain. Speech Communication, 42, 143–154.

Murray, I. R., & Arnott, J. L. (1993). Toward the simulation of emotion in synthetic speech: a review of the literature on human vocal emotion. The Journal of the Acoustical Society of America, 93, 1097–1108.

Murray, I. R., & Arnott, J. L. (1995). Implementation and testing of a system for producing emotion by rule in synthetic speech. Speech Communication, 16, 369–390.

Murthy, H. A., & Yegnanarayana, B. (1991). Formant extraction from group delay function. Speech Communication, 10(3), 209–221.

Murthy, P. S., & Yegnanarayana, B. (1999). Robustness of group-delay-based method for extraction of significant instants of excitation from speech signals. IEEE Transactions on Speech and Audio Processing, 7(6), 609–619.

Murty, K. S. R., & Yegnanarayana, B. (2008). Epoch extraction from speech signals. IEEE Transactions on Audio, Speech, and Language Processing, 16(8), 1602–1614.

Murty, K. S. R., & Yegnanarayana, B. (2009). Characterization of glottal activity from speech signals. IEEE Signal Processing Letters, 16(6), 469–472.

Narayanan, S. S., Alwan, A. A., & Haker, K. (1995). An articulatory study of fricative consonants using magnetic resonance imaging. The Journal of the Acoustical Society of America, 98(3), 1325–1347.

Naylor, P. A., Kounoudes, A., Gudnason, J., & Brookes, M. (2007). Estimation of glottal closure instants in voiced speech using DYPSA algorithm. IEEE Transactions on Audio, Speech, and Language Processing, 15(1), 34–43.

Nose, T., Yamagishi, J., & Kobayashi, T. (2007). A style control technique for HMM-based expressive speech synthesis. IEICE Transactions on Information and Systems, E90-D(9), 1406–1413.

Olive, J. P. (1977). Rule synthesis of speech from dyadic units. In Proc. ICASSP.

Palo, P. (2006). A review of articulatory speech synthesis. Master’s thesis, Helsinki University of Technology.

Pitrelli, J. F., Bakis, R., Eide, E. M., Fernandez, R., Hamza, W., & Picheny, M. A. (2006). The IBM expressive text to speech synthesis system for American English. IEEE Transactions on Audio, Speech, and Language Processing, 14, 1099–1109.

Prasanna, S. R. M., & Yegnanarayana, B. (2004). Extraction of pitch in adverse conditions. In Proc. ICASSP, Montreal, Canada.

Rao, K. S. (2010). Voice conversion by mapping the speaker-specific features using pitch synchronous approach. Computer Speech & Language, 24(3), 474–494.

Rao, K. S., & Yegnanarayana, B. (2003). Prosodic manipulation using instants of significant excitation. In IEEE int. conf. multimedia and expo.

Rao, K. S., & Yegnanarayana, B. (2006a). Voice conversion by prosody and vocal tract modification. In Proc. ICIT, Bhubaneswar.

Rao, K. S., & Yegnanarayana, B. (2006b). Prosody modification using instants of significant excitation. IEEE Transactions on Audio, Speech, and Language Processing, 14, 972–980.

Rao, K. S., Prasanna, S. R. M., & Yegnanarayana, B. (2007). Determination of instants of significant excitation in speech using Hilbert envelope and group delay function. IEEE Signal Processing Letters, 14, 762–765.

Ross, M., Shaffer, H. L., Cohen, A., Freudberg, R., & Manley, H. J. (1974). Average magnitude difference function pitch extractor. IEEE Transactions on Acoustics, Speech, and Signal Processing, ASSP-22, 353–362.

Ruinskiy, D., & Lavner, Y. (2008). Stochastic models of pitch jitter and amplitude shimmer for voice modification. In Proc. IEEE (pp. 489–493).

Schafer, R. W., & Rabiner, L. R. (1970). System for automatic formant analysis of voiced speech. The Journal of the Acoustical Society of America, 47, 634–648.

Scherer, K. R. (1986). Vocal affect expressions: a review and a model for future research. Psychological Bulletin, 99, 143–165.

Schröder, M. (2001). Emotional speech synthesis—a review. In Proc. EUROSPEECH (pp. 561–564).

Schröder, M. (2009). Expressive speech synthesis: past, present and possible futures. Affective Information Processing, 2, 111–126.

Smits, R., & Yegnanarayana, B. (1995). Determination of instants of significant excitation in speech using group delay function. IEEE Transactions on Acoustics, Speech, and Signal Processing, 4, 325–333.

Steidl, S., Polzehl, T., Bunnell, H. T., Dou, Y., Muthukumar, P. K., Perry, D., Prahallad, K., Vaughn, C., Black, A. W., & Metze, F. (2012). Emotion identification for evaluation of synthesized emotional speech. In Proc. speech prosody.

Tachibana, M., Yamagishi, J., Masuko, T., & Kobayashi, T. (2005). Speech synthesis with various emotional expressions and speaking styles by style interpolation and morphing. IEICE Transactions on Information and Systems, E88-D(3), 1092–1099.

Tao, J., Kang, Y., & Li, A. (2006). Prosody conversion from neutral speech to emotional speech. IEEE Transactions on Audio, Speech, and Language Processing, 14, 1145–1154.

Taylor, P. (2009). Text to speech synthesis. Cambridge: Cambridge University Press.

Theune, M., Meijs, K., Heylen, D., & Ordelman, R. (2006). Generating expressive speech for story telling applications. IEEE Transactions on Audio, Speech, and Language Processing, 14(4), 1099–1108.

Tokuda, K., Kobayashi, T., & Imai, S. (1995). Speech parameter generation from HMM using dynamic features. In Proc. ICASSP (pp. 660–663).

van Santen, J., Black, L., Cohen, G., Kain, A., Klabbers, E., Mishra, T., de Villiers, J., & Niu, X. (2003). Applications of computer generated expressive speech for communication disorders. In Proc. EUROSPEECH (pp. 1657–1660).

Vroomen, J., Collier, R., & Mozziconacci, S. J. L. (1993). Duration and intonation in emotional speech. In Proc. EUROSPEECH (pp. 577–580).

Whiteside, S. P. (1998). Simulated emotions: an acoustic study of voice and perturbation measures. In Proc. ICSLP, Sydney, Australia (pp. 699–703).

Williams, C. E., & Stevens, K. (1972). Emotions and speech: some acoustic correlates. The Journal of the Acoustical Society of America, 52, 1238–1250.

Yamagishi, J., Onishi, K., Masuko, T., & Kobayashi, T. (2003). Modeling of various speaking styles and emotions for HMM-based speech synthesis. In Proc. EUROSPEECH (pp. 2461–2464).

Yamagishi, J., Kobayashi, T., Tachibana, M., Ogata, K., & Nakano, Y. (2007). Model adaptation approach to speech synthesis with diverse voices and styles. In Proc. ICASSP (pp. 1233–1236).

Yegnanarayana, B. (1978). Formant extraction from linear-prediction spectra. The Journal of the Acoustical Society of America, 63(5), 1638–1641.

Yegnanarayana, B., & Murty, K. S. R. (2009). Event-based instantaneous fundamental frequency estimation from speech signals. IEEE Transactions on Audio, Speech, and Language Processing, 17(4), 614–625.

Yegnanarayana, B., & Veldhuis, R. N. J. (1998). Extraction of vocal-tract system characteristics from speech signals. IEEE Transactions on Speech and Audio Processing, 6(4), 313–327.

Yoshimura, T. (1999). Simultaneous modeling of phonetic and prosodic parameters and characteristic conversion for HMM-based text-to-speech systems. Ph.D. thesis, Nagoya Institute of Technology.

Yoshimura, T., Tokuda, K., Masuko, T., Kobayashi, T., & Kitamura, T. (1999). Simultaneous modeling of spectrum, pitch and duration in HMM-based speech synthesis. In Proc. EUROSPEECH.

Zen, H., Toda, T., Nakamura, M., & Tokuda, K. (2007). Details of Nitech HMM-based speech synthesis system for the Blizzard Challenge 2005. IEICE Transactions on Information and Systems, E90-D, 325–333.

Zen, H., Tokuda, K., & Black, A. (2009). Statistical parametric speech synthesis. Speech Communication, 51, 1039–1064.

Zovato, E., Pacchiotti, A., Quazza, S., & Sandri, S. (2004). Towards emotional speech synthesis: a rule based approach. In Proc. ISCA SSW5 (pp. 219–222).

Acknowledgement

The work reported in this paper is funded by the ongoing UK-India Education and Research Initiative (UKIERI) project titled “Study of source features for speech synthesis and speaker recognition” between IIT Guwahati, IIIT Hyderabad and the University of Edinburgh. The work is also supported by the ongoing DIT-funded project on the development of text-to-speech synthesis systems in the Assamese and Manipuri languages.


Cite this article

Govind, D., Prasanna, S.R.M. Expressive speech synthesis: a review. Int J Speech Technol 16, 237–260 (2013). https://doi.org/10.1007/s10772-012-9180-2
