Abstract

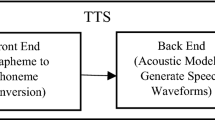

A text-to-speech synthesis system produces intelligible and natural speech corresponding to any given text. Two main attributes of a synthesizer are the quality of the speech produced and the footprint size. In the current work, HMM-based speech synthesizers are built and assessed using various kinds of phone-sized units, namely monophone, triphone, triphone with contextual features, pentaphone, and pentaphone with contextual features. The quality of synthetic speech improves with the addition of context, from a mean opinion score (MOS) of 2.4 for a synthesizer that uses monophones to 3.98 for one that uses pentaphones with 48 additional contextual features (pentaphone+). However, the footprint size also increases, from 269 to 1840 kB, with the addition of contextual information. Therefore, depending on the desired application, a compromise has to be made on either quality or footprint size. Analysis reveals that although speech synthesized by a monophone-based system lacks naturalness, it is intelligible. The lack of naturalness is primarily due to discontinuities in the pitch contour. An attempt is therefore made to improve the quality of the synthesized speech by smoothing the pitch contour, retaining the small footprint of the monophone-based system while approaching the quality of a synthesizer that uses contextual information. Smoothing the pitch contour at the word level yields the best quality, with an MOS of 3.4. Further, a preference test reveals that 71.25% of the sentences are similar in quality to the speech synthesized by a pentaphone+ HTS, while 5% are better.
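To make the idea of word-level pitch smoothing concrete, the following is a minimal sketch, not the authors' method: the abstract does not say which smoothing technique is used, so a plain moving average is swapped in here as an assumption. The function name `smooth_f0_by_word`, the per-frame F0 representation with 0 marking unvoiced frames, the `word_bounds` format, and the window length `win` are all hypothetical choices made for illustration.

```python
import numpy as np

def smooth_f0_by_word(f0, word_bounds, win=5):
    """Moving-average smoothing of F0, applied separately inside each word.

    Assumptions (not taken from the paper):
      f0          : per-frame F0 values in Hz, with 0 marking unvoiced frames
      word_bounds : list of (start, end) frame indices, end exclusive
      win         : odd window length in frames
    """
    if win % 2 != 1:
        raise ValueError("win must be odd")
    f0 = np.asarray(f0, dtype=float)
    out = f0.copy()
    half = win // 2
    for start, end in word_bounds:
        seg = f0[start:end]
        voiced = np.flatnonzero(seg > 0)
        if voiced.size < 2:
            continue  # nothing meaningful to smooth in this word
        # Interpolate across unvoiced gaps so the average is not pulled toward 0.
        filled = np.interp(np.arange(seg.size), voiced, seg[voiced])
        # Edge-pad so the smoothed contour has the same length as the word.
        padded = np.pad(filled, half, mode="edge")
        smoothed = np.convolve(padded, np.ones(win) / win, mode="valid")
        # Write back only at voiced frames; unvoiced frames stay 0.
        out[start + voiced] = smoothed[voiced]
    return out

# Toy contour: a spike inside the first word, an unvoiced onset in the second.
f0 = [100, 100, 200, 100, 100, 0, 0, 150, 150, 150]
smoothed = smooth_f0_by_word(f0, [(0, 5), (5, 10)], win=3)
```

Because each word is processed independently, the average never mixes frames across a word boundary, which is one way to read "smoothing at the word level"; whether the paper does this or something else is not stated in the abstract.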

Acknowledgments

The authors would like to thank the Department of Information Technology, Ministry of Communication and Information Technology, Government of India, for funding the project on Development of text-to-speech synthesis systems for Indian languages Phase II, Ref. no. 11(7)/2011-HCC(TDIL).

About this article

Cite this article

Anushiya Rachel, G., Sherlin Solomi, V., Naveenkumar, K. et al. A small-footprint context-independent HMM-based synthesizer for Tamil. Int J Speech Technol 18, 405–418 (2015). https://doi.org/10.1007/s10772-015-9278-4

DOI: https://doi.org/10.1007/s10772-015-9278-4