Abstract

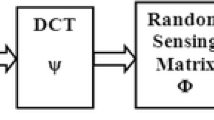

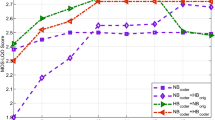

Speech coding facilitates speech compression without perceptual loss that results in the elimination or deterioration of both speech and speaker specific features used for a wide range of applications like automatic speaker and speech recognition, biometric authentication, prosody evaluations etc. The present work investigates the effect of speech coding in the quality of features which include Mel Frequency Cepstral Coefficients, Gammatone Frequency Cepstral Coefficients, Power-Normalized Cepstral Coefficients, Perceptual Linear Prediction Cepstral Coefficients, Rasta-Perceptual Linear Prediction Cepstral Coefficients, Residue Cepstrum Coefficients and Linear Predictive Coding-derived cepstral coefficients extracted from codec compressed speech. The codecs selected for this study are G.711, G.729, G.722.2, Enhanced Voice Services, Mixed Excitation Linear Prediction and also three codecs based on compressive sensing frame work. The analysis also includes the variation in the quality of extracted features with various bit-rates supported by Enhanced Voice Services, G.722.2 and compressive sensing codecs. The quality analysis of extracted epochs, fundamental frequency and formants estimated from codec compressed speech was also performed here. In the case of various features extracted from the output of selected codecs, the variation introduced by Mixed Excitation Linear Prediction codec is the least due to its unique method for the representation of excitation. In the case of compressive sensing based codecs, there is a drastic improvement in the quality of extracted features with the augmentation of bit rate due to the waveform type coding used in compressive sensing based codecs. For the most popular Code Excited Linear Prediction codec based on Analysis-by-Synthesis coding paradigm, the impact of Linear Predictive Coding order in feature extraction is investigated. There is an improvement in the quality of extracted features with the order of linear prediction and the optimum performance is obtained for Linear Predictive Coding order between 20 and 30, and this varies with gender and statistical characteristics of speech. Even though the basic motive of a codec is to compress single voice source, the performance of codecs in multi speaker environment is also studied, which is the most common environment in majority of the speech processing applications. Here, the multi speaker environment with two speakers is considered and there is an augmentation in the quality of individual speeches with increase in diversity of mixtures that are passed through codecs. The perceptual quality of individual speeches extracted from the codec compressed speech is almost same for both Mixed Excitation Linear Prediction and Enhanced Voice Services codecs but regarding the preservation of features, the Mixed Excitation Linear Prediction codec has shown a superior performance over Enhanced Voice Services codec.

Similar content being viewed by others

References

Al-Ali, A. K. H., Dean, D., Senadji, B., Chandran, V., & Naik, G. R. (2017). Enhanced forensic speaker verification using a combination of DWT and MFCC feature warping in the presence of noise and reverberation conditions. IEEE Access, 5, 15400–15413.

Assmann, P. F., Nearey, T. M., & Scott, J. M. (2002). Modeling the perception of frequency-shifted vowels. In Proceedings of the 7th international conference on spoken language processing, Denver, CO (pp. 425–428).

Assmann, P. F., Dembling, S., & Nearey, T. M. (2006). Effects of frequency shifts on perceived naturalness and gender information in speech. In Proceedings of the 9th international conference on spoken language processing, Pittsburgh, PA (pp. 889–892).

Besacier, L., Grassi, S., & Dufaux, A. (2000). GSM speech coding and speaker recognition. In Proceedings of ICASSP’00, Istanbul (Vol. 2, pp. 111085–111088).

Bouguelia M.-R., Nowaczyk, S., Santosh, K. C., Verikas, A., (2018). Agreeing to disagree: Active learning with noisy labels without crowdsourcing. International Journal of Machine Learning and Cybernetics, 9, 1307–1319.

Bouzid, A., & Ellouze, N. (2004). Glottal opening instant detection from speech signal. In 2004 12th European signal processing conference, Vienna (pp. 729–732).

Chamberlain, M. W. (2001). A 600 bps MELP vocoder for use on HF channels. In MILCOM proceedings communications for network-centric operations (Vol. 1, pp. 447–453).

Chelali, F. Z.,Djeradi, A., & Djeradi, R. (2011). Speaker identification system based on PLP coefficients and artificial neural network. In Proceedings of the world congress on engineering, London, UK (Vol. II).

Chen, Z., Luo, Y., & Mesgarani, N. (2017). Deep attractor network for single-microphone speaker separation. In IEEE international conference on acoustics, speech and signal processing (ICASSP), New Orleans, LA (pp. 246–250).

Chu, W. C. (2003). Speech coding Algorithms: Foundation and evolution of standardized coders. Hoboken: Wiley.

Dey, N., & Ashour, A. S. (2018). Applied examples and applications of localization and tracking problem of multiple speech sources, in direction of arrival estimation and localization of multi-speech sources (pp. 35–48). Cham: Springer.

Dey, N., & Ashour, A. S. (2018). Sources localization and DOAE techniques of moving multiple sources, in direction of arrival estimation and localization of multi-speech sources (pp. 23–34). Cham: Springer.

Dey, N., & Ashour, A. S. (2018). Challenges and future perspectives in speech-sources direction of arrival estimation and localization, in direction of arrival estimation and localization of multi-speech sources (pp. 49–52). Cham: Springer.

Dunn, R. B., Quatieri, T. F., Reynolds, D. A., & Campbell J. P. (2001). Speaker recognition from coded speech in matched and mismatched conditions. In Speaker recognition workshop in Odyssey (pp. 115–120).

Gallardo, L. F. (2016). Human and automatic speaker recognition over telecommunication channels. Singapore: Springer.

Gallardo, L.F., Moller, S., & Wagner, M. (2013). Human speaker identification of known voices transmitted through different user interfaces and transmission channels In Proceedings of international conference on ICASSP’13, Vancouver, BC (pp. 7775–7779).

Gallardo, L.F., Wagner, M., & Mller, S. (2014). I-vector speaker verification for speech degraded by narrowband and wideband channels. In Proceedings of 11th ITG sympsium on speech communication, Erlangen.

He, J., Liu, L., & Palm, G. (1996). On the use of residual cepstrum in speech recognition. In IEEE international conference on acoustics, speech, and signal processing.

Hermansky, H., Morgan, N., Bayya, A., & Kohn, P. (1992). RASTA-PLP speech analysis technique. In ICASSP-92: 1992 IEEE international conference on acoustics, speech, and signal processing, San Francisco, CA (Vol.1, pp. 121–124).

Jarina, R., Polacky, J., Pocta, P., & Chmulik, M. (2017). Automatic speaker verification on narrowband and wideband lossy coded clean speech. IET Biometrics, 6(4), 276–281.

Kim, C., & Stern, R. M. (2012). Power-normalized cepstral coefficients (PNCC) for robust speech recognition. IEEE International conference on acoustics, speech and signal processing (ICASSP), Kyoto (pp. 4101–4104).

Kuitert, M., & Boves, L. (1997). Speaker verification with GSM coded telephone speech. In Proceedings of 5th European Conference on EUROSPEECH’97, Rhodes (pp. 975–978).

McCree, A. V., & Barnwell, T. P. (1995). A mixed excitation LPC vocoder model for low bit rate speech coding. IEEE Transactions on Speech and Audio Processing, 3(4), 242–250.

Mclaren, M., Abrash, V., & Graciarena, M. (2013). Improving robustness to compressed speech in speaker recognition. In Proceedings of INTERSPEECH’13, Lyon (pp. 3698–3702).

Mukherjee, H., Obaidullah Sk. Md., Santosh, K. C., Phadikar, S., & Roy, K. (2018). Line spectral frequency-based features and extreme learning machine for voice activity detection from audio signal. International Journal of Speech Technology. https://doi.org/10.1007/s10772-018-9525-6

Pisanski, K., Fraccaro, P. J., Tigue, C. C., OConnor, J. J. M., Rder, S., Andrews, P. W., et al. (2014). Vocal indicators of body size in men and women: A meta-analysis. Animal Behaviour, 95, 89–99.

Quatieri, F., Singer, E., Dunn, R. B., Reynolds, D. A., & Campbell, J. P. (1999) Speaker and language recognition using speech codec parameters. In Proceedings of eurospeech (pp. 787–790).

Rao, K. S., & Yegnanarayana, B. (2006). Prosody modification using instants of significant excitation. IEEE Transactions on Audio, Speech, and Language Processing, 14(3), 972–980.

Reynolds, D. A., & Rose, R. C., (1995). Robust text-independent speaker identification using Gaussian mixture speaker model. IEEE Transaction on Speech and Audio Processing, 3(1), 72–83.

Stauffer, A.R., & Lawson, A.D. (2009). Speaker recognition on lossy compressed speech using the Speex codec. In Proceedings of INTERSPEECH’09, Brighton (pp. 2363–2366).

Vuppala, A. K., Rao, K. S., & Chakrabarti, S,. (2010). Effect of speech coding on speaker identification. In Annual IEEE India conference (INDICON), Kolkata (pp. 1–4).

Vuppala, A. K., Yadav, J., Chakrabarti, S., & Rao, K. S. (2011). Effect of low bit rate speech coding on epoch extraction. In 2011 international conference on devices and communications (ICDeCom), Mesra (pp. 1–4).

Wang, L., Chen, Z., & Yin, F. (2015). A novel hierarchical decomposition vector quantization method for high-order LPC parameters. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 23(1), 212–221.

Wang, L., Phapatanaburi, K., Go, Z., Nakagawa, S., Iwahashi, M., & Dang, J. (2017). Phase aware deep neural network for noise robust voice activity detection. In ICME-17 (pp. 1087–1092).

Wang, L., Ohtsuka, S., & Nakagawa, S. (2009) High improvement of speaker identification and verification by combining MFCC and phase information. In IEEE International conference on acoustics, speech and signal processing, Taipei (pp. 4529–4532).

Zhang, Y., & Ni, L. (2017). Feature extraction algorithm fusing GFCC and phase information. In 2017 IEEE 2nd advanced information technology, electronic and automation control conference (IAEAC), Chongqing (pp. 1163–1167).

Zhao, X., & Wang, D. (2013). Analyzing noise robustness of MFCC and GFCC features in speaker identification. In 2013 IEEE international conference on acoustics, speech and signal processing, Vancouver, BC (pp. 7204–7208).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Sankar, M.S.A., Sathidevi, P.S. An investigation on the degradation of different features extracted from the compressed American English speech using narrowband and wideband codecs. Int J Speech Technol 21, 861–876 (2018). https://doi.org/10.1007/s10772-018-09559-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10772-018-09559-5