Abstract

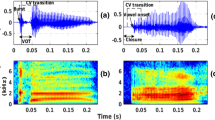

Consonant omission is one of the most common articulation disorders in cleft palate (CP) speech. Automatic evaluation of consonant omission gives speech-language pathologists and CP patients an objective diagnostic aid. This work proposes an automatic method for detecting consonant omission. Pathological speech data are far more difficult to collect than normal speech; the speech samples used in this work were collected from 80 CP patients and annotated at the phoneme level by professional speech-language pathologists. By taking advantage of prior knowledge of CP speech and Chinese phonetics, the proposed method requires no pre-training or modeling. Distinguishing voiced initials from finals is a known difficulty in Mandarin speech processing, and this work proposes a time-domain waveform difference analysis method to address it. The proposed method achieves an accuracy of 88.9% for consonant omission detection in CP speech, with a sensitivity of 70.89% and a specificity of 91.86%.
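For readers less familiar with the three reported figures, the following minimal sketch shows how accuracy, sensitivity, and specificity are conventionally derived from a binary confusion matrix. It assumes that "consonant omitted" is treated as the positive class, which the abstract does not state explicitly. The class name `ConfusionCounts` and the counts used are hypothetical and chosen only for illustration; they are not the study's data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Binary confusion matrix; positive class = consonant omitted (assumption)."""
    tp: int  # omission present and detected
    fn: int  # omission present but missed
    tn: int  # no omission and none reported
    fp: int  # no omission but one reported


def sensitivity(c: ConfusionCounts) -> float:
    # Fraction of true omissions that the detector finds.
    return c.tp / (c.tp + c.fn)


def specificity(c: ConfusionCounts) -> float:
    # Fraction of correctly articulated samples that are left unflagged.
    return c.tn / (c.tn + c.fp)


def accuracy(c: ConfusionCounts) -> float:
    # Fraction of all samples that are classified correctly.
    return (c.tp + c.tn) / (c.tp + c.fn + c.tn + c.fp)


# Hypothetical counts for illustration only (not taken from the paper).
counts = ConfusionCounts(tp=8, fn=2, tn=45, fp=5)
print(f"sensitivity = {sensitivity(counts):.3f}")
print(f"specificity = {specificity(counts):.3f}")
print(f"accuracy    = {accuracy(counts):.3f}")
```

When samples without omission outnumber samples with omission, overall accuracy tends to sit closer to specificity than to sensitivity, which is one plausible reading of the gap between the reported 70.89% and 91.86%.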

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Grant No. 61503264).

About this article

Cite this article

He, L., Wang, X., Zhang, J. et al. Automatic detection of consonant omission in cleft palate speech. Int J Speech Technol 22, 59–65 (2019). https://doi.org/10.1007/s10772-018-09570-w

DOI: https://doi.org/10.1007/s10772-018-09570-w