Abstract

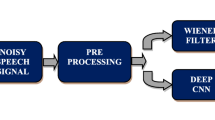

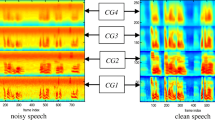

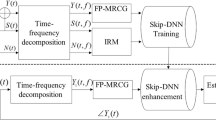

Getting enhanced speech from the noisy speech signal is a task of particular importance in the area of speech processing. Here we propose a deep neural network (DNN) based speech enhancement method utilising mono channel mask. The proposed method employs cochleagram to find an initial binary mask. Then modified sub-harmonic summation algorithm is applied on initial binary mask to obtain an intermediate mask. The spectro-temporal features of this intermediate mask are fed to DNN. DNN finds out the correct spectral structure in the frames associated with the target speech which are further used to develop the mono channel mask. Speech signal is reconstructed using mono channel mask. Mono channel mask avoids the unnecessary interference from the noisy time–frequency (T–F) units. Objective evaluations done using perceptual evaluation of speech quality (PESQ) and normalized source to distortion ratio indicate that the proposed method outperforms the state of the art methods in the area of speech enhancement. Obtained values of PESQ shows that proposed method improves the quality of the speech in noisy conditions. The experimental results present the effectiveness of the mono channel mask in speech enhancement. The proposed method gives better performance compared to other methods.

Similar content being viewed by others

References

Barfuss, H., Huemmer, C., Schwarz, A., & Kellermann, W. (2017). Robust coherence-based spectral enhancement for speech recognition in adverse real-world environments. Computer Speech & Language, 46, 388–400.

Bengio, Y. (2012). Practical recommendations for gradient-based training of deep architectures. Neural networks: Tricks of the trade (pp. 437–478). Berlin: Springer.

Chehrehsa, S., & Moir, T. J. (2016). Speech enhancement using maximum a-posteriori and gaussian mixture models for speech and noise periodogram estimation. Computer Speech & Language, 36, 58–71.

Delfarah, M., & Wang, D. (2017). Features for masking-based monaural speech separation in reverberant conditions. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 25(5), 1085–1094.

Ephraim, Y., & Malah, D. (1984). Speech enhancement using a minimum-mean square error short-time spectral amplitude estimator. IEEE Transactions on Acoustics, Speech, and Signal Processing, 32(6), 1109–1121.

Févotte, C., Gribonval, R., & Vincent, E. (2005). Bss_eval toolbox user guide-revision 2.0.

Garofolo, J. S., Lamel, L. F., Fisher, W. M., Fiscus, J. G., & Pallett, D. S. (1993). Darpa timit acoustic-phonetic continous speech corpus cd-rom. nist speech disc 1-1.1. NASA STI/Recon technical report n, 93.

Han, K., & Wang, D. (2012). A classification based approach to speech segregation. The Journal of the Acoustical Society of America, 132(5), 3475–3483.

Hasan, M. K., Salahuddin, S., & Khan, M. R. (2004). A modified a priori snr for speech enhancement using spectral subtraction rules. IEEE Signal Processing Letters, 11(4), 450–453.

Hu, G., & Wang, D. (2006). An auditory scene analysis approach to monaural speech segregation. Topics in acoustic echo and noise control (pp. 485–515). Berlin: Springer.

Hu, G., & Wang, D. (2007). Auditory segmentation based on onset and offset analysis. IEEE Transactions on Audio, Speech, and Language Processing, 15(2), 396–405.

Ingale, P. P., & Nalbalwar, S. L. (2018). Singing voice separation using mono-channel mask. International Journal of Speech Technology, 21(2), 309–318.

Islam, M. T., Shahnaz, C., Zhu, W.-P., & Ahmad, M. O. (2015). Speech enhancement based on student t modeling of teager energy operated perceptual wavelet packet coefficients and a custom thresholding function. IEEE/ACM Transactions on Audio, Speech and Language Processing (TASLP), 23(11), 1800–1811.

Kang, T. G., Shin, J. W., & Kim, N. S. (2018). Dnn-based monaural speech enhancement with temporal and spectral variations equalization. Digital Signal Processing, 74, 102–110.

Kim, G., Lu, Y., Hu, Y., & Loizou, P. C. (2009). An algorithm that improves speech intelligibility in noise for normal-hearing listeners. The Journal of the Acoustical Society of America, 126(3), 1486–1494.

Lu, Y., & Loizou, P. C. (2008). A geometric approach to spectral subtraction. Speech Communication, 50(6), 453–466.

Mohammadiha, N., Taghia, J., & Leijon, A. (2012). Single channel speech enhancement using bayesian nmf with recursive temporal updates of prior distributions. In: IEEE international conference on acoustics, speech and signal processing (ICASSP), 2012 (pp. 4561–4564). IEEE.

Polikar, R. (1996). The wavelet tutorial.

Recommendation, I.-T. (2001). Perceptual evaluation of speech quality (pesq): An objective method for end-to-end speech quality assessment of narrow-band telephone networks and speech codecs. Rec. ITU-T P. 862.

Tseng, H.-W., Hong, M., & Luo, Z.-Q. (2015). Combining sparse nmf with deep neural network: A new classification-based approach for speech enhancement. In: IEEE international conference on acoustics, speech and signal processing (ICASSP), 2015 (pp. 2145–2149). IEEE.

Wang, D. (2005). On ideal binary mask as the computational goal of auditory scene analysis. Speech separation by humans and machines (pp. 181–197). New York: Springer.

Wang, Y., Narayanan, A., & Wang, D. (2014). On training targets for supervised speech separation. IEEE/ACM Transactions on Audio, Speech and Language Processing (TASLP), 22(12), 1849–1858.

Wang, Y., & Wang, D. (2014). A structure-preserving training target for supervised speech separation. In: IEEE international conference on acoustics, speech and signal processing (ICASSP), 2014 (pp. 6107–6111). IEEE.

Wang, Z., Sha, F. (2014). Discriminative non-negative matrix factorization for single-channel speech separation. In: IEEE international conference on acoustics, speech and signal processing (ICASSP), 2014 (pp. 3749–3753). IEEE.

Wilson, K. W., Raj, B., Smaragdis, P., & Divakaran, A. (2008). Speech denoising using nonnegative matrix factorization with priors. In: IEEE international conference on acoustics, speech and signal processing, 2008. ICASSP (2008) (pp. 4029–4032). IEEE.

Yu, W., Jiajun, L., Ning, C., & Wenhao, Y. (2013). Improved monaural speech segregation based on computational auditory scene analysis. EURASIP Journal on Audio, Speech, and Music Processing, 2013(1), 2.

Zhao, X., Wang, Y., & Wang, D. (2014). Robust speaker identification in noisy and reverberant conditions. IEEE/ACM Transactions on Audio, Speech and Language Processing (TASLP), 22(4), 836–845.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Ingale, P.P., Nalbalwar, S.L. Deep neural network based speech enhancement using mono channel mask. Int J Speech Technol 22, 841–850 (2019). https://doi.org/10.1007/s10772-019-09627-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10772-019-09627-4