Abstract

We present software that generates phrase-based concordances in real-time based on Internet searching. When a user enters a string of words for which he wants to find concordances, the system sends this string as a query to a search engine and obtains search results for the string. The concordances are extracted by performing statistical analysis on search results and then fed back to the user. Unlike existing tools, this concordance consultation tool is language-independent, so concordances can be obtained even in a language for which there are no well-established analytical methods. Our evaluation has revealed that concordances can be obtained more effectively than by only using a search engine directly.

Introduction

With the recent developments in communication media and a growing awareness of foreign cultures, people have become more exposed to foreign languages than at any other time in recent history. More people are learning foreign languages, and language tools are becoming more and more important. With regard to language learning tools, the issue this paper addresses is concordance generation that can be done in real-time using the Internet for a query given by a user.

A concordance is an orderly list of occurrences of an individual word or a phrase (Trask, 1999). A language learner should acquire numerous concordances to better practice the language. For language learners stuck on problems of language concordance, dictionaries based on fine distinctions and abstracted concordances have been the main means of obtaining answers for many years. Recently, there have been a series of published dictionaries focusing especially on usages and concordances; one example is seen in Sinclair (2004). However, dictionary revisions are few and far between, and users often cannot find concordance examples for recent terms or specific contexts of their own.

In the late 1980s, a new method to search for concordances was developed using the KWIC (key word in context) tool, or concordance software. Such tools differ from dictionaries in that they extract concordances from preliminary data given to the software through the use of complex data structures such as suffix arrays/trees and a directed acyclic word graph (DAWG); this allows instant consultation of data on a gigabyte scale (Manber and Myers, 1990; Crochemore and Rytter, 2002). Concordance software above all is an essential application for language learners as well as an important tool for linguists to investigate specific linguistic phenomena (Levy, 1997; Zaphiris and Zacharia, 2006). Various concordance software programs are now available: as well as those provided by dictionary companies as in the Collins Cobuild Concordance Sampler,Footnote 1 there is more general software, such as Concordance,Footnote 2 that can be used to build personal tools to meet each user's personal needs. The problem is that large bodies of data are sometimes not readily available in less widely used languages. Also, even in common languages such as English, the huge amounts of data available for such use are usually limited to that from newspapers.

Related to this, there have been recent attempts to construct a search engine dedicated to a linguistic objective (Cafarella and Etzioni, 2005; Resnik and Elkis, 2005). These researchers propose regular collection of Web data, linguistic analysis of the data, and its application for various linguistic purposes. However, such attempts are still in their early stages, and the limitation of the language used is again a problem as high performance language analysis software is still limited to very common languages such as English.

Under such circumstances, many language-learners use search engines as concordance software. For example, if a learner wants to find out which verb is used with “jet lag” in English, he can simply type “jet lag” into a search engine and obtain concordances such as “avoid jet lag” and “recover from jet lag” among the various snippets (i.e., the summaries and fragments of the links provided by the search engine). Similarly, search engines can be used to find out how to use articles correctly: for instance, in French, nonnatives will have problems with phrases such as “revenir du|de Paris” (here, it is de), compared with “revenir du|de Japon” (here, it is du).

Unfortunately, the problem here for users is that the search results are simply lists of individual concordances. Of course, the search engine ranks the search results, whose snippets include concordances, but the ranking does not reflect linguistic relevance. Also, using materials from the Web for language learning can be risky, since there are sites where language is not used in an orderly way. One way to verify such concordances is to scan through several pages and statistically determine the prominent concordance. In the case of the verb used with “jet lag,” a user can see that “avoid” appears many times by scanning through multiple pages of search results.

This scanning process can be enhanced through automation, enabling scanning of hundreds of occurrences. Therefore, we created a tool, named Tonguen, that reads a large number of pages on behalf of the user and statistically accumulates concordances from information supplied from the search engine. Unlike existing KWIC tools, Tonguen does not assume any preexisting data, but only connects to a search engine and acquires concordances in real-time.

The idea of creating such a concordance tool that facilitates dynamic concordance lookup based on an Internet search engine is not new, which shows how large the need is. The earliest such tool was WebCorp.Footnote 3 This tool is only available in English, however, and is not readily applicable to languages without word segmentation. Also, the output is akin to a KWIC tool in that it cuts out a fixed length of words surrounding the query. Therefore, this system still shows instances rather than summarized results. Other interesting tools are Google Fight,Footnote 4 and Google Duel,Footnote 5 in which the user enters two different phrases and the tool suggests which is more commonly used according to the frequency reported by Google. Although these two sites allow multilingual consultation, the range of flexibility is limited to the duel of two given phrases.

In contrast to these existing tools, our tool, Tonguen, has two important features:

- Language-independent feature: any language can be looked up as long as the search engine can obtain examples. Tonguen is Unicode-based and does not incorporate any language-specific methods or applications.

- Flexible query form: wild cards, comparisons, and context and domain restrictions are supported.

In this sense, although it is motivated by the specific objective of implementing a “concordance generation tool,” Tonguen utilizes a general technique for accumulating search results that is applicable to any language. In particular, our phrase border extraction method is also applicable to concordance systems other than those based on search engines (e.g., KWIC systems).

In fact, according to Tonguen's query logs, many users use Tonguen not only for concordance retrieval, but also for any other task requiring global trends in the search results. One such use is for a “pseudo” question-answering (QA) tool, or a tool for studying markets. In QA, a user might look up the diameter of earth or the first names of famous people. The input to our tool is a mere query, and it therefore cannot directly receive questions, as is done in QA. We can obtain answers, however, by entering a query in the form of an answer, such as “* is the president of France,” or “* is the capital of Tonga.” The system will then look for strings that can replace the *, as if looking for concordances. Thus, Tonguen can be situated between a search engine and a QA tool, because it summarizes search engine results.

From a technical viewpoint, a similar procedure—i.e., to summarize the search results obtained from a search engine—has also been incorporated in recent QA systems. For example, several QA systems utilize search engine results to obtain answers by summing the results (Brill et al., 2001; Kwok et al., 2001; Dumais et al., 2002). In such studies, the interest was in the quality of the answers within the QA context, so the performance was evaluated in terms of the overall results of the QA procedure; therefore, the performance of the accumulation procedure itself was less emphasized. Focusing on the effect of summarization, we discuss how to accumulate search results, and then discuss the effectiveness of the result summarization.

In the next section, we describe the overall design of Tonguen. In Sections 3 and 4, we present language-independent data analysis methods; i.e., candidate extraction and ranking techniques. In Section 5, we discuss examples of concordances obtained with Tonguen. Then, from Section 6 on, we evaluate the usability of Tonguen from various points of view.

Overall system design

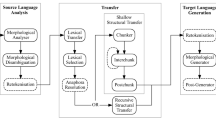

The Tonguen software is situated between a user and a search engine (Fig. 1). It goes through the following stages in one cycle of concordance generation:

1. Receive an input query from a user.

2. Send the query and obtain a fixed number of search engine results that match the query.

3. Get all fixed-length strings that include the query.

4. Extract the candidates from these strings (as explained in Section 3).

5. Rank the candidates (as explained in Section 4).

6. Display the results.

The essential procedure of Tonguen is to summarize the concordances acquired by a query, which corresponds to stages 4 and 5. Tonguen can be implemented as simple client software communicating directly with a search engine server. Currently, it has been developed on a Tonguen server.Footnote 6
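As an illustration of stage 3, the following minimal sketch cuts a fixed-length window after each occurrence of a fixed phrase in a list of snippets. The function name `windows_after`, the `width` parameter, and the exact, case-sensitive matching are assumptions made for this example; the paper does not specify how Tonguen implements this stage.

```python
from typing import List


def windows_after(snippets: List[str], phrase: str, width: int = 30) -> List[str]:
    """Return the `width` characters that follow each occurrence of `phrase`.

    Hypothetical helper: exact, case-sensitive matching is assumed.
    """
    windows = []
    for snippet in snippets:
        start = snippet.find(phrase)
        while start != -1:
            begin = start + len(phrase)
            windows.append(snippet[begin:begin + width])
            # Continue searching after the current match position.
            start = snippet.find(phrase, start + 1)
    return windows


if __name__ == "__main__":
    demo = ["tips to avoid jet lag and to avoid stress"]
    print(windows_after(demo, "avoid ", width=7))
```

The windows produced here correspond to the fixed-length strings that are passed on to candidate extraction in stage 4.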

Figure 2 shows a Tonguen site with a query on the English expression of “how to * jet lag” (to look for strings in the position of *). In the topmost part is a query box, where the user enters a query; in the next line is a pull-down menu for choosing the number of snippets to be downloaded. The lower half contains lists of the resulting concordances. Each line shows a possible concordance acquired from the query. Each concordance has two clickable buttons, one before and one after it, which enable a new concordance search through extension by attaching * before or after the concordance. For example, upon clicking the button before the concordance of “how to prevent jet lag,” Tonguen searches for “* how to prevent jet lag.” Each concordance itself is clickable, which facilitates returning to the original, individual list of snippets. The result obtained by selecting “how to prevent jet lag” is pasted on the right-hand side of Fig. 2. With this click function, the user can view actual instances occurring in a longer context.

Compared with previous work, such as WebCorp,Footnote 7 and Google Fight,Footnote 8 Tonguen has two distinctive features: query flexibility and multilingual capability. First, regarding flexibility, every query is constructed by a user based on a pattern match. Basically, there are four patterns:

- Wild card ‘*’ can be used to replace a missing word or string. For example, “human *,” “* sans fil,” or “fed * with” can be entered into Tonguen, and the * then matches a word or string such as (human) “nature,” “reseau” (sans fil), or (fed) “up” (with), respectively. Wild cards can be used multiple times in a query. Also, the user can append a number to ‘*’ to limit the length of the matching word or string. For example, the user can enter “how to *3 jet lag” to get no more than three words that replace the “*3” part.

- Comparison ‘|’ allows users to compare two or more phrases and prioritize them depending on which is the most relevant. This can be expressed as (A|B|C…). For example, a search such as “revenir (de|du) (Tokyo|Japon)” can be entered.

- Context restriction ‘+’ indicates a co-occurring word or string and can be used to narrow the search context. For example, “* Bush +American president” searches for expressions ending in “Bush” that co-occur with the string “American president.”

- Domain restriction ‘@’ can be used to search only in a specific URL domain. For example, “DB stands for * @.de” gives concordances only from within the “.de” domain (i.e., German pages). The system determines the language from this domain indicated by the user. Otherwise, the system automatically determines whether the query is in a segmented or unsegmented language from the character set existing within the query. How the system changes the procedure depending on the segmented/unsegmented language is further explained at the end of the next section.
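The four patterns above can be illustrated with a small parser. This is only a sketch under stated assumptions: the names `ParsedQuery`, `parse_query`, and `expand_comparisons` are hypothetical, and the sketch assumes that ‘@’ and ‘+’ each appear at most once and after the phrase pattern, as in the examples given. Tonguen's actual query grammar may differ.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ParsedQuery:
    pattern: str                       # phrase with wild cards and comparisons
    context: Optional[str] = None      # string after '+', if any
    domain: Optional[str] = None       # string after '@', if any
    # One entry per '*': the maximum length, or None when no limit is given.
    wildcard_limits: List[Optional[int]] = field(default_factory=list)


def parse_query(raw: str) -> ParsedQuery:
    # Split off the domain restriction first, then the context restriction.
    body, _, domain = raw.partition("@")
    body, _, context = body.partition("+")
    pattern = body.strip()
    # Each '*' may carry an optional numeric limit, as in '*3'.
    limits = [int(n) if n else None for n in re.findall(r"\*(\d*)", pattern)]
    return ParsedQuery(pattern, context.strip() or None, domain.strip() or None, limits)


def expand_comparisons(pattern: str) -> List[str]:
    # Expand every '(A|B|...)' group into all concrete alternatives.
    match = re.search(r"\(([^()]*)\)", pattern)
    if match is None:
        return [pattern]
    expanded = []
    for alternative in match.group(1).split("|"):
        rewritten = pattern[:match.start()] + alternative + pattern[match.end():]
        expanded.extend(expand_comparisons(rewritten))
    return expanded


if __name__ == "__main__":
    print(parse_query("DB stands for * @.de"))
    print(expand_comparisons("revenir (de|du) (Tokyo|Japon)"))
```

Expanding a comparison into concrete phrases makes it possible to score each alternative separately and then order the alternatives by that score.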

The cognitive basis of the design of such patterns is as follows. A user who has a question regarding language concordance often cannot clearly remember the complete expression, but still has a few clues that can be used to access language data. We expect such vague recollections to typically follow two major patterns: in one, the user lacks the information needed to complete part of a phrase; in the other, the user is uncertain in choosing the correct word from among several candidates. Tonguen is designed so that users can verify such concordances at the tip or the end of the tongue, which led to the system name “Tonguen.”

As noted, another feature of Tonguen is its language-independence. In any language, there is the problem of updating conventional resources to include current language concordances. This problem is more severe for less-international languages. The available dictionaries are usually older, the available corpora are too small for KWIC, and the cycle of revising the data is likely to be longer. Therefore, learners of a foreign language other than English often find that the available language resources are limited, and that they must rely more on search engine results. This is the main reason why we designed Tonguen to be independent of language; that is, Tonguen uses neither language-dependent algorithms nor a language dictionary to analyze search engine results. Tonguen can be interpreted as a dynamic dictionary constructed automatically every time a user wants a concordance.

The first preliminary version of the system based on this philosophy was reported in Tanaka-Ishii and Nakagawa (2005). At that time, however, the method adopted in this preliminary system was not well formulated. Also, the variation of queries was limited to one wild card per query. Thus, this paper can be situated as an overall report on an improved version of the study along the same line.

In the next section, we describe the essential procedure within Tonguen: concordance extraction and ranking.

Candidate extraction

When a wild card is used at the beginning or end of a query, Tonguen needs to cut out the relevant chunk.Footnote 9 As the method for processing the beginning and end of a query is symmetric, candidate extraction to obtain the end part of the query is discussed here. Thus, we only consider the query with the form “A *,” with A being a string.

Note that Tonguen must use a technique other than dictionary-based segmentation, because one characteristic of Tonguen is its language-independent capability. Usually, dictionaries for segmentation are not available for many languages. Also, dictionary-based segmentation is limited, because Tonguen allows queries in various forms in phrases as well as words, and so no dictionaries can provide the segmentation for any given phrases.

Without such a segmentation dictionary, the easiest way of extraction is to cut out a string, or a group of words, of a fixed length. Fixed-length strings are commonly used in the KWIC system for nonsegmented languages. One drawback of using fixed-length strings is that it hinders the aggregation of statistics obtained from data. For example, although “with” is often found after “be acquainted,” all occurrences of “with” cannot be put together if the length is fixed at 10 characters, because there are a variety of strings that can come after “with” such as “with him.” Therefore, we want to extract a candidate with a better boundary.

One way to define a boundary is to segment after a string that is tightly coupled with the query. In fact, the above example of “with” gives a hint as to how this can be done. If text is regarded as a character or word sequence, the variety of successive tokens at each character inside a word monotonically decreases according to the offset length, because the longer the preceding character/word n-gram, the longer the preceding context, and the more it restricts the appearance of possible next tokens. However, the uncertainty at the position of a word/collocation border becomes greater and the complexity increases as the position is further out of that specific context. This suggests that a word border can be detected by focusing on the differentials of the uncertainty of branching. This assumption is illustrated in Fig. 3 in a tree structure for the case of characters for the query “human *.” Examples such as “human nature” or “human behavior” would probably occur. Here, it is easier to guess which character comes after “natura” than after “na,” because the uncertainty is greater for the tokens coming after “na.” On the contrary, the uncertainty at the position of a word border becomes greater, and the complexity increases, as the position is further out of context. With the same example, it is difficult to guess which character comes after “natural.”

Such observations date back to the fundamental work done by Harris (1955), who stated that when the number of different tokens coming after every prefix of a word marks the maximum value, then the location corresponds to the morpheme boundary. Recently, with the increasing availability of corpora, this characteristic underlying language data, which seems to universally govern language, has been applied for unsupervised text segmentation into words and morphemes. Kempe (1999) reports on a small experiment to detect word borders in German and English texts by monitoring the entropy of successive characters for 4-grams. We tested the effectiveness of this technique by formulating the uncertainty as branching entropy in Chinese (Tanaka-Ishii, 2005; Jin and Tanaka-Ishii, 2006), and found that using the increasing point of the entropy of successive characters as the location of a word boundary enabled precision as high as 90%. A thorough verification comparing English and Chinese based on Harris's hypothesis from a more linguistic viewpoint is reported in Tanaka-Ishii and Jin (2006).

The same observation is also valid at the word level. For example, the uncertainty of words coming after the word sequence, “The United States of,” is small (because the word “America” is very likely to occur), whereas the uncertainty is greater for the sequence “information retrieval,” suggesting that there is a context boundary just after this term. This observation at the word level has been applied to term extraction by utilizing the number of different words coming after a word sequence as an indicator of collocation boundaries, as seen in Frantzi and Ananiadou (1996).

As our system is based on a philosophy of not incorporating any language-dependent processes, we mathematically formulate this observation as the branching entropy and use it to extract candidates.

Given a set of elements \(\chi\) and a set of n-gram sequences \(\chi_n\) formed of \(\chi\), the conditional entropy of an element occurring after an n-gram sequence \(X_n\) is defined as

\[H(X|X_n ) = -\sum_{x_n \in \chi_n} P(x_n ) \sum_{x \in \chi} P(x|x_n )\log P(x|x_n ), \tag{1}\]

where \(P(x) = P(X = x)\), \(P(x|x_n ) = P(X = x|X_n = x_n )\), and \(P(X = x)\) indicates the probability of an occurrence of x.

A well-known observation on language data states that \(H(X|X_n )\) decreases as n increases (Bell et al., 1990). This phenomenon indicates that X will become easier to estimate as the context of \(X_n\) gets longer. This can be intuitively understood: it is easy to guess that “e” will follow after “Hello! How ar,” but it is difficult to guess what comes after the short string “H.” The last term, \(-\log P(x|x_n )\), in the above formula indicates the information of a token x coming after \(x_n\), and thus, the branching after \(x_n\). The latter half of the formula, the local entropy value for a given \(x_n\),

\[H(X|X_n = x_n ) = -\sum_{x \in \chi} P(x|x_n )\log P(x|x_n ),\]

indicates the average information of branching for a specific n-gram sequence \(x_n\). As our interest in this paper is this local entropy, we denote \(H(X|X_n = x_n )\) simply as \(h(x_n )\) in the rest of this paper.

The decrease in \(H(X|X_n )\) globally indicates that given an n-length sequence \(x_n\) and another (n + 1)-length sequence \(y_{n+1}\), the following inequality holds on average:

\[h(x_n ) > h(y_{n+1} ). \tag{2}\]

One reason why inequality (2) holds for language data is that there is context in language, and \(y_{n+1}\) carries a longer context than \(x_n\). Therefore, if we suppose that \(x_n\) is the prefix of \(x_{n+1}\), it is very likely that

\[h(x_n ) > h(x_{n+1} ) \tag{3}\]

holds, because the longer the preceding n-gram, the longer the same context. For example, it is easier to guess what comes after \(x_6\) = “natura” than to guess what comes after \(x_5\) = “natur.” Therefore, the decrease in \(H(X|X_n )\) can be expressed as the concept that if the context is longer, the uncertainty of the branching decreases on average. Then, taking the logical contraposition, if the uncertainty does not decrease, the context is not longer, which can be interpreted as the following:

If the entropy of successive tokens increases, the location is at a border.

For example, in the case of \(x_7\) = “natural,” the entropy h(“natural”) should be larger than h(“natura”); i.e., it is uncertain what character will come after \(x_7\). Thus, when

\[h(x_n ) < h(x_{n+1} ), \tag{4}\]

the location of n + 1 is at the boundary.

We thus utilize inequality (4) as the boundary of strings and extract candidates. Precisely, for segmented languages, we detect the boundary with χ being a set of words, whereas for nonsegmented languages, we detect the boundary with χ being a set of characters.

Thus, after procedures 1–3 listed at the beginning of Section 2, strings of fixed length are input to procedure 4. In this stage, the system transforms the data into a trie data structure starting with the query. The trie structure is then traversed in order to detect all places where inequality (4) holds. After that, the whole prefix is cut out so it can be ranked. Next, we explain our ranking method.
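The boundary test can be sketched as follows. This is a minimal illustration rather than Tonguen's implementation: the names `branching_entropy` and `extract_candidates` are hypothetical, a dictionary keyed by prefix tuples stands in for the trie, probabilities are estimated by relative frequencies, and base-2 logarithms are assumed. Each input sequence is the text following the query, given as a list of words (segmented languages) or as a string of characters (nonsegmented languages).

```python
import math
from collections import Counter, defaultdict


def branching_entropy(successors: Counter) -> float:
    """Local entropy h(x_n) estimated from the counts of tokens seen after x_n."""
    total = sum(successors.values())
    return -sum((c / total) * math.log2(c / total) for c in successors.values())


def extract_candidates(sequences, max_len=8):
    """Return {prefix: frequency} for prefixes where the entropy increases.

    A prefix x_{n+1} is kept when h(x_{n+1}) > h(x_n), where x_n is the
    prefix without its last token.
    """
    # successors[prefix] counts the tokens observed right after `prefix`;
    # the empty prefix () stands for the query itself.
    successors = defaultdict(Counter)
    for seq in sequences:
        for n in range(min(len(seq), max_len)):
            successors[tuple(seq[:n])][seq[n]] += 1

    h = {prefix: branching_entropy(nexts) for prefix, nexts in successors.items()}

    candidates = {}
    for prefix in h:
        if not prefix:
            continue
        parent = prefix[:-1]
        if h[prefix] > h[parent]:
            # Frequency of the prefix = how often its last token followed the parent.
            candidates[prefix] = successors[parent][prefix[-1]]
    return candidates


if __name__ == "__main__":
    # Word-level toy data for the query "be acquainted *".
    data = [["with", "him"], ["with", "her"], ["with", "the", "idea"], ["with", "them"]]
    print(extract_candidates(data))
```

In this toy data, “with” is always the first word after the query, so the entropy at the query is zero, while four different words follow “with”; the entropy therefore rises after “with,” and all four occurrences are aggregated under that single candidate.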

Candidate ranking

After candidates are obtained, Tonguen ranks them. The most trivial possibility is to use the frequency. However, whether a candidate is an orderly concordance cannot be judged for a given query by looking at only the frequency of the candidate. For example, terms such as “the” occur frequently; therefore, if we used the simple frequency, “the” would appear as a highly relevant candidate for many queries, which is of course inappropriate. Alternatively, the previous version of Tonguen described in Tanaka-Ishii and Nakagawa (2005) used a naïve ranking method that multiplied the frequency by the logarithm of the candidate length, but this has no mathematical basis.

Our task is to generate concordances for a given query. The problem can be regarded as a task similar to the collocation extraction studied within NLP. We decided to rank candidates by using a statistical test of dependency, based on the likelihood ratio. Among various statistical tests, we used the likelihood ratio test because within the NLP context it has been proposed for collocation extraction (Dunning, 1993).

Precisely, for a given query Q and several concordance candidates \(A_i (i = 0, \ldots ,n)\), the two hypotheses,

- \(H_0\): Q and \(A_i\) are independent,

- \(H_1\): Q and \(A_i\) are not independent,

are tested. Given a likelihood function L(H) for a hypothesis H, the ratio \(\lambda = L(H_0 )/L(H_1 )\) is formed, and \(-2\log \lambda\) is known to follow asymptotically the \(\chi^2\) distribution with one degree of freedom. λ is calculated from the contingency table of frequencies as regards Q and \(A_i\); i.e., a 2 × 2 matrix that indicates frequencies of events of \(Q \cap A_i ,\neg Q \cap A_i ,Q \cap \neg A_i ,\neg Q \cap \neg A_i\).

To conduct statistical tests for all candidates, Tonguen constructs the negative pattern for all candidates. For example, if “A * B *” is the query and one of its concordance candidates is “A Z B X,” then the negative pattern is “* Z * X.” The contingency matrix between the query and the negative pattern is then constructed. This procedure is conducted for all candidates extracted in the previous stage. The candidates are then ranked in the order of the likelihood ratio; i.e., the order of greater dependency on the query.
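A minimal sketch of these two steps follows. The function names are hypothetical, and two assumptions are made that the paper does not state: `negative_pattern` assumes each wild card matches exactly one whitespace-separated token, and `log_likelihood_ratio` uses the standard log-likelihood statistic for a 2 × 2 contingency table, \(2\sum O \ln (O/E)\), which is one common way to compute the likelihood ratio test described by Dunning (1993).

```python
import math


def negative_pattern(query: str, candidate: str) -> str:
    """Swap the roles of fixed tokens and wild cards.

    Assumes each '*' in `query` matched exactly one token of `candidate`.
    """
    q_tokens, c_tokens = query.split(), candidate.split()
    if len(q_tokens) != len(c_tokens):
        raise ValueError("sketch assumes one token per wild card")
    return " ".join(c if q == "*" else "*" for q, c in zip(q_tokens, c_tokens))


def log_likelihood_ratio(k11: int, k12: int, k21: int, k22: int) -> float:
    """Log-likelihood statistic for a 2 x 2 table of frequencies.

    k11 = Q and A, k12 = Q and not A, k21 = not Q and A, k22 = neither.
    Larger values indicate stronger evidence against independence.
    """
    observed = ((k11, k12), (k21, k22))
    rows = (k11 + k12, k21 + k22)
    cols = (k11 + k21, k12 + k22)
    total = rows[0] + rows[1]
    g2 = 0.0
    for i in range(2):
        for j in range(2):
            o = observed[i][j]
            if o > 0:  # a zero cell contributes nothing to the sum
                expected = rows[i] * cols[j] / total
                g2 += o * math.log(o / expected)
    return 2.0 * g2


if __name__ == "__main__":
    print(negative_pattern("A * B *", "A Z B X"))
    # Illustrative counts only, not data from the paper.
    print(log_likelihood_ratio(20, 0, 0, 20))
```

A table whose cells match the independence expectation, such as (10, 10, 10, 10), yields a statistic of zero, so frequent but unspecific candidates such as “the” are not favored merely for being frequent.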

Tonguen examples

Before moving on to our verification of Tonguen's performance, we consider some actual examples of how Tonguen can be used (Table 1). These examples are in seven languages: English, Japanese, French, German, Spanish, Arabic, and Chinese.

The first block of the table involves linguistic concordances, and the bottom half shows a more general use of Tonguen on QA-like queries. The first block includes queries on expressions, vocabulary, and spellings in transliterations. The purpose of the query and the explanation of answers are noted in italic fonts and parenthesized. These examples are often useful to language learners because they only appear in dictionaries in abstracted form and not as concrete examples.

The second block includes examples for the query “wireless *” in three languages. A complete English example for “wireless *” is given in Table 2, in comparison to AltaVista's direct search results. The AltaVista results are shown in terms of the unit of snippets. Note how AltaVista's direct results include company names and concrete examples of instances. By comparison, even though Tonguen summarized only 100 snippets, it gives far clearer overviews of how “wireless” is used: wireless LAN, network, and technologies. Without Tonguen, such an overall view of the concordances of “wireless” could not be obtained.

The last block of Table 1 shows freer uses of Tonguen, such as querying book (film) titles, acronyms, QA-type questions regarding the diameter of the earth or the first name of Vermeer, the impressions of three huge search engine sites, and different variations of the Spanish dish “paella.” Even though half of the queries here are in English (for clarity), the same use is also possible in any other language.

System performance

Experimental settings

A proper evaluation of a system like Tonguen is very difficult, as plausible answers for a query cannot be defined. This is because the answer required by social convention could differ from the actual trends of language evidence on the Internet.

One possibility is to test on a query with a conventionally fixed concordance and see if the correct answer is presented. For example, this could be a query such as the collocation “get acquainted *” to see if “with” appears, or entering “it is no use crying over *” to see if “spilt milk” appears. However, there are two problems with this approach. The first is that such unique expressions are also presented at a higher ranking among snippets when a search engine is used directly. Therefore, there will not be much gain from the effect of accumulation. The second problem is that such queries are difficult to define and collect, because there are always multiple concordances on the Web, and there are also parodies and misuses on the Internet: these are also cases of language evidence. For example, in the case of the colloquial saying “it is no use crying over *,” concordances such as “split milk,” “spilled milk,” “milk,” or even “a lost watch” actually appear. All of these exist as actual concordances, and they cannot be defined as incorrect concordances. Therefore, even when the desired concordance does not appear, this could be due to a mismatch between what is thought of as convention and what actually exists.

Even under such circumstances, we still investigated the effect of accumulation by collecting queries that are likely to have more or less unique answers/concordances. We chose collocations and colloquial sayings for this task because they are conventionally fixed expressions. As the uses of Tonguen could be varied, the generality of conclusions drawn from the evaluation based on collocations and colloquial sayings will remain limited. However, we believe that if Tonguen does not present better results than the direct use of a search engine even for collocations and colloquial sayings, we cannot hope for better results on more varied and general queries, which are usually more ambiguous and more difficult to evaluate properly. Thus, on this basis, we verified the Tonguen results for these two types of expressions.

We took collocations in English and colloquial sayings in English and Japanese with more than three words from dictionaries and language learners' books. Then, for each piece of data, we replaced the head, middle, and tail parts with wild cards. Such replacements were done arbitrarily. We call the generated string with the wild card a query, and the string replaced by the wild card an answer (in the sense of the hoped-for answer from the earlier discussion).

For example, the colloquial saying “it is no use crying over spilt milk” was transformed into three queries: “it is no use crying over *” (the answer is spilt milk), “* crying over spilt milk” (the answer is it is no use), and “it is * crying over spilt milk” (the answer is no use). A summary of this manually created data is listed in Table 3. In total, we had 1075 queries.
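The construction of query/answer pairs can be sketched as below. The helper name `mask_span` and its word-index arguments are assumptions for illustration; in the study, the spans to replace were chosen manually and arbitrarily.

```python
from typing import Tuple


def mask_span(expression: str, start: int, end: int) -> Tuple[str, str]:
    """Replace words[start:end] with a wild card; return (query, answer)."""
    words = expression.split()
    if not 0 <= start < end <= len(words):
        raise ValueError("span must be a non-empty range inside the expression")
    query = " ".join(words[:start] + ["*"] + words[end:])
    answer = " ".join(words[start:end])
    return query, answer


if __name__ == "__main__":
    saying = "it is no use crying over spilt milk"
    print(mask_span(saying, 6, 8))  # tail replaced
    print(mask_span(saying, 0, 4))  # head replaced
    print(mask_span(saying, 2, 4))  # middle replaced
```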

AltaVista was the search engine used for the main experiment. In addition, we used Goo,Footnote 10 Yahoo,Footnote 11 and AllTheWebFootnote 12 in order to verify the generality of the results obtained. We could not use Google, as it only allows a limited number of snippets to be downloaded via the Google API; this limit is so small that any large-scale evaluation was impossible. Although most language learners and linguists who use a search engine as a concordance generator actually use Google, we believe that the need for accumulation of the results remains the same.

Tonguen versus AltaVista

First, we verified the performance of Tonguen by comparing its results with those obtained by AltaVista. The ranking of direct results obtained from AltaVista was considered the baseline ranking. Note that Tonguen's results are accumulated, whereas AltaVista's are not, so the importance of each candidate should not be viewed equally. However, we hoped to see that the ranking improved in Tonguen, and therefore see the effect of summarization.

The ranking of the AltaVista results was defined by the ranking of the first appearance of the answer in the search engine results. The ranking for AltaVista was calculated after careful page analysis and elimination of advertisements. As AltaVista cannot provide exact matching results (as snippets always include results), we compared the ranking of the first output of Tonguen that included the answer, too. We compare the performance for inclusive matches with that for exact matches in Section 6.3.

The effect of Tonguen is first shown in the bar chart in Fig. 4. All results for head, middle, tail, English, Japanese, colloquial sayings, and collocation were averaged to generate these bar charts. The chart shows the rates of wins, draws, and losses for Tonguen and AltaVista. Wins and losses were defined as follows. For a query, Tonguen output the answer at a rank of n, and AltaVista output the answer at a rank of m. If any of the answers were not found, n or m was set to infinity. If n < m, Tonguen was considered to win, and in the opposite case AltaVista won. If n == m, the result was a draw.
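The win/draw/loss definition can be written down directly. In this sketch, the names `first_rank`, `outcome`, and `outcome_rates` are hypothetical, and the inclusive match is implemented as a plain substring test, which is an assumption about how "included the answer" was checked.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def first_rank(ranked_items: Sequence[str], answer: str) -> float:
    """Rank (1-based) of the first item that includes `answer`, else infinity."""
    for rank, item in enumerate(ranked_items, start=1):
        if answer in item:
            return rank
    return math.inf


def outcome(n: float, m: float) -> str:
    """Compare Tonguen's rank n with the baseline rank m (lower is better)."""
    if n < m:
        return "win"
    if n > m:
        return "loss"
    return "draw"  # includes the case where neither system finds the answer


def outcome_rates(rank_pairs: List[Tuple[float, float]]) -> Dict[str, float]:
    counts = Counter(outcome(n, m) for n, m in rank_pairs)
    return {k: counts[k] / len(rank_pairs) for k in ("win", "draw", "loss")}


if __name__ == "__main__":
    # Illustrative ranks only, not data from the paper.
    pairs = [(1, 3), (2, 2), (math.inf, 5), (1, math.inf)]
    print(outcome_rates(pairs))
```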

The numbers at the head of each bar show the number of snippets downloaded. We see that with any setting, Tonguen won against AltaVista. When 1000 snippets were downloaded, Tonguen won against AltaVista 54.4% of the time. Including draws, Tonguen provided an equal or a better result 81.8% of the time. We also see that Tonguen's win rate increased when the number of downloaded snippets increased; i.e., increasing the quantity of downloaded snippets seemed to enhance performance. However, this increase was not large and Tonguen's effect was apparent even when the number of downloaded snippets was small.

The two graphs in Fig. 5 show results of a finer analysis of Tonguen compared with AltaVista results. There are two lines, a solid one for Tonguen and a dashed one for AltaVista. All results for head, middle, tail, English, Japanese, colloquial sayings, and collocation were averaged to generate these plots. The log-scale horizontal axis indicates the number of top N candidates examined. The vertical axis shows the accuracy; i.e., the proportion of queries in which the correct answer occurred within the top N ranked candidates. For the left graph of Fig. 5, the number of snippets downloaded was 100, so the maximum value of N on the horizontal axis is 100.
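The accuracy plotted on the vertical axis can be computed as follows. This is a minimal sketch with made-up ranks; the function name accuracy_at is hypothetical, and a missing answer is again represented by an infinite rank.

```python
import math

def accuracy_at(ranks, n):
    """Proportion of queries whose correct answer is ranked within the top n."""
    return sum(1 for r in ranks if r <= n) / len(ranks)

# Toy ranks of the correct answer for five queries (math.inf = not found).
ranks = [1, 1, 3, 12, math.inf]
for n in (1, 10, 100):
    print(n, accuracy_at(ranks, n))
```

Because a query whose answer is never found can never satisfy r <= n, the curve saturates below 100% whenever such queries exist.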

When N = 1 (where only the top-ranking candidate is verified), Tonguen had an answer rate of 47.5%, while that for AltaVista was 34.4%. When the top 10 candidates were verified, Tonguen had an answer rate of 83.8%, which was again superior to the AltaVista result of 75.7%. As this tool is intended for human use, the answers included among the candidates for small N are important. The accuracy of Tonguen levels off at about N = 10, and this is a clear effect of accumulation.

The orders of the two lines changed when N was between 20 and 30, and this is natural, because when all of the candidates are examined (N = 100), AltaVista reaches the upper bound that Tonguen could achieve. That is, Tonguen cannot summarize data that does not exist within a snippet. According to this graph, the upper bound that Tonguen could obtain was about 94.2%, where the dashed line crosses the vertical axis of N = 100 (at the right end).

The difference between the Tonguen and AltaVista plots at N = 100 indicates the error mostly caused by candidate extraction. In the case of 100 downloaded snippets, this difference amounted to 9.8%. The difference between the plots at N = 1 and at N = 100 for Tonguen indicates the error due to the ranking. This amounted to about 35%. These errors were due to cases where the answers did not occur frequently enough within the snippet for Tonguen to extract and rank them. This defect is caused not only by Tonguen's methods, but also by the query not being appropriate, or by the Web not having enough data concerning the query.

Upon changing the number of downloaded snippets to 1000 (right graph, where the maximum value of the horizontal axis is 1000), we obtained results similar to those with 100 downloaded snippets. The graph shows the effect of using a larger number of snippets. When N = 1, the answer appeared at the top of Tonguen's output in almost half of the cases. The gap between the two lines at N = 1000 also narrowed: AltaVista's upper bound was 97.7% and Tonguen's accuracy was 92.0%, so the extraction error decreased to 5.7%. The crossing of the AltaVista and Tonguen lines occurred at around N = 40.

The harmonic mean of the ranking of the correct answer depending on the number of snippets downloaded is shown in Fig. 6. The horizontal axis shows the number of snippets downloaded, while the vertical axis shows the harmonic mean of the ranking of the answers. There are two lines, with the solid lower line being the Tonguen result and the upper line being the AltaVista result. The harmonic mean averages the reciprocals of the ranks, which is advantageous when some queries yield no result: such ranks are set to infinity and simply contribute zero to the sum of reciprocals.
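A small sketch shows why the harmonic mean remains finite when some ranks are infinite, whereas the arithmetic mean does not. The function name and the toy ranks are hypothetical.

```python
import math

def harmonic_mean_rank(ranks):
    """Harmonic mean of ranks; an infinite rank contributes 1/inf = 0."""
    inverse_sum = sum(1.0 / r for r in ranks)
    if inverse_sum == 0:
        return math.inf  # no query was answered at all
    return len(ranks) / inverse_sum

# Toy ranks for four queries; the last answer was never found.
ranks = [1, 2, 4, math.inf]
print(harmonic_mean_rank(ranks))   # finite
print(sum(ranks) / len(ranks))     # the arithmetic mean is infinite
```

A missing answer still worsens the harmonic mean, because the query is counted in the numerator while adding nothing to the denominator.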

The harmonic mean of the ranking for Tonguen was consistently better than that of AltaVista. According to our analysis of actual ranks, the user should seek targets up to several tens of candidates for the case of AltaVista; even when a candidate appears, it will be no more than an instance. In contrast, with Tonguen, the user has to seek very few candidates, which are reliable in the sense that they are accumulated. This difference suggests the effect of summarization.

Last, we examined the difference in the results when we changed the kinds of data. Collocations and colloquial sayings differ in that queries for collocation require only one word, whereas those for colloquial sayings require multiple words. English and Japanese differ in that data on the Internet is far more abundant for English than for Japanese. We show this result through win/lose bar charts similar to that shown for the first evaluation of this section.

Figure 7 shows bar charts for collocations in English and colloquial sayings in English and Japanese. There are three blocks, the first for collocations, the second for colloquial sayings in English, and the third for colloquial sayings in Japanese, each with the number of downloaded snippets varying from 50 to 1000.

Tonguen outperformed AltaVista in all cases. Comparing collocation and colloquial sayings in English (the first and second blocks of Fig. 7), we see that the results for collocations were more accurate than those for colloquial sayings. This was because far more data was available for collocation than for colloquial sayings. Colloquial sayings are lengthy expressions and their use within the Internet is limited. This also leads to larger rates of draws with the correct answer being proposed at rank 1.

As for the language differences, we compared the results for colloquial sayings in English and Japanese (the second and third blocks in Fig. 7). We see that the results for English were better than those for Japanese. When the number of downloaded snippets was 50, Tonguen's win rate was comparable to that of AltaVista. This was again due to English being the most commonly used language. According to the Global Reach Web site (Footnote 13), English content accounts for 68.4% of the 313 billion pages of the Web, whereas Japanese accounts for only 5.9%. This difference is significant. A similar outcome was reported in Tanaka-Ishii and Nakagawa (2005), where one of the languages was French; results for French, which accounts for just 3.0% of the Web content, were worse than those for either English or Japanese. Thus, Tonguen's performance reflects the amount of data on the Internet. We can also say that even with small amounts of data, concordance generation by summarization outperforms the use of AltaVista.

Comparison among various Tonguen settings

First, we verified the difference for the candidate extraction method. In our previous system, reported in Tanaka-Ishii and Nakagawa (2005), the candidate borders are detected at increasing points of the branching number, rather than at increasing points of entropy. Precisely, the function h in inequality (4) in Section 3 is replaced by another function g, which counts the number of kinds of successive tokens in the trie structure. The left part of Fig. 8 shows the result.

Extraction by entropy outperformed that with the branching number when N was small, though the order was reversed at higher N. Finally, the branching number converged to a score slightly closer to the upper bound. The critical queries that are better extracted by the branching number are those with lower frequencies. Therefore, if the counts are small, branching performs better. When sufficient statistics are obtained, however, entropy is better. This is natural given the statistical nature of entropy. As users are more interested in higher performance at smaller N, we adopted entropy in Tonguen.
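The two boundary indicators compared here can be illustrated on toy data. The sketch below assumes whitespace-tokenized snippets held in plain lists rather than a trie, and the function names are hypothetical; it only computes the two quantities for a given prefix and does not reproduce the boundary test of inequality (4).

```python
import math
from collections import Counter

def successor_counts(snippets, prefix):
    """Count the tokens that immediately follow `prefix` in the snippets."""
    counts = Counter()
    k = len(prefix)
    for tokens in snippets:
        for i in range(len(tokens) - k):
            if tokens[i:i + k] == prefix:
                counts[tokens[i + k]] += 1
    return counts

def branching_entropy(counts):
    """Entropy (in bits) of the successor distribution."""
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

def branching_number(counts):
    """Number of distinct kinds of successor tokens."""
    return len(counts)

# Toy snippets, not real search results.
snippets = [s.split() for s in (
    "kick the bucket", "kick the bucket", "kick the ball", "kick the habit",
)]
for prefix in (["kick"], ["kick", "the"]):
    c = successor_counts(snippets, prefix)
    print(prefix, branching_number(c), branching_entropy(c))
```

In this toy case both quantities increase when the prefix grows from "kick" to "kick the". The entropy additionally weights each successor by its frequency, which fits the observation that it needs sufficient counts to be reliable.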

Another result is the difference when adopting exact matches to calculate the accuracy. Thus far, candidates have been considered accurate when answers were included in the proposed candidate to make the comparison as equal as possible regarding the performance of AltaVista. Exact matches indicate the accuracy of the segmentation technique discussed in Section 3. The right part of Fig. 8 shows the result. Of course, the accuracy of exact matches decreased, but this margin was small: 4–5%. This shows that our extraction process is accurate together with the ranking process. Given that we use no language-specific application software, the method of candidate extraction has worked fairly well.

Different search engines

Second, we verified the commonness of candidates for different search engines. The results are shown in Fig. 9. The horizontal axis shows values of N up to 100, while the vertical axis indicates the percentage of common candidates. First, we obtained Tonguen results for four different search engines: Goo, Yahoo, AltaVista, and AllTheWeb. Then, for the results from all search engine pairs (six pairs, $\binom{4}{2} = 6$), we determined the rate of common candidates and averaged these into one score. This procedure was done for different values of N and different numbers of downloaded snippets.

We show four lines, corresponding to the different numbers of downloaded snippets: 1000, 500, 300, and 100. Here, we see that 68.9–74.1% of the candidates were the same between two search engines when the best-ranking candidate was verified. This fell to a range of 52.6–62.2% when the top five candidates were examined, and to one of about 48.2–57.4% when N = 10. We can also see the effect of the data amount: the more data, the more common the results, which was due to the accumulation process in Tonguen.
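The averaging over engine pairs can be sketched as follows. The rate of common candidates is assumed here to be the size of the intersection of the two top-N lists divided by N; this definition, the function names, and the toy candidate lists are illustrative assumptions only.

```python
from itertools import combinations

def common_rate(a, b, n):
    """Share of the top-n candidates that two ranked lists have in common."""
    return len(set(a[:n]) & set(b[:n])) / n

def mean_pairwise_common_rate(results, n):
    """Average the common rate over all unordered pairs of engines."""
    pairs = list(combinations(results, 2))
    return sum(common_rate(results[x], results[y], n) for x, y in pairs) / len(pairs)

# Toy ranked candidates per engine, not actual Tonguen output.
results = {
    "Goo": ["a", "b", "c"],
    "Yahoo": ["a", "c", "d"],
    "AltaVista": ["a", "b", "d"],
    "AllTheWeb": ["b", "a", "c"],
}
for n in (1, 3):
    print(n, mean_pairwise_common_rate(results, n))
```

With four engines, combinations yields the six unordered pairs mentioned above.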

Processing time

As Tonguen relies on search engines, we measured the processing time required by Tonguen. Figure 10 shows the results, with the horizontal axis indicating the number of downloaded snippets from 50 to 1000 while the vertical axis indicates the elapsed time in seconds.

There are two procedures related to Tonguen: the page download time in communication with AltaVista (t_download), and the processing time of the pages thus downloaded (t_tonguen). In the figure, the upper line shows the sum of the two (t_download + t_tonguen), whereas the lower line shows t_tonguen. The upper line therefore shows the actual time the user has to wait for the results to appear.

The time in both cases changed linearly according to the number of pages downloaded from AltaVista. When 1000 snippets were processed, the total waiting time for the user was about 40 s, whereas if the number of pages was small (up to 200), Tonguen's response was less than 10 s.

We can further reduce t_tonguen by adopting a more sophisticated matching algorithm. Within the current Tonguen, the pattern matching (as explained in Sections 3 and 4) is done pattern by pattern. However, some methods enable efficient pattern matching that is done all at once (Chan et al., 2003). The application of such algorithms to Tonguen will be a significant task, though, and remains for our future work.

As for t_download, as long as we use the system architecture shown in Fig. 1, which incorporates the basic idea and the key technique of Tonguen for dynamically generating concordances, the user has to wait a certain downloading time. Still, this time can be reduced by downloading pages via a direct communication line with the search engine server, which exists within search engine companies. We are making efforts to complete business contracts that will enable greater speed in this way.

User evaluation

As noted in the Introduction and in Section 5, Tonguen can be used for other tasks, apart from concordance consultation, that benefit from accumulation. However, it is difficult to test such general cases in an automatic manner, as the accuracy will depend on the query. Therefore, for the predecessor system to Tonguen reported in Tanaka-Ishii and Nakagawa (2005) (which we call the pre-Tonguen system in this section), a user test was done using TREC-like questions. Note that the current Tonguen includes the functionality of the prior system. Also, Tonguen performs better than the prior system regarding the candidate extraction procedure (as shown in Fig. 8) and has a more mathematically sound procedure for both candidate extraction and ranking (as explained in Section 4). Even so, the user performance of our current system does not differ that greatly from the pre-Tonguen system, especially for QA-like tasks, and here we summarize our earlier results from Tanaka-Ishii and Nakagawa (2005).

Our subjects were 20 Japanese students from the computer science field, all of whom had been using the Internet for more than 5 years. Each of the students was asked to answer TREC-like questions in Japanese by using either AltaVista or pre-Tonguen. The 32 questions were constructed based on Website usability test guidelines from Spool (1998). Examples of the questions are shown in Fig. 11. The questions were divided into two groups of 16. Ten students answered the first group using pre-Tonguen and the second group with AltaVista, whereas the other 10 students answered the second group with pre-Tonguen and the first group with AltaVista. Each student thus answered all 32 questions, using pre-Tonguen and AltaVista in turn. With both AltaVista and pre-Tonguen, the students were asked to create their own queries to answer the questions. They were allowed to refine these queries while searching for the answers. They were also allowed to use “|” (comparison) and “+” (AND search) with pre-Tonguen and the full functionality of query construction with AltaVista.

The results are shown in Table 4. The respective blocks indicate the time, number of clicks, confidence score, and accuracy for pre-Tonguen and AltaVista, while the columns represent the averages and standard deviations of the values for 10 subjects. Pre-Tonguen outperformed AltaVista in all categories: users could get more accurate answers faster, with fewer clicks and more confidence. Although the method of formulating a query was totally up to the students when using either system, the students could get answers more quickly with less action with pre-Tonguen. Additionally, the deviations were smaller for pre-Tonguen, meaning that many of the users could use pre-Tonguen in a more stable manner. We believe this benefit results from pre-Tonguen's summarization of the search engine results helping users to think based on the arranged information.

The performance of pre-Tonguen was lower for two types of questions. The first type was a question whose answer was not available on the Web: in this case, the users could not obtain the correct answer. The subjects who used AltaVista had the same problem with this sort of question. The second type was a question that could not be expressed in the query form supported by pre-Tonguen, which allowed only one wild card per query. When the subjects had a question like No. 4 from Fig. 11, they faced the problem of not being able to enter several wild cards. Therefore, in the current Tonguen we modified this so that any number of wild cards can be put into a query.

Conclusion

Tonguen is a real-time concordance generation tool based on search engines. When the user enters a string of words to check concordances, the system sends the query to a search engine to obtain data related to the string. The corpus is then statistically analyzed, and the results are displayed.

Our system differs from existing systems in two ways. First, users may enter more flexible queries than with other existing, related software. Second, Tonguen is language independent, so queries can be made in any language if the search engine supports that language. This is achieved by string-based processing of the candidate extraction and ranking. We formalized the extraction by using the branching entropy and ranked candidates based on a statistical test of the likelihood ratio.

According to our evaluation, about half of the missing parts of expressions were provided by the highest-ranked candidates when we tested English and Japanese colloquial sayings and collocations. Tonguen uniformly outperformed AltaVista. When the top 10 candidates were considered, around 85–90% of the answers were found. Moreover, the average ranking of the answer was smaller compared with the ranking obtained from AltaVista. We also found that our extraction process using the branching entropy and the ranking using the statistical test provided stable and high performance.

There are several future directions for our work. The first is to refine each component of the system, such as including a wider variety of query patterns. The second is to apply our method to other search systems, such as KWIC or desktop search. Through such studies, we hope to generate dynamic concordances that are truly useful for language learners. In addition, we wish to conduct studies with actual language learners as they perform real language learning tasks.

Notes

1. http://www.collins.co.uk/Corpus/CorpusSearch.aspx

2. http://www.concordancesoftware.co.uk/

3. http://www.webcorp.org.uk/

4. http://www.googlefight.com/

5. http://www.googleduel.com/original.php

6. http://www.ish.ci.i.u-tokyo.ac.jp/tonguen/

7. http://www.webcorp.org.uk/

8. http://www.googlefight.com/

9. When it is used in the middle of a query, the boundary can be straightforwardly determined.

10. http://www.goo.ne.jp/

11. http://search.yahoo.com/

12. http://www.alltheweb.com/

13. http://www.glreach.com/

References

Bell, T., Cleary, J., & Witten, I. (1990). Text compression. Englewood Cliffs, NJ: Prentice-Hall.

Brill, E., Lin, J., Banko, M., Dumais, S., & Ng, A. (2001). Data-intensive question answering. In Proceedings of the TREC (pp. 393–400).

Cafarella, M., & Etzioni, O. (2005). A search engine for large-corpus language applications. In Proceedings of the WWW Conference (pp. 442–452).

Chan, C., Garofalakis, M., & Rastogi, R. (2003). RE-tree: An efficient index structure for regular expressions. The VLDB Journal, 12, 102–119.

Crochemore, M., & Rytter, W. (2002). Jewels of stringology. Singapore: World Scientific Press.

Dumais, S., Banko, M., et al. (2002). Web question answering: Is more always better? In Proceedings of the SIGIR Conference (pp. 291–298).

Dunning, T. (1993). Accurate methods for the statistics of surprise and coincidence. Computational Linguistics, 19(1), 61–74.

Frantzi, T., & Ananiadou, S. (1996). Extracting nested collocations. In Proceedings of the 16th COLING (pp. 41–46).

Harris, Z. S. (1955). From phoneme to morpheme. Language, 31, 190–222.

Jin, Z., & Tanaka-Ishii, K. (2006). Unsupervised segmentation of Chinese text by use of branching entropy. In Proceedings of the International Conference on Computational Linguistics and Annual Meeting of the Association for Computational Linguistics (COLING/ACL) (pp. 428–435).

Kempe, A. (1999). Experiments in unsupervised entropy-based corpus segmentation. In Proceedings of the Workshop of EACL in Computational Natural Language Learning (pp. 7–13).

Kwok, C., Etzioni, O., & Weld, D. (2001). Scaling question answering to the Web. In Proceedings of the WWW Conference (pp. 150–161).

Levy, M. (1997). Computer assisted language learning. London: Oxford University Press.

Manber, U., & Myers, G. (1990). Suffix arrays: A new method for on-line string searches. In Proceedings of the ACM-SIAM Symposium on Discrete Algorithms (pp. 319–327).

Resnik, P., & Elkiss, A. (2005). The linguist's search engine: An overview. In Proceedings of the ACL Conference (pp. 150–161).

Sinclair, J. (2004). English usage. Collins Cobuild.

Spool, M. (1998). Web Site Usability: A Designer's guide. San Mateo, CA: Morgan Kaufmann Publishers.

Tanaka-Ishii, K. (2005). Entropy as an indicator of context boundaries—an experiment using a Web search engine. In Proceedings of the International Joint Conference on Natural Language Processing (pp. 93–105).

Tanaka-Ishii, K., & Jin, Z. (2006). From phoneme to morpheme—another verification using a corpus. Journal of Corpus Linguistics and Linguistic Theory, in press.

Tanaka-Ishii, K., & Nakagawa, H. (2005). A multilingual usage consultation tool based on Internet searching—more than a search engine, less than QA. In Proceedings of the WWW Conference (pp. 363–371).

Trask, R. (1999). Key concepts in language and linguistics. Evanston, IL: Routledge.

Zaphiris, P., & Zacharia, G. (2006). User-centered computer aided language learning. Hershey, PA: Idea Group.

Cite this article

Tanaka-Ishii, K., Ishii, Y. Multilingual phrase-based concordance generation in real-time. Inf Retrieval 10, 275–295 (2007). https://doi.org/10.1007/s10791-006-9021-5

DOI: https://doi.org/10.1007/s10791-006-9021-5