Abstract

In recent years, graph-ranking based algorithms have been proposed for single-document summarization and generic multi-document summarization. These algorithms use the “votes” or “recommendations” between sentences to evaluate the importance of the sentences in the documents. This study aims to differentiate the cross-document and within-document relationships between sentences for generic multi-document summarization, and to adapt the graph-ranking based algorithm to topic-focused summarization. The contributions of this study are two-fold. (1) For generic multi-document summarization, we apply the graph-ranking based algorithm to each kind of sentence relationship separately and explore their relative importance for summarization performance. (2) For topic-focused multi-document summarization, we propose to integrate the relevance of the sentences to the specified topic into the graph-ranking based method, and each kind of sentence relationship is again differentiated and investigated in the algorithm. Experimental results on the DUC 2002–DUC 2005 data demonstrate the great importance of the cross-document relationships between sentences for both generic and topic-focused multi-document summarization. Even the approach based only on the cross-document relationships performs better than, or at least as well as, the approaches based on both kinds of relationships between sentences.


1 Introduction

Text summarization is the process of automatically creating a compressed version of a given text that provides useful information to users. Multi-document summarization aims to produce a summary that delivers the majority of the information content of a set of documents about an explicit or implicit main topic. Generally speaking, multi-document summarization can be either generic or topic-focused. Topic-focused summarization differs from generic summarization in that it is given a topic description (e.g. a user profile or a user query). The summary it creates from the documents must either answer the information need expressed in the topic or explain the topic.

Automatic multi-document summarization has drawn much attention in recent years and has been widely used in many web applications. A multi-document summary can concisely describe the information contained in a cluster of documents and help users understand the cluster. For example, a number of web news services, such as Google NewsFootnote 1 and NewsBlaster,Footnote 2 have been developed to group news articles into news topics and then produce a short summary for each news topic. Users can easily grasp a topic of interest by reading its short summary. Topic-focused summaries can be used to provide personalized services once user profiles have been provided manually or automatically. The above news services can be personalized by collecting users’ profiles and delivering both the related news articles and a news summary biased toward the profile of the specific user. Other examples include question answering systems, in which a question-focused summary is usually required to answer the information need expressed in the question.

A particular challenge for multi-document summarization is that the document set might contain much information unrelated to the main topic. Hence we need effective summarization methods to analyze the information stored in different documents and extract the important information related to the main topic. In other words, a good summary is expected to preserve the globally important information contained in the documents as much as possible, and at the same time keep the information as novel as possible.

Topic-focused multi-document summarization faces two challenges. The first is shared with generic multi-document summarization: the globally important information must be extracted and merged. The second is specific to topic-focused summarization: the information in the summary must be biased toward the given topic, so the summarization method must take this topic-biasing requirement into account. In brief, a good topic-focused summary should preserve as much of the information contained in the documents as possible and keep that information as novel as possible. Moreover, the information must be biased toward the given topic.

Both generic and topic-focused multi-document summarizations have been widely explored in the natural language processing and information retrieval communities. A series of workshops and conferences on automatic text summarization (e.g. NTCIR,Footnote 3 DUCFootnote 4), special topic sessions in ACL, COLING, and SIGIR have advanced the technology and produced a couple of experimental online systems.

In recent years, graph-ranking based methods (Mani and Bloedorn 1999; Erkan and Radev 2004a, b; Mihalcea and Tarau 2005) have been proposed for generic multi-document summarization based on sentence relationships. All these methods make use of the relationships between sentences and select sentences according to the “votes” or “recommendations” from their neighboring sentences, in a manner similar to PageRank (Page et al. 1998) and HITS (Kleinberg 1999). However, none of these methods differentiates between the two kinds of relationships between sentences, i.e. the cross-document relationships and the within-document relationships. They all implicitly assume that the two kinds of sentence relationships are equally important, which is in fact inappropriate.

In this study, we investigate the relative importance of the cross-document and within-document relationships between sentences in an extended graph-ranking based approach. The approach extends previous work by treating each kind of sentence relationship as a separate “modality” and computing the information richness of sentences based on each modality. The approach also applies a diversity penalty process to maintain the novelty of the summary. Experiments on DUC 2002 and DUC 2004 show that the cross-document relationships between sentences are very important for multi-document summarization. The system based only on the cross-document relationships always performs better than, or at least as well as, the systems based on both the cross-document and within-document relationships between sentences.

Furthermore, we extend the graph-ranking based method to topic-focused summarization by integrating the relevance of the sentences to the specified topic. This can be considered a topic-sensitive version of the random walk model. Likewise, the relative importance of the cross-document and within-document relationships between sentences is investigated. Experiments on DUC 2003 and DUC 2005 show that the proposed graph-ranking based approach using only the cross-document relationships between sentences outperforms the top performing summarization approaches and the baseline approaches. These include the graph-ranking based approach using both the cross-document and within-document relationships. This confirms that the cross-document relationships between sentences are very important for topic-focused multi-document summarization.

The rest of this paper is organized as follows. Section 2 introduces related work. The proposed graph-ranking based approaches for generic and topic-focused multi-document summarization are presented in Sect. 3. The experiments and results are given in Sect. 4. Lastly, we conclude the paper in Sect. 5.

2 Related works

2.1 Generic multi-document summarization

A variety of summarization methods have been developed recently. Generally speaking, the methods can be classified as extractive, abstractive, or hybrid. Extractive summarization is a simple but robust approach: it assigns saliency scores to units of the documents (e.g. sentences, paragraphs) and then extracts the units with the highest scores. Abstractive summarization (e.g. NewsBlaster) usually requires information fusion (Barzilay et al. 1999), sentence compression (Knight and Marcu 2002) and reformulation (McKeown et al. 1999). In this study, we focus on extractive summarization.

The centroid-based method (Radev et al. 2004) is one of the most popular extractive summarization methods. MEAD (Radev et al. 2003) is an implementation of the centroid-based method. It scores sentences using sentence-level and inter-sentence features, including cluster centroids, position, and TF*IDF. NewsInEssence, an online system built on MEAD, was developed to summarize online news articles.

NeATS (Lin and Hovy 2002) is a multi-document summarization project at ISI based on the single-document summarizer SUMMARIST. Sentence position, term frequency, topic signatures and term clustering are used to select important content. Stigma word filters and time stamps are used to improve cohesion and coherence.

XDoX (Hardy et al. 2002) is a cross-document summarizer designed specifically to summarize large document sets. It identifies the most salient themes within the set by clustering passages based on n-gram matching, and then composes an extraction summary that reflects these main themes. Many other works have also explored finding topic themes in documents for summarization. For example, Harabagiu and Lacatusu (2005) investigate five different topic representations and introduce a novel representation of topics based on topic themes. Nenkova et al. (2006) thoroughly study the contribution to summarization of three factors related to frequency:

- content word frequency;
- composition functions for estimating sentence importance from word frequency;
- adjustment of frequency weights based on context.

They show that a frequency-based summarizer can achieve performance comparable to that of state-of-the-art systems, but only with a good composition function. Zhang et al. (2002) propose to use Cross-document Structure Theory (CST) to enhance an arbitrary multi-document extract. Their method replaces low-salience sentences with other sentences that increase the total number of CST relationships included in the summary. Sentence ordering for multi-document summarization has also been explored to make summaries coherent and readable (Bollegala et al. 2006; Ji and Pulman 2006).

Graph-ranking based methods have recently been proposed to rank sentences or passages. An earlier summarization method (Salton et al. 1997) generates intra-document links between the passages of a document and characterizes the structure of the document by this linkage pattern. It then applies this knowledge of text structure to passage extraction. Websumm (Mani and Bloedorn 1999) uses a graph-connectivity model and operates under the assumption that nodes connected to many other nodes are likely to carry salient information. LexRank (Erkan and Radev 2004a, b) computes sentence importance based on the concept of eigenvector centrality. It constructs a sentence connectivity matrix and computes sentence importance with an algorithm similar to PageRank (Page et al. 1998). Mihalcea and Tarau (2005) also propose similar algorithms based on PageRank and HITS (Kleinberg 1999) to compute sentence importance for single-document summarization. For multi-document summarization, they use a meta-summarization process that summarizes the meta-document formed by assembling the single-document summaries of all documents. Instead of ranking sentences, Li et al. (2006) propose a novel approach that derives event relevance from the documents, where an event is defined as one or more event terms together with the associated named entities. They then apply the PageRank algorithm to estimate the significance of an event for inclusion in a summary.

The above graph-based methods make uniform use of all kinds of sentence relationships. They rely on the inaccurate assumption that the cross-document and within-document relationships between sentences are equally important for multi-document summarization. In this study, we extend the above graph-based work by differentiating the two kinds of relationships between sentences and thoroughly investigating their relative importance for generic multi-document summarization.

2.2 Topic-focused document summarization

Most topic-focused document summarization methods integrate information about the given topic or query into generic summarizers and extract sentences suited to the user’s declared information need. In Saggion et al. (2003), a simple query-based scorer that computes the similarity between each sentence and the query is incorporated into a generic summarizer to produce a query-based summary. The query words and named entities in the topic description are exploited in Ge et al. (2003) and CLASSY (Conroy and Schlesinger 2005) for event-focused/query-based multi-document summarization. In Hovy et al. (2005), important sentences are selected based on the scores of basic elements (BE). CATS (Farzindar et al. 2005) is a topic-oriented multi-document summarizer that first performs a thematic analysis of the documents and then matches these themes with those identified in the topic. BAYESUM (Daumé and Marcu 2006) extracts sentences by comparing query models against sentence models within the language modeling framework for IR.

Maximal marginal relevance (MMR) (Carbonell and Goldstein 1998) combines query relevance with information novelty in the context of text retrieval and summarization. It works greedily, reducing redundancy while maintaining query relevance, both when re-ranking retrieved documents and when selecting passages for a summary. MMR has been widely used to remove redundancy in both generic and topic-focused summaries.
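The following minimal Python sketch makes the greedy MMR criterion concrete, and can be contrasted with the diversity penalty used in Sect. 3.4. It assumes precomputed query-relevance scores `rel` and a pairwise similarity matrix `sim`. The function name `mmr_select` and the trade-off value `lam=0.7` are illustrative assumptions, not taken from Carbonell and Goldstein (1998) or from any particular summarizer.

```python
def mmr_select(rel, sim, k, lam=0.7):
    """Greedy Maximal Marginal Relevance selection (illustrative sketch).

    rel[i]    -- relevance of item i to the query
    sim[i][j] -- similarity between items i and j
    Each step picks the unselected item maximizing
        lam * rel[i] - (1 - lam) * max_{j already selected} sim[i][j].
    """
    selected = []
    candidates = set(range(len(rel)))

    def mmr_score(i):
        # Redundancy is 0 before anything has been selected.
        redundancy = max((sim[i][j] for j in selected), default=0.0)
        return lam * rel[i] - (1 - lam) * redundancy

    while candidates and len(selected) < k:
        # Ties are broken in favour of the lower index.
        best = max(candidates, key=lambda i: (mmr_score(i), -i))
        candidates.remove(best)
        selected.append(best)
    return selected


# Items 0 and 1 are near-duplicates; item 2 is less relevant but novel.
rel_scores = [0.9, 0.85, 0.5]
sim_matrix = [[1.0, 0.95, 0.1],
              [0.95, 1.0, 0.1],
              [0.1, 0.1, 1.0]]
print(mmr_select(rel_scores, sim_matrix, k=2))           # -> [0, 2]
print(mmr_select(rel_scores, sim_matrix, k=2, lam=1.0))  # -> [0, 1]
```

With `lam=1.0` the redundancy term vanishes and selection falls back to pure relevance ranking. This is why the near-duplicate item 1 is chosen in the second call.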

In this study, we extend the above graph-ranking based methods for topic-focused multi-document summarization by (1) integrating the relevance of the sentences to the topic in the algorithm by using the topic-sensitive PageRank (Haveliwala 2002), similar to the work for question-focused sentence retrieval (Otterbacher et al. 2005); (2) differentiating the two kinds of relationships between sentences and using only the cross-document relationships between sentences in the graph-ranking based algorithm.

3 The proposed approach

3.1 Overview

The proposed approaches to generic and topic-focused multi-document summarization share the same framework. This framework extends previous graph-ranking based summarization methods (Mani and Bloedorn 1999; Erkan and Radev 2004a, b; Mihalcea and Tarau 2005) and consists of the following three steps:

(1) Different affinity graphs are built to reflect the different kinds of relationships between the sentences in the document set. For topic-focused summarization, the relevance of each sentence to the topic is also computed.

(2) The information richness of the sentences is computed based on each affinity graph. The final information richness of a sentence is either one of these computed scores or a linear combination of them. For topic-focused summarization, the biased information richness of the sentences is computed based on each affinity graph instead.

(3) Based on the whole affinity graph and the information richness scores, a diversity penalty is imposed on the sentences. This yields for each sentence an affinity rank score that reflects both its information richness and its information novelty.

The sentences with high affinity rank scores are chosen to produce the summary.

The formal definitions of (biased) information richness and information novelty are given as follows:

- Information richness: Given a sentence collection \( S = \{ s_i \mid 1 \le i \le n \} \), the information richness \( InfoRich(s_i) \) denotes the information degree of sentence \( s_i \). This is the richness of information contained in \( s_i \) with respect to the entire collection S.

- Biased information richness: Given a sentence collection \( S = \{ s_i \mid 1 \le i \le n \} \) and a topic T, the biased information richness of a sentence \( s_i \) denotes the information degree of \( s_i \) with respect to both the sentence collection and T. This is the richness of information contained in \( s_i \), biased toward T.

- Information novelty: Given a set of sentences in the summary \( R = \{ s_i \mid 1 \le i \le m \} \), the information novelty \( InfoNov(s_i) \) measures the novelty degree of the information contained in \( s_i \) with respect to all other sentences in R.

The aim of the proposed approaches is to include the sentences with both high information richness and high information novelty in the generic summary, and the sentences with both high biased information richness and high information novelty are included in the topic-focused summary.

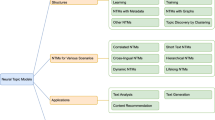

Figure 1 shows the framework of the summarization approach. Note that the modules in the dashed box (top-right corner) are used only for topic-focused summarization.

3.2 Affinity graph building

3.2.1 Generic multi-document summarization

Given a sentence collection \( S = \{ s_i \mid 1 \le i \le n \} \), where n is the size of the sentence collection, the affinity weight \( aff(s_i, s_j) \) between a sentence pair \( s_i \) and \( s_j \) is calculated using the standard Cosine measure (Baeza-Yates and Ribeiro-Neto 1999) as follows:

$$ aff(s_i, s_j) = \cos(\vec{s}_i, \vec{s}_j) = \frac{\vec{s}_i \cdot \vec{s}_j}{\|\vec{s}_i\| \, \|\vec{s}_j\|} $$ (1)

where \( \vec{s}_i \) and \( \vec{s}_j \) are the corresponding term vectors of \( s_i \) and \( s_j \). The weight associated with term t is calculated with the \( tf_t \cdot isf_t \) formula. Here \( tf_t \) is the frequency of term t in the corresponding sentence, and \( isf_t \) is the inverse sentence frequency of term t, i.e. \( 1 + \log(N/n_t) \), where N is the total number of sentences and \( n_t \) is the number of sentences containing term t.
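The affinity computation above can be sketched in Python as follows. This minimal illustration assumes that sentences have already been tokenized, stop-worded and stemmed into lists of terms. It also assumes the natural logarithm for isf, since the base is not stated here. The helper names `isf_weights`, `tfisf_vector` and `cosine` are hypothetical.

```python
import math
from collections import Counter


def isf_weights(sentences):
    """isf_t = 1 + log(N / n_t) for every term occurring in the collection."""
    n_sentences = len(sentences)
    sentence_freq = Counter(term for s in sentences for term in set(s))
    return {t: 1.0 + math.log(n_sentences / n_t) for t, n_t in sentence_freq.items()}


def tfisf_vector(tokens, isf):
    """Sparse term vector with weight tf_t * isf_t (unknown terms get weight 0)."""
    return {t: tf * isf.get(t, 0.0) for t, tf in Counter(tokens).items()}


def cosine(u, v):
    """Standard cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # an empty sentence gets no links
    return dot / (norm_u * norm_v)


sentences = [["graph", "rank", "sentenc"], ["graph", "summari"], ["topic", "queri"]]
isf = isf_weights(sentences)
vecs = [tfisf_vector(s, isf) for s in sentences]
aff_01 = cosine(vecs[0], vecs[1])  # shares the term "graph"
aff_02 = cosine(vecs[0], vecs[2])  # no shared terms, so 0.0
print(round(aff_01, 3), aff_02)
```

Mapping zero-norm vectors to an affinity of 0 is a choice made for this sketch: such sentences simply receive no links in the graph.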

If sentences are considered as nodes, the sentence collection can be modeled as an undirected graph by generating a link between two sentences whenever their affinity weight exceeds 0. That is, an undirected link between \( s_i \) and \( s_j \) \( (i \ne j) \) with affinity weight \( aff(s_i, s_j) \) is constructed if \( aff(s_i, s_j) > 0 \); otherwise no link is constructed. Thus, we construct an undirected graph G that reflects the semantic relationships between sentences through their content similarity. The graph G contains all kinds of links between sentences and is called the Whole Affinity Graph. We use an adjacency (affinity) matrix M to describe G, with each entry corresponding to the weight of a link in the graph. \( M = (M_{i,j})_{n \times n} \) is defined as follows:

$$ M_{i,j} = \begin{cases} aff(s_i, s_j), & \text{if } i \ne j \\ 0, & \text{otherwise} \end{cases} $$ (2)

Then M is normalized to \( \tilde{M} \) as follows to make the sum of each row equal to 1:

$$ \tilde{M}_{i,j} = \begin{cases} M_{i,j} \Big/ \sum_{k=1}^{n} M_{i,k}, & \text{if } \sum_{k=1}^{n} M_{i,k} \ne 0 \\ 0, & \text{otherwise} \end{cases} $$ (3)

Similarly, two further affinity graphs, \( G_{\text{intra}} \) and \( G_{\text{inter}} \), are built:

- the within-document affinity graph \( G_{\text{intra}} \) includes only within-document links between sentences (the entries of cross-document links are set to 0);
- the cross-document affinity graph \( G_{\text{inter}} \) includes only cross-document links between sentences (the entries of within-document links are set to 0).

Given a sentence pair \( s_i \) and \( s_j \), if \( s_i \) and \( s_j \) belong to different documents, the link between them is a cross-document link (relationship); otherwise, it is a within-document link (relationship). The adjacency (affinity) matrices of \( G_{\text{intra}} \) and \( G_{\text{inter}} \) are denoted by \( M_{\text{intra}} \) and \( M_{\text{inter}} \), respectively. Both can be extracted from M, and \( M = M_{\text{intra}} + M_{\text{inter}} \). As in Eq. 3, \( M_{\text{intra}} \) and \( M_{\text{inter}} \) are normalized to \( \tilde{M}_{\text{intra}} \) and \( \tilde{M}_{\text{inter}} \), respectively, so that each row sums to 1.
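The following minimal sketch builds M, \( M_{\text{intra}} \) and \( M_{\text{inter}} \) and row-normalizes them. It assumes that the pairwise affinities are already available as a dense list-of-lists `aff` and that each sentence carries a document identifier. The names `build_affinity_matrices` and `row_normalize` are hypothetical, and keeping all-zero rows (isolated sentences) at zero is an assumption of this sketch.

```python
def build_affinity_matrices(aff, doc_ids):
    """Return (M, M_intra, M_inter) from pairwise affinities.

    aff[i][j]  -- cosine affinity between sentences i and j
    doc_ids[i] -- identifier of the document that contains sentence i
    Diagonal entries are set to 0, so no sentence links to itself.
    """
    n = len(aff)
    M = [[aff[i][j] if i != j else 0.0 for j in range(n)] for i in range(n)]
    M_intra = [[M[i][j] if doc_ids[i] == doc_ids[j] else 0.0 for j in range(n)]
               for i in range(n)]
    M_inter = [[M[i][j] if doc_ids[i] != doc_ids[j] else 0.0 for j in range(n)]
               for i in range(n)]
    return M, M_intra, M_inter


def row_normalize(M):
    """Scale each row to sum to 1; an all-zero row (isolated sentence) stays all zero."""
    normalized = []
    for row in M:
        total = sum(row)
        normalized.append([x / total if total != 0 else 0.0 for x in row])
    return normalized


# Sentences 0 and 1 come from document "d1", sentence 2 from document "d2".
aff = [[1.0, 0.5, 0.2],
       [0.5, 1.0, 0.0],
       [0.2, 0.0, 1.0]]
M, M_intra, M_inter = build_affinity_matrices(aff, ["d1", "d1", "d2"])
M_inter_tilde = row_normalize(M_inter)
print(M_inter_tilde)  # sentence 1 has no cross-document link, so its row stays zero
```

Because every entry of M goes to exactly one of the two split matrices, \( M = M_{\text{intra}} + M_{\text{inter}} \) holds by construction.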

3.2.2 Topic-focused multi-document summarization

As in generic multi-document summarization, the whole affinity graph G, the within-document affinity graph \( G_{\text{intra}} \) and the cross-document affinity graph \( G_{\text{inter}} \) are built to reflect different relationships between sentences. The corresponding normalized affinity matrices are denoted by \( \tilde{M} \), \( \tilde{M}_{\text{intra}} \) and \( \tilde{M}_{\text{inter}} \), respectively.

To compute the biased information richness of sentences, the relevance of each sentence to the topic must first be computed. Given a topic description q and a sentence collection \( S = \{ s_i \mid 1 \le i \le n \} \) for a document set, we compute the relevance of a sentence \( s_i \) to the topic q using the standard Cosine measure as follows:

$$ rel(s_i \mid q) = \cos(\vec{s}_i, \vec{q}) = \frac{\vec{s}_i \cdot \vec{q}}{\|\vec{s}_i\| \, \|\vec{q}\|} $$ (4)

where \( \vec{s}_i \) and \( \vec{q} \) are the corresponding term vectors of \( s_i \) and q. The weight associated with term t is calculated with the \( tf_t \cdot isf_t \) formula.

The relevance of sentence \( s_i \) to the topic q is then normalized as follows, so that the relevance values of all sentences sum to 1:

$$ rel'(s_i \mid q) = \frac{rel(s_i \mid q)}{\sum_{j=1}^{n} rel(s_j \mid q)} $$ (5)

The relevance value of each sentence can be considered as a weight attached to each node in the affinity graph, reflecting the degree of the node’s topic-biasing.
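The topic relevance and its normalization can be sketched as below. This assumes that term vectors are dicts of precomputed tf·isf weights, and `topic_relevance` is a hypothetical name. The uniform fallback for a topic that matches no sentence is an assumption of this sketch; the text does not cover that case.

```python
import math


def cosine(u, v):
    """Cosine similarity of two sparse term-weight vectors stored as dicts."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def topic_relevance(sentence_vecs, query_vec):
    """Return rel'(s_i | q): cosine relevance to the topic, normalized to sum to 1."""
    raw = [cosine(s, query_vec) for s in sentence_vecs]
    total = sum(raw)
    if total == 0.0:
        # Assumption (not from the paper): fall back to a uniform prior
        # when no sentence shares a term with the topic description.
        return [1.0 / len(raw)] * len(raw)
    return [r / total for r in raw]


# Term weights are assumed to be tf*isf values computed beforehand.
sentence_vecs = [{"oil": 1.0, "price": 1.0}, {"oil": 1.0}, {"weather": 1.0}]
query_vec = {"oil": 1.0, "price": 1.0}
alpha = topic_relevance(sentence_vecs, query_vec)
print([round(a, 4) for a in alpha])
```

The resulting list plays the role of the node weights: sentence 2 shares no term with the topic and receives a weight of 0.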

3.3 Information richness computation

3.3.1 Generic multi-document summarization

The computation of the information richness scores of the sentences is based on the following three intuitions: (1) The more neighbors a sentence has, the more informative it is; (2) The more informative a sentence’s neighbors are, the more informative it is; (3) The more heavily a sentence is linked to by other informative sentences, the more informative it is. In brief, a sentence heavily linked to by many sentences with high information richness will also have high information richness. Based on the above intuitions, we apply a graph-ranking based algorithm to compute the information richness score for each node in the obtained affinity graph, similar to PageRank.

Based on the whole affinity graph G, the information richness score \( InfoRich_{all}(s_i) \) of sentence \( s_i \) can be deduced from the scores of all other sentences linked with it. It can be formulated recursively as follows:

$$ InfoRich_{all}(s_i) = d \cdot \sum_{\text{all } j \ne i} InfoRich_{all}(s_j) \cdot \tilde{M}_{j,i} + \frac{1-d}{n} $$ (6)

And the matrix form is:

$$ \vec{\lambda} = d \, \tilde{M}^{T} \vec{\lambda} + \frac{1-d}{n} \vec{e} $$ (7)

where:

- n is the number of sentences in S;
- \( \vec{\lambda} = [InfoRich_{all}(s_i)]_{n \times 1} \) is the vector containing the information richness of all the sentences;
- \( \vec{e} \) is an n × 1 vector with all elements equal to 1;
- d is the damping factor, set to 0.85.

The above process can be considered a Markov chain in which the sentences are the states, with the transition matrix \( d \tilde{M}^{T} + (1-d) U \), where \( U = [1/n]_{n \times n} \). Based on the transition matrix, we can construct a random walk model. The stationary probability distribution over the states is given by the principal eigenvector of the transition matrix.

For implementation, the initial information richness scores of all sentences are set to 1, and the iteration in Eq. 6 is applied repeatedly to compute new information richness scores. The iteration is considered converged when, for every sentence, the difference between the scores computed in two successive iterations falls below a given threshold (0.0001 in this study).

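Similarly, the information richness score of sentence \( s_i \) can be deduced based on either the within-document affinity graph \( G_{\text{intra}} \) or the cross-document affinity graph \( G_{\text{inter}} \) as follows:

$$ InfoRich_{intra}(s_i) = d \cdot \sum_{\text{all } j \ne i} InfoRich_{intra}(s_j) \cdot (\tilde{M}_{\text{intra}})_{j,i} + \frac{1-d}{n} $$ (8)

$$ InfoRich_{inter}(s_i) = d \cdot \sum_{\text{all } j \ne i} InfoRich_{inter}(s_j) \cdot (\tilde{M}_{\text{inter}})_{j,i} + \frac{1-d}{n} $$ (9)

The model in Eq. 8 is called the within-document random walk, and the model in Eq. 9 is called the cross-document random walk.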

The final information richness \( InfoRich(s_i) \) of a sentence \( s_i \) can be \( InfoRich_{all}(s_i) \), \( InfoRich_{intra}(s_i) \) or \( InfoRich_{inter}(s_i) \). Note that all previous graph-ranking based summarization methods use \( InfoRich(s_i) = InfoRich_{all}(s_i) \).
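The iterative computation of information richness can be sketched as follows. The sketch uses the damping factor d = 0.85, initial scores of 1, and the 0.0001 convergence threshold given above. The function name `info_richness` and the `max_iter` safeguard are assumptions of this sketch.

```python
def info_richness(M_tilde, d=0.85, tol=1e-4, max_iter=1000):
    """Iterate InfoRich(s_i) = d * sum_{j != i} InfoRich(s_j) * M_tilde[j][i] + (1 - d) / n.

    M_tilde is a row-normalized affinity matrix (list of lists). Scores start at 1,
    and iteration stops once no score changes by tol or more between two rounds.
    """
    n = len(M_tilde)
    scores = [1.0] * n
    for _ in range(max_iter):
        new_scores = [
            d * sum(scores[j] * M_tilde[j][i] for j in range(n) if j != i) + (1 - d) / n
            for i in range(n)
        ]
        converged = max(abs(a - b) for a, b in zip(new_scores, scores)) < tol
        scores = new_scores
        if converged:
            break
    return scores


# Star graph: sentence 0 is linked to sentences 1 and 2, which are not linked to each other.
star = [[0.0, 0.5, 0.5],
        [1.0, 0.0, 0.0],
        [1.0, 0.0, 0.0]]
print([round(x, 3) for x in info_richness(star)])
```

Passing \( \tilde{M}_{\text{intra}} \) or \( \tilde{M}_{\text{inter}} \) in place of \( \tilde{M} \) yields the within-document and cross-document random walks of Eqs. 8 and 9. If a sentence has no links in the chosen graph (an all-zero row), its probability mass is not redistributed, so the scores need not sum exactly to 1.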

3.3.2 Topic-focused multi-document summarization

In order to compute the biased information richness scores of the sentences, the topic-sensitive PageRank is employed to incorporate the relevance of the sentences to the topic.

Based on the whole affinity graph G, the biased information richness score \( InfoRich_{all}(s_i) \) of sentence \( s_i \) can be deduced from two sources: the scores of all other sentences linked with it, and the relevance of the sentence to the topic. It can be formulated recursively as follows:

$$ InfoRich_{all}(s_i) = d \cdot \sum_{\text{all } j \ne i} InfoRich_{all}(s_j) \cdot \tilde{M}_{j,i} + (1-d) \cdot rel'(s_i \mid q) $$ (10)

And the matrix form is:

$$ \vec{\lambda} = d \, \tilde{M}^{T} \vec{\lambda} + (1-d) \vec{\alpha} $$ (11)

where:

- \( \vec{\lambda} = [InfoRich_{all}(s_i)]_{n \times 1} \) is the vector containing the biased information richness scores of all the sentences;
- \( \vec{\alpha} = [rel'(s_i \mid q)]_{n \times 1} \) is the vector containing the relevance scores of all the sentences to the topic;
- d is the damping factor, simply set to 0.85 as in PageRank.Footnote 5

Likewise, the above process can be considered a Markov chain in which the sentences are the states, with the transition matrix \( d \tilde{M}^{T} + (1-d) \vec{\alpha} \vec{e}^{T} \), where \( \vec{e} \) is an n × 1 vector with all elements equal to 1. Based on the transition matrix, we can construct a random walk model. The stationary probability distribution over the states is given by the principal eigenvector of the transition matrix.

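The biased information richness score of sentence \( s_i \) can also be deduced based on either the within-document affinity graph \( G_{\text{intra}} \) or the cross-document affinity graph \( G_{\text{inter}} \) as follows:

$$ InfoRich_{intra}(s_i) = d \cdot \sum_{\text{all } j \ne i} InfoRich_{intra}(s_j) \cdot (\tilde{M}_{\text{intra}})_{j,i} + (1-d) \cdot rel'(s_i \mid q) $$ (12)

$$ InfoRich_{inter}(s_i) = d \cdot \sum_{\text{all } j \ne i} InfoRich_{inter}(s_j) \cdot (\tilde{M}_{\text{inter}})_{j,i} + (1-d) \cdot rel'(s_i \mid q) $$ (13)

Similarly, the final biased information richness score \( InfoRich(s_i) \) of a sentence \( s_i \) can be \( InfoRich_{all}(s_i) \), \( InfoRich_{intra}(s_i) \) or \( InfoRich_{inter}(s_i) \).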
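The topic-sensitive variant differs from the generic iteration only in its teleport term, which uses \( rel'(s_i \mid q) \) instead of 1/n. The following minimal sketch makes the same assumptions as the generic case: a hypothetical function name, d = 0.85, initial scores of 1, and a 0.0001 threshold.

```python
def biased_info_richness(M_tilde, alpha, d=0.85, tol=1e-4, max_iter=1000):
    """Topic-sensitive iteration:
    InfoRich(s_i) = d * sum_{j != i} InfoRich(s_j) * M_tilde[j][i] + (1 - d) * alpha[i],
    where alpha[i] = rel'(s_i | q) and sum(alpha) == 1.
    """
    n = len(M_tilde)
    scores = [1.0] * n
    for _ in range(max_iter):
        new_scores = [
            d * sum(scores[j] * M_tilde[j][i] for j in range(n) if j != i)
            + (1 - d) * alpha[i]
            for i in range(n)
        ]
        converged = max(abs(a - b) for a, b in zip(new_scores, scores)) < tol
        scores = new_scores
        if converged:
            break
    return scores


# Fully connected triangle with equal affinities; only the topic prior differs.
triangle = [[0.0, 0.5, 0.5],
            [0.5, 0.0, 0.5],
            [0.5, 0.5, 0.0]]
alpha = [0.6, 0.3, 0.1]
scores = biased_info_richness(triangle, alpha)
print([round(x, 3) for x in scores])
```

With a uniform α (every entry 1/n), this reduces to the generic iteration. In the example, the three sentences occupy identical graph positions, so their ordering is determined entirely by the topic prior.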

3.4 Diversity penalty imposition

This step aims to remove redundant information in the summary by penalizing the sentences highly overlapping with other informative sentences. Based on the whole affinity graph G and the obtained final information richness scores, a greedy algorithm (Zhang et al. 2005) is applied to impose diversity penalty and compute the final affinity rank scores of the sentences as follows:

1. Initialize two sets \( A = \emptyset \) and \( B = \{ s_i \mid i = 1, 2, \ldots, n \} \), and initialize each sentence’s affinity rank score to its information richness score, i.e. \( ARScore(s_i) = InfoRich(s_i) \), i = 1, 2, ..., n.

2. Sort the sentences in B by their current affinity rank scores in descending order.

3. Let \( s_i \) be the highest ranked sentence, i.e. the first sentence in the ranked list. Move \( s_i \) from B to A, and then impose the diversity penalty on the affinity rank score of each sentence linked with \( s_i \). For each sentence \( s_j \) in B:

$$ ARScore(s_j) = ARScore(s_j) - \omega \cdot \tilde{M}_{j,i} \cdot InfoRich(s_i) $$ (14)

where ω > 0 is the penalty degree factor. The larger ω is, the greater the penalty imposed on the affinity rank score. If ω = 0, no diversity penalty is imposed at all.

4. Go to step 2 and iterate until \( B = \emptyset \) or the iteration count reaches a predefined maximum number.

In the above algorithm, the third step is the crucial one: it decreases the affinity rank scores of less informative sentences by the part conveyed from the most informative one. Finally, the sentences with the highest affinity rank scores are chosen to produce the summary, subject to the summary length limit.
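The greedy diversity-penalty procedure (steps 1 to 4 above) can be sketched as follows. This minimal sketch assumes a row-normalized whole-graph matrix `M_tilde` and precomputed `info_rich` scores. The name `diversity_penalty_rank` is hypothetical, and ω defaults to 8, the value reported as tuned on DUC 2001 in Sect. 4. Instead of re-sorting B in every round, the sketch takes the maximum, which selects the same sentence. Breaking ties by lower index is an assumption.

```python
def diversity_penalty_rank(M_tilde, info_rich, omega=8.0, max_iter=None):
    """Greedy diversity penalty over the whole affinity graph.

    M_tilde[j][i] -- row-normalized affinity from sentence j to sentence i
    info_rich[i]  -- final (biased) information richness of sentence i
    Returns (order, ar_score): sentences in the order they were moved from B to A,
    and the affinity rank score of every sentence when the loop stopped.
    """
    n = len(info_rich)
    ar_score = list(info_rich)        # Step 1: ARScore(s_i) = InfoRich(s_i)
    remaining = set(range(n))         # set B
    order = []                        # set A, in selection order
    limit = n if max_iter is None else min(n, max_iter)
    while remaining and len(order) < limit:
        # Steps 2-3: pick the highest-scoring sentence in B (lower index wins ties).
        i = max(remaining, key=lambda k: (ar_score[k], -k))
        remaining.remove(i)
        order.append(i)
        # Step 3: penalize every sentence still in B in proportion to its link to s_i.
        for j in remaining:
            ar_score[j] -= omega * M_tilde[j][i] * info_rich[i]
    return order, ar_score


# Sentences 0 and 1 are near-duplicates; sentence 2 is less informative but distinct.
M_tilde = [[0.0, 0.9, 0.1],
           [0.9, 0.0, 0.1],
           [0.5, 0.5, 0.0]]
info_rich = [0.40, 0.38, 0.22]
print(diversity_penalty_rank(M_tilde, info_rich)[0])             # -> [0, 2, 1]
print(diversity_penalty_rank(M_tilde, info_rich, omega=0.0)[0])  # -> [0, 1, 2]
```

Scores in B only ever decrease, so the selection order coincides with descending final affinity rank score. Taking sentences from the front of `order` until the length limit is reached therefore matches the final selection rule described above.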

4 Experiments and results

4.1 Experimental setup

4.1.1 Data set

Generic multi-document summarization was one of the fundamental tasks in DUC 2001, DUC 2002 and DUC 2004 (task 2 in each of the three years). We used the DUC 2001 data as the training set and the DUC 2002 and DUC 2004 data as test sets. In DUC 2002, 59 document setsFootnote 6 of approximately 10 documents each were provided, and a generic summary of approximately 100 words or less was to be created for each document set. In DUC 2004, 50 TDT (Topic Detection and Tracking (Allan et al. 1998)) document clusters were provided, and a short summary of 665 bytes or less was to be created for each cluster. Note that the TDT topic was not given as input to the summarizer. Table 1 summarizes the datasets used in the experiments.

Topic-focused multi-document summarization was evaluated in tasks 2 and 3 of DUC 2003 and in the only task of DUC 2005. Each task has a gold-standard data set consisting of document clusters and reference summaries. In our experiments, task 2 of DUC 2003 was used for training and parameter tuning, and the other two tasks were used for testing. The three topic-focused summarization tasks use different topic representations:

- task 2 of DUC 2003 requires summaries focused on events;
- task 3 of DUC 2003 requires summaries focused on viewpoints;
- the DUC 2005 task requires summaries focused on DUC topics.

In the experiments, we do not differentiate among the three kinds of topic representations and use them all in the same way. Table 2 gives a short summary of the datasets.

As a preprocessing step, the sentences were extracted using the sentence-breaker tool provided by DUC and the dialog sentences (sentences in quotation marks) were removed. In the process of affinity graph building, the stop words were removed and Porter’s stemmer (Porter 1980) was used for word stemming.

4.1.2 Evaluation metric

For evaluation, we use the ROUGE (Lin and Hovy 2003) evaluation toolkit,Footnote 7 which has been adopted by DUC for automatic summarization evaluation. It measures summary quality by counting overlapping units, such as n-grams, word sequences and word pairs, between the candidate summary and the reference summaries. ROUGE-N is an n-gram recall measure computed as follows:

$$ ROUGE\text{-}N = \frac{\sum_{S \in \{RefSum\}} \sum_{n\text{-gram} \in S} Count_{match}(n\text{-gram})}{\sum_{S \in \{RefSum\}} \sum_{n\text{-gram} \in S} Count(n\text{-gram})} $$ (15)

where:

- {RefSum} is the set of reference summaries;
- n stands for the length of the n-gram;
- \( Count_{match}(n\text{-gram}) \) is the maximum number of n-grams co-occurring in a candidate summary and a set of reference summaries;
- \( Count(n\text{-gram}) \) is the number of n-grams in the reference summaries.
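ROUGE-N recall as defined above can be sketched as follows. This simplified illustration is not a replacement for the official toolkit. It assumes pre-tokenized input and omits stemming, stop-word handling, length truncation and the toolkit's other scoring options. `rouge_n` is a hypothetical name.

```python
from collections import Counter


def ngram_counts(tokens, n):
    """Multiset of the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate, references, n=1):
    """ROUGE-N recall: clipped n-gram matches divided by reference n-gram count."""
    cand = ngram_counts(candidate, n)
    matched = total = 0
    for ref in references:
        ref_counts = ngram_counts(ref, n)
        # Count_match: an n-gram matches at most as often as it occurs in both texts.
        matched += sum(min(count, cand[gram]) for gram, count in ref_counts.items())
        total += sum(ref_counts.values())
    return matched / total if total else 0.0


candidate = "the cat sat on the mat".split()
references = ["the cat is on the mat".split()]
print(rouge_n(candidate, references, n=1))  # 5 of 6 reference unigrams matched
print(rouge_n(candidate, references, n=2))  # 3 of 5 reference bigrams matched
```

Clipping with `min` prevents a candidate from earning credit for repeating an n-gram more often than the reference contains it.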

The ROUGE toolkit reports separate scores for 1-, 2-, 3- and 4-grams, and also for longest common subsequence co-occurrences. Among these scores, the unigram-based ROUGE score (ROUGE-1) has been shown to agree most closely with human judgments (Lin and Hovy 2003). We report three ROUGE metrics in the experimental results: ROUGE-1 (unigram-based), ROUGE-2 (bigram-based), and ROUGE-W (based on the weighted longest common subsequence, weight = 1.2).

To truncate summaries longer than the length limit, we used the “-l” or “-b” optionFootnote 8 of the ROUGE toolkit. We also used the “-m” option for word stemming.

4.2 Experimental results

4.2.1 Generic multi-document summarization

The proposed approaches are compared with the top three performing systems and two baseline systems (the lead baseline and the coverage baseline) on task 2 of DUC 2002 and task 2 of DUC 2004. The top three systems are the participating systems with the highest ROUGE scores in the respective tasks of DUC 2002 and DUC 2004. The lead baseline and the coverage baseline are two baselines employed in the DUC multi-document summarization tasks. The lead baseline takes the first sentences, one by one, from the last document in the collection (documents are assumed to be ordered chronologically) until the summary reaches the length limit. The coverage baseline takes the first sentence from the first document, the first sentence from the second document, the first sentence from the third document, and so on, until the summary reaches the length limit.
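The two baselines can be sketched as follows, with each document given as a list of sentences and the documents in chronological order. Several details are assumptions, since the text does not fix them: the limit is measured in words, the last selected sentence may overshoot it (the cut is left to ROUGE's truncation option), and the coverage baseline moves on to second sentences once every first sentence has been used.

```python
def count_words(sentence: str) -> int:
    return len(sentence.split())


def lead_baseline(docs, limit):
    """Take sentences in order from the last (most recent) document until the limit is reached."""
    summary, length = [], 0
    for sentence in docs[-1]:
        if length >= limit:
            break
        summary.append(sentence)
        length += count_words(sentence)
    return summary


def coverage_baseline(docs, limit):
    """Take the first sentence of each document in turn, then (assumed) the second, and so on."""
    summary, length = [], 0
    max_len = max((len(d) for d in docs), default=0)
    for position in range(max_len):
        for doc in docs:
            if length >= limit:
                return summary
            if position < len(doc):
                summary.append(doc[position])
                length += count_words(doc[position])
    return summary
```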

The following three approaches based on different sentence relationships are investigated in the experiments:

- Uniform Link: The approach computes the information richness score of a sentence based on the whole affinity graph, without differentiating the cross-document and within-document relationships, as in previous graph-ranking based summarization methods, i.e. \( \mathit{InfoRich}(s_i) = \mathit{InfoRich}_{all}(s_i) \);
- Intra-Link: The approach computes the information richness score of a sentence based only on the within-document relationships between sentences, i.e. \( \mathit{InfoRich}(s_i) = \mathit{InfoRich}_{intra}(s_i) \);
- Inter-Link: The approach computes the information richness score of a sentence based only on the cross-document relationships between sentences, i.e. \( \mathit{InfoRich}(s_i) = \mathit{InfoRich}_{inter}(s_i) \).
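To make the three variants concrete, the sketch below runs one PageRank-style random walk restricted to all edges, to within-document edges only, or to cross-document edges only. Because the paper's score equations are not reproduced in this section, the details are assumptions: cosine similarity over raw term counts as edge weights, row normalization, a uniform jump term, and a damping factor d = 0.85 (a common PageRank default; the notes only say d was not tuned). The function name `info_rich` and its `mode` parameter are hypothetical.

```python
import math
from collections import Counter


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def info_rich(term_lists, doc_ids, mode="all", d=0.85, iters=100):
    """PageRank-style sentence scores on an affinity graph.

    mode: "all" (Uniform Link), "intra" (same-document edges only),
    or "inter" (cross-document edges only).
    """
    n = len(term_lists)
    if n == 0:
        return []
    vecs = [Counter(t) for t in term_lists]
    keep = {
        "all": lambda i, j: True,
        "intra": lambda i, j: doc_ids[i] == doc_ids[j],
        "inter": lambda i, j: doc_ids[i] != doc_ids[j],
    }[mode]
    # Affinity matrix without self-loops, restricted to the chosen relationship type.
    aff = [[cosine(vecs[i], vecs[j]) if i != j and keep(i, j) else 0.0
            for j in range(n)] for i in range(n)]
    # Row-normalize; a sentence with no edges of this type keeps an all-zero row.
    m = []
    for row in aff:
        s = sum(row)
        m.append([v / s for v in row] if s > 0 else row)
    scores = [1.0 / n] * n
    for _ in range(iters):
        scores = [d * sum(scores[j] * m[j][i] for j in range(n)) + (1 - d) / n
                  for i in range(n)]
    return scores
```

A sentence with no edges of the chosen type (for example, the only sentence of its document under `"intra"`) receives just the jump mass (1 − d)/n.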

Tables 3 and 4 show the comparison results on task 2 of DUC 2002 and task 2 of DUC 2004, respectively, where S19-S104 are the system IDs of the top performing systems, whose details are described in DUC publications. The penalty degree factor ω for the above three approaches was tuned on task 2 of DUC 2001 and set to 8.

We can see from the above tables that the two graph-ranking based approaches using the cross-document relationships between sentences (“Inter-Link” and “Uniform Link”) substantially outperform the top performing systems, the baseline systems and “Intra-Link” on both tasks over all three metrics. Among the three graph-ranking based approaches, the approach based only on the cross-document relationships (“Inter-Link”) performs best on both tasks, while the approach based only on the within-document relationships (“Intra-Link”) performs worst. These observations demonstrate the great importance of the cross-document relationships between sentences for generic multi-document summarization.

Figures 2–7 show the comparison results of the graph-ranking based approaches under different values of the penalty degree factor ω. Figures 2–4 show the comparison results over ROUGE-1, ROUGE-2 and ROUGE-W on DUC 2002 respectively, and Figs. 5–7 show the comparison results over the three metrics on DUC 2004 respectively.

As the figures show, when the penalty degree factor ω increases, the ROUGE scores of almost all the approaches first increase and then decrease. The reason is that the larger ω is, the greater the penalty imposed on the affinity rank score; when ω is large enough, the diversity penalty can be over-imposed, which degrades the final performance. The approaches considering the cross-document relationships between sentences (“Inter-Link” and “Uniform Link”) always outperform the approach based only on the within-document relationships (“Intra-Link”) for all tested values of ω. Of the two approaches considering the cross-document relationships, the one based only on them (“Inter-Link”) performs better than or at least as well as the one based on both the cross-document and within-document relationships (“Uniform Link”) for all tested values of ω. This result further validates the great importance of the cross-document relationships between sentences for generic multi-document summarization.
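The effect of ω can be illustrated with a greedy selection loop. The update rule used here subtracts, from each remaining sentence's affinity rank score, ω times its normalized affinity to the sentence just chosen times that sentence's information richness. This is an assumed form in the spirit of the affinity-ranking penalty of Zhang et al. (2005), not a transcription of the paper's equations, and `select_with_penalty` is a hypothetical name.

```python
def select_with_penalty(info_rich, m, omega, k):
    """Greedy sentence selection with a diversity penalty (assumed form).

    info_rich: sentence scores; m: row-normalized affinity matrix, m[j][i];
    omega: penalty degree factor; k: number of sentences to select.
    """
    ar = list(info_rich)  # affinity rank scores, initialised to information richness
    remaining = set(range(len(ar)))
    chosen = []
    while remaining and len(chosen) < k:
        # sorted() makes ties resolve to the lowest index deterministically.
        i = max(sorted(remaining), key=lambda idx: ar[idx])
        remaining.remove(i)
        chosen.append(i)
        # Penalize remaining sentences in proportion to their affinity with the chosen one.
        for j in remaining:
            ar[j] -= omega * m[j][i] * info_rich[i]
    return chosen
```

With ω = 0 the loop simply picks the highest-scoring sentences; as ω grows, near-duplicates of already chosen sentences are pushed down, and a very large ω can push down useful sentences as well, which is one way to read the rise-then-fall pattern in the figures.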

To investigate how the relative contributions of the cross-document and within-document relationships between sentences influence summarization performance, we propose the following “Union-Link” method, which computes the final information richness score of a sentence \( s_i \) by linearly combining the score \( \mathit{InfoRich}_{intra}(s_i) \) based on the within-document relationships and the score \( \mathit{InfoRich}_{inter}(s_i) \) based on the cross-document relationships:

$$\mathit{InfoRich}(s_i) = \lambda \cdot \mathit{InfoRich}_{intra}(s_i) + (1 - \lambda) \cdot \mathit{InfoRich}_{inter}(s_i) \tag{16}$$

where \( \lambda \in [0,1] \) is a weighting parameter specifying the relative contributions of the cross-document and within-document relationships to the final information richness of sentences. If λ = 0, \( \mathit{InfoRich}(s_i) \) equals \( \mathit{InfoRich}_{inter}(s_i) \); if λ = 1, it equals \( \mathit{InfoRich}_{intra}(s_i) \); and if λ = 0.5, the two kinds of relationships are treated as equally important. Note that “Union-Link” is almost the same as “Uniform Link” when λ is set to 0.5.
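A direct transcription of the Union-Link combination as a small helper, usable inside a sweep over λ as in the experiments. The function name is hypothetical, and because the text does not say whether the two score vectors are normalized before mixing, this sketch mixes them as given.

```python
def union_link(intra_scores, inter_scores, lam):
    """Union-Link: lam * InfoRich_intra + (1 - lam) * InfoRich_inter, with lam in [0, 1]."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    return [lam * a + (1.0 - lam) * b for a, b in zip(intra_scores, inter_scores)]


def sweep(intra_scores, inter_scores, steps=10):
    """Combined scores for lam = 0, 1/steps, ..., 1 (e.g. 0.0, 0.1, ..., 1.0)."""
    return {i / steps: union_link(intra_scores, inter_scores, i / steps)
            for i in range(steps + 1)}
```

Setting λ = 0 reproduces the “Inter-Link” scores and λ = 1 the “Intra-Link” scores, so the sweep interpolates between the two single-relationship approaches.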

Figures 8–10 show the performance of the “Union-Link” approach under different values of the weighting parameter λ, both without the diversity penalty (ω = 0) and with it (ω = 8), on task 2 of DUC 2002 and task 2 of DUC 2004.

As Figs. 8–10 show, the performance of the approaches decreases as λ increases, over all three metrics on both the DUC 2002 and DUC 2004 tasks: the smaller the relative contribution given to the cross-document relationships between sentences, the worse the summarization performance. The cross-document relationships between sentences are thus much more important than the within-document relationships for generic multi-document summarization.

4.2.2 Topic-focused multi-document summarization

As for generic multi-document summarization, the proposed approaches were compared with the top three performing systems and two baseline systems (the lead baseline and the coverage baseline) on task 3 of DUC 2003 and the task of DUC 2005. The top three systems are the participating systems with the highest ROUGE scores in the respective tasks of DUC 2003 and DUC 2005.

The three approaches “Uniform Link”, “Intra-Link” and “Inter-Link” were investigated in the experiments. They are defined in the same way as for generic multi-document summarization, and they compute the biased information richness with Eqs. 10, 12 and 13, respectively.
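One way to bias the walk toward the topic is to replace the uniform jump of a PageRank-style iteration with a jump distribution proportional to each sentence's relevance to the topic, in the manner of topic-sensitive PageRank (Haveliwala 2002). Because Eqs. 10, 12 and 13 are not reproduced in this section, this form is an assumption, as are d = 0.85 and the function name `biased_info_rich`. The relevance scores (for instance, cosine similarity between each sentence and the topic description) are supplied by the caller, and the matrix is assumed to be already restricted to the relationship type of the chosen variant.

```python
def biased_info_rich(m, relevance=None, d=0.85, iters=100):
    """Topic-biased PageRank-style scores (assumed form).

    m: row-normalized affinity matrix m[j][i] for the chosen relationship type;
    relevance: non-negative sentence-to-topic relevance scores. None (or all
    zeros) falls back to a uniform jump, i.e. an unbiased generic score.
    """
    n = len(m)
    if n == 0:
        return []
    total = sum(relevance) if relevance is not None else 0.0
    jump = [r / total for r in relevance] if total > 0 else [1.0 / n] * n
    scores = [1.0 / n] * n
    for _ in range(iters):
        scores = [d * sum(scores[j] * m[j][i] for j in range(n)) + (1 - d) * jump[i]
                  for i in range(n)]
    return scores
```

Passing `relevance=None` ignores the topic entirely, which gives a convenient “w/o topic” reference point for judging how much the relevance term contributes.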

Tables 5 and 6 show the ROUGE comparison results on task 3 of DUC 2003 and the only task of DUC 2005 respectively. In the tables, S4–S17 are the system IDs of the top performing systems. The penalty degree factor ω for the above approaches is tuned on task 2 of DUC 2003 and set to 10.

We can see from the tables that the two graph-ranking based approaches using the cross-document relationships between sentences (“Inter-Link” and “Uniform Link”) substantially outperform the top performing systems, the baseline systems and “Intra-Link” on both tasks over the three metrics. Among the three graph-ranking based approaches, the approach based only on the cross-document relationships (“Inter-Link”) performs best on both tasks, while the approach based only on the within-document relationships (“Intra-Link”) performs worst. These observations demonstrate the great importance of the cross-document relationships between sentences for topic-focused multi-document summarization.

Figures 11–16 show the comparison results of the three graph-ranking based approaches under different values of the penalty degree factor ω. Figures 11–13 show the comparison results over the three metrics on task 3 of DUC 2003 respectively and Figs. 14–16 show the comparison results over the three metrics on DUC 2005 respectively.

As the figures show, the approaches based on the cross-document relationships between sentences (“Inter-Link” and “Uniform Link”) almost always outperform the approach based only on the within-document relationships (“Intra-Link”) as ω varies from 0 to 18. Of the two approaches using the cross-document relationships, “Inter-Link” almost always performs better than or at least as well as “Uniform Link” as ω varies. This further validates the great importance of the cross-document relationships between sentences for topic-focused multi-document summarization. We can also see that the ROUGE scores of almost all the approaches first increase and then decrease as ω increases, because the diversity penalty can be over-imposed and degrade the final performance when ω is large enough.

To investigate how the relative contributions of the cross-document and within-document relationships between sentences influence topic-focused summarization performance, we apply the “Union-Link” approach to compute the final biased information richness of a sentence \( s_i \), linearly combining the biased information richness \( \mathit{InfoRich}_{intra}(s_i) \) based on the within-document random walk and the biased information richness \( \mathit{InfoRich}_{inter}(s_i) \) based on the cross-document random walk, as in Eq. 16.

Figures 17–19 show the performance of the “Union-Link” approach as λ varies from 0 to 1, both without the diversity penalty (ω = 0) and with it (ω = 10), on task 3 of DUC 2003 and the task of DUC 2005.

As Figs. 17–19 show, the ROUGE values of the approaches decrease as λ increases for almost all of the three metrics on both tasks: the smaller the relative contribution given to the cross-document relationships between sentences, the worse the system performance. The cross-document relationships between sentences are thus much more important than the within-document relationships for topic-focused multi-document summarization.

To demonstrate the value of integrating the relevance between the sentences and the given topic for topic-focused summarization, we compare the proposed “Uniform Link” method with a baseline that treats topic-focused summarization as generic summarization by ignoring sentence-to-topic relevance: the baseline uses only the sentence-to-sentence relationships and computes the information richness scores of the sentences with Eq. 6, instead of computing the biased information richness scores with Eq. 10.

Figures 20–22 show the comparison results over the three ROUGE metrics on the two DUC tasks. The ROUGE values for different values of ω are presented in the figures, and on both tasks the proposed method (“w/ topic”) almost always outperforms the baseline (“w/o topic”) across different values of ω and all three ROUGE metrics. These results confirm that sentence-to-topic relevance is important for topic-focused summarization, because it informs the computation of the biased information richness of the sentences.

4.3 Discussion

The experimental results demonstrate the great importance of the cross-document relationships between sentences for both generic and topic-focused multi-document summarization, which can be explained by the nature of multi-document summarization. The aim of multi-document summarization is to extract important information from the whole document set (Note 9); in other words, the information in the summary should be globally important across the whole document set. The information contained in a globally informative sentence will therefore also tend to be expressed in sentences of other documents, so the votes or recommendations of neighbors in other documents are more important than those of neighbors in the same document. The same idea has been applied in many other settings. For example, in web search, a web page’s inlinks from other websites are usually more important than inlinks from within the same website for evaluating the page’s importance; in digital libraries, a citation of a paper by a paper from another organization is more important than a citation by a paper from the same organization for evaluating the paper’s quality.

Next, we explore whether the cross-document random walk model is more likely to select sentences from different documents, and whether summarization performance improves when sentences are chosen from more documents. For each summarization process, we record the number of different documents containing the top 5 and top 10 sentences with the largest affinity rank scores, and then average these numbers over each DUC task. Table 7 gives the averaged numbers. The table shows that the cross-document random walk model (“Inter-Link”) does not always select sentences from more documents than the within-document random walk model (“Intra-Link”). For the generic summarization tasks, the within-document model (“Intra-Link”) selects sentences from more documents than the two models considering the cross-document relationships (“Inter-Link” and “Uniform Link”), yet it has the worst summarization performance. These results suggest that the number of different documents containing summary sentences is not correlated with summarization performance.
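The statistic behind Table 7 can be computed as below: rank a cluster's sentences by affinity rank score, take the top k, count the distinct source documents, and average over the clusters of a task. The function names and the `(scores, doc_ids)` input layout are assumptions for illustration.

```python
def distinct_docs_in_top_k(scores, doc_ids, k):
    """Number of different documents among the k sentences with the largest scores."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    return len({doc_ids[i] for i in top})


def average_distinct_docs(clusters, k):
    """Average of distinct_docs_in_top_k over (scores, doc_ids) pairs, one pair per cluster."""
    values = [distinct_docs_in_top_k(s, d, k) for s, d in clusters]
    return sum(values) / len(values) if values else 0.0
```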

Finally, it is noteworthy that using only the cross-document relationships for multi-document summarization removes all within-document edges from the affinity graph, which reduces the number of edges and improves the efficiency of the iterative algorithm.

5 Conclusion and future work

In this paper we differentiate two kinds of relationships between sentences for generic multi-document summarization: the cross-document relationships and the within-document relationships. Experimental results on DUC 2002 and DUC 2004 demonstrate the great importance of the cross-document relationships between sentences; the system achieves the best performance even when based only on the cross-document relationships. Furthermore, we integrate the relevance of the sentences to the specified topic into the graph-ranking based method for topic-focused multi-document summarization. The within-document and cross-document relationships are differentiated, and two separate random walk models are constructed. Experimental results on DUC 2003 and DUC 2005 demonstrate the great importance of the cross-document relationships between sentences for topic-focused multi-document summarization; again, the proposed approach achieves the best performance even when based only on the cross-document relationships.

In future work, we will apply the graph-ranking based method to web page summarization and investigate how to incorporate the link structure between web pages into the summarization process. Rich link information is unique to web page summarization, and links usually serve different purposes. We will classify the links between web pages according to their purposes and then differentiate the link information in the summarization process to improve summarization performance.

Notes

The damping factor d is set without tuning. Different values of d might influence summarization performance; this, however, is not the focus of this paper and will be investigated in future work.

Originally there were 60 document clusters, but document cluster D088 was withdrawn by NIST due to differences in the documents used by systems and by NIST summarizers.

We used ROUGEeval-1.4.2 downloaded from http://www.haydn.isi.edu/ROUGE/

This option is necessary for a fair comparison, because longer summaries usually increase ROUGE scores.

As Tables 3–6 show, the coverage baseline always achieves much better performance than the lead baseline. An intuitive explanation is that the lead baseline produces the summary locally from only one document, while the coverage baseline produces it globally from a number of documents and thus better meets the needs of multi-document summarization. This result supports the aim of multi-document summarization stated here.

References

Allan, J., Carbonell, J., Doddington, G., Yamron, J. P., & Yang, Y. (1998). Topic detection and tracking pilot study: final report. In Proceedings of DARPA Broadcast News Transcription and Understanding Workshop (pp. 194–218).

Baeza-Yates, R., & Ribeiro-Neto, B. (1999). Modern information retrieval. ACM Press and Addison Wesley.

Barzilay, R., McKeown, K. R., & Elhadad, M. (1999). Information fusion in the context of multi-document summarization. In Proceedings of the 37th Association for Computational Linguistics on Computational Linguistics, Maryland (pp. 550–557).

Bollegala, D., Okazaki, N., & Ishizuka, M. (2006). A bottom-up approach to sentence ordering for multi-document summarization. In Proceedings of the 21st International Conference on Computational Linguistics and 44th Annual Meeting of the Association for Computational Linguistics (pp. 385–392).

Carbonell, J., & Goldstein, J. (1998). The use of MMR, diversity-based reranking for reordering documents and producing summaries. In Proceedings of the 21st Annual International ACM SIGIR Conference on Research and Development in Information Retrieval (pp. 335–336).

Conroy, J. M., & Schlesinger, J. D. (2005). CLASSY query-based multi-document summarization. In Proceedings of 2005 Document Understanding Conference.

Daumé, H., & Marcu, D. (2006). Bayesian query-focused summarization. In Proceedings of the 21st International Conference on Computational Linguistics and 44th Annual Meeting of the Association for Computational Linguistics (pp. 305–312).

Erkan, G., & Radev, D. (2004a). LexPageRank: Prestige in multi-document text summarization. In Proceedings of the 2004 Conference on Empirical Methods in Natural Language Processing (pp. 365–371).

Erkan, G., & Radev, D. (2004b). LexRank: Graph-based lexical centrality as salience in text summarization. Journal of Artificial Intelligence Research, 22, 457–479.

Farzindar, A., Rozon, F., & Lapalme, G. (2005). CATS a topic-oriented multi-document summarization system at DUC 2005. In Proceedings of the 2005 Document Understanding Conference.

Ge, J., Huang, X., & Wu, L. (2003). Approaches to event-focused summarization based on named entities and query words. In Proceedings of the 2003 Document Understanding Conference.

Harabagiu, S., & Lacatusu, F. (2005). Topic themes for multi-document summarization. In Proceedings of the 28th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Salvador, Brazil (pp. 202–209).

Hardy, H., Shimizu, N., Strzalkowski, T., Ting, L., Wise, G. B., & Zhang, X. (2002). Cross-document summarization by concept classification. In Proceedings of the 25th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Tampere, Finland (pp. 121–128).

Haveliwala, T. H. (2002). Topic-sensitive PageRank. In Proceedings of the Eleventh International World Wide Web Conference (pp. 517–526).

Hovy, E., Lin, C.-Y., & Zhou, L. (2005). A BE-based multi-document summarizer with query interpretation. In Proceedings of the 2005 Document Understanding Conference.

Ji, P. D., & Pulman, S. (2006). Sentence ordering with manifold-based classification in multi-document summarization. In Proceedings of the 2006 Conference on Empirical Methods in Natural Language Processing (pp. 526–533).

Kleinberg, J. M. (1999). Authoritative sources in a hyperlinked environment. Journal of the ACM, 46(5), 604–632.

Knight, K., & Marcu, D. (2002). Summarization beyond sentence extraction: A probabilistic approach to sentence compression. Artificial Intelligence, 139(1), 91–107.

Li, W., Wu, M., Lu, Q., Xu, W., & Yuan, C. (2006). Extractive summarization using inter- and intra- event relevance. In Proceedings of the 21st International Conference on Computational Linguistics and 44th Annual Meeting of the Association for Computational Linguistics (pp. 369–376).

Lin, C.-Y., & Hovy, E. H. (2002). From single to multi-document summarization: A prototype system and its evaluation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics (pp. 25–34).

Lin, C.-Y., & Hovy, E. H. (2003). Automatic evaluation of summaries using n-gram co-occurrence statistics. In Proceedings of the 2003 Conference of the North American Chapter of the Association for Computational Linguistics on Human Language Technology (pp. 71–78).

Mani, I., & Bloedorn, E. (1999). Summarizing similarities and differences among related documents. Information Retrieval, 1(1–2), 35–67.

McKeown, K., Klavans, J., Hatzivassiloglou, V., Barzilay, R., & Eskin, E. (1999). Towards multidocument summarization by reformulation: Progress and prospects. In Proceedings of the Sixteenth National Conference on Artificial Intelligence, Orlando, Florida (pp. 453–460).

Mihalcea, R., & Tarau, P. (2005). A language independent algorithm for single and multiple document summarization. In Proceedings of the Second International Joint Conference on Natural Language Processing (pp. 19–24).

Nenkova, A., Vanderwende, L., & McKeown, K. (2006). A compositional context sensitive multi-document summarizer: Exploring the factors that influence summarization. In Proceedings of the 29th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval (pp. 573–580).

Otterbacher, J., Erkan, G., & Radev, D. R. (2005). Using random walks for question-focused sentence retrieval. In Proceedings of 2005 Human Language Technology Conference and Conference on Empirical Methods in Natural Language Processing (HLT/EMNLP2005) (pp. 915–922).

Page, L., Brin, S., Motwani, R., & Winograd, T. (1998). The PageRank citation ranking: Bringing order to the Web. Technical Report, Computer Science Department, Stanford University.

Porter, M. F. (1980). An algorithm for suffix stripping. Program, 14(3), 130–137.

Radev, D., Allison, T., Blair-Goldensohn, S., Blitzer, J., et al. (2003). The Mead multi-document summarizer. http://www.summarization.com/mead/. Accessed on 21 March 2006.

Radev, D. R., Jing, H. Y., Stys, M., & Tam, D. (2004). Centroid-based summarization of multiple documents. Information Processing and Management, 40, 919–938.

Saggion, H., Bontcheva, K., & Cunningham, H. (2003). Robust generic and query-based summarization. In Proceedings of the Tenth Conference on European Chapter of the Association for Computational Linguistics (pp. 235–238).

Salton, G., Singhal, A., Mitra, M., & Buckley, C. (1997). Automatic text structuring and summarization. Information Processing and Management, 33(2), 193–207.

Zhang, Z., Blair-Goldensohn, S., & Radev, D. R. (2002). Towards CST-enhanced summarization. In Proceedings of the 18th National Conference on Artificial Intelligence (pp. 439–445).

Zhang, B., Li, H., Liu, Y., Ji, L., Xi, W., Fan, W., Chen, Z., & Ma, W.-Y. (2005). Improving web search results using affinity graph. In Proceedings of the 28th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval (pp. 504–511).

Acknowledgement

Supported by the National Science Foundation of China (60703064).

Cite this article

Wan, X. Using only cross-document relationships for both generic and topic-focused multi-document summarizations. Inf Retrieval 11, 25–49 (2008). https://doi.org/10.1007/s10791-007-9037-5

DOI: https://doi.org/10.1007/s10791-007-9037-5