Abstract

Query auto completion (QAC) is used in search interfaces to interactively offer a list of suggestions to users as they enter queries. The suggested completions are updated each time the user modifies their partial query, as they either add further keystrokes or interact directly with completions that have been offered. In this work we use a state model to capture the possible interactions that can occur in a QAC environment. Using this model, we show how an abstract QAC log can be derived from a sequence of QAC interactions; this log does not contain the actual characters entered, but records only the sequence of types of interaction, thus preserving user anonymity with extremely high confidence. To validate the usefulness of the approach, we use a large scale abstract QAC log collected from a popular commercial search engine to demonstrate how previous and new knowledge about QAC behavior can be inferred without knowledge of the queries being entered. An interaction model is then derived from this log to demonstrate its validity, and we report observations on user behavior with QAC systems based on the interaction model that is proposed.

1 Introduction

Many search systems offer Query Auto Completion (QAC) to users to assist them as they enter queries. The common element of these QAC interfaces is that they offer a list of suggested complete queries that users can choose amongst, and that this list is updated each time the user enters another character. QAC functionality is not limited to Web search engines, but is also used in applications such as Apple Spotlight and interactive maps. Typically the completions are drawn from a repository of past queries, but could also be drawn from a synthetic query log (Bhatia et al. 2011; Maxwell et al. 2017) or, for example, in the case of location-based services, a gazetteer of geographic places.

The list of completions offered in each QAC event is based on a partial query, which we denote as \({{{\mathsf{P}}}}\). The user can modify their partial query \({{{\mathsf{P}}}}\) by adding or removing characters, or by interacting with the completions that are presented as a response to \({{{\mathsf{P}}}}\), stepping to a new partial query \({{{\mathsf{P}}}}'\). This behavior is in contrast to traditional search systems, where users examine and interact with the results of their search only after they have submitted a complete query, and interactions with a search result (such as clicking on an entry) do not modify the query. Thus a QAC system is itself a form of interactive search mechanism, where the aim is to retrieve a list of completions based on a partial query, and the users interact with the list of completions (search results from the QAC system) and modify their current partial query to begin the next round of QAC search. Being able to present the intended query after the user has entered only a few characters can save the keystrokes required for formulating queries and provide a more focused interactive search environment (Bar-Yossef and Kraus 2011). This can be particularly useful in mobile devices with small on-screen virtual keyboards, where the size of the keys can affect typing speed and accuracy (Ho Kim et al. 2014), and where the “fat finger” problem (Henze et al. 2012) reduces both the speed and accuracy of typing. In these interfaces the lack of tactile feedback (Findlater et al. 2011; Hoggan et al. 2008) and the inability to feel virtual key boundaries (Findlater et al. 2011) force the users to actively examine the screen during input. As a result, past studies have found that QAC usage patterns differ between desktop and mobile devices (Li et al. 2014, 2015).

Understanding of how users interact with QAC systems is essential to their design and evaluation (Hofmann et al. 2014). Such evaluations can be conducted online using user studies (Hofmann et al. 2014; Smith et al. 2016), with recent approaches to online evaluation (Garcia-Gathright et al. 2018) involving mixed methods such as surveys and modeling of user expectations. Evaluations staged offline (Kharitonov et al. 2013; Li et al. 2014; Mitra et al. 2014) have relied on large QAC logs and use of metrics such as reciprocal rank. Both of these approaches have significant limitations: user studies are expensive and are necessarily restricted in scale, while QAC logs contain personal information, invoking strong privacy concerns, and typically cannot be shared. In the past, query logs released for public access have been found to breach user privacy, despite efforts to anonymize them and prevent them from containing identifiable user information. For instance, an AOL query log released in 2006 was retracted after the queries from the log were traced back to the original users.Footnote 1 The recent General Data Protection RegulationFootnote 2 (GDPR) enforced by the European Union implies comprehensive restrictions on sharing of log and user data, and imposes penalties for data breaches that have made commercial search engines and social media platforms ever more cautious about data privacy. Recent data breachesFootnote 3 have added further concerns in regard to privacy and the appropriate uses of user data. As a result of these incidents, academic researchers face mounting obstacles in terms of gaining access to commercial logs to run large scale analyses.

Here we propose and explore a third approach, in which a QAC log is sanitized of potentially personal information, by removal of all specific characters entered by users, while retaining information about user actions, character types, and the timings of the user interactions. This abstract QAC log records, for example, whether a keystroke was a space, an alphanumeric character, or a backspace; records the lengths of the completions offered in response; and records the kind of device at which the query was entered. However, no specific character information is retained. In particular, the abstract QAC log used for the experiments described below follows a format sensitive to industry concerns regarding data privacy, and was cleared by the legal division of the company providing the logs, for limited use by the three academic collaborators in this project.

Based on our work with that abstract QAC log, we show that key conclusions from previous research can be rediscovered; we also present new findings, demonstrating the value of such a log as a viable option in support of future research. As neither partial queries nor completions are retained, and only a single interaction session is included for each possible user, we are confident that the abstract QAC log approach all but eliminates the risk of privacy violation, and thus provides the basis for a resource that could potentially be shared widely within the academic research community.Footnote 4

Given this context, the contributions of this paper are:

1. We propose an interaction model to capture the sequence of operations that can arise in a QAC interface.

2. Based on this interaction model, we create a format for an abstract QAC log that records a set of interaction statistics instead of the actual characters typed or the completion strings.

3. Using an abstract QAC log, we demonstrate how several key results from previous research can be re-generated without accessing the original log, and report our observations on those user behaviors based on the new log.

4. We derive an interaction model from the log and discuss the effects of this model on QAC system evaluation and design.

2 Background

Query Auto Completion (QAC) is found in a wide range of search interfaces. Command-line completion, provided by command-line interfaces, can be considered an early form of auto completion. Some Unix shells, for instance, show a list of completions at the press of a completion key (typically, “tab”), available after the user has entered a few characters of a known command. This feature can reduce the keystrokes required to enter long commands or filenames and helps to avoid spelling errors. A different form of auto completion, known as intelligent code completion (or simply code completion), is found in most syntax-aware program editors. These editors suggest lists of variable names, function names, and parameter lists, by constructing a real-time in-memory list based on the declarations in scope at that point in the program.

One of the first forms of QAC for large-scale web search was launched by Google in 2004, as a Google Labs feature, termed “Google Suggest” at the time.Footnote 5 Google initially offered the feature as an opt-in service, until it was fully integrated into web, mobile, maps, and browsers in 2008.Footnote 6 Currently, QAC can be found in many commercial search engines, including Google, Bing, Yahoo, and a range of desktop applications (email clients, Unity Dash, Apple Spotlight), as well as in mobile applications including interactive maps, contact look up, and multimedia apps.

Query auto completion is one of several mechanisms that might be provided as part of a search interface:

- Query auto completion (QAC): Present a list of completions extending the string that the user has already typed. How the completions extend the user input can vary based on the particular strategy variant adopted by the implementation. The most common approach is to offer completions that start with the string of characters that the user has already entered. The completions are updated interactively as the user types in additional characters.

- Query suggestion: Query suggestions are displayed along with the search results after the user submits a query, sometimes under a “did you mean” or “users also searched for” heading. Query suggestions and QAC are closely related problems (Jiang et al. 2014; Shokouhi 2013), but are used during different stages of searching. Mei et al. (2008) report live examples for query suggestions from commercial search engines.

- Query expansion: Query expansion mechanisms modify the query by adding terms to, or deleting terms from, the existing query to address the vocabulary mismatch problem (Carpineto and Romano 2012). While query suggestion methods present alternative queries for a user to choose from, Automatic Query Expansion (AQE) re-writes the query in the background (Carpineto and Romano 2012). The terms can also be expanded by the user (Ruthven 2003) via Interactive Query Expansion (IQE). Query Auto Completion (QAC) systems can be redesigned (Bast et al. 2007) to support IQE by suggesting a list of related words (and not just character level extensions). For example, the query “buy computer” might be expanded interactively by suggesting further terms such as “laptop”, “monitor”, or “gaming”.

The ability of QAC systems to generate useful completions, even after the user has entered only the first few characters of a query, can reduce the effort in formulating queries by shortening the feedback cycle between query formulation and refinement (Bar-Yossef and Kraus 2011). The user sees a list of target queries based on what they have already typed before they even submit the query, providing real-time feedback, and also before they examine any of the results. On the other hand, in the absence of completions, the users may feel “left in the dark” (Li et al. 2009) while entering queries. Additionally, the completions provide a sense of the underlying collection content, and of the search environment itself, making it easier for users to formulate queries.

2.1 Definitions

Given a partial query \({{{\mathsf{P}}}}\) of length \(\left|{{{{\mathsf{P}}}}}\right|\) characters, the goal of a QAC system is to present an ordered list of k strings \({{{\mathsf{C}}}}_1,{{{\mathsf{C}}}}_2, \dots ,{{{\mathsf{C}}}}_k\). Each completion \({{{\textsf{C}}}_{i}}\) is selected from a dictionary \(\mathbf{D}= \{({{{\textsf{T}}}_{i}},{{{\textsf{{score }}}}_{i}})\}\) that stores each target string \({{{\textsf{T}}}_{i}}\) along with a corresponding static assessment \({{{\textsf{{score }}}}_{i}}\) of its merits as a query. Generally, the strings \({{{\textsf{T}}}_{i}}\) are past query strings generated by the universe of users, or by some subset of similar (in some sense) users, or by this specific user alone. A candidate set is a set of strings from \(\mathbf{D}\) that match the partial query \({{{\mathsf{P}}}}\), where a range of different definitions of “match” is possible, even within the same search interface (Krishnan et al. 2017). That is, the universe of possible queries is first filtered to identify the candidates that match \({{{\mathsf{P}}}}\), and then some subset \({{{\mathsf{C}}}}_1,{{{\mathsf{C}}}}_2, \dots ,{{{\mathsf{C}}}}_k\) of the candidate set is selected via the use of a ranking function and presented to the user.
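The two-stage process described above (filter to a candidate set, then rank and keep the top k) can be illustrated with a minimal sketch. It assumes exact prefix matching as the definition of “match” and the static score as the ranking function; the function name `complete`, the toy dictionary, and its scores are hypothetical and chosen only for illustration.

```python
import heapq
from typing import Dict, List


def complete(partial: str, dictionary: Dict[str, float], k: int = 10) -> List[str]:
    """Return up to k completions for `partial` under exact prefix matching."""
    # Stage 1: filter the dictionary down to the candidate set.
    candidates = [target for target in dictionary if target.startswith(partial)]
    # Stage 2: rank by static score, highest first (ties broken alphabetically).
    return heapq.nsmallest(k, candidates, key=lambda t: (-dictionary[t], t))


# Toy dictionary D mapping target strings T_i to hypothetical static scores.
D = {"weather today": 9.0, "web mail": 7.5, "weather radar": 8.0, "wikipedia": 9.5}
print(complete("we", D, k=2))
```

A linear scan over the dictionary is used here only for clarity; it would not meet the latency requirements of a production system.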

A conversation starts when the user begins to type their query, and continues as they modify their partial query \({{{\mathsf{P}}}}\) in subsequent interactions. The end of the conversation is marked by an implicit or explicit signal monitored throughout the interaction (see Sect. 3 for details). Through the course of a conversation, \({{{\mathsf{P}}}}\) might be issued once or more as a query to the underlying search system, represented as \({{\mathsf{Q}}}\). A partial query is converted to a query and submitted for execution by clicking on a completion, pressing “Enter” on the keyboard, or clicking the search button. Each query submission runs the current partial query using the underlying search system, and retrieves a set of results. A conversation may continue after such a submission, and further modifications are considered part of the same conversation. For instance, during a conversation, a user can click on the completions or press the enter key several times to submit \({{{\mathsf{P}}}}\). Note that this definition of partial query and of the submitted query is relevant only to QAC systems.

In some cases, the user will abandon the conversation without submitting a query; other times they will continue the conversation by typing another character (including backspace, to erase a character); and yet other times they will select one of the offered completions \({{{\mathsf{C}}}}_1,{{{\mathsf{C}}}}_2, \dots ,{{{\mathsf{C}}}}_k\). In that third case, the click position in the set of completions is the index i of the completion \({{{\textsf{C}}}_{i}}\) that was selected. That is, when we refer to click position, we consider the mouse clicks on the completion list by which the user submits the corresponding completion to the search mechanism.

2.2 Efficiency and ranking

The dictionary from which completions are generated (typically, but not necessarily, a query log) can, for a commercial search engine, be large, as these engines can receive billions of queries per day. Google receives over 3.5 billion search requests per day.Footnote 7 In 2017, Google reported that 15% of searches they see every day are new.Footnote 8 By extension, for the rest of the queries, which have been seen in the past, there is an opportunity to present completions by searching over previously recorded query logs. A report released by Bing in 2017Footnote 9 shows that they serve up to 12 billion search requests per month. Generating completions from such collections within an acceptable time frame (100–150 ms for a QAC system in production (Maxwell et al. 2017)) is a challenging task, with a non-trivial fraction of that time likely to be consumed by network latency. Implementation strategies vary depending on how the partial query \({{{\mathsf{P}}}}\) is matched against the strings in the dictionary (Krishnan et al. 2017). The most common approach is to choose the strings having \({{{\mathsf{P}}}}\) as an exact prefix (Bar-Yossef and Kraus 2011; Cai and de Rijke 2016; Cai et al. 2016). A common data structure used for efficient prefix look-up is a trie (Askitis and Sinha 2007; Askitis and Zobel 2010; Heinz et al. 2002; Hsu and Ottaviano 2013) built on the strings from \(\mathbf{D}\). In a different approach, each term from \({{{\mathsf{P}}}}\) (obtained by splitting on whitespace) is prefix-matched against the terms from strings in \(\mathbf{D}\) (Bast and Weber 2006a, b, 2007) to generate the candidate set. An inverted index (Bast and Weber 2006a; Hawking and Billerbeck 2017) can be used to implement QAC systems operating in this mode. A partial query might differ from what the user intended to type due to input errors such as typographical errors and key-slips. Error tolerant QAC systems (Chaudhuri and Kaushik 2009; Ji et al. 2009; Li et al. 2011; Xiao et al. 2013) address this by allowing mismatches of up to a fixed number of characters while generating the candidate set.
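As an illustration of exact-prefix look-up with a trie, the following is a minimal, uncompressed sketch. The class names, method names, and sample strings are hypothetical; the cited implementations use considerably more compact and cache-conscious layouts.

```python
from typing import Dict, List, Optional, Tuple


class TrieNode:
    def __init__(self) -> None:
        self.children: Dict[str, "TrieNode"] = {}
        self.score: Optional[float] = None  # set only where a target string ends


class Trie:
    def __init__(self) -> None:
        self.root = TrieNode()

    def insert(self, target: str, score: float) -> None:
        node = self.root
        for ch in target:
            node = node.children.setdefault(ch, TrieNode())
        node.score = score

    def candidates(self, partial: str) -> List[Tuple[str, float]]:
        """Return every (target, score) pair having `partial` as a prefix."""
        node = self.root
        for ch in partial:  # walk down to the node that represents the prefix
            if ch not in node.children:
                return []
            node = node.children[ch]
        found: List[Tuple[str, float]] = []
        stack = [(node, partial)]
        while stack:  # enumerate the subtree below the prefix node
            current, text = stack.pop()
            if current.score is not None:
                found.append((text, current.score))
            for ch, child in current.children.items():
                stack.append((child, text + ch))
        return found


trie = Trie()
for target, score in [("maps", 5.0), ("mail", 7.0), ("map of europe", 3.0)]:
    trie.insert(target, score)
print(sorted(trie.candidates("ma"), key=lambda pair: -pair[1]))
```

Locating the prefix node takes time proportional to the length of the partial query, independent of the dictionary size; the cost of enumerating the subtree is what practical systems reduce, for example by storing precomputed top-scoring entries at internal nodes.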

Once the candidate set has been retrieved, the final stage of a QAC system is to rank the candidates based on a scoring function, and select the top-k scoring candidates to be presented as the suggestion list. In some cases, this step is followed by additional re-ranking (Cai et al. 2016) to decide the order of presentation. Early ranking measures relied on the static popularity (Bar-Yossef and Kraus 2011), reflecting popularity as captured by other users. Context-based ranking methods (Bar-Yossef and Kraus 2011) use recent queries from the user as search context and incorporate the similarity to the context into ranking. Other ranking measures include temporal popularity (Cai et al. 2014), personalization (Cai et al. 2014; Shokouhi 2013), diversification (Cai et al. 2016), and location sensitive ranking (Zhong et al. 2012). Cai and de Rijke (2016) review the ranking methods used in QAC systems.

2.3 User interactions with QAC

Query auto completion is a form of interactive search and thus a QAC interface must provide a range of operations. Understanding the possible sequence of operations in a QAC environment is useful for improving the performance of QAC systems (Li et al. 2014). Chaudhuri and Kaushik (2009) present a set of operations that can be performed by a user in a QAC environment, including insertion or deletion of a character anywhere in the current partial query, or choice of one of the completions. Another user model for QAC, proposed by Kharitonov et al. (2013), assumes that the sequence of operations follows a Markovian property, and hence that QAC operations can be modeled using a set of random variables. The model of Kharitonov et al. further assumes that the characters of a query are always entered sequentially from left to right; that a user examining a specific completion is always satisfied and submits the completion as their query; and that a user who is not satisfied with any of the completions offered during their interaction continues until they have typed their query in full. This model can also adapt to factors such as dependence on the length of partial query, and decay in examination probability with the rank in completion list.

The tendency of users to ignore completions (presumably without examining them), and the examination bias against positions in the completion list, is presented as a two-dimensional click model by Li et al. (2014, 2015). This model assumes that after typing a character the user either examines the completions, or continues typing the next character. While examining the completions sequentially by scanning from top to bottom, the user clicks on one of the completions, moves on to the next one, or, once they reach the end of the list, goes back to typing the next character. Smith et al. (2016, 2017) propose a user model that follows a sequence where users initiate their query, examine suggestions, submit the query, or continue typing. Depending on how users interact with the QAC suggestions, Smith et al. (2016) classify queries into different classes such as “queries formulated completely by typing the entire string” or “queries where there was an interaction with the completion list”. In recent work, Krishnan et al. (2017) propose a state model for capturing user interactions and discuss additional sequences such as choosing one of the completions using arrow keys (which may entirely replace the current partial query), and appending of additional characters to the updated partial query.

2.4 Observation of user behavior

User behavior with QAC systems can be understood by either analyzing large-scale query logs or conducting small-scale user studies in a controlled environment. Large-scale query logs were used in several studies (Jansen and Spink 2006; Jansen et al. 2000) to understand user behavior with classic search systems. In contrast, QAC systems update completions for every modification of the partial query; and hence in order to fully understand user behavior with QAC systems, access is needed to a detailed query log recording interactions at a keystroke level (Li et al. 2014). As already noted, the confidential nature of query logs means that access is increasingly being denied to academic researchers.

Previous studies based on large-scale keystroke logs or user studies have led to a range of observations in regard to behavior. Users were found to interact with the completions while forming 26–29% of the submitted queries (Hofmann et al. 2014; Smith et al. 2016), with most of the interactions focused on the first three positions in the completion list (Hofmann et al. 2014; Li et al. 2014; Mitra et al. 2014). The probability of clicking on a completion was found to be less for shorter partial queries (Li et al. 2014; Mitra et al. 2014). Li et al. (2014) report that the majority of the clicks on the completions happen when the partial query has length between three and twelve. Similarly, within each word in \({{{\mathsf{P}}}}\), interactions with the completions are found to happen after the users had typed the first three characters of the word (Li et al. 2014; Mitra et al. 2014). Hofmann et al. (2014) report that the position bias (completions at the top receiving more attention) in the completion list was invariant to the ranking criteria or suggestion quality, and suggest that the position bias is due to the examination bias, that is, the tendency of users to first examine the completions at the top. Based on the study conducted on a web-based QAC interface, Smith et al. (2016) report that the majority of queries were submitted by typing in full and pressing the “Enter” key, without interacting with the completions. These findings agree with the users’ tendency to skip checking the completions on desktop devices, reported by Li et al. (2014).

The typing skill of users was also found to affect the interactions with the completions. Touch-typing users tend to continuously examine the completions as they type, whereas users who look at the keyboard while typing examine the completions only after they finish typing (Hofmann et al. 2014), or explicitly create a pause in their typing. The typing speed of the users is another factor affecting QAC usage. Fast users tend to keep typing further characters without examining the completions (Li et al. 2014). It was also observed that the engagement rate with the completions tends to be higher around word boundaries (Li et al. 2014; Mitra et al. 2014).

Bringing all of these observations together, the main findings from the literature on user behavior with QAC systems can be summarized as follows:

- User interaction with the completion list is focused on the first three positions (Hofmann et al. 2014; Li et al. 2014; Mitra et al. 2014).

- The bulk of user interaction with the completion list arises only after the first few characters (typically three) are typed (Li et al. 2014; Mitra et al. 2014).

- Roughly 26–29% of queries involved interaction with QAC (Hofmann et al. 2014; Smith et al. 2016).

- Users are more likely to interact with the completions around word boundaries (Li et al. 2014; Mitra et al. 2014).

- Individuals vary in terms of QAC engagement, in part because of their different typing abilities (Hofmann et al. 2014; Li et al. 2014).

2.5 Privacy preservation for query logs

Query logs have been found to contain information that is sensitive or personal (Adar 2007; Cooper 2008; Xiong and Agichtein 2007). Some of the information directly contained in the log is of a confidential nature, such as medical conditions, sexual activities, addresses, financial details, or personal identification numbers. There is also an indirect threat to privacy; even query logs that do not contain sensitive fields, or in which sensitive fields are masked, are still vulnerable to attack by combining them with information that is publicly available from other sources (Cooper 2008; Jones et al. 2007). However, despite the risks involved in maintaining and sharing query logs, search engine providers need to retain query logs for reasons such as performance evaluation, and because of legal compliance requirements. Similarly, researchers need to access query logs in order to undertake study of user behaviors and search systems. The challenge in development of methods to anonymize query logs is to decide how much information can be retained for legitimate purposes without compromising privacy. This is known as the privacy-utility tradeoff (Adar 2007; Cooper 2008) in query log anonymization.

Cooper (2008) surveyed techniques used to anonymize query logs. These techniques include applying secured one-way hashing to the query strings; hashing of query tokens (Kumar et al. 2007); scrubbing of sensitive query content (Xiong and Agichtein 2007); reducing the amount of information retained for each session (Xiong and Agichtein 2007); and encrypting infrequent queries containing identifying information (Adar 2007). A desirable property of privacy-preserving query logs is k-anonymity (Samarati 2001), so that identification of a particular user’s search history from the log can be avoided (Adar 2007; Gotz et al. 2012; Hong et al. 2009).
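To make one of the surveyed techniques concrete, the sketch below illustrates hashing of query tokens using a keyed one-way hash. It is an assumption-laden illustration rather than the scheme of any cited work: the function name `hash_query_tokens`, the choice of HMAC-SHA256, the lowercasing step, and the truncation to 12 hexadecimal digits are all choices made here for readability.

```python
import hashlib
import hmac


def hash_query_tokens(query: str, secret_key: bytes) -> str:
    """Replace each whitespace-separated token with a keyed one-way hash."""
    hashed = []
    for token in query.lower().split():
        digest = hmac.new(secret_key, token.encode("utf-8"), hashlib.sha256).hexdigest()
        hashed.append(digest[:12])  # truncated only to keep the example readable
    return " ".join(hashed)


key = b"hypothetical-secret"
first = hash_query_tokens("cheap flights", key)
second = hash_query_tokens("cheap hotels", key)
print(first)
print(second)
```

In this sketch the two hashed queries share their first token, so token co-occurrence and frequency statistics survive the transformation. That retained structure is what makes the log useful for analysis, and it is also one side of the privacy-utility tradeoff discussed above.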

3 Interaction model and abstract QAC logs

To understand user interactions with QAC systems, we extend the set of user actions considered in previous studies (Kharitonov et al. 2013; Krishnan et al. 2017; Li et al. 2015) and observe that a QAC system moves through a set of states based on the user actions. To accurately model how users interact with a QAC system, it is necessary to know the state of the system at any given point; modeling of user actions alone will not capture sufficient information about QAC behavior.

We therefore propose an interaction model for QAC systems formalizing the set of user actions possible in a QAC interface and the corresponding system states. The model is based on the following assumptions.

Assumption 1

The user actions change the system state and behavior. The set of available user actions is determined by the current state of the system.

Assumption 2

An interaction model should allow for conversations to continue after completions have been selected.

Assumption 3

The transitions between different system states in the interaction model should be determined solely by the current state and the observed action. That is, given a system state and transition, the model predicts the next state without ambiguity.

Assumption 1 follows from the observation that user actions are constrained by the current state of the QAC system. Assumption 2 implies that the next action should be represented as an extension of the current partial query \({{{\mathsf{P}}}}\) until a conversation ends. For example, clicking on a completion produces search results, which can be regarded as a termination state. However, most of the interfaces retain the query in a redrawn search box as part of the results page, and the user can still modify \({{{\mathsf{P}}}}\). An interaction model should interpret such modifications as being part of the same conversation. Assumption 3 implies that the model should account for how the system behavior changes with user actions. For example, if the user clicks on a completion, the QAC system submits the query and no completions are displayed until further modifications are applied to the query. Similarly, if the user presses arrow keys to browse through completions and modify the partial query, the system doesn’t offer new completions until \({{{\mathsf{P}}}}\) is modified by adding or removing characters.

3.1 Interaction model

We formalize the operations that a user can perform at a QAC interface into a set of user actions. Assume that the system is in an initial state and the partial query \({{{\mathsf{P}}}}\) is a null string. The user then initiates the interaction by setting \({{{\mathsf{P}}}}\) to be a string of arbitrary length, typically by either typing a single character, or by pasting in a string copied from elsewhere. The user might also commence with an empty string in which case, for example, completions may be loaded from their browsing history. At each given point during the interaction, a QAC system is then in one of the following states:

- \({{{\textsf{q}}}_{i}}\): The search box is empty and \({{{\mathsf{P}}}}\) is set to the null string \(\phi\). The system is in state \({{{\textsf{q}}}_{i}}\) when the search page is loaded and the user hasn’t yet interacted with the system.

- \({{{\textsf{q}}}_{a}}\): The active state in which the QAC system offers completions based on \({{{\mathsf{P}}}}\).

- \({{{\textsf{q}}}_{p}}\): A passive state where the completions are displayed and the user can Probe through the completions using the arrow keys. A series of Probe operations will replace \({{{\mathsf{P}}}}\) with the highlighted completion, but, in this state, the system doesn’t update the completions. For a logging mechanism running at the server side, this state is unobservable since completion requests are not sent from the browser.

- \({{{\textsf{q}}}_{s}}\): If the user submits \({{{\mathsf{P}}}}\) using one of the submit actions (discussed under user actions) the system goes to the search engine result page (SERP) and the system is said to be in a SERP display state \({{{\textsf{q}}}_{s}}\). The search box will retain \({{{\mathsf{P}}}}\) and no completions are offered.

Accordingly, a user can perform the following user actions at the interface.

- Initiate :

-

The user commences their interactions (or initiates a conversation) with an Initiate operation. Usually the interactions begin when a user enters the first character of the intended query, setting \({{{\mathsf{P}}}}= c \in \varSigma\), where \(\varSigma\) is the alphabet. However, they can start by pasting a string into the search box, or simply clicking on the search box may load queries from their history. To generalize these use cases, we say that interactions can be initiated by setting \({{{\mathsf{P}}}}= \varSigma ^*\), that is by entering a string of arbitrary length (including 0 length).

- InDel :

-

Insert and Delete. Insertions and deletions can happen at an arbitrary index in \({{{\mathsf{P}}}}\) and can modify several characters in one step. A special case of this operation is when the user selects a substring of \({{{\mathsf{P}}}}\) and then types replacement characters.

- Extend :

-

This operation is currently offered only on mobile devices with an on-screen keyboard. The users can replace \({{{\mathsf{P}}}}\) with one of the completions by tapping on the diagonal arrow (\(\nwarrow\)) next to the completion. This operation will replace \({{{\mathsf{P}}}}\) with the corresponding completion and append a whitespace at the end. The system remains in active state (\({{{\textsf{q}}}_{a}}\)) and the completions are updated based on the modified \({{{\mathsf{P}}}}\).

- Engage :

-

This operation only applies to a physical keyboard. The user presses the down arrow key to start highlighting the completions. An Engage operation replaces \({{{\mathsf{P}}}}\) with the first completion in the list.

- Probe :

-

This operation only applies to a physical keyboard. After an Engage, the user can use arrow keys to Probe through the completion list highlighting a completion of their choice, each time replacing \({{{\mathsf{P}}}}\) with the corresponding completion. They could also use the mouse to highlight the completions, resulting in a similar interaction. In both these cases, this operation is not observable at the server side since new completion requests are not sent (unless a custom keystroke logger has been installed).

- Submit: A Submit operation sends \({{{\mathsf{P}}}}\) to the underlying search system and retrieves the results. A Submit can be performed either by pressing the Enter key, or by clicking on either a completion or the “search” button.

- Depart: The current conversation is terminated by a Depart operation. There are two ways to end the conversation. One is an active departure, which occurs when the user reloads the page, or removes every character in \({{{\mathsf{P}}}}\) by consecutive Delete operations. The other is a passive departure, which occurs when, for example, the session times out or the system detects that the user is no longer participating in the conversation.

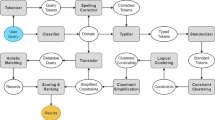

These user actions and system states are captured as a probabilistic state transition model in Fig. 1.

3.2 Specification of abstract QAC log

With the interaction model of Fig. 1 in place, we present a specification for an abstract QAC log that does not record the characters entered by the user. This log contains enough information to reconstruct the interaction model and understand user behavior with QAC systems, without including the actual characters.

We make use of a keystroke-level query completion log that records the following fields for each interaction. An interaction is an observable change at the QAC interface as a result of a user action. How an interaction is logged depends on the design of the logger. For instance, a user appending a character to \({{{\mathsf{P}}}}\) and then clicking on a completion to submit it can be logged as a single interaction. The fields included in such a keystroke-level QAC log can be:

1. The partial query entered by the user;
2. A timestamp for the current interaction;
3. A unique conversation ID;
4. The set of completions presented, if any;
5. The completion clicked, if any; and
6. If a query is submitted, then the corresponding query string.

Table 2 gives an example of a QAC log with these fields.

The conversion of a query completion log to an abstract QAC log is a unidirectional process and, given the complete absence of contextual information such as characters or answer documents, is regarded as being non-reversible, at least not in any way that might reveal any of the queries associated with any users. The fields recorded in the abstract QAC log used in the experiments described in Sect. 4 are given in Table 1. An abstract QAC log may well contain more fields than the actual log recorded, because any detailed information inferable from the original strings must be computed and stored in the abstract QAC log if it is to be preserved. Table 3 shows an example abstract QAC log corresponding to the conversation shown in full in Table 2. The desired privacy-utility trade-off in the development of the abstract QAC log specification is to retain enough information from the original log to allow studies of behavior, while being consistent with the underlying principle of not allowing any leakage of information. Given an abstract QAC log conforming to our specification, the transition probabilities in the proposed interaction model can be derived without ambiguity. We also include additional fields that can be useful in analyzing the user behavior discussed in the experiments that are described in Sect. 4.

Our method differs from the techniques used to anonymize query logs. We are examining fragments of queries as they are entered, not the complete queries used in searches. For example, understanding how users interact with a QAC system at different lengths of partial query is a possible area for study; ideally an abstract QAC log should provide enough information to conduct such analyses. User interactions during query formation may not be of significance in the usual foci of investigation of query logs.

4 Experiments

4.1 Preparing the abstract QAC log

Data gathering The data used in our experiments was randomly sampled over a week from 13 August 2018 and consists of Bing (footnote 10) desktop and mobile QAC data, where users had set their locale to the United States and their language to English. In a preliminary sequence of steps, the data was cleaned (as described below), anonymized, and reduced to the format given in Table 1. We refer to the cleaned log as Bing-Abs-QAC-2018. Note that all of the initial processing of the private Bing QAC log was carried out solely by the second author, using secure Microsoft systems at all times, operating within the terms of their employment contract. The results reported in this section were then obtained by analyzing Bing-Abs-QAC-2018, without any further reference to the original character-level logs. At no stage did the three non-Microsoft authors have access to the initial QAC logs from Bing.

Data cleaning Conversation data is organized as a series of partial queries and suggested completions, based on a timestamp. This timestamp is derived from the instant on the server when the entry was logged. Many conversations in the initial log data contained out-of-order timestamps, caused by a variety of problems, including differences in network latency. In some instances interactions were not logged at all, which could, as one possible explanation, be a consequence of data center failovers. Where out-of-order timestamps were detected, jumps in the lengths of partial queries would be indistinguishable from interactions in which the user pasted multiple characters in a single operation.

When preparing Bing-Abs-QAC-2018, we removed all conversations that contained inconsistent or implausible timing information. We also removed a small number of conversations where lists of suggestions at times contained more than eight items (which can be the case under certain circumstances, depending on user interface configuration and type of device used). The data does not distinguish between a user clicking on a completion and a user highlighting a completion (through Engage followed by a series of Probe operations) and then pressing “Enter” to submit that completion as their query. Throughout our experiments, both of these scenarios are referred to as a “click” at \({{{\textsf{clk}}}_{i}}\).
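The cleaning rules described above can be sketched as a simple per-conversation filter. This is a minimal illustration rather than the procedure actually used to build Bing-Abs-QAC-2018: the field names `ts` and `n_completions` are hypothetical, and "inconsistent or implausible timing" is approximated here by requiring non-decreasing timestamps within a conversation.

```python
from typing import Dict, List

MAX_COMPLETIONS = 8  # threshold mentioned in the text


def is_clean_conversation(rows: List[Dict[str, float]]) -> bool:
    """Return True if a conversation passes both illustrative checks.

    Each row is assumed (hypothetically) to carry a timestamp `ts` and
    the number of completions shown, `n_completions`.
    """
    timestamps = [row["ts"] for row in rows]
    # Assumption: timing is "plausible" if timestamps never go backwards.
    in_order = all(a <= b for a, b in zip(timestamps, timestamps[1:]))
    # Drop conversations in which any suggestion list exceeds eight items.
    small_lists = all(row["n_completions"] <= MAX_COMPLETIONS for row in rows)
    return in_order and small_lists
```

A stricter filter could also bound the gap between consecutive timestamps; the text does not say which criteria were applied.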

Anonymization Since the data was gathered from a real search engine and represents real user interactions, the Bing log contains two types of private user data: the partial queries entered (whether or not these were typed in their entirety) and the completions, which are often personalized, in particular for short or zero-length partial queries. When \(\left|{{{{\mathsf{P}}}}}\right| =0\), the completions may be loaded from previously issued queries from that user. That is, the partial queries likely reveal current user intents, and the completions may expose historic queries issued by that user. Both of those possible data leakages are eliminated by the abstraction process; moreover, to fully safeguard the privacy of users in regard to query volume and times of operation, we restrict Bing-Abs-QAC-2018 to show at most a single conversation per user per day, chosen uniformly at random. We then further anonymize Bing-Abs-QAC-2018 in the following ways:

- Removal of all user IDs and any other metadata such as geographical locations of the user;
- Replacement of all absolute timestamps with offsets relative to the start of the conversation; and
- Replacement of partial query and completion strings with metadata that details the set of word lengths involved.

An example showing a subset of the available details of the original Bing log data and the abstracted version in Bing-Abs-QAC-2018 is provided in Tables 2 and 3.
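The three anonymization steps listed above can be illustrated with a short sketch. It is not the conversion code used for Bing-Abs-QAC-2018: the `RawInteraction` record and the output keys (`p_word_lens`, `c_word_lens`, `q_word_lens`) are hypothetical names, and only `ts` and `clk_i` echo field names that appear in the text. User IDs are simply never copied to the output.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class RawInteraction:
    """One row of a keystroke-level log (hypothetical field names)."""
    timestamp_ms: int
    partial_query: str
    completions: List[str]
    clicked_index: int              # -1 when no completion was clicked
    submitted_query: Optional[str]  # None when nothing was submitted


def word_lengths(text: str) -> List[int]:
    """Replace a string by the lengths of its whitespace-separated words."""
    return [len(word) for word in text.split()]


def abstract_conversation(rows: List[RawInteraction]) -> List[Dict[str, object]]:
    """Convert one conversation to rows that contain no characters."""
    if not rows:
        return []
    start = rows[0].timestamp_ms
    abstracted = []
    for row in rows:
        abstracted.append({
            # Absolute timestamps become offsets from the conversation start.
            "ts": row.timestamp_ms - start,
            # Strings are reduced to word-length metadata.
            "p_word_lens": word_lengths(row.partial_query),
            "c_word_lens": [word_lengths(c) for c in row.completions],
            "clk_i": row.clicked_index,
            "q_word_lens": (word_lengths(row.submitted_query)
                            if row.submitted_query is not None else None),
        })
    return abstracted
```

Note that this simplified word-length summary discards trailing whitespace, so a real specification would need an extra field if that detail has to survive abstraction.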

4.2 Description of Bing-Abs-QAC-2018

The log used for the experiments contains a total of 12.96 million interactions (each a single row, as in Table 2) recording one million conversations each from mobile and desktop devices. The fields included in the dataset follow the specifications for an abstract QAC log discussed in Sect. 3.2. The conversations are identified by internal heuristics used by Bing and are explicitly marked with a unique \({{\mathsf{cid}}}\) (Conversation ID) in the log; those heuristics include a daily midnight reset at which time all conversations are terminated. In uncompressed form the log requires 1.34 GiB; some summary statistics are reported in Table 4. The majority of the collected conversations are short, with a median of four interactions, and the partial queries entered were also relatively short, with a median length of seven characters. Thus, the majority of the conversations involve only a few interactions, based on short partial queries. Comparing the conversation lengths (in number of interactions) between desktop and mobile devices, Figure 2 shows that the majority of the mobile conversations are short, although the fraction of long conversations (more than ten interactions) is slightly higher on mobile (11.5%) than on desktop (9.7%).

Initial length of partial query Users can initiate a conversation in three ways: (i) by clicking on the search box without entering any characters to load completions from their search history; (ii) by typing a single character; and (iii) by pasting a string of arbitrary length in the search box. Figure 3 shows the distribution of initial length of partial query for desktop and mobile devices, indicating how users typically initiate a conversation. Around 43% of conversations start with an empty partial query and 27% of conversations are initiated by entering a single character. These results show that it is reasonably common for users to start an interaction with an arbitrary string, as permitted by our interaction model.

Mode of submission Users can submit a query to the underlying search system in different ways. Pressing “Enter” or clicking the search button after typing a character submits \({{{\mathsf{P}}}}\) as the query. We collectively call these two events a “Search”. Alternatively the users can click on a completion or highlight a completion and press “Enter” to submit one of the completions as a query. We are not able to distinguish between these cases in Bing-Abs-QAC-2018, and treat both as “Click” events. Statistics in regard to the mode of submission are reported in Table 5. We see that on both mobile and desktop, users submit their partial queries more often (86.6% and 82.2%, respectively) by “search” than they submit a completion by clicking on the completion list.

Query Auto Completion usage patterns Users interact with the completions with two different goals: (i) to advance their partial query via Engage or Extend operations, replacing it with one of the completions; or (ii) to click on one of the completions to Submit it as a query. Both of these cases represent an interaction with the completions. Table 6 reports the percentage of conversations (by total number of conversations) where users engaged with the completions at least once. A total of 16.1% of conversations received at least one click on one of the completions, and this number is much higher for mobile devices (20.2%) compared to desktop devices (12.0%). The percentage of QAC usage for advancing partial queries was found to be slightly higher on desktop (4.2%) than on mobile (2.3%). The overall interaction percentage of 19.4% with the completions is less than the 26–29% previously reported (Hofmann et al. 2014; Smith et al. 2016). We believe that this difference may be a consequence of the exact way conversations are defined in the different logs.

4.3 User interactions at different completion indexes

To understand how the probability of clicking on a completion varies with the length of partial query, we revisit the results reported by Li et al. (2014) using Bing-Abs-QAC-2018, plotting in Fig. 4 the relationship between click position in the list of completions and the length of partial queries. In the heatmap, the probability of a click is normalized across the completion indexes for each bin of \(\left|{{{{\mathsf{P}}}}}\right|\). Most of the clicks on the completion list are focused on the first position. The bias towards the first index is prominent for partial queries of shorter length (≤ 4) and for long partial queries of length ≥ 36. The click positions are more spread out across the indexes for mobile devices, indicating that users examine completions (and find useful ones) at deeper ranks on mobile devices more often than they do on desktop devices.

Fig. 4 Length of partial query and distribution of click positions in the completion list. The number of clicks across the completion indexes for each bin of \(\left|{{{{\mathsf{P}}}}}\right|\) is normalized to one. The completion indexes for desktop are truncated at five (maximum completion index for mobile) to allow the side-by-side comparison.
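The per-bin normalization used for the heatmap can be sketched as follows. The input format (pairs of partial query length and clicked index) and the bin width of four characters are assumptions made for illustration only; the text does not state the bin boundaries that were used.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def click_distribution_by_length(
    clicks: Iterable[Tuple[int, int]], bin_width: int = 4
) -> Dict[int, Dict[int, float]]:
    """Normalize click counts over completion indexes within each |P| bin.

    `clicks` holds (partial_query_length, clicked_index) pairs. The result
    maps bin number -> {clicked_index: fraction}, and the fractions in
    each bin sum to one.
    """
    counts: Dict[int, Counter] = defaultdict(Counter)
    for p_len, clk_i in clicks:
        counts[p_len // bin_width][clk_i] += 1
    normalized = {}
    for bin_id, row in counts.items():
        total = sum(row.values())
        normalized[bin_id] = {idx: n / total for idx, n in row.items()}
    return normalized
```

For example, four clicks in the first bin, three of them on index 1, give that bin a value of 0.75 at index 1 and 0.25 at the other clicked index.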

The completion index used for advancing the partial query by Engage or Extend actions shows similar usage patterns. From Fig. 5, on desktop, in more than 61.1% of cases the users advanced their partial query using the first completion in the list. This interaction rate drops to 17.5% at the second index. On mobile, the interaction rate for the first index is 54.0%. In general, we observe a higher interaction rate with lower indexes for advancing partial queries on mobile compared to desktop. There is a strong bias towards top completions in terms of clicks and engagement for advancing the partial query, confirming the observations reported in past studies (Hofmann et al. 2014; Li et al. 2014; Mitra et al. 2014). On mobile, users are more likely to interact with completions at lower indexes either to advance their partial query or to click on a completion.

4.4 Typing speed

Logs can be used to examine the impact of typing speed. However, identification of the typing speed of users in Bing-Abs-QAC-2018 is not straightforward, as backspaces and selection of completions are confounding. In order to compare how fast users type in the search box on desktop and mobile, we consider only the conversations where all the operations are an Append of a single character. For these conversations, the length of the partial query increases uniformly by 1 in each step and the only action is entry of characters. We further remove conversations that start with \(\left|{{{{\mathsf{P}}}}}\right| > 1\). Among the conversations in Bing-Abs-QAC-2018, 32.6% have only Append and 4.59% have single-character Append as the only user action.
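A minimal sketch of this selection and of one way to compute a per-conversation typing speed is given below. It assumes each conversation is available as parallel lists of timestamp offsets (in seconds) and partial query lengths; these inputs, and the choice of characters added divided by elapsed time, are illustrative assumptions rather than the exact computation behind the reported figures. The length test is also only a proxy: the log itself tags actions explicitly, whereas a length increase of one could in principle be an Insert.

```python
from typing import List, Optional


def is_single_char_append_conversation(p_lens: List[int]) -> bool:
    """True if the conversation starts with |P| <= 1 and grows by one each step."""
    if not p_lens or p_lens[0] > 1:
        return False
    return all(b - a == 1 for a, b in zip(p_lens, p_lens[1:]))


def typing_speed(ts: List[float], p_lens: List[int]) -> Optional[float]:
    """Characters per second for a qualifying conversation, else None."""
    if len(ts) < 2 or len(ts) != len(p_lens):
        return None
    if not is_single_char_append_conversation(p_lens):
        return None
    elapsed = ts[-1] - ts[0]
    if elapsed <= 0:
        return None
    return (p_lens[-1] - p_lens[0]) / elapsed
```

Conversations that contain a paste, a deletion, or a completion selection fail the length test and are excluded, mirroring the filtering described in the text.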

The distribution of typing speed on desktop and mobile is shown in Fig. 6. As expected, users type faster on desktop (\({mean }={2.13}\) char/s, \({SD }= 1.41\)) compared to mobile (\({mean }={1.31}\) char/s, \({SD }= 0.59\)). The typing speed is more widely spread on desktop compared to mobile. From Fig. 7 we see that mean typing speed is lower when users start typing (\(\left|{{{{\mathsf{P}}}}}\right| \le 4\)) on both desktop and mobile. The mean typing speed is above two characters per second for short to medium length partial queries (\(4 \le \left|{{{{\mathsf{P}}}}}\right| \le 23\)) and this speed reduces for longer partial queries, perhaps because of deliberate pauses while suggested completions are reviewed. Typing speed on mobile shows a similar trend, with a maximum mean typing speed of 1.23 char/s compared to 2.27 char/s for desktop.

Fig. 7 Typing speed (in characters per second) against the length of partial query, for conversations in which users performed an Append of a single character between every pair of consecutive interactions during the entire conversation. The shaded regions show the distribution of typing speeds across different lengths of partial queries and the solid lines show the mean of each distribution.

4.5 Interaction model and transition probabilities

Each row in Bing-Abs-QAC-2018 corresponds to an interaction. For example, a row might record the sequence of user actions \({Engage }\rightarrow{Insert }\rightarrow{Submit }\), and can be tagged based on a set of rules. Identification of Initiate involves checking whether the timestamp field in the row is set to 0 (\({{\mathsf{ts}}}= 0\)). Interactions are explicitly tagged in Bing-Abs-QAC-2018 with Insert, Delete, Engage, or Extend whenever the action can be identified by comparing the current partial query with the previous partial query and the previous completions (see footnote 11). An interaction can be tagged as Depart if the next interaction starts with \({{\mathsf{ts}}}=0\). The Click and Submit actions have explicit markers in Bing-Abs-QAC-2018 (\({{{\textsf{clk}}}_{i}}\ne -1\) or a non-empty \({{{\mathsf{Q}}}}_{{{\mathsf{len}}}}\) field).
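To make the comparison-based tagging concrete, the sketch below shows one plausible rule set that could be applied while the abstract log is being generated, when the strings are still available. The function name, the rule order, and the fallback label `Other` are assumptions for illustration; the actual rules used for Bing-Abs-QAC-2018 are not given in the text.

```python
from typing import List


def tag_action(prev_p: str, cur_p: str,
               prev_completions: List[str], on_mobile: bool) -> str:
    """Guess the user action that turned prev_p into cur_p (illustrative rules)."""
    # Extend (mobile): P becomes a previous completion plus a trailing space.
    if on_mobile and any(cur_p == c + " " for c in prev_completions):
        return "Extend"
    # Engage (physical keyboard): P becomes one of the previous completions.
    if not on_mobile and cur_p in prev_completions:
        return "Engage"
    # Append / Pop: characters added to, or removed from, the end of P.
    if cur_p.startswith(prev_p) and len(cur_p) > len(prev_p):
        return "Append"
    if prev_p.startswith(cur_p) and len(cur_p) < len(prev_p):
        return "Pop"
    # Insert / Delete: length changes somewhere other than the end.
    if len(cur_p) > len(prev_p):
        return "Insert"
    if len(cur_p) < len(prev_p):
        return "Delete"
    return "Other"
```

Because Engage and Extend are inferred purely from string equality with a previous completion, a user who happens to type a string that matches a completion would be mis-tagged under these rules, which is one way spurious tags could arise.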

Given the ordered set of actions for each interaction in Bing-Abs-QAC-2018, the transition probabilities for the interaction model can be derived based on a first-order Markov chain assumption. Assuming that each user action depends only on the action preceding it, we can estimate the probability for each user action to follow the previous one. The transition probabilities between user actions from desktop devices estimated from Bing-Abs-QAC-2018 are reported in Table 7. The transition probabilities for mobile devices show a similar trend. Table 8 reports the Kullback–Leibler (KL) divergence between the transition probabilities from each user action on desktop (Table 7) and the corresponding probabilities on mobile. Since Bing-Abs-QAC-2018 contains a \({{\mathsf{date}}}\) field we can calculate the transition probabilities across different days for desktop and mobile. Table 8 shows the maximum value of KL divergence calculated over the transition probability matrices for mobile and desktop devices obtained from each of the seven days in the log. We see that the transitions from Initiate are slightly different on desktop and mobile (with a KL divergence of 0.34) and that transitions from all other states on mobile show trends similar to those on desktop. The lack of substantial variation in the transition probabilities across different days in the log shows that the interaction model is stable. In the same way, the notion of KL divergence can be extended to compare the transition probabilities derived from an abstract QAC log collected over a different period of time.
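The estimation described above amounts to counting consecutive action pairs and normalizing each row, after which two rows can be compared with the KL divergence. The sketch below is illustrative: the input format (one list of action labels per conversation), the use of the natural logarithm, and the small constant `eps` that guards against zero probabilities in the second distribution are all assumptions, since the text does not specify them.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List


def transition_probabilities(
    conversations: List[List[str]]
) -> Dict[str, Dict[str, float]]:
    """First-order Markov estimate: P(next action | current action)."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for actions in conversations:
        for current, following in zip(actions, actions[1:]):
            counts[current][following] += 1
    probs = {}
    for current, row in counts.items():
        total = sum(row.values())
        probs[current] = {nxt: n / total for nxt, n in row.items()}
    return probs


def kl_divergence(p: Dict[str, float], q: Dict[str, float],
                  eps: float = 1e-9) -> float:
    """KL(p || q) over the union of outcomes, in nats."""
    total = 0.0
    for outcome in set(p) | set(q):
        p_k = p.get(outcome, 0.0)
        if p_k == 0.0:
            continue  # terms with p_k = 0 contribute nothing
        q_k = max(q.get(outcome, 0.0), eps)  # assumed smoothing
        total += p_k * math.log(p_k / q_k)
    return total
```

Applying `kl_divergence` to the row for a given action in a desktop matrix and the matching row in a mobile matrix gives one per-action value of the kind summarized in Table 8, under the stated assumptions.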

Long versus short conversations The last row of Table 8 gives the KL divergence between the transition probabilities for long and short conversations. A conversation is considered to be “long” if it contains seven or more interactions (the mean conversation length was 6.48), and considered to be “short” if not. The normalized transition probability for the \({Append }\rightarrow{Depart }\) transition, which characterizes the probability of abandoning the query after appending characters, was found to be 0.25 for short conversations, much higher than the value of 0.03 found for long conversations. In general, the transition probabilities into Depart are higher for short conversations, suggesting that short conversations have a higher probability of abandonment than long conversations. The exception is the transition \({Submit }\rightarrow{Depart }\) which represents the probability of ending a conversation after submitting a query. It has a probability of 0.63 for short conversations and 0.39 for long conversations. The transition probabilities from Submit to the operations Append, Insert, Pop and Delete were also higher for long conversations, indicating that long conversations are more likely to be continued after submitting a query. Considering the KL divergence, transition probabilities, except those from Engage and Submit, show higher variation between long and short conversations than is evident when desktop and mobile users are compared.

Heatmaps showing the transition probabilities are provided in Fig. 8, with the probabilities for the next user action given the current action normalized across each row. Comparing the interaction patterns between desktop and mobile devices, we see broadly similar activity, with initiation of an interaction on a desktop being followed by Submit in 48% of the cases and only slightly less (46%) on mobile. On a mobile device, users making partial advancements to the partial query by Extend are more likely to remove characters from the end (Pop) than when they Engage with completions on a desktop. On desktop and mobile devices, the probability of continuing a conversation after a Submit was found to be 44% and 42% respectively. If a conversation is continued after Submit then it is likely to be by appending characters to the end of the partial query. Alternatively, we can visualize the flow of transitions from one user action to the other as an alluvial diagram as shown in Fig. 9. The flow of transitions indicates the fraction of transitions between each originating and terminating user action.

On a desktop, users move to Depart and terminate their interactions predominantly from Submit and Append. A higher fraction of transitions from Initiate to Depart on mobile compared to desktop indicates that users tend to abandon their queries more often on mobile than desktop. On both devices, users insert characters at arbitrary positions in \({{{\mathsf{P}}}}\) rather than at the end (that is, perform an Insert) more frequently after Initiate or after they have submitted a query (Submit). The operations Append, Insert, Pop and Delete tend to run in chains. For instance, Append follows Append more often than other user actions. Similarly Insert, Pop and Delete actions are often followed by the same action.

4.6 Delete chains

The models in the literature have focused on how users add more characters to the partial query and interact with the completions. In Bing-Abs-QAC-2018, however, we observe a substantial volume of Delete operations from an arbitrary position in the partial query or Pop from the end of a partial query. From Fig. 9 we know that Pop and Delete actions repeat themselves in consecutive interactions. Figure 10 shows the distribution of run-length of Delete chains. For this experiment, we combine both Pop and Delete as a single operation Delete and count the length of Delete runs. On desktop, there are 23,436 runs of length ≥ 7, the median length of partial queries; on mobile, there are 38,905 runs with length ≥ 7. These results suggest that there are many cases where users delete characters from \({{{\mathsf{P}}}}\). The total number of Delete runs on mobile is 21.9% higher than the counts observed from desktop.
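Counting Delete runs as described, with Pop and Delete merged into a single operation, can be sketched in a few lines. The input is assumed to be the ordered list of action labels for one conversation; the label strings themselves are illustrative.

```python
from itertools import groupby
from typing import List


def delete_run_lengths(actions: List[str]) -> List[int]:
    """Lengths of maximal runs of consecutive Pop/Delete actions."""
    # Treat Pop and Delete as the same operation, as in the experiment.
    merged = ["Delete" if a in ("Pop", "Delete") else a for a in actions]
    return [sum(1 for _ in group)
            for label, group in groupby(merged) if label == "Delete"]
```

Aggregating these lists over all conversations and counting the entries of each length yields a run-length distribution of the kind plotted in Fig. 10.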

It is also interesting to see at which lengths of \({{{\mathsf{P}}}}\) users delete characters from \({{{\mathsf{P}}}}\), as a fraction of the final length of the partial query at the end of the current conversation. This gives a measure of the fraction of length entered by the users before they Delete characters from \({{{\mathsf{P}}}}\). In Fig. 11, the fraction of the length of \({{{\mathsf{P}}}}\) is calculated by dividing \(\left|{{{{\mathsf{P}}}}}\right|\) when a Delete action is performed by the length of \({{{\mathsf{P}}}}\) at the end of the corresponding conversation. From Fig. 10 we know that runs of Delete exist in the log. The fraction reported in Fig. 11 can be greater than 1 if the final length of the partial query is less than the length of \({{{\mathsf{P}}}}\) at the time of the Delete operation. Figure 11 shows that users are more likely to start deleting characters at the beginning of a conversation or when they are about to terminate the conversation (when the fraction typed is close to one).
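The fraction plotted in Fig. 11 can be illustrated with a short sketch. It assumes parallel lists of partial query lengths and action labels for one conversation, takes the logged length of the row tagged as a deletion as the numerator, and skips conversations that end with an empty partial query; each of these choices is an assumption made for illustration.

```python
from typing import List


def delete_position_fractions(p_lens: List[int], actions: List[str]) -> List[float]:
    """|P| at each Pop/Delete divided by |P| at the end of the conversation."""
    if not p_lens or p_lens[-1] == 0:
        return []  # assumption: undefined when the final length is zero
    final_len = p_lens[-1]
    return [p_len / final_len
            for p_len, action in zip(p_lens, actions)
            if action in ("Pop", "Delete")]
```

A conversation with lengths 5, 6, 5, 2 and actions Initiate, Append, Pop, Delete gives fractions 2.5 and 1.0, showing how values above one arise when the final partial query is shorter than it was at the time of a deletion.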

5 Discussion

Variations of the interaction model We argue that the proposed interaction model provides an accurate representation of the possible set of operations users perform when they interact with a typical commercial search engine-based QAC system via a browser. There is no universal interaction model for QAC systems, however, and models of necessity must vary with the design of the QAC system and the interface. In particular, there are systems where the search results are updated as the user enters their query, including the popular media services Spotify (footnote 12) and Netflix (footnote 13). In practice, the scope of an interaction model is limited by the interface design and logging capabilities of the system. For instance, in the search interface provided by Netflix or Spotify, there is no option to Engage or Probe with the results as users enter queries. Since the fields recorded in the abstract QAC log are derived based on the interaction model, the properties of the log obtained from two different systems can substantially vary. Finally, it is likely to be counter-productive to have an interaction model based on user actions or states that cannot be explicitly recorded or inferred from the logging mechanism in place.

The proposed interaction model follows a first-order Markov assumption. We acknowledge that higher-order models may in general be expected to give better approximations for the transition probabilities than a first-order model, and that the time spent in each state might also have an impact on the choice of next action. We have not pursued these possibilities, and leave them for future work. Another dimension of QAC systems that could change the interaction pattern is the criterion for matching \({{{\mathsf{P}}}}\) with the dictionary of strings to generate the candidate set. The completions offered by Bing have a prefix match with \({{{\mathsf{P}}}}\). Different matching functions might lead to quite different QAC interaction patterns. Previous studies have shown that the ranking quality can affect QAC usage (Hofmann et al. 2014). An abstract QAC log can still be used to compare QAC systems based on these criteria.

Limitations of the abstract QAC log approach The scope of an abstract QAC log is restricted to interaction level statistics, which rules out the possibility of exploring string-level characteristics such as statistics based on character ngrams or keystrokes, as have been reported in past studies using a real log (Mitra et al. 2014). Evaluation metrics such as Reciprocal Rank (RR), or characters saved, can be measured directly from this log format. However there are other measures such as diversity or similarity to the search context that can only be computed from actual strings. Understanding how user interactions vary for different classes of queries (Broder 2002; Rose and Levinson 2004) such as “navigational” or “informational” is another interesting aspect of QAC systems. For example, it might be possible to add a field to the abstract QAC log specification capturing such broad-brush categorizations, noting, of course, the need to be vigilant in regard to privacy, and the need for negotiation in that regard between the various stakeholders involved in the research.
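As an illustration of a metric that needs no strings, Reciprocal Rank can be computed from the clicked completion index alone. The sketch assumes that `clk_i` is a 1-based position in the completion list and that -1 marks the absence of a click; the indexing base is an assumption, since it is not stated in this section, and conversations without a click are scored as zero here by convention.

```python
from typing import Iterable


def reciprocal_rank(clk_i: int) -> float:
    """1 / rank of the clicked completion, or 0.0 when nothing was clicked."""
    return 1.0 / clk_i if clk_i >= 1 else 0.0


def mean_reciprocal_rank(clicked_indexes: Iterable[int]) -> float:
    """Average reciprocal rank over a collection of clk_i values."""
    values = [reciprocal_rank(i) for i in clicked_indexes]
    return sum(values) / len(values) if values else 0.0
```

If the log were 0-based instead, the rank would be `clk_i + 1`, which is why the indexing convention needs to be fixed in the log specification.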

6 Conclusion

We have proposed an interaction model for QAC systems that models the state of a QAC system and the set of user actions that can be performed in that state. Based on this state-based interaction model, a specification for an abstract QAC log was then derived. In order to demonstrate the effectiveness of the abstract QAC log approach, we conducted a range of experiments on a large-scale abstract QAC log obtained from Microsoft’s Bing search service, which we call Bing-Abs-QAC-2018. In our experiments, previous results on QAC systems—such as positional bias, mode of submission, and interaction probabilities with the length of partial query—can all be verified using this new log format. By obtaining transition probabilities from the interaction model using Bing-Abs-QAC-2018, we find that users often perform operations in a QAC environment other than appending characters to their partial query or choosing one of the completions. In particular, we highlight the existence of frequent deletions in the log, and show that runs of deletes are common on both mobile and desktop devices.

We anticipate that the observations from this study will influence how QAC systems are evaluated and designed. For instance, we can say that loading completions from the history when users initiate their conversations can be an effective design strategy, considering the high fraction of conversations that commence with an empty partial query. We see that conversations continue after users submit queries and in most cases, a continuation is carried out by appending characters to the end of the partial query. The presence of delete operations and delete chains indicates that, during evaluation, it is necessary to account for the effective number of keystrokes and not just character additions. From a design perspective, caching of the last few completions at the client side can handle deletions from the end of a partial query without additional requests needing to be sent to the server. When measuring QAC implementation efficiency, accounting for user interactions from the interaction model beyond character additions can provide more accurate evaluation strategies.
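The client-side caching idea mentioned above can be sketched as a small least-recently-used cache keyed by partial query. The class name, the `fetch` callback standing in for a server request, and the default capacity are hypothetical; this is one possible design, not a description of any deployed system.

```python
from collections import OrderedDict
from typing import Callable, List


class CompletionCache:
    """Keep completions for the most recently used partial queries."""

    def __init__(self, fetch: Callable[[str], List[str]], capacity: int = 8):
        self._fetch = fetch          # hypothetical call to the QAC server
        self._capacity = capacity
        self._entries: "OrderedDict[str, List[str]]" = OrderedDict()

    def completions(self, partial_query: str) -> List[str]:
        if partial_query in self._entries:
            # Cache hit (for example after a Pop): no server request needed.
            self._entries.move_to_end(partial_query)
            return self._entries[partial_query]
        result = self._fetch(partial_query)
        self._entries[partial_query] = result
        if len(self._entries) > self._capacity:
            self._entries.popitem(last=False)  # evict least recently used
        return result
```

With this design, typing "n", "ne", "new" and then deleting back to "ne" and "n" triggers only three server requests, since the two shorter prefixes are served from the cache.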

As a long-term goal, we also plan to make use of the abstract QAC log to explore issues to do with QAC system evaluation, including developing approaches that quantify the usefulness of different implementation options and modalities.

Notes

https://en.wikipedia.org/wiki/AOL_search_data_leak, accessed 2 December 2018.

https://eur-lex.europa.eu/eli/reg/2016/679/oj, accessed 2 December 2018.

https://www.theguardian.com/us-news/2015/dec/11/senator-ted-cruz-president-campaign-facebook-user-data, accessed 2 December 2018.

In the interests of disclosure, we note that at this time we have neither sought nor have permission for such sharing of the log that is discussed shortly.

https://googleblog.blogspot.com/2004/12/ive-got-suggestion.html, accessed 2 December 2018.

http://www.internetlivestats.com/google-search-statistics/, accessed 2 December 2018.

https://blog.google/products/search/our-latest-quality-improvements-search/, accessed 6 May 2019.

https://twitter.com/BingAds/status/898199009952546816, accessed 2 December 2018.

We note that Engage and Extend are tagged by comparing partial query with the previous completions during the process of generating Bing-Abs-QAC-2018. As a result, some of these tags in our log could be spurious.

References

Adar, E. (2007). User 4xxxxx9: Anonymizing query logs. In Proceedings WWW query log analysis workshop. http://www.cond.org/anonlogs.pdf. Accessed 4 June 2019.

Askitis, N., & Sinha, R. (2007). Hat-trie: A cache-conscious trie-based data structure for strings. In Proceedings Australasian computer science conference (pp. 97–105).

Askitis, N., & Zobel, J. (2010). Redesigning the string hash table, burst trie, and BST to exploit cache. ACM Journal of Experimental Algorithmics, 15, 1–7.

Bar-Yossef, Z., & Kraus, N. (2011). Context-sensitive query auto-completion. In Proceedings WWW (pp. 107–116).

Bast, H., & Weber, I. (2006a). Type less, find more: Fast autocompletion search with a succinct index. In Proceedings SIGIR (pp. 364–371).

Bast, H., & Weber, I. (2006b). When you’re lost for words: Faceted search with autocompletion. In Proceedings SIGIR workshop. Faceted Search (pp. 31–35).

Bast, H., & Weber, I. (2007). The CompleteSearch engine: Interactive, efficient, and towards IR & DB integration. In Proceedings CIDR (pp. 88–95).

Bast, H., Majumdar, D., & Weber, I. (2007). Efficient interactive query expansion with complete search. In Proceedings CIKM (pp. 857–860).

Bhatia, S., Majumdar, D., & Mitra, P. (2011). Query suggestions in the absence of query logs. In Proceedings SIGIR (pp. 795–804).

Broder, A. (2002). A taxonomy of web search. SIGIR Forum, 36(2), 3–10.

Cai, F., & de Rijke, M. (2016). A survey of query auto completion in information retrieval. Foundations and Trends in Information Retrieval, 10(4), 273–363.

Cai, F., Liang, S., & de Rijke, M. (2014). Time-sensitive personalized query auto-completion. In Proceedings CIKM (pp. 1599–1608).

Cai, F., Reinanda, R., & de Rijke, M. (2016). Diversifying query auto-completion. ACM Transactions on Information Systems, 34(4), 25:1–25:33.

Carpineto, C., & Romano, G. (2012). A survey of automatic query expansion in information retrieval. ACM Computing Surveys, 44(1), 1:1–1:50.

Chaudhuri, S., & Kaushik, R. (2009). Extending autocompletion to tolerate errors. In Proceedings SIGMOD (pp. 707–718).

Cooper, A. (2008). A survey of query log privacy-enhancing techniques from a policy perspective. ACM Transactions on the Web, 2(4), 19:1–19:27.

Findlater, L., Wobbrock, J. O., & Wigdor, D. J. (2011). Typing on flat glass: Examining ten-finger expert typing patterns on touch surfaces. In Proceedings CHI (pp. 2453–2462).

Garcia-Gathright, J., St Thomas, B., Hosey, C., Nazari, Z., & Diaz, F. (2018). Understanding and evaluating user satisfaction with music discovery. In Proceedings SIGIR (pp. 55–64).

Gotz, M., Machanavajjhala, A., Wang, G., Xiao, X., & Gehrke, J. (2012). Publishing search logs: A comparative study of privacy guarantees. IEEE Transactions on Knowledge and Data Engineering, 24(3), 520–532.

Hawking, D., & Billerbeck, B. (2017). Efficient in-memory, list-based text inversion. In Proceedings Australasian document computing symposium (pp. 5:1–5:8).

Heinz, S., Zobel, J., & Williams, H. (2002). Burst tries: A fast, efficient data structure for string keys. ACM Transactions on Information Systems, 20(2), 192–223.

Henze, N., Rukzio, E., & Boll, S. (2012). Observational and experimental investigation of typing behaviour using virtual keyboards for mobile devices. In Proceedings CHI (pp. 2659–2668).

Ho Kim, J., Aulck, L., Thamsuwan, O., Bartha, M. C., & Johnson, P. W. (2014). The effect of key size of touch screen virtual keyboards on productivity, usability, and typing biomechanics. Human Factors, 56(7), 1235–1248.

Hofmann, K., Mitra, B., Radlinski, F., & Shokouhi, M. (2014). An eye-tracking study of user interactions with query auto completion. In Proceedings CIKM (pp. 549–558).

Hoggan, E., Brewster, S. A., & Johnston, J. (2008). Investigating the effectiveness of tactile feedback for mobile touchscreens. In Proceedings CHI (pp. 1573–1582).

Hong, Y., He, X., Vaidya, J., Adam, N., & Atluri, V. (2009). Effective anonymization of query logs. In Proceedings CIKM (pp. 1465–1468).

Hsu, B. J. P., & Ottaviano, G. (2013). Space-efficient data structures for top-k completion. In Proceedings WWW (pp. 583–594).

Jansen, B. J., & Spink, A. (2006). How are we searching the World Wide Web? A comparison of nine search engine transaction logs. Information Processing & Management, 42(1), 248–263.

Jansen, B. J., Spink, A., & Saracevic, T. (2000). Real life, real users, and real needs: A study and analysis of user queries on the web. Information Processing & Management, 36(2), 207–227.

Ji, S., Li, G., Li, C., & Feng, J. (2009). Efficient interactive fuzzy keyword search. In Proceedings WWW (pp. 371–380).

Jiang, J., Ke, Y., Chien, P., & Cheng, P. (2014). Learning user reformulation behavior for query auto-completion. In Proceedings SIGIR (pp. 445–454).

Jones, R., Kumar, R., Pang, B., & Tomkins, A. (2007). “I know what you did last summer”: Query logs and user privacy. In Proceedings CIKM (pp. 909–914).

Kharitonov, E., Macdonald, C., Serdyukov, P., & Ounis, I. (2013). User model-based metrics for offline query suggestion evaluation. In Proceedings SIGIR (pp. 633–642).

Krishnan, U., Moffat, A., & Zobel, J. (2017). A taxonomy of query auto completion modes. In Proceedings Australasian document computing symposium (pp. 6:1–6:8).

Kumar, R., Novak, J., Pang, B., & Tomkins, A. (2007). On anonymizing query logs via token-based hashing. In Proceedings WWW (pp. 629–638).

Li, G., Ji, S., Li, C., & Feng, J. (2009). Efficient type-ahead search on relational data: A tastier approach. In Proceedings SIGMOD (pp. 695–706).

Li, G., Ji, S., Li, C., & Feng, J. (2011). Efficient fuzzy full-text type-ahead search. The VLDB Journal, 20(4), 617–640.

Li, L., Deng, H., Dong, A., Chang, Y., Zha, H., & Baeza-Yates, R. (2015). Analyzing user’s sequential behavior in query auto-completion via Markov processes. In Proceedings SIGIR (pp. 123–132).

Li, Y., Dong, A., Wang, H., Deng, H., Chang, Y., & Zhai, C. (2014). A two-dimensional click model for query auto-completion. In Proceedings SIGIR (pp. 455–464).

Maxwell, D., Bailey, P., & Hawking, D. (2017). Large-scale generative query autocompletion. In Proceedings Australasian document computing symposium (pp. 9:1–9:8).

Mei, Q., Zhou, D., & Church, K. (2008). Query suggestion using hitting time. In Proceedings CIKM (pp. 469–478).

Mitra, B., Shokouhi, M., Radlinski, F., & Hofmann, K. (2014). On user interactions with query auto-completion. In Proceedings SIGIR (pp. 1055–1058).

Rose, D. E., & Levinson, D. (2004). Understanding user goals in web search. In Proceedings WWW (pp. 13–19).

Ruthven, I. (2003). Re-examining the potential effectiveness of interactive query expansion. In Proceedings SIGIR (pp. 213–220).

Samarati, P. (2001). Protecting respondents' identities in microdata release. IEEE Transactions on Knowledge and Data Engineering, 13(6), 1010–1027.

Shokouhi, M. (2013). Learning to personalize query auto-completion. In Proceedings SIGIR (pp. 103–112).

Smith, C. L., Gwizdka, J., & Feild, H. (2016). Exploring the use of query auto completion: Search behavior and query entry profiles. In Proceedings conference on human information interaction & retrieval (pp. 101–110).

Smith, C. L., Gwizdka, J., & Feild, H. (2017). The use of query auto-completion over the course of search sessions with multifaceted information needs. Information Processing & Management, 53(5), 1139–1155.

Xiao, C., Qin, J., Wang, W., Ishikawa, Y., Tsuda, K., & Sadakane, K. (2013). Efficient error-tolerant query autocompletion. Proceedings of the VLDB Endowment, 6(6), 373–384.

Xiong, L., & Agichtein, E. (2007). Towards privacy-preserving query log publishing. In Proceedings WWW query log analysis workshop. http://www.mathcs.emory.edu/~lxiong/research/pub/xiong07towards.pdf. Accessed 4 June 2019.

Zhong, R., Fan, J., Li, G., Tan, K., & Zhou, L. (2012). Location-aware instant search. In Proceedings CIKM (pp. 385–394).

Acknowledgements

We are grateful to Microsoft for providing the abstracted Bing logs: in particular, Stephanie Drenchen for helping generate them; and Peter Bailey for working through the legal requirements and providing feedback on a draft of this paper.

This work was supported by the Microsoft Research Centre for Social Natural User Interfaces (SocialNUI) at The University of Melbourne.

Cite this article

Krishnan, U., Billerbeck, B., Moffat, A. et al. Abstraction of query auto completion logs for anonymity-preserving analysis. Inf Retrieval J 22, 499–524 (2019). https://doi.org/10.1007/s10791-019-09359-8
