Abstract

Medical data mining is actively pursued in computer science and statistical research but not in medical practice. The reasons for this lie in the difficulties of handling and statistically analyzing medical data. We have developed a system that allows practitioners in the field to analyze their data interactively without the assistance of statisticians or data mining experts. In this paper we introduce data mining of medical data and show how it can be achieved for survival data. We demonstrate how to solve common problems of interactive survival analysis by presenting the Online Clinical Data Mining (OCDM) system. The main focus is on similarity based queries, a new method for selecting similar cases based on their covariables and the influence of these covariables on survival.

1 Introduction

During the course of everyday medical practice, patient specific data is stored in numerous databases. These range from accounting systems that focus on the storage of financially relevant information to specific systems like those used in pathology or radiology. Beyond their targeted use in the corresponding departments there is neither further integration nor an overall statistical exploration of the data. There are many reasons for this. For example, the heterogeneous designs and structures of the databases hinder an easy integration of the sources. A robust follow-up of progress data is hard to achieve. Last but not least, current statistical methods and models are, even with the help of modern statistical software, difficult to implement, and their results are hard to interpret. These methods are therefore used almost exclusively in clinical studies, where the data can be modeled to suit the needs of the expert. They are seldom used in interactive software, where data preprocessing and validation would be the duty of the practitioner.

The University of Stuttgart (ISA) and the Robert-Bosch Hospital (Pathology) cooperatively developed a data management and analysis system for clinical data mining named Online Clinical Data Mining (OCDM), which allows physicians to analyze and interpret their data interactively and statistically. The system is designed so that all steps, from Extract, Transform and Load (ETL) through data management to data analysis, can be done automatically. Besides mere counting and aggregation of data, as known from most OLAP systems (Footnote 1), advanced data mining methods like survival analysis or risk score assessment are available within the system. This way the practitioner can explore the data without the assistance of a statistician.

OCDM was conceived as a knowledge discovery system with a focus on interactive survival analysis. This means that the survival data can be loaded into the system and then be analyzed interactively by the user. The main objective is to keep the demands on the user as low as possible. Most current research in clinical data mining focuses on new statistical methods or faster implementations (Mullins et al. 2006; Harkema et al. 2005; Ghannad-Rezaie et al. 2006; Brameier and Banzhaf 2001). Almost all of these projects require a large and very heterogeneous group of experts. From biomedical researchers to statisticians and computer scientists, everybody has to contribute their knowledge for the project to be successful. The OCDM system was developed solely with the physician in mind. He or she should be the one in charge and should have all the tools at hand to analyze the patients' survival data independently.

One of the goals of medical statistics and also of medical data mining is to have a case based statistic which allows the prediction of survival for an individual patient. The difficulty becomes evident when one realizes that a physician wants statistical information, like survival estimates, for a very specific patient. As this is not directly possible, he or she looks for cases that are as similar as possible to the one under consideration. For breast cancer there are many millions of different possible cancer types but usually only historical data of a few thousand patients. In such a situation it becomes almost impossible to find many identical cases, which would be required for a sufficiently reliable statistical analysis. To overcome this problem we have developed and implemented a procedure built on a regression based distance measure. Our aim was to predict the survival of an individual by looking at similar patients. In this form, case based reasoning (CBR) is, to our knowledge, unprecedented for medical data. The OCDM system is therefore, to our knowledge, the only system that actually provides personalized statistics for breast cancer patients.

In the following section we first present other tools for survival analysis and how they relate to the OCDM system. We then describe the basic structure of modern knowledge discovery or data mining (Footnote 2) systems and place OCDM in that context. In Section 4 we focus on the regression based distance measure, presenting its basic ideas and how they were implemented.

2 Related work

Software for interactive survival analysis is rather rare. There are of course a large number of statistical software packages that support survival analysis, like R (R Development Core Team 2008), SAS (http://www.sas.com/) or SPSS (http://www.spss.com/), but these are more or less statistics development environments that allow users to create their own analyses. Employing these packages successfully requires a lot of hands-on work, which usually prevents practitioners from using them.

The Finprog-Study (http://www.finprog.org/) as described by Lundin et al. (2003) and Black (2003) is a truly interactive survival analysis system. It allows 2,930 breast cancer cases diagnosed from 1991 to 1992 in Finland to be analyzed statistically through a web front end. The software is only capable of identifying identical cases, so for rare patient profiles only very few cases, if any, can be found. In Section 4 we present a method to circumvent this problem by using similarity based queries.

McAullay et al. (2005) present a health data mining tool for adverse drug reactions. Similar to the OCDM system, it is designed for ease of use and focuses on a user centered approach. A more general software prototype was developed by Houston et al. (1999). It is capable of mining different, including unstructured, data sources. Inokuchi et al. (2007) describe their approach to a medical data mining system. They focus on the structuring of the data for a hierarchical analysis. Ölund et al. (2007) present a system for bio banking that handles many of the problems of bio-medical data mining.

Besides these system centered research works there is a large number of papers dealing with problems other than the actual implementation of survival analysis and medical data mining. Delen et al. (2005) for example present research on survival prediction methods, Ghannad-Rezaie et al. (2006) describe a particle swarm optimization for medical data mining and Abe et al. (2007) show how to mine medical time series—interesting to note is that they also explicitly mention the difficulties of mining medical data without several domain experts working together.

Regression based distance measures in survival analysis are usually considered in terms of distances between sets of data that can be easily described by the p-value. Meinicke et al. (2006) use such a measure to visualize codon usage data and Radespiel-Tröger et al. (2003) use one to find the splitting point of trees. So far Dippon et al. (2002) are the only ones we know of who employ regression information to improve on a given distance measure for survival data. The method presented later on improves on this by using a semi-parametric model.

3 Knowledge discovery in databases

The search for new and yet unknown information typically falls into the domain of data mining or more broadly put knowledge discovery in database systems. We will therefore begin by introducing the major concepts of these systems and show how they relate to other more commonly used concepts such as information systems and data warehouses.

Data mining is defined by Han and Kamber (2001) as the extraction or mining of knowledge from large amounts of data. It consists of seven steps: (i) data cleaning, (ii) data integration, (iii) data selection, (iv) data transformation, (v) data analysis, (vi) data evaluation and (vii) knowledge presentation. All these steps usually require or involve user interaction. Data cleaning and integration, for example, require hands-on work by IT staff members, whereas data analysis and evaluation involve extensive interaction by medical practitioners. For a KDD system to be actually used in everyday business it is important that most of the interaction occurs between the system and the person working with the data. Situations in which technical expert knowledge is required should be reduced to a minimum. One of the major objectives when developing the OCDM system was to reduce user involvement to those aspects that are of interest to a physician.

Information Systems, Data Mining and Data Warehouses

During the process of knowledge discovery a user interacts with three different types of systems (information, data mining and data warehouse systems). These form the basis of every such process. Only their tight interaction allows for a successful approach to user centered data mining.

Knowledge discovery always begins at the information system (IS). Here the data is entered and managed by the user. It serves as the major interface between data and users. As such the information system incorporates all relevant user interaction components, from masks for the input of data to the visualization of analysis results. The operational data is kept inside the information system. Because this data represents the current state of the organization (in our case the hospital departments with all their patients and resources) it is highly fluid and changes as the organization, its people and its resources change. Hospital information systems and ancillary systems like laboratory or radiology management systems are examples of such systems in hospitals.

Whereas information systems are an integral part of the everyday data analysis process, data mining systems and data warehouses are often used independently. The focus of data mining is, in general, to detect new information and uncover unknown relations within the data. Here a data warehouse is helpful. Its objective is to store data from different sources in such a way that it is easily accessible for further analysis. Because efficiency is a major aspect of a data warehouse, the storage and management of precompiled query results and the provision of optimized data structures for fast processing of standard analyses are mandatory. The (OLAP) cube is a popular example of such a data structure. All this makes knowledge discovery easier but is not necessary to its success (Footnote 3).

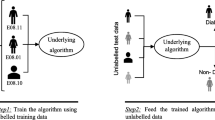

To allow for a user centered approach the three pieces of software must be integrated and developed in such a way that the information system and the data mining system can be used by medical practitioners without the need for assistance of the IT personnel. The data warehouse itself is hidden from the end user in such a way that there is never a direct interaction of the user with any component of this system. Data cleaning, integration, selection and transformation are prepared beforehand and triggered automatically as new data arrives. The whole process of knowledge discovery is visualized in Fig. 1.

3.1 Medical data

So far we have talked only about information systems and data mining, leaving one large aspect of this paper aside. Medical data is, as is discussed in many research papers (Cios and William 2002; Eggebraaten et al. 2007; Inokuchi et al. 2007; Ölund et al. 2007; Dyreson et al. 1994), different from data generated by other systems. For example, there are frequently changing attributes, a high degree of sparsity and a great deal of heterogeneity in data formats. All this makes structuring the data difficult. As for analysis, non-summarizable measurements and a large number of dimensions pose problems. Pedersen and Jensen (1998, 1999) and Cios and William (2002) discuss these problems in great detail.

Survival data can be seen as a special view on medical data. It highlights patient information and focuses on when a certain disease began and when it ended. These two dates are usually given by diagnosis and death. The main task is to find out how this time interval is related to the patient information and how one can establish a good estimate of how it evolves for a given patient. In Section 4.5 survival analysis and related aspects such as censoring will be discussed in more detail.

4 Data mining

The search for new evidence and the development of hypotheses was the main reason for developing the OCDM system. This can be achieved on different levels. For example, there are general queries of the kind known from database languages like SQL. These return record sets based on well defined rules. Besides that, knowledge can also be gained from aggregated data, which gives information about a whole set of records. The highest level of sophistication nowadays is reached by non- or semi-parametric statistical methods, which can be employed to detect characteristics that are common to all elements in a set (Cherkassky 2007).

In the case of survival analysis it is often of great interest to see how the disease of patients with similar properties evolved. This approach, known as case based reasoning (Russell and Norvig 2003), allows a physician to gain insight by just looking at similar cases. The difficulty lies in choosing cases that are similar enough. Especially for breast cancer, the number of relevant classifications and measurements is so high that it is extremely rare to find identical cases (cf. curse of dimensionality, Cherkassky 2007). To make things worse, common similarity measures like the Euclidean distance or variants thereof do not provide good results because they treat each measurement as if it had the same influence as all the others. In the following we introduce a similarity measure, based on the work of Dippon et al. (2002), that is capable of overcoming these difficulties. To this end we begin with general queries and extend that idea towards similarity based queries. After that we introduce the Cox regression based similarity queries we implemented in OCDM.

4.1 General queries

Some questions in clinical practice can be answered by simple statistics. Common ones are for example how many patients had a certain diagnosis or how many of those received a special treatment. These questions are often related to quality assurance or accounting and can be answered by more or less complex database queries. As described by Eggebraaten et al. (2007), the EAV (Entity-Attribute-Value) data model is especially popular for medical database systems. There its flexibility is of great help but its complexity in handling also poses some difficulties. Because of this we also implemented a number of views, besides ad hoc queries, which can be used to answer questions occurring regularly.
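The idea of hiding an EAV layout behind a view can be illustrated with a small sketch. It uses Python's built-in `sqlite3` module purely for demonstration; the table `observation`, the view `patient_flat` and the attribute names `diagnosis` and `treatment` are hypothetical and are not taken from the OCDM schema.

```python
import sqlite3

def build_demo_db():
    """Create an in-memory EAV table plus a view that flattens it."""
    con = sqlite3.connect(":memory:")
    con.executescript("""
        -- EAV layout: one row per (patient, attribute, value) triple.
        CREATE TABLE observation (
            patient_id INTEGER NOT NULL,
            attribute  TEXT    NOT NULL,
            value      TEXT
        );
        -- The view presents one row per patient, so that analysis code
        -- never has to touch the EAV table directly.
        CREATE VIEW patient_flat AS
        SELECT patient_id,
               MAX(CASE WHEN attribute = 'diagnosis' THEN value END) AS diagnosis,
               MAX(CASE WHEN attribute = 'treatment' THEN value END) AS treatment
        FROM observation
        GROUP BY patient_id;
    """)
    # Made-up demo rows; patient 3 has no recorded treatment.
    con.executemany(
        "INSERT INTO observation VALUES (?, ?, ?)",
        [
            (1, "diagnosis", "C50"), (1, "treatment", "surgery"),
            (2, "diagnosis", "C50"), (2, "treatment", "chemotherapy"),
            (3, "diagnosis", "C34"),
        ],
    )
    return con

def count_by_diagnosis(con, diagnosis):
    """Answer 'how many patients had a certain diagnosis' via the view."""
    row = con.execute(
        "SELECT COUNT(*) FROM patient_flat WHERE diagnosis = ?", (diagnosis,)
    ).fetchone()
    return row[0]
```

Because the counting query only refers to the view, the underlying EAV table could be restructured without changing `count_by_diagnosis`, as long as the view keeps its column names.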

Date (2002) states that the use of database views, as opposed to direct data access, provides a number of advantages. Especially important ones are security and reusability. Because the architecture of the system is divided into two separate pieces of software, access to one part can be restricted by the use of views to only anonymized virtual tables. Reusability can be achieved because the view table structure is independent of the underlying database structure. The database can thus be changed and adapted to new conditions without the need to redevelop the analysis component.

The analysis of survival data of a certain patient, as we will describe later on, is based on the survival times of other patients who exhibit similar characteristics. These can be similar classifications or measurements. General queries can only retrieve identical measurements, as there is no information or knowledge about how a difference in one variable should be weighted as opposed to another (Figs. 2 and 3).

4.2 Similarity based queries

Similarity based queries introduce a weighting scheme that is capable of evaluating the influence of a set of factors on the survival time. Two cases with identical values in the set of factors will have identical expected survival times. Two cases which differ slightly in one factor will have different expected survival times, depending on the difference in the considered factor and on the influence the factor has on the survival time. Thus the functional impact of a vector of factors on the expected survival time, or on the probability of survival up to a time t, induces a distance measure on the space spanned by the factors. Since the functional relationship is usually unknown, it has to be estimated from training data. Below we sketch two methods. The first uses a kernel estimate to estimate the expected survival time; the second uses Cox regression to estimate the hazard function, from which survival probabilities up to a time point can be computed.

Equation 1 shows such a distance measure for two cases represented by vectors x and y consisting of the values of the factors as components:

where \(d_j\) is a distance defined on the j-th components. This distance depends on the type of the measurement scale of the component. The size of \(\alpha_j\) reflects the importance of a change in component j on the outcome, for example expected survival.

It is crucial to define the distances \(d_j\) such that they allow for missing values, which are to be expected quite frequently in high dimensional clinical data. For example, if the j-th component is of numeric or ordinal type, one may choose

where \(z_k^j,z_l^j\) run over the bounded set of admissible values of component j. If component j is of dichotomous or polychotomous type, we set

Thus, the distance given in Eq. 1 can operate on data vectors with components defined on different measurement scales such as numeric, ordinal or nominal scales. It was used in Dippon et al. (2002) for a case based reasoning approach applied on breast cancer data.
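A distance of this kind can be sketched in a few lines of Python. The sketch rests on several assumptions that are not confirmed by the text above: the component distances are combined as a plain weighted sum, numeric differences are normalised by the maximal admissible difference, nominal components use a 0/1 distance, and a missing value is treated as maximal distance 1. All function and key names are hypothetical.

```python
MISSING = None  # marker used here for a missing value

def numeric_distance(a, b, max_range):
    """Absolute difference scaled by the maximal admissible difference.

    Assumption: a missing value yields the maximal distance 1.0.
    """
    if a is MISSING or b is MISSING:
        return 1.0
    return abs(a - b) / max_range

def nominal_distance(a, b):
    """0 for identical levels, 1 otherwise (1.0 if a value is missing)."""
    if a is MISSING or b is MISSING:
        return 1.0
    return 0.0 if a == b else 1.0

def weighted_distance(x, y, schema):
    """Weighted sum of the component distances of two cases x and y.

    schema is a list with one dict per component:
    {'kind': 'numeric', 'alpha': ..., 'max_range': ...} or
    {'kind': 'nominal', 'alpha': ...}.
    """
    total = 0.0
    for xj, yj, spec in zip(x, y, schema):
        if spec["kind"] == "numeric":
            dj = numeric_distance(xj, yj, spec["max_range"])
        else:
            dj = nominal_distance(xj, yj)
        total += spec["alpha"] * dj
    return total
```

For two cases that differ by half the admissible range in a numeric component with weight 2.0 and also differ in a nominal component with weight 0.5, this sketch gives 2.0 · 0.5 + 0.5 · 1 = 1.5. Components of mixed measurement scales are handled simply by dispatching on the declared type.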

The distances in Eqs. 2 and 3 can be refined in order to account for the possibly different relevance of certain levels. For example, if j is an ordinal or nominal factor with admissible values \(z_0^j,\ldots,z_{m^j}^j\), we set \(\alpha_j = 1\) and choose certain real numbers \(\beta^j_0=0,\beta^j_1,\ldots,\beta^j_{m^j}\), and set

In the following two subsections we present two methods to determine sensible values for the \(\alpha_j\)'s and \(\beta_k^j\)'s.

4.3 Distances induced by expected survival regression

The main idea of Dippon et al. (2002) for determining the weights \(\alpha_j\) in Eq. 1 is based on the observation that the bandwidth of the Nadaraya-Watson kernel regression estimate (Footnote 4) is large if the regression function is flat, and small if the regression function is far from being constant, which means that the factor has a strong influence on the outcome. For a new case with covariate vector x

estimates the expected survival time \(m(x) = E(T \mid X = x)\) for a given case x based on a training set of historical data \(\{(X_1,Z_1),\ldots,(X_n,Z_n)\}\) with possibly right censored survival times \(Z_i\). Here \(\delta_i\) equals one if the survival time is uncensored, and zero otherwise. Furthermore, \(G_n(t)\) estimates the survival function of the censoring time (see Györfi et al. 2002). In Eq. 5 the weight vector α is chosen by minimizing a related, appropriately weighted empirical \(L_2\)-error measure, for instance via k-fold cross validation. However, such a procedure is computationally expensive and the rate of convergence is rather slow (see Ahmad and Ran 2004).
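To make the role of the quantities \(\delta_i\), \(Z_i\), \(G_n\) and α more concrete, the following Python sketch shows one common form of a censoring-corrected Nadaraya-Watson estimate. It is only an illustration under stated assumptions: the exact form of Eq. 5 is not reproduced here, all covariates are taken to be numeric, a Gaussian-type kernel is assumed, and the function name and signature are hypothetical.

```python
import math

def nw_survival_estimate(x, X, Z, delta, G, alpha):
    """Kernel-weighted mean of censoring-corrected survival times.

    x      covariate vector of the new case
    X      covariate vectors of the historical cases
    Z      observed, possibly right-censored, survival times
    delta  1 if the corresponding time is uncensored, 0 otherwise
    G      callable estimating the survival function of the censoring time
    alpha  non-negative weights per component (large weight = small bandwidth)
    """
    num = 0.0
    den = 0.0
    for xi, zi, di in zip(X, Z, delta):
        # Weighted squared distance between the new case and case i.
        dist2 = sum(a * (u - v) ** 2 for a, u, v in zip(alpha, x, xi))
        k = math.exp(-dist2)  # assumed Gaussian-type kernel
        # Censored cases contribute 0; uncensored ones are weighted by 1/G.
        y = zi / G(zi) if di else 0.0
        num += k * y
        den += k
    return num / den if den > 0 else float("nan")
```

With a weight of zero the corresponding component has no influence and, without censoring, the estimate reduces to the plain mean of the survival times. With a large weight only cases that nearly agree in that component contribute, which mirrors the bandwidth observation above.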

4.4 Distances induced by Cox proportional hazard regression

To circumvent the difficulties in computing the optimal bandwidths for a fully non-parametric model one can fall back on a semi-parametric model such as the Cox proportional hazard model. Clearly, this imposes some restrictions on the nature of the data. Good references on the subject are Kleinbaum and Klein (2005) and of course Cox (1972).

In the Cox proportional hazard model it is assumed that the hazard function h is given by the product of the baseline hazard function \(h_0\), which is assumed to depend on the time t only, and the exponential of the inner product of the weight vector β and the covariate vector x, both not depending on t:

\[ h(t \mid x) = h_0(t)\,\exp(\beta' x) \]

In the inner product \(\beta'x=\sum_j \beta^jx^j\) the coefficients \(\beta^j\), which may be negative, rate the influence of changes in the covariates on the hazard function, and thus on the survival probability. To handle an ordinal or a nominal factor with m levels, m − 1 zero-one valued dummy variables are often introduced instead. Usually the first level of the factor is considered the baseline value, for which all related dummy variables are set to zero. Each other level is coded by setting one dummy variable to one and all others to zero. It is therefore reasonable to compare two levels of one factor by the difference of their corresponding weights \(\beta_k^j\) and \(\beta_l^j\). This observation motivated the definition of the distance for an ordinal or nominal factor in Eq. 4. If factor j is of numerical type, the corresponding \(\alpha_j\) is chosen to be the absolute value of \(\beta^j\). Thus it is sufficient to calculate β, which can be achieved by a Newton-Raphson optimization procedure (see Cox 1972).
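How fitted Cox coefficients translate into distances can be sketched as follows. The coefficient values in the usage note are made up for illustration, the function names are hypothetical, and the fitting step itself (the Newton-Raphson optimization) is not shown.

```python
import math

def level_distance(beta_levels, k, l):
    """Distance between levels k and l of a dummy-coded factor.

    beta_levels[0] belongs to the baseline level and is 0 by convention;
    the distance is the absolute difference of the two coefficients.
    """
    return abs(beta_levels[k] - beta_levels[l])

def numeric_weight(beta_j):
    """Weight alpha_j of a numeric covariate: the absolute value of beta_j."""
    return abs(beta_j)

def hazard_ratio(beta, x, y):
    """exp(beta'(x - y)): ratio of the hazards of two cases x and y.

    The baseline hazard cancels, so only the coefficients are needed.
    """
    return math.exp(sum(b * (u - v) for b, u, v in zip(beta, x, y)))
```

With hypothetical coefficients `[0.0, 0.4, 1.1]` for a three-level factor, levels 1 and 2 are at distance of about 0.7, while each is at distance 0.4 and 1.1 from the baseline. Levels whose coefficients are close therefore count as similar, regardless of how they are labelled.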

The following query demonstrates the use of the distance measure to retrieve the first 20 most similar cases from the database.

The function getDistance(x,y) implements the measure described in Eq. 1. It is developed as a Java stored procedure that determines the distance between each pair of covariates \(x^j\) and \(y^j\) of two given cases x and y. The distance \(d_\alpha\) is obtained by distinguishing between the different types of variables, which are described by the Java objects of the variables, and applying the measures in Eqs. 2, 3 and 4. The maximum differences can be calculated by precompiled SQL statements.
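The general shape of such a query can be illustrated with a small, self-contained sketch. It is not the OCDM query: it uses Python's `sqlite3` module with a Python function registered under the name `getDistance` in place of the Java stored procedure, and the table `cases`, its columns `age` and `grade`, the age range and the weights are all hypothetical.

```python
import sqlite3

def get_distance(age_x, grade_x, age_y, grade_y):
    """Toy stand-in for getDistance: weighted sum of two component distances."""
    d_age = abs(age_x - age_y) / 60.0            # assumed age range of 60 years
    d_grade = 0.0 if grade_x == grade_y else 1.0
    return 0.3 * d_age + 0.7 * d_grade           # hypothetical weights

def demo_connection():
    """In-memory database with a few made-up cases and the registered function."""
    con = sqlite3.connect(":memory:")
    con.create_function("getDistance", 4, get_distance)
    con.execute("CREATE TABLE cases (id INTEGER PRIMARY KEY, age REAL, grade TEXT)")
    con.executemany(
        "INSERT INTO cases VALUES (?, ?, ?)",
        [(1, 50.0, "G2"), (2, 70.0, "G2"), (3, 52.0, "G3"), (4, 51.0, "G2")],
    )
    return con

def most_similar(con, age, grade, limit=20):
    """Return the ids of the `limit` cases closest to the query case."""
    rows = con.execute(
        "SELECT id FROM cases "
        "ORDER BY getDistance(?, ?, age, grade), id LIMIT ?",
        (age, grade, limit),
    ).fetchall()
    return [r[0] for r in rows]
```

The essential pattern is ordering by the distance to the query case and cutting the result off after the desired number of rows; the returned set can then be passed on to further analysis.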

4.5 Survival analysis

In survival analysis the questions asked concern the amount of time that elapses until an event occurs. In the case of breast cancer this event is often death or a recurrence of the disease.

Censored Data

When dealing with time-to-event data, there are always a number of patients who are still alive and for whom the correct time to event, e.g. death, is not known. If either the initial date (diagnosis) or the date of the event (death) is not known, the time interval cannot be calculated correctly. This incomplete information is called censoring. It makes the estimation of the time to death more difficult. In our data many survival times are right-censored, which means that the interval has an unknown end. Left-censoring would mean that the correct date of the beginning, in our case the time of diagnosis, is not known.

Besides patients being still alive there is also another form of right-censoring: premature death from an unrelated or unidentified cause. Examples are car accidents or misclassified events, as discussed in detail in Hoover and He (1994). Survival analysis software should be able to handle these cases.
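In practice, right-censoring is usually encoded as a pair of observed time and event indicator. The following Python sketch shows one way to derive such a pair from dates; the function name and the use of the last follow-up date as censoring time are assumptions made for illustration.

```python
from datetime import date

def observation(diagnosis, death, last_follow_up):
    """Return (time_in_days, event) for one patient.

    event is 1 if death was observed and 0 if the survival time is
    right-censored at the last follow-up date.
    """
    if death is not None:
        return (death - diagnosis).days, 1
    return (last_follow_up - diagnosis).days, 0
```

A patient diagnosed on 1 January 2000 and last seen alive on 1 January 2001 would thus enter the analysis as `(366, 0)`: it is known that the patient survived at least 366 days, but not how much longer.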

Kaplan-Meier Estimator

The Kaplan-Meier (KM) product limit estimator for survival times is a commonly used non-parametric method to estimate and plot survival curves. It is based on the observation that the probability \(S(t_j)\) of surviving the time interval \([0,t_j]\) is equal to the product of the probability of still being alive after \(t_{j-1}\) and the conditional probability of surviving \(t_j\) given that the patient is still alive beyond \(t_{j-1}\):

\[ S(t_j) = S(t_{j-1})\, P(T > t_j \mid T > t_{j-1}) \]

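with ordered time points \(0 < t_1 < \ldots < t_n\) at which deaths occurred in the given data set. For \(t_0 := 0\) we assume \(S(t_0) = 1\). The probabilities \(S(t_j)\) can be estimated by the product of the relative frequencies of patients alive just after \(t_i\) given the number of patients alive just before \(t_i\):

\[ \hat S(t_j) = \prod_{i=1}^{j} \frac{s_i}{n_i} \]

where \(n_i\) denotes the number of patients alive just before \(t_i\) and \(s_i\) the number of those still alive just after \(t_i\).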

The KM estimation is independent of the type of query used to select the data. This means that one can formulate a query that includes further information to collect the relevant cases and use the result set as input for the Kaplan-Meier estimator. This way it is also possible to use similarity based queries as described in Section 4.2. The advantage is that even if there is only a small number of cases with identical values in the specified set of covariables one can still get estimation results based on similar cases. Especially for breast cancer this is an interesting feature, because the amount of relevant information needed to determine such a case is rather large, so that identical cases are often rare, usually too rare to calculate a meaningful survival time estimate (cf. the curse of dimensionality, Cherkassky 2007).
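The product limit estimate described above can be computed directly from a list of observed times and event indicators, whichever query produced them. The following Python sketch is a minimal illustration; the function name and the convention that patients censored at a death time still count as at risk at that time are assumptions of this sketch.

```python
def kaplan_meier(times, events):
    """Return [(t_j, S(t_j))] for each distinct time at which a death occurred.

    times   observed times (death or censoring)
    events  1 if death was observed, 0 if the time is right-censored
    """
    at_risk = len(times)   # patients alive just before the current time
    surv = 1.0             # S(t_0) = 1
    curve = []
    data = sorted(zip(times, events))
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = 0
        removed = 0
        # Group all observations that share the same time t.
        while i < len(data) and data[i][0] == t:
            deaths += data[i][1]
            removed += 1
            i += 1
        if deaths > 0:
            # Fraction of the patients at risk who are alive just after t.
            surv *= (at_risk - deaths) / at_risk
            curve.append((t, surv))
        # Deaths and censored cases both leave the risk set after t.
        at_risk -= removed
    return curve
```

For the times `[1, 2, 2, 3, 4]` with events `[1, 1, 0, 0, 1]`, the estimate drops to 4/5 at time 1, to 4/5 · 3/4 at time 2 and to zero at time 4, while the censored observations only reduce the number of patients at risk.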

Distribution of Covariables

Knowing the survival probability of a patient is important, but for further research it is also necessary to get more information on how facts and measurements, like age or the size of a tumor, are distributed among similar cases. If two subgroups of patients differ in their estimated survival, it is of interest whether this is associated with differences in the distribution of one or more of the related covariables. OCDM makes it possible to investigate this question by showing appropriate statistical charts and executing statistical hypothesis tests.

4.6 Outlook

The OCDM system was developed to suit the needs of physicians, medical researchers and practitioners. This goal was largely achieved, but as users become more acquainted with the system they ask for further analysis tools. In the case of survival analysis the inclusion of competing risk scenarios (Klein and Moeschberger 2005) is planned. As for further statistics, methods like association rule analysis or hierarchical clustering (Hastie et al. 2002) could be of interest, especially when combined with survival analysis specific properties, as done by Meinicke et al. (2006) or Radespiel-Tröger et al. (2003).

Data mining of different data sources can be achieved by integrating all data in one central data source or by means of distributed data mining. The latter is of interest if, because of the protection of the privacy of patients, an inclusion of data from different sources is not possible. In such situations the techniques of privacy preserving data mining could be of great help. We are planning to integrate privacy preserving methods for survival analysis, see Fung et al. (2008).

5 Case studies

So far we have described the ideas and techniques behind the OCDM system. In this section we present two case studies which demonstrate the use and the benefit of the software to the practitioner. The first study describes how the system is used at the Robert Bosch Hospital (RBK) Breast Center, a breast cancer research and treatment center in Stuttgart. There the intention is to give physicians and patients information on survival expectations of individual cases or of different kinds of treatment. Besides that, the software is actively used as a means of hypothesis generation for further research.

The second study describes the use of the system within the OSP Stuttgart. Here the main intention is benchmarking of hospitals which is done by comparison of survival curves of patients treated in different hospitals.

5.1 RBK breast center

The Breast Center of the RBK is, with more than 3,000 cases stored in its database, one of the largest institutions treating breast cancer in the area. Here the decision for the OCDM system was based on the continuous need for improvement in the quality of treatment and for statistically valid survival estimates for patients.

In such a setting a typical question concerns the survival of breast cancer patients who receive no treatment other than surgery. For these patients the estimated five-year survival is 0.7. For patients who receive surgery, chemotherapy, radiotherapy and hormone therapy the five-year survival is just slightly less than 0.8. This means that about 10% of all patients with breast cancer (the difference between 0.8 and 0.7) profit from a combined treatment of surgery, chemo-, radio- and hormone therapy. About 20% of the patients will die from the disease and in addition suffer from the side effects of the chemo-, radio- and hormone therapy. The majority, about 70%, will be treated successfully, but the largest part of them will receive a therapy (chemo-, radio- or hormone) from which they do not benefit while still suffering its side effects.

Questions like these can be explored and answered by the OCDM system. We will demonstrate this by trying to gain insight into how breast preserving therapy (BPT), the most favorable form of surgery, compares to non breast preserving therapy. Figure 4 shows the selection of a patient with breast preserving therapy and compares it to the rest of all patients (the default selection). Figure 5 shows the resulting survival curve, where a clear benefit of the BPT treatment is visible.

A look at the distribution of factors like tumor size (pT) or number of affected lymph nodes (pN) shows that BPT is most commonly used in settings where the expected survival is highly independent of the treatment (besides surgery), i.e. tumors which are small in size and have a small number of affected lymph nodes. A closer look shows that for relative values the difference between the two distributions, even though statistically significant, is not too large (Figs. 6 and 7). To gain further insight into the distribution of BPT patients, we compare BPT patients for different levels of tumor size and lymph node count. Last but not least we can calculate the influence of the BPT with the help of Cox regression (Figs. 8 and 9).

The kind of exploration described above is limited only by the number of patients found within the database. This is a problem especially for rare cases. Figure 10 demonstrates this for a patient with the factor settings BPT=yes, pT1, pN0 and distant metastasis, for which only two identical cases were found in the database. In such situations the results obtained are of little statistical significance, and it is better to resort to similar instead of identical cases, as shown in Fig. 11. Because the results are calculated almost instantaneously (less than five seconds for a similarity search and no notable delay for an identical search), all these analyses can be calculated and refined in an interactive fashion.

5.2 Cancer registries

Cancer registries are usually associations of tumor hospitals. One of the responsibilities of these collaborations is the collection of data on diagnosis, therapy, course of the disease and aftercare for the purpose of benchmarking, quality assurance as well as process and outcome improvement.

Here we want to highlight how the OCDM system is being used for two of the above mentioned objectives, benchmarking and quality assurance. When comparing the survival of patients of different hospitals it is hardly sufficient just to look at the actual survival curves. Other available information, such as the distribution of age, tumor size, number of affected lymph nodes and so on, must also be considered. It gives important information on how the difference can be explained and what conclusions can be drawn. Sometimes it is important to include only a subgroup in the evaluation in order to compare equal populations. Most of the selections described here were already presented in the preceding section, so we will just demonstrate the hospital comparison for certain subgroups (see Figs. 12 and 13).

6 Discussion and results

In this paper we have presented the obstacles and challenges that arise when developing a medical data mining system. We have shown how we met them with the implementation of the Online Clinical Data Mining system (OCDM) for the interactive analysis of survival probabilities. The system was designed for interactive use by physicians and medical practitioners, who should be in a position to analyze their own data without the assistance of statistical experts. We put a special focus on the methods for interactive survival analysis, and in particular on the regression-based distance measure we used to retrieve similar cases from the database system. We presented this method in the light of statistics for individual patients, which is currently, to the best of our knowledge, only possible with the OCDM system.

OCDM is currently used in a number of hospitals and a cancer registry for various data analysis and benchmarking projects. The Schillerhöhe Hospital in Stuttgart-Gerlingen, for example, uses the software to analyze the data of 2,000 patients suffering from lung cancer. At the Robert Bosch Hospital the data of 3,000 breast cancer patients is managed and analyzed. The Stuttgart Cancer Registry (OSP-Stuttgart) has used the system to estimate survival probabilities based on the data of more than 14,000 breast cancer patients. The new version, which is currently being deployed, was successfully tested with the data of more than 35,000 patients.

As a result of the application of the system, new knowledge about the expressiveness of histological classifiers was found. With increased usage, more clinically and biologically relevant hypotheses can be generated and explored by researchers and practitioners alike, and clinical data mining will become more attractive for a broader audience.

6.1 Confirmatory and exploratory data analysis

The results obtained through clinical data mining should not be used without caution. Especially in medical statistics there is an ongoing discussion as to whether exploratory data analysis can be used as an equivalent tool to confirmatory analysis. Cios and William (2002) discuss such problems as ambush in statistics. We have developed our analysis system as a hypothesis generator rather than a hypothesis prover. In this context, discovered information must be validated by statisticians, or possibly even confirmed in clinical studies, before it is accepted as valid medical knowledge. This division of labor, in which the exploratory work is done by the physician with the data mining system and the validation by statisticians with their specialized tools, has in our experience proven to work very efficiently.

6.2 Conclusion

Clinical data is documented and analyzed in probably all medical institutions. Interactive analysis is seldom found and, where it is practiced, the analytical methods are mostly based on technologies coming from other fields or are focused on the special interests of the physician, e.g. accounting or quality control. Medical data, however, requires special treatment, and the analysis should be centered on the patient and the patient's disease. To achieve all of this (interactivity, patient centering and accounting for the particularities of biomedical data), a new view on data integration, data management and data analysis is required. If these aspects are considered from the start of development, huge quantities of data that currently lie untouched in clinical data stores and cancer registries can be made accessible.

Notes

1. OnLine Analytical Processing: a hypothesis-driven, dimensional approach to decision support (Kimball 1996).

2. The terms knowledge discovery in databases (KDD) and data mining (DM) are used in accordance with Fayyad et al. (1996). When it comes to actual software systems we use the terms data mining system and knowledge discovery system interchangeably.

3. In this paper we use the term data warehouse in a broader sense, as a storage system for a large amount of information from different sources.

4. A non-parametric method to estimate the conditional expectation of a random variable Y, given the value x of its covariate, by a locally weighted average of the observations Y_i in the vicinity of x, where the weighting is governed by a kernel function and a bandwidth; see, e.g., Hastie et al. (2002).
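Written out, a locally weighted average of this kind is commonly given in the Nadaraya-Watson form below. The notation is assumed here rather than taken from the paper: K denotes the kernel function, h the bandwidth, and (X_i, Y_i), i = 1, ..., n, the observed covariate and response pairs.

```latex
\hat{m}(x) \;=\; \frac{\sum_{i=1}^{n} K\!\left(\frac{x - X_i}{h}\right) Y_i}
                      {\sum_{i=1}^{n} K\!\left(\frac{x - X_i}{h}\right)}
```

A small bandwidth h restricts the average to observations very close to x, while a large h smooths over a wider neighborhood.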

References

Abe, H., Yokoi, H., Ohsaki, M., & Yamaguchi, T. (2007). Developing an integrated time-series data mining environment for medical data mining. In Seventh IEEE international conference on data mining workshops (ICDM workshops 2007) (pp. 127–132).

Ahmad, I., & Ran, I. (2004). Data based bandwidth selection in kernel density estimation with parametric start via kernel contrasts. Journal of Nonparametric Statistics, 16(37), 841–877.

Black, N. (2003). Using clinical databases in practice. British Medical Journal, 326(7379), 2–3.

Brameier, M., & Banzhaf, W. (2001). A comparison of linear genetic programming and neural networks in medical data mining. IEEE Transactions on Evolutionary Computation, 5(1), 17–26.

Cherkassky, V. (2007). Learning from data, 2nd edn. New York: Wiley.

Cios, K. J., & William, M. G. (2002). Uniqueness of medical data mining. Artificial Intelligence in Medicine, 26(1–2), 1–24.

Cox, D. R. (1972). Regression models and life-tables. Journal of the Royal Statistical Society Series B (Methodological), 34(3), 187–220.

Date, C. J. (2002). Introduction to database systems. Boston: Addison-Wesley Longman.

Delen, D., Walker, G., & Kadam, A. (2005). Predicting breast cancer survivability: A comparison of three data mining methods. Artificial Intelligence in Medicine, 34(3), 113–127.

Dippon, J., Fritz, P., & Kohler, M. (2002). A statistical approach to case based reasoning, with application to breast cancer data. Computational Statistics & Data Analysis, 40(3), 579–602.

Dyreson, C., Grandi, F., Käfer, W., Kline, N., Lorentzos, N., Mitsopoulos, Y., et al. (1994). A consensus glossary of temporal database concepts. ACM SIGMOD Record, 23(1), 52–64.

Eggebraaten, T. J., Tenner, J. W., & Dubbels, J. C. (2007). A health-care data model based on the HL7 reference information model. IBM Systems Journal, 46(1), 5–18.

Fayyad, U., Piatetsky-Shapiro, G., & Smyth, P. (1996). From data mining to knowledge discovery in databases. AI Magazine, 17, 37–54.

Fung, G., Yu, S., Dehing-Oberije, C., Ruysscher, D. D., Lambin, P., Krishnan, S., et al. (2008). Privacy-preserving predictive models for lung cancer survival analysis. In Privacy-preserving workshop at the SIAM data mining conference 2008.

Ghannad-Rezaie, M., Soltanain-Zadeh, H., Siadat, M. R., & Elisevich, K. (2006). Medical data mining using particle swarm optimization for temporal lobe epilepsy. In IEEE congress on evolutionary computation, CEC 2006 (pp. 761–768).

Györfi, L., Kohler, M., Krzyzak, A., & Walk, H. (2002). A distribution-free theory of nonparametric regression. New York: Springer.

Han, J., & Kamber, M. (2001). Data mining. San Francisco: Morgan Kaufmann.

Harkema, H., Setzer, A., Gaizauskas, R., Hepple, M., Power, R., & Rogers, J. (2005). Mining and modelling temporal clinical data. In Cox, S. (Ed.), Proceedings of the 4th UK e-Science all hands meeting. Nottingham, UK, available at: http://www.allhands.org.uk/2005/proceedings/.

Hastie, T. J., Tibshirani, R. J., & Friedman, J. H. (2002). The elements of statistical learning, corrected print. edn. New York: Springer.

Hoover, D. R., & He, Y. (1994). Nonidentified responses in a proportional hazards setting. Biometrics, 50(1), 1–10.

Houston, A. L., Chen, H., Hubbard, S. M., Schatz, B. R., Ng, T. D., Sewell, R. R., et al. (1999). Medical data mining on the internet: Research on a cancer information system. Artificial Intelligence Review, 13(5–6), 437–466.

Inokuchi, A., Takeda, K., Inaoka, N., & Wakao, F. (2007). Medtakmi-cdi: Interactive knowledge discovery for clinical decision intelligence. IBM Systems Journal, 46(1), 115–133.

Kimball, R. (1996). The data warehouse toolkit. New York: Wiley.

Klein, J. P., & Moeschberger, M. L. (2005). Survival analysis, 2nd edn. New York: Springer.

Kleinbaum, D. G., & Klein, M. (2005). Survival analysis, 2nd edn. New York: Springer.

Lundin, J., Lundin, M., Isola, J., & Joensuu, H. (2003). Infopoints: A web-based system for individualised survival estimation in breast cancer. British Medical Journal, 326(7379), 29.

McAullay, D., Williams, G., Chen, J., Jin, H., He, H., Sparks, R., et al. (2005). A delivery framework for health data mining and analytics. In ACSC ’05: Proceedings of the twenty-eighth Australasian conference on computer science (pp. 381–387). Darlinghurst: Australian Computer Society.

Meinicke, P., Brodag, T., Fricke, W. F., & Waack, S. (2006). P-value based visualization of codon usage data. Algorithms for Molecular Biology, 1, 10.

Mullins, I. M., Siadaty, M. S., Lyman, J., Scully, K., Garrett, C. T., Miller, W. G., et al. (2006). Data mining and clinical data repositories: Insights from a 667,000 patient data set. Computers in Biology and Medicine, 36(12), 1351–1377.

Ölund, G., Lindqvist, P., & Litton, J. E. (2007). Bims: An information management system for biobanking in the 21st century. IBM Systems Journal, 46(1), 171–182.

Pedersen, T. B., & Jensen, C. S. (1998). Research issues in clinical data warehousing. In SSDBM ’98: Proceedings of the 10th international conference on scientific and statistical database management, IEEE computer society (pp. 43–52). Washington, DC, USA.

Pedersen, T. B., & Jensen, C. S. (1999). Multidimensional data modeling for complex data. In ICDE ’99: Proceedings of the 15th international conference on data engineering, IEEE computer society (p. 336). Washington, DC, USA.

R Development Core Team (2008). R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing. http://www.R-project.org, ISBN 3-900051-07-0.

Radespiel-Tröger, M., Rabenstein, T., Schneider, H. T., & Lausen, B. (2003). Comparison of tree-based methods for prognostic stratification of survival data. Artificial Intelligence in Medicine, 28(3), 323–341.

Russell, S. J., & Norvig, P. (2003). Artificial intelligence, 2nd edn. Englewood Cliffs: Prentice Hall.

Cite this article

Klenk, S., Dippon, J., Fritz, P. et al. Interactive survival analysis with the OCDM system: From development to application. Inf Syst Front 11, 391–403 (2009). https://doi.org/10.1007/s10796-009-9152-5

DOI: https://doi.org/10.1007/s10796-009-9152-5