Abstract

We introduce a method for systematically reducing the dimension of biophysically realistic neuron models with stochastic ion channels exploiting time-scales separation. Based on a combination of singular perturbation methods for kinetic Markov schemes with some recent mathematical developments of the averaging method, the techniques are general and applicable to a large class of models. As an example, we derive and analyze reductions of different stochastic versions of the Hodgkin Huxley (HH) model, leading to distinct reduced models. The bifurcation analysis of one of the reduced models with the number of channels as a parameter provides new insights into some features of noisy discharge patterns, such as the bimodality of interspike intervals distribution. Our analysis of the stochastic HH model shows that, besides being a method to reduce the number of variables of neuronal models, our reduction scheme is a powerful method for gaining understanding on the impact of fluctuations due to finite size effects on the dynamics of slow fast systems. Our analysis of the reduced model reveals that decreasing the number of sodium channels in the HH model leads to a transition in the dynamics reminiscent of the Hopf bifurcation and that this transition accounts for changes in characteristics of the spike train generated by the model. Finally, we also examine the impact of these results on neuronal coding, notably, reliability of discharge times and spike latency, showing that reducing the number of channels can enhance discharge time reliability in response to weak inputs and that this phenomenon can be accounted for through the analysis of the reduced model.

Similar content being viewed by others

1 Introduction

Since the seminal work of Hodgkin and Huxley (1952), biophysically realistic neuron models are based on a detailed description of the gating dynamics of various ionic currents. The original four variables Hodgkin Huxley (HH) model has inspired a plethora of conductance-based neuron models with many more variables. Theoretical analysis of these high dimensional models, a prerequisite for understanding their functional response properties, can be mathematically challenging, as it is already the case for the original HH model (see for instance Rubin and Wechselberger 2007). Indeed, the higher the dimension of the model and the more difficult it becomes to have an intuitive representation of the dynamics. Furthermore, numerical simulations of large networks of high-dimensional models can pose computational difficulties. One way to partly overcome both theoretical and numerical hurdles has been through model reduction, that is, the design of lower dimensional models that retain essential characteristics of the original high dimensional ones. Dimension reduction for these models is often achieved by taking advantage of the separation of gating variables time-scales (Rinzel 2944; Kepler et al. 1992). Thanks to reduction, a deeper understanding of dynamical properties of neuronal models has been gained using singular perturbation theory (e.g. Suckley and Biktashev 2003; Rubin and Wechselberger 2007) and phase plane analysis of two-dimensional models (Izhikevich 2007). All these reduction schemes are designed for deterministic neuronal models. None of them takes into account channel noise arising from the intrinsic stochasticity of ion channels. The purpose of this paper is to present a reduction scheme for stochastic neuronal models with channel noise described by kinetic Markov schemes (Hille 2001; White et al. 2000).

Reductions and singular perturbation theory have been the cornerstone in the analysis of the dynamics of deterministic neuronal models. Similarly, one expects a stochastic reduction method to provide a means to explore and gain insights into the interplay between intrinsic noise and dynamical properties of neurons. For an infinite number of ion channels, the deterministic models are obtained by a law of large numbers from Pakdaman et al. (2010). However, when the number of ion channels is finite, the remaining fluctuations may be the source of many noise-induced phenomena (Chow and White 1996; Steinmetz et al. 2000; Schmid et al. 2003; Shuai and Jung 2003; Rowat 2007) which have putative functional consequences in neuronal coding and information processing. Therefore, it is of high relevance to develop a systematic theoretical approach to analyze these stochastic phenomena. As we will show in this work, reduction of conductance-based neuron models with stochastic ion channels provides a method to study noise-induced phenomena with the well developed techniques of deterministic systems.

An important example in the deterministic setting is the classical reduction of HH, setting m = m ∞ (V) where m is sodium activation, which is for instance, the starting stage of the analysis carried in Rubin and Wechselberger (2007). Here we examine the stochastic counterpart to this deterministic reduction: the steady-state approximation is replaced by an averaging over the quasi-stationary distribution of the fast variable. We show that for HH, the stochastic reduction yields not only standard terms, but also modifies the deterministic model. These modifications clarify the scenario of noise-induced changes in discharge patterns.

In other words, reduction transforms the analysis of the dynamics of a stochastic system into that of a deterministic dynamical systems. In this way noise induced qualitative changes of dynamics are brought within the well established field of bifurcation analysis of deterministic systems. This is all the more remarkable that reduction does not require any hypothesis on the noise intensity, that is, it is not confined to small or large noise limits as other theoretical analyses of stochastic systems often do. In this sense, reduction is a powerful tool, not only for decreasing the dimension of a model, but to gain understanding on the interplay between fluctuations and nonlinearities in slow fast systems.

The analysis of the reduced model reveals the existence of a transition in the dynamics of the model as the number of channels is decreased. Noise induced transitions in excitable systems have been reported in all classical neuronal models, such as the leaky integrate and fire model (Pakdaman et al. 2001), the FitzHugh Nagumo model (Tanabe and Pakdaman 2001), the Morris–Lecar model (Tateno and Pakdaman 2004), the active rotator and the Hodgkin–Huxley model (Tanabe and Pakdaman 2001; Takahata et al. 2002). These transitions have been analyzed through derivation of deterministic bifurcation diagrams and their consequences for neuronal coding, such as noise induced enhancement of discharge time precision and reliability, have been discussed (Tanabe et al. 1999; Pakdaman et al. 2001; Tanabe and Pakdaman 2001). The present work shows that a similar phenomenon occurs when the source of the noise is channel fluctuations rather than external. However, it is important to emphasize that this does not constitute a mere extension of previous results, because, unlike external noise, channel noise is inherently implicated in the nonlinear threshold-like response of neurons so that our approach and analysis methods are altogether different from previous studies.

In terms of methods, the question of stochastic reduction based on a separation of time-scales has been investigated in other fields, especially for biochemical reaction networks mainly through expansions of master equations (Mastny et al. 2007 and ref. therein). These situations are simpler than neuronal membrane models in which stochastic jump processes are coupled with differential equations. Our systematic stochastic reduction scheme is adapted for such hybrid systems that combine differential equations and jump processes. It is based upon a singular perturbation approach to multiscale continuous-time Markov chains (Yin and Zhang 1998) combined with a recent development of the averaging method for piecewise-deterministic Markov processes, see Faggionato et al. (2010) and Pakdaman et al. (2010, submitted). The averaging method has been widely used in the analysis of systems perturbed by fast deterministic oscillatory motion (Arnold 1983). In stochastic systems, the situation is compounded by the random nature of the fluctuations, even though, in essence, the steps retain some similarity to the deterministic case. Before going into details in Sections 2 and 3, we provide here a schematic view of our approach. First, multiscale analysis of kinetic Markov schemes enables the introduction of fast sub-processes and of an aggregated slow process accounting for the jumps between the fast sub-processes. The fast evolution within each sub-process is replaced by its quasi-stationary behavior. Then, averaging the slow dynamics with respect to these quasi-stationary distributions leads to an effective reduced stochastic hybrid system at the limit of a perfect separation of time-scales. However, averaging over the quasi-stationary measure leads to an error. A key step in our reduction approach is to estimate this error deriving a second-order term in the form of a diffusion approximation (Pakdaman et al. 2010, submitted).

This article is organized as follows. We first treat the reduction of stochastic versions of the HH model in order to illustrate in a specific example the three following points. (1) To describe the two types of new terms, namely a deterministic and stochastic one, that can appear in reduction which have no counterpart in deterministic reduction. (2) To present in a step by step manner the stages leading to the computations of these terms. (3) To illustrate the type of information regarding neuronal dynamics that may be obtained from the reduced model and that is not readily accessible in the full model. The general reduction scheme for arbitrary stochastic conductance based neuronal models that requires the manipulation of numerous notations, is described in full detail after treating this example. We hope that this particular layout, which starts from the analysis of a specific example rather than the depiction of the general scheme is more helpful to the reader and for potential applications of stochastic reduction in other models.

2 Reduction of HH models

In this section we present the result of our reduction approach on two stochastic versions of the HH model. An overview of the full models and their reductions is given in Fig. 2.

2.1 Full models

Deterministic model HH We recall here the classical deterministic HH model (Hodgkin and Huxley 1952), defined by the following system of differential equations:

with \(F(V,m,n,h)\!:=\!C^{-1}[I\!-\!g_L(V\!-\!V_L)\!-\!g_{Na}m^3h(V-V_{Na})-g_{K}(V-V_{K})]\) where g L , g Na , g K , V L , V Na , V K are respectively the maximal conductances and reversal potential associated with leakage, sodium and potassium ionic cu rrents, and I is the input current. The functions α x , β x , τ x and x ∞ for x = m, n, h are given in Appendix A.

We now introduce stochastic versions of HH with a finite number of ion channels: as metastable molecular devices subject to thermal noise, their stochastic conformational changes are usually (Hille 2001) modeled by kinetic Markov schemes (KMS). For the first version, called the “two-state” model TS N, the limit of infinite number of channels N → ∞ (by increasing the area of the membrane patch and keeping the densities constant) gives exactly the deterministic system HH (Pakdaman et al. 2010). However, this is not the case for the other version, called here the “multistate” model MS N, introduced in Skaugen and Walloe (1979) and frequently used in the literature. Indeed, its deterministic limit MS ∞ does not match with HH, unless a specific binomial relationship between the initial conditions is satisfied (see Section 4.2 for more details). This difference, which may seem innocuous for the deterministic reduction, has a substantial impact in the stochastic case as will become clear in the following, and we think that understanding this difference is important from a modeling perspective.

Stochastic two-state model TS N The gating variables m and h are treated separately. Gates m and h are assumed to follow open-closed schemes as depicted in Fig. 1 with opening and closing rates α m (V), β m (V) for x = m and α h (V), β h (V) for x = h. If the number of sodium channel is N Na then the number of gates m is N m = 3N Na and of gates h is N h = N Na . Similarly, the potassium channel is composed of four gates, each one following an open-closed scheme with rates α n (V), β n (V) for x = n. Denoting x N the proportion of open gates x, for x = m, n, h, the equation for V is:

Here N stands for the triple (N m , N h , N n ).

Stochastic multistate model MS N In this model, introduced in Skaugen and Walloe (1979), each of the N Na sodium channels follows an eight-state Markov scheme S Na as shown in Fig. 2.

Overview of the full models and their reductions. To account for the time-scale separation (the time-constant of sodium activation “m” is small compared to the other time-constants), we have introduced a small parameter ϵ. On the left column are stated the full models and on the right their reduction when ϵ → 0. More precisely, on the first line, the deterministic HH ϵ model is reduced to a 3D model. On the second and third lines, two stochastic versions of HH with a finite number of ion channels are considered. On the second line, the stochastic two-state model TS N,ϵ is reduced to a model TS N,0 where an additional deterministic term has appeared. Is also included a corrective stochastic term for small ϵ. On the third line, the stochastic multistate model MS N,ϵ (sodium and potassium channels follow multistate kinetic schemes) is reduced to a model MS N,0 where no additional term appear. A corrective stochastic term is also included for small ϵ. See text for more details

In the equation for V the product m 3 h corresponding to the sodium conductance is replaced by the proportion of channels in the state m 3 h 1 :

where each \(c^{Na}_n(t)\) follows independently the scheme S Na for n ∈ {1, .., N Na }. Similarly, each of the N k potassium channel variables \(c^{K}_n(t)\) is assumed to follow independently a five-state Markov scheme S K shown in Fig. 2. The term n 4 in the equation for V is replaced by the proportion of channels in the state n 4 :

Eventually, the equation for V reads:

Here N stands for the pair N = (N Na , N K ).

2.2 Reduced models

Time-scale separation As noticed in Suckley and Biktashev (2003) about the deterministic model HH, the time-scale of the variable m is faster than the others and we introduce a modified model HH ϵ (with ϵ a small positive parameter) where τ m (V) is replaced by ϵτ m (V) (equivalently α m (V) and β m (V) are replaced by \(\epsilon^{-1}\alpha_m(V)\) and \(\epsilon^{-1}\beta_m(V)\)). Similarly, we define TS N,ϵ and MS N,ϵ the associated modified stochastic models.

We assume the reader is familiar with deterministic reduction techniques, leading to the following reduced deterministic system HH 0 when ϵ → 0, substituting m by its steady-state m ∞ (V) in the equation for V:

We now proceed to the reduction of the two stochastic models TS N,ϵ and MS N,ϵ introduced above. We first present the results and then summarize the method and explain how to obtain each term in Section 3. The general framework is exposed in Section 6.

Stochastic reduction of the two-state gating model Applying the general theory (cf. Section 6), we derive the reduced model TS N,0 when ϵ = 0:

where \(\bar{F}_{N}\) is defined by:

and

As before, the variables \(\bar{h}\) and \(\bar{n}\) follow their usual kinetic Markov scheme with transition rates function of \(\bar{V}\).

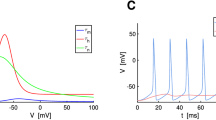

The expression of \(\bar{F}_N(V)\) contains an additional deterministic term, namely K N (V), that depends explicitly on the number of channels. This term comes from the average of m 3 against the quasi-stationary binomial distribution (cf. formula (9)). Thus, considering the case of a finite number N m of stochastic sodium gates leads to a new term in the effective description, as a result of the cubic non-linearity. In Fig. 3, the corrective term I cor = − g Na h(V − V Na )K N (V) is displayed as a function of V for a given value of h and for different values of N m . When V is very negative (resp. very positive), most of sodium channels are closed (resp. open), so that fluctuations due to sodium channel noise are very small, which is consistent with the bell shape of the corrective term. This term is always positive in the region V < V Na = 115 mV, and acts as an additional positive voltage-dependent current which depolarizes the membrane. This suggests that for N m low enough, this corrective term can induce regular firing, through a bifurcation path similar to the classical bifurcation of the HH model with I as a parameter. We will investigate further this phenomenon in Section 4.1.

For small ϵ, a correction term can be included and a switching diffusion approximation is obtained in the form:

Here B TS depends on N m and its computation is explained in Section 3, paragraph 5 with more details in Appendix B.

Stochastic reduction of the multistate gating model To operate the reduction when ϵ → 0 of the multistate model, the S Na scheme is reduced into a two-state open-closed scheme for h, whose transition rates, α h (V) and β h (V), are unchanged because independent of the state of m. However, when averaging the vector field of V, it is necessary to replace the term m 3 h 1 by the quasi stationary distribution at m = m 3 multiplied by h, which gives \(m_{\infty}(V)^3h\). Thus, taking the limit ϵ → 0 for the multistate model leads to a reduced system with m = m ∞ , as the usual deterministic reduction, with stochastic h following a two-state scheme (and n following the S K scheme).

Moreover, when ϵ is small it is of interest to incorporate a corrective term in the form of switching diffusion correction of order \(\sqrt{\epsilon}\) on the equation for the variable V. The computation of variance of this corrective term (see Sections 3 and 6) gives the following approximation:

with

where α m and β m are evaluated at \(\bar{V}\). The other variables \(\bar{n}\) and \(\bar{h}\) are the empirical measures associated with two-state kinetic Markov schemes, respectively with transition rates α n , β n and α h , β h , functions of \(\bar{V}\). The obtained expression is consistent with the approximation obtained in Chow and White (1996). Such an approximation may have many applications, both theoretical (to estimate the variability in exit problems) and numerical (the simulation of jump process is very heavy when ϵ is small, compared to this switching diffusion approximation).

These comparisons of the forms of the deterministic and stochastic reduced models highlight the key following point. In the deterministic case, we need only to replace the activation variable of the sodium current by its stationary value. No other changes are brought to the equation. In the stochastic case, there are two types of changes. One is the possible addition of a deterministic term at the limit ϵ = 0 and the other is the correction term for the fluctuations, of order \(\sqrt{\epsilon}\) for small ϵ. This latter term replaces fluctuations due to the jump process (channel noise) with a diffusion term. Furthermore, the terms obtained depend on the KMS model of channel noise.

3 The reduction algorithm

Here we summarize the reduction steps, with comments referring to the reduction of the stochastic HH models presented above. The general theory behind this reduction method is given in Section 6.

-

1.

Identify the time-scale separation and partition the state space In order to identify the fast transitions, one has to compare the magnitudes of the kinetic rates present in the system (higher rates correspond to faster transitions). Two related issues are expected to be encountered: first, the transition rates are function of the membrane potential so it is not straightforward to compare them, second the membrane potential dynamics has its own time constant. A solution suggested in Suckley and Biktashev (2003) is to compute \(\tau_x:=|\frac{\partial}{\partial x} \frac{dx}{dt}|\) for each variable x. Once the fast transitions have been identified in a given scheme (such as in the S Na scheme) one has to partition the state space into fast subsets. In the case of the model TS N,ϵ, the partition is trivial since there is only one transition in the two-state scheme for m, which is fast.

-

2.

Find the quasi-stationary distributions within fast subsets For a single channel following the two-state model with rates proportional to ϵ − 1, the stationary distribution characterizes the limiting behavior when ϵ → 0 and is given (in the case of m) by ρ TS = (1 − m ∞ (V),m ∞ (V)). In the multistate model, within a fast subset the stationary distribution is given by: \(\rho_{MS}=((1-m_{\infty}(V))^3,3(1-m_{\infty}(V))^2m_{\infty}(V),3(1-m_{\infty}(V))m_{\infty}(V)^2, m_{\infty}(V)^3)\).

-

3.

Average the transition rates to find the slow reduced kinetic scheme This step concerns only the multiscale model: one has to construct a reduced slow process which aggregates the slow transitions between states of the fast subsets (for instance transitions between m i h 0 and m i h 1 , 1 ≤ i ≤ 3, in the S Na scheme). Each fast subset is identified with a state of this slow process. In a general setting, the transition rates for this reduced slow process are given by a specific average with respect to the quasi-stationary distribution (cf. coarse-graining formula in Section 6.2). In the case of the S Na scheme, the reduced slow process obtained by this approach is exactly the classical two-state process h, since all the vertical transitions are identical.

-

4.

Average the vector field for the reduced dynamic of V In this second averaging step one is looking for a reduced equation for V. Let us describe the example of the two-state model TS N,ϵ. From the quasi-stationary distribution for a single two-state process (cf. 2 above), one deduces the binomial quasi-stationary distribution for the fraction of open gates m N :

$$ \rho^{(N_m)}_{\mathrm{open}}\left(\frac{k}{N_m}\right)=C_{N_m}^k m_{\infty}(V)^k (1-m_{\infty}(V))^{N_m-k} $$(9)Then, averaging the term \(m_N^3\) with respect to this distribution one obtains the classical term \(m_{\infty}(V)^3\) together with the additional term K N (V). In the multiscale model, the dependence of the vector field F MS upon u Na is linear: in this case no additional term appears and one recovers only the value of the quasistationary distribution ρ MS corresponding to the state m 3 h 1 , which is precisely \(m_{\infty}(V)^3\). In the case of a non-linear dependence with a multiscale scheme, one has to work carefully to derive the equivalent of the binomial quasi-stationary distribution (cf. the formula for Π m distribution in Section 6.2).

-

5.

Compute the variance of the corrective term This step is the more computationally involved. In the multistate model, the linearity of F MS with respect to u Na reduces the complexity of this computation (cf. Section 6.3 for more details). One has to apply formula (21) of Section 6.3, which requires to solve the full evolution of the law for each fast subset, and not only the stationary distribution. Namely, for the multiscale scheme, one has to solve for a fixed value of V (if possible analytically) the system \(\dot{M}=A_m(V)M\) for various initial conditions with

$$ A_m=\left(\begin{array}{cccc} -3\alpha_m &\beta_m & 0&0 \\ 3\alpha_m &-2\alpha_m -\beta_m & 2\beta_m &0 \\ 0 &2\alpha_m & -2\beta_m-\alpha_m &3\beta_m \\ 0 &0 & \alpha_m &-3\beta_m \end{array}\right) $$and then compute integrals of the form

$$R=\int_0^{\infty}\left(M(t)-\rho_{MS}\right) dt$$(see formula (20) in Section 6. for more details) which characterize the convergence towards the stationary distribution. We have done this computation using a formal calculus software to obtain the term \(B(\bar{V},\bar{h})\) in Eq. (8). As far as the two-state model is concerned, the vector field is non-linear with respect to m and the computations should be done directly on the law of the empirical measure. The R integrals are difficult to compute analytically and we give in Appendix B an expression in terms of special functions. Then, the variance can be obtained by formula (21).

4 Numerical analysis of the reduced models

In this section, we analyze the reduced HH models obtained in Section 2, showing how an analysis of the two-state reduced model can be useful to understand the stochastic bifurcations of the original model (Section 4.1). Moreover, as the reduction method gives two different results for seemingly similar stochastic HH models, we compare the results obtained for both stochastic models (Section 4.2).

4.1 Bifurcation analysis and ISI bimodality

We have seen that additional terms appear when reducing the stochastic two-state TS N,ϵ model. To clarify their impact, we analyze a system where the variables n and h evolve according to their deterministic limits:

while m evolves according to a two-state kinetic scheme (cf. Fig. 1. Indeed, we have chosen to introduce this semi-deterministic model in order to isolate the consequences of the additional terms. First, there is a slight change in the value of the equilibrium point. Then, these terms have consequences in terms of bifurcations. The reduced HH system with m = m ∞ (V), corresponding to the case N = ∞, has a stable equilibrium point for I < I H , a Hopf bifurcation occurs at I = I H and a double cycle bifurcation occurs at I = I dc < I H . In the region (I dc , I H ) the system is bistable: a stable equilibrium point and a stable limit cycle coexist. For I > I H an unstable equilibrium point coexists with a stable limit cycle. The additional terms change the values I dc and I H . Incorporating the additional term K N (V) into the model modifies this bifurcation diagram. In this term, the number of channels N = 1/η appears as a parameter. We can analyze systematically its impact on the dynamics of the model through the computation of bifurcation diagrams as is done for any parameter in a deterministic model.

The first notable change that is observed is that, even in the absence of any external current (i.e. I = 0), reducing the number of channels (i.e. increasing η = 1/N) leads to a bifurcation diagram similar to the one obtained when varying I in the reduced deterministic model. More precisely, as η is increased, the stable equilibrium loses is stability through a sub-critical Hopf bifurcation an we observe the same three qualitatively distinct asymptotic dynamics. For I = 0, there is a Hopf bifurcation when varying the parameter \(\eta=\frac{1}{N}\) at a value η H ≈ 0.01944 corresponding to N H = 51 (see Fig. 4(a)). A double cycle bifurcation seems to occur for η 1 ≈ 0.0151, that is N 1 = 66. Thus, for N ≤ N 1 = 66, the reduced two-state HH model Eq. (7) exhibits repetitive firing, with a bistable region for N H < N ≤ N 1.

Bifurcation diagrams for system TS N with deterministic n and h variable. (a) Bifurcation diagram with η as parameter for I = 0. The line crossing the figure left to right represents the equilibrium potential. The point is stable for low values of η (thick solid line) and becomes unstable (dashed line) after undergoing a subcritical Hopf bifurcation at η H ≈ 0.01944. The maximal and minimal voltage values of a branch of unstable periodic orbits initiated at the Hopf bifurcation are represented by the dotted line. This branch goes through a double cycle bifurcation as it folds and gives place to a branch of stable periodic orbits (maximal and minimal voltages represented by a solid line). (b) Two-parameter bifurcation diagram with I and η as parameters. The solid and dashed lines represent, respectively, the loci of the Hopf and double cycle bifurcations. The lines in the upper left part of the figure represent loci of two folds merging into a cusp. In the region (1) to the left of the double cycle, there exists a single stable equilibrium point. In the region (3) to the right of the Hopf, an unstable stable equilibrium coexists with a stable limit cycle. In the region (2) delineated by the double cycle and Hopf curves, the system is bistable (coexistence of a stable equilibrium and stable limit cycle)

We can consider the influence of I on this diagram. This is done through the computation of the two-parameter bifurcation diagram. It reveals that the loci of the Hopf and double cycle bifurcations mode to lower channel numbers (i.e. higher η) as more current is injected. Ultimately these loci meet with fold bifurcation, probably at a Takens–Bogdanov (TB) point. Even though such bifurcations have been encountered in the standard HH model (Holden et al. 1985), here we consider that the putative TB bifurcation occurs at very low channel numbers that may be of little interest.

The bifurcation analysis of the deterministic system, with the noise level η = 1/N as a parameter, helps understanding the behavior of the stochastic model TS N,ϵ (with deterministic n and h).

Indeed, if ϵ is small enough, the dynamics of the stochastic HH model with a finite number of channels are expected to resemble those of the deterministic reduced model perturbed by a small stochastic switching diffusion. In this way, the stochastic HH model should produce different forms of dynamics depending on the number of channels, and these dynamics and the changes between them should be according to the bifurcation scenario described above. More precisely, in the regime where the reduced model displays a single stable equilibrium point that is globally stable, the stochastic system displays fluctuations around the equilibrium with only rare noise induced excursions away from this state (Fig. 5(c)). In contrast, when the reduced model stabilizes at a limit cycle, the stochastic model generates a nearly periodic train of action potentials (Fig. 5(a)). The transition between these two dynamics corresponds to the bistable regime. In this case, the stochastic model generates bursts of nearly periodic spikes separated by phases of quiescence, the transitions between the two corresponding to noise induced transitions from the neighborhood of the limit cycle to that of the equilibrium and vice versa (Fig. 5(b)). The interspike interval histograms of these regimes (Fig. 6) confirm the description given above illustrated through representative waveforms of the membrane potential V and is consistent with the bifurcation analysis of the reduced model:

-

1.

Below the double cycle curve is a region with a unique stable equilibrium point: ISI distribution is approximately exponential, since a spike corresponds to a threshold crossing.

-

2.

Between the double cycle and the Hopf curves is a bistable region: ISI distribution is bimodal, one peak corresponding to the escape from the stable equilibrium, and the other peak to the fluctuations around the limit cycle.

-

3.

Above the Hopf curve is a region with a stable limit cycle and an unstable equilibrium point: ISI distribution is centered around the period of the limit cycle.

Sample trajectories of the stochastic two-state gating model TS N,ϵ with N gate m, and 104 gates n and h with I = 0 and ε = 0.1. (a) With N = 30 (zone 3), the sample path looks close to a noisy periodic trajectory, as expected. (b) With N = 70 (zone 2), the expected bimodality of ISI’s appears clearly on this sample path. (c) With N = 120, ISI statistics are closer to a poissonian behavior; however spikes may occur by pair as in this particular sample, which corresponds the peak in the ISI distribution (cf. Fig. 6, N = 100)

Interspike Interval (ISI) distribution for the stochastic two-state gating model TS N,ϵ with N gate m, and 104 gates n and h with I = 0 and ε = 0.1. These figures show the apparition of bimodal ISI distribution as N is increased. For N = 30, the distribution is unimodal and corresponds to a noisy limit cycle behavior. For higher values of N, bimodality appears and can be interpreted as the signature of bistability for the underlying deterministic system. For N ≥ 100 the bimodality tends to disappear towards an exponential behavior, corresponding the escape from a stable equilibrium point. The transition from a noisy limit cycle to an exponential behavior through a bimodal distribution is in line with the bifurcation diagram in Fig. 4. ISI histograms obtained from stochastic simulations of TS N,ϵ with a modified Gillespie algorithm Gillespie (1977; White et al. (2000). Note the different scales for the time-axis

We have checked this prediction with extensive and systematic numerical simulations. We summarize the results below with representative numerical illustrations, where we have set the number of gates n and h arbitrarily at 104 in order to reduce the impact of the other sources of noise and to focus on the effect of m. Equivalent results are obtained with deterministic gates n and h. Configurations with small N m and large N h are not completely realistic since one should keep N m = 3N h to account for the structure of the sodium channel. However, it provides a interesting setting to check the theoretical results obtained so far with the averaging principle. We have also checked the predictions 1, 2 and 3 with higher values of I still below I H , for which the averaging-induced double cycle and Hopf bifurcations occur at lower values of η (higher N m ). In this case, one can keep the ratio N m = 3N h and still see the spontaneous quasi-periodic firing, since noise coming from h gates is small enough.

We have also studied the impact of relaxing the assumption ϵ < < 1. With the value ϵ = 0.1, the transition from zone 1 to zone 3 is characterized by the apparition of a bimodal ISI distribution, which is the signature of the underlying bifurcations. As ϵ is increasing towards 1, bimodality progressively vanishes, since the model is no longer close to its singular limit. However, with ϵ = 1, bimodality does not appear, since the model is too far from its singular limit ϵ → 0.

Another important consequence of the analysis through reduction is that the transitions between qualitatively distinct regimes that occur when the number of channels are varied are reminiscent of deterministic bifurcations in the sense that they are rather abrupt (albeit less so than in a deterministic system because of the smoothing effect of noise).

The main conclusion here is that, thanks to reduction, the number of channels becomes a bifurcation parameter of a deterministic model. In this way, changes in neuronal dynamics induced at various channels numbers can be analyzed through a computation of a bifurcation diagram. A stochastic problem is thus transformed into a deterministic one, for which many analysis methods are available.

4.2 Comparison between two-state and multistate gating schemes

Let us recall some results from Pakdaman et al. (2010) concerning the case of an infinite number of ion channels. When N → ∞, by a law of large numbers, both models converge to deterministic systems of ODEs: MS N,ϵ → MS ∞ ,ε and TS N,ϵ → HH ϵ. These two deterministic limits do not have the same dimension, as MS ∞ ,ϵ is of dimension 8 + 5 + 1 = 14 whereas HH ϵ is only four-dimensional. However, an important property of the solutions of MS ∞ ,ϵ is the following:

Let \(X_0=(V_0,m_0,h_0,n_0) \in \mathbf{R}\times [0,1]^3\) be an initial condition for (HH ϵ) and denote X t the associated solution. There exists a smooth map Ψ : R × [0, 1]3 → R × [0, 1]8 × [0, 1]5 such that: if the initial conditions \(X_0^{\mbox{MS}}\) for MS ∞ ,ϵ satisfy \(\Psi(X_0)=X_0^{\mbox{MS}}\) , then for all t > 0, the solution \(X_t^{\mbox{MS}}\) of MS ∞ ,ϵ satisfies \(\Psi(X_t)=X_t^{\mbox{MS}}\) .

As an example, consider the multistate scheme of Fig. 7 described by the deterministic kinetic equations:

and the two-state scheme described by

With \(\Psi(m)=\left(m^2,2m(1-m),(1-m)^2\right)\), if \(\Psi(m(0))=\left(m_2(0),m_1(0),m_0(0)\right)\), then for all t ≥ 0, \(\Psi(m(t))=\left(m_2(t),m_1(t),m_0(t)\right)\).

This result means that there exists an invariant manifold on which both deterministic limits coincide. In this sense, both stochastic HH models can be considered as compatible with the original HH model as long as one is interested in the dynamics for theses special initial conditions. In Keener (2009), another result is obtained in a slightly different setting: assuming the transition coefficients to be only function of time, and forgetting V, it is shown that the corresponding invariant manifold is globally attracting under eigenvalues conditions.

We now investigate how above coincidence property of the deterministic equations translates into the stochastic models TS N,ϵ and MS N,ϵ. Although their deterministic limit when N → ∞, ϵ fixed coincide, we know from Section 2. that their limit when ϵ → 0, N fixed are different. One may expect the behavior of MS N,ϵ to be similar to TS N,ϵ. Even if the reduction of MS N,ϵ for ϵ → 0, N fixed is actually independent of N (Eq. (8)), one may expect the analysis carried out in Section 4.1 to be still relevant, because of the coincidence property of the limits at N → ∞. In particular, it is natural to ask whether the ISI bimodality persists in the MS N,ϵ model. In order to compare only the effects associated with the variable m, we consider an intermediate multistate model IMS N,ϵ, where h and n follow an open-closed scheme as in the two-state model, and where the gates m follow a four-state kinetic scheme, as depicted in Fig. 8. (A number N of these gates corresponds to 3N two-state gates m). Obtained from stochastic simulation of IMS N,ϵ for different values of N and ϵ, ISI distributions for I = 3 are displayed in Fig. 9. Bimodality is not present for ϵ = 0.1, in contrast with the two-state model (Fig. 6), but appears for a lower value of ϵ = 0.01. This phenomenon may seem unexpected: when ϵ → 0 the model IMS N,ϵ is closer to MS N,0 (actually independent of N), which does not exhibit bistability for I = 3. However, there may be a competition between the speed of convergence to the invariant manifold and the speed of convergence to the reduced model, so that one can suggest the following hypothesis. Smaller ϵ may increase the attractivity of the invariant manifold corresponding to the two-state model: bimodality for IMS N,ϵ when ϵ is small may thus be explained by the bistability of TS N,0 even if MS N,0 is not in a bistable regime.

Interspike Interval (ISI) distribution for the intermediate multistate model (HH\(^{\epsilon,N}_{IMS}\)) with N = 10,20,30,40 gates m, and 104 gates n and h, with I = 3. Bold line: ϵ = 0.001, dashed line: ϵ = 0.01, thin line: ϵ = 0.1. As in Fig. 4, these figures show the apparition of bimodal ISI distribution as N is increased for small enough ϵ = 0.01 and ϵ = 0.001 (solid lines). For higher ϵ = 0.1, the distributions are unimodal

As has been shown above, reduction of the two stochastic variants of the HH model, namely , the TS and MS models leads to different results. These reduced models differ both in their deterministic and stochastic components. This result is all the more interesting that whether at the level of a single channel or at the level of a large membrane, the two variants produce similar dynamics.

More precisely, at the level of a single channel, the relevant information is the jump process between the open and closed states. Whether this channel dynamics is modeled according to the TS or the MS formalism, has no observable impact as the two jump processes have exactly the same law. In other words, the jump statistics cannot be used to differentiate one model from the other. In this sense the two models are equivalent.

Similarly, given a large membrane patch, the system is in the deterministic limit. In this regime, even though the MS model has a higher dimension than the TS, the two models have similar dynamics. Indeed, the trajectories of the MS approach the invariant manifold upon which the dynamics are those of the TS. Differences between MS and TS dynamics are only transient and tend to vanish with time. In other words, after discarding such transients, it is not possible to distinguish the two models.

Based upon the above considerations, one can argue that the two variants are equivalent at the two extreme cases of a clamped single channel or a membrane patch with infinitely many channels. Reduction reveals that in the intermediate stage where there are finitely many channels, the situation is different. The dynamics of the TS and MS are not necessarily equivalent. It results from the interplay between channel noise and the non linear dynamics of the system and depends explicitly on the Markovian model selected for the description of channel noise. In this sense, reduction appears as a method to expose these differences and provide a means to investigate their consequences on neuronal dynamics.

5 Effects of channel noise on neuronal coding

After deriving the reduced stochastic HH models and analyzing their dynamics, in this section, we discuss the relevance of the results in terms of neuronal coding. Neurons encode incoming signals into trains of stereotyped action potentials. One of the issues in neuronal coding has been to analyze the extent in which the timing of the individual spikes is altered by the presence of internal noise due to the stochastic fluctuations of ionic channels. In this section, we use the reduction scheme to account for one of the counterintuitive effects of channel noise on discharge time reliability of neurons, namely that for certain classes of inputs, reliability can go through a maximum by increasing channel noise (i.e. by decreasing the number of channels). Previous studies have investigated the impact of channel noise on discharge time reliability. For instance, based upon numerical simulations, it has been reported in Schneidmann et al. (1998) that the reliability is higher for rapidly varying input signals than for slow ones. In Jung and Shuai (2001) and Schmid et al. (2001), a phenomenon of intrinsic stochastic resonance has been observed by numerical simulations, suggesting an optimal number of channels for information transmission and reliability of discharge patterns.

Here, our aim is to illustrate how thanks to reduction one can account for a counterintuitive effect of channel noise on discharge time reliability. We take on the simplest setting that is used in previous studies of neuronal reliability, namely the response of neurons to constant DC currents, and that allows us to highlight the contribution of reduction. Clearly, this particular selection is not limiting. More precisely, we consider the response of the stochastic TS N,ϵ model to a step current I, for various numbers N m . To highlight the impact of the number of sodium channels and the ensuring transition on neuronal coding, we have also represented in Fig. 10 the raster plots of simulations of stochastic HH models with various sodium channel numbers. All simulations were started with the potential at the resting state and the unit receiving a step current of amplitude \(I=1 ~\upmu \mathrm{A} / \mathrm{cm}^2\) at time zero. This current intensity is subthreshold in the sense that in the deterministic limit (large number of sodium and potassium channels), it does not evoke any discharges. For stochastic models, the situation is different because, due to channel fluctuations, such units display spontaneous firing. The panels of Fig. 10 show that for large numbers of channels, when the models are close to the deterministic regime, firing reliability is low in the sense that the discharge times in the raster plot are very poorly vertically aligned. However, as the number of channels is decreased, the reliability improves and the first discharge times of the units become strongly aligned. At very low channel numbers, the first spikes are well aligned however, reliability of later discharges deteriorates. In summary, we see that firing reliability goes through a maximum as channel number is decreased. We have used a constant step current to highlight this phenomenon, first because of its relevant to neuronal coding and the fact that it has been used to analyze and discuss neuronal reliability (Cecchi et al. 2000) and it is also simple enough to fully illustrate our purpose, that is, the transition discovered thanks to reduction can have substantial impact on neuronal reliability.

Each panel represents the raster plot for a given number of sodium m gates N = N m of the response of 50 units to a constant DC current of amplitude \(I=1 ~\upmu \mathrm{A /cm}^2\) applied from time t = 0. Each line on each panel represents the discharge times obtained from a single simulation run of the two-state model TS N,ϵ. All runs, whether within each panel or from one panel to another, were carried out independently. The initial condition is the equilibrium of the deterministic model without input. Parameters \(N_h = 10^3\), \(N_n = 10^4\) and ϵ = 0.1

The above phenomenon is also observed when one keeps the ratio between the number of h gates and of m gates to on third as discussed below in the case of latency coding. The stochastic transition described in Section 4.1 can have a significant impact in terms of neuronal coding. To illustrate this proposition, we have focused on latency coding, where information is encoded in the latency between stimulus onset and the first action potential. In Wainrib et al. (2010), the impact of channel noise on latency coding has been studied, focusing on large numbers of ion channels. We take on the simplest setting that is used in previous studies of neuronal reliability, namely the response of neurons to constant DC currents, and that allows us to highlight the contribution of reduction. Clearly, this particular selection is not limiting. More precisely, we consider the response of the stochastic TS N,ϵ model to a step current I, for various numbers of sodium channels N Na = N h with N m = 3N h . We show here how the reduction method helps understanding how relatively low number of channels can be beneficial in terms of latency variability. Indeed, numerical simulations displayed in Fig. 11 reveal that, contrary to what one may expect, for low values of N m (that is higher channel noise) the coefficient of variation (CV) of latency to first spike can be lower. Thus, we see that reduction helps in understanding some of the counterintuitive effects of noise. In this example, reduction reveals that for large N m , the input current is subthreshold, and the distribution of latency is expected to be close to exponential (CV close to 1), while it becomes suprathreshold at lower N m . More precisely, for low values of N m , the analysis of Section 4.1 shows that the reduced system has a unique stable limit cycle, which induces a low value of the CV, while for intermediate values of N m the system is bistable, and the resulting bimodality of latencies distribution accounts for the increase of the CV above 1. At very low values of N m , CV is increased due to an increase in channel noise, leading to a optimal number of channels in terms of latency variability. Notice that another phenomenon is visible in Fig. 11, namely the sawtooth-like behavior of the CV as a function of N m . Such behavior has been previously analyzed in Shuai and Jung (2005), where an explanation is provided in terms of elementary arithmetic properties of the number of channels.

Coefficient of variation (CV) for the latency to first spike as a function of the number of sodium channels N Na , in the subthreshold case (\(I_{ext} = 1~\upmu \mathrm{A/cm}^2\)) for the two-state model TS N,ϵ with ϵ = 0.1. Number of gates: N m = 3N h = 3N Na and \(N_n = 10^4\). The initial condition is the equilibrium of the deterministic model without input

This example illustrates how qualitative changes in the dynamics of the reduced model account for the differences in reliability observed in the full model, shedding in particular a new light on a phenomenon of intrinsic stochastic resonance in small patches of excitable membrane.

6 General theory

6.1 Models

As a stochastic interpretation of HH like models, we consider a general class of conductance-based neuron models with stochastic ion channels as follows:

-

For each ion type, labeled by k ∈ {1, .., d}, we consider a population of N k associated ion channels. Within a given population k, each ion channel, constituted of several gates, is modeled by one or several KMSs with voltage-dependent transition rates. A KMS is defined as a jump Markov process by a finite state space E and transition rates α i,j (V) between two states i, j ∈ E. We further make an assumption of time-scale separation which is stated below.

-

The conductance induced by the population k is a function of the empirical measures of the underlying KMSs. Typically, the empirical measures represent the proportion of open channels. If u denotes the vector of all empirical measures, then we assume that there is a function h k and a constant \(\bar{g}_k\) such that the conductance associated with population k is given by \(g_k=\bar{g}_k h_k(\mathbf{u})\).

-

The membrane potential V(t) evolves dynamically according to:

$$\begin{array}{rll} \frac{dV}{dt} &=& I(t) - \bar{g}_L(V-V_L) \\ &&-\sum\limits_{k=1}^d \bar{g}_k h_k(\mathbf{u})(V-V_k)\end{array}$$(14)$$:=f(V,\mathbf{u}) $$(15)where I(t) is the input current, g i and V i the conductance and resting potentials corresponding to the leak current for i = L and to different ionic currents for each value of k ∈ {1, .., d}. The theoretical results are still valid for any smooth function f.

Our time-scale separation assumption (TSA) is the following. We assume that one KMS, have the following property: There exist n subsets of fast transitions. In other words, the state space may be partitioned as:

where the sets {E i } i = 1,..,n are defined such as:

-

Fast transitions within each E i : if i,j ∈ E k then α i,j is of order O(ϵ − 1),

-

Slow transitions between fast sub-sets: otherwise, if i ∈ E k and j ∈ E l , with k ≠ l then α i,j is of order O(1).

The elements of E will be denoted by e i,k where i ∈ {1, ..., N} labels the fast sub-set and k ∈ E i the position within the fast sub-set E i .

Thus, if for a given KMS satisfying the TSA, we denote by A ϵ(V) the transition matrix defined by \(A^{\epsilon}_{i,j}(V)=\alpha_{i,j}(V)\) if i ≠ j and \(A^{\epsilon}_{i,i}(V)=-\displaystyle{\sum\limits_{j\neq i}}\alpha_{i,j}(V)\), then we can decompose this matrix as:

where A S accounts for the slow transitions and A F is a block matrix accounting for the fast transitions (each block corresponding to a subset E i ).

A typical example of KMS which satisfies the TSA is the sodium kinetic scheme (see Fig. 2) commonly used to study the impact of channel noise in the HH model.

The other important assumptions concern the ergodicity of the fast sub-processes, which is ensured by an irreducibility assumption since we are in the case of finite state spaces.

Notation

We denote the vector of empirical measures by u = (u 1, ..., u d ). Each u k , corresponding to population k, may gather several empirical measures as well: \(u_i=(u_1^1,...,u_1^{k_1})\) (cf. m and h for the sodium channel in the TS model). Amongst one of the \(u_k^j\) we assume that one of them, say \(u_{k_0}^{j_0}=u^{\mbox{TSA}}\) is the empirical measure for a population of KMSs under TSA. We denote:

where \(\tilde{u}\) gathers all the other empirical measures, behaving on the O(1) time-scale.

Remark

If one considers that the number of channels N k are all proportional to the area A of the patch and that the densities are fixed, then when A is large one recovers (Pakdaman et al. 2010) the deterministic conductance-based neuron models. In the present paper, we do not take this limit and the analysis is valid for any number of channels.

6.2 Reduction

We are interested in the limit of perfect time-scale separation, namely ϵ → 0.

Let us first explain how to handle a single KMS under TSA, and then we will see next how to apply this to reduce a full neuron model with stochastic ion channels. We denote X ϵ the considered process under TSA, and we introduce the quasi-stationary distributions \((\rho_j^i)_{j\in E_i}\) within fast sub-sets E i , for i ∈ {1, .., n}. We construct an aggregated process \((\bar{X})\) on the state space \(\bar{E}=\{1,..,n\}\) with transition rates:

\(\mbox{for } k,l\in \bar{E}\).

This aggregated process behaves on a slow time-scale, and since we have averaged out the transition rates against the quasi-stationary distribution, the fast time-scale has now disappeared. With such an aggregated process \(\bar{X}\), one can approximate time-averages of the form \(\int_0^T \psi(X^{\epsilon}(s)) ds\) by \(\int_0^T\bar{\psi}(\bar{X}(s))ds\) where \(\bar{\psi}(i):=\sum_{k\in E_i} \psi(e_{i,k})\rho^i_k\). Now, a consequence is that if the process X ϵ is coupled with a differential equation dV ϵ/dt = F(V ϵ, X ϵ) which is on a O(1) time-scale, then one obtains a reduced differential equation:

with \(\bar{F}(v,i):=\displaystyle{\sum\limits_{k\in E_i}} F(v,k)\rho_k^i\) for v ∈ R and \(i\in \bar{E}\).

Remark

As recently shown in Faggionato et al. (2010), this convergence result when ϵ → 0 is valid even if the transition rates for the jump process are also voltage-dependent (this setting is called piecewise-deterministic Markov process).

The remaining step is to obtain the quasi-stationary distribution for the empirical measure associated with the population of jump processes under TSA. Indeed, in general the coarse-graining and the averaging steps should be done directly on the empirical measure and not on each single KMS. Thus, we explain next how to find these quasi-stationary distributions.

To clarify the presentation, we first assume that the KMS satisfying the TSA has only fast transitions. In other words, with the notations above, n = 1 and E ≡ E 1. Namely, if one considers a population of N KMSs satisfying the TSA, the quasi-stationary distribution for the proportion of KMSs in a state i ∈ E is given by a binomial formula:

where (ρ i ) i ∈ E is the stationary distribution for the single KMS. We have thus expressed the stationary distribution for the empirical measure in terms of the one for the single KMS.

We now proceed to the averaging of the vector field with respect to this binomial distribution. For the vector field f given by Eq. (15), this leads to the following reduced equation when ϵ → 0:

with \(\bar{h}^{(N)}_k:= \displaystyle{\sum\limits_{c=1}^N}h_k(\tilde{u},c/N)\rho^{(N)}_i(c)\) and \(\tilde{u}\) the vector with all the empirical measures except the one on the fast time scale which has been considered for the averaging.

A key remark here is that the number of channels N has appeared in the reduced equation (18) for V. As illustrated in Section 4, this enables a bifurcation analysis with N as a parameter. We now turn to the case of a population of multiscale processes. The aim is to apply directly the general coarse graining result, summarized by formula (16), on the empirical measure, and not on each KMS of the population. So, we consider a population of multiscale processes \(X_i^{\epsilon}\), where each \(X^{\epsilon}_i\) satisfies the TSA, and we define:

where we recall that

and that e i,k are the elements of E, with 1 ≤ i ≤ n and 1 ≤ k ≤ d i . For x ∈ N *, we denote \(I_N^x:=\{0,...,N\}^x\). The process \(U_N^{\epsilon}\) is a jump Markov process on the state space

This process also has a multiscale structure:

-

Partitioning the state space: One can make a partition of the state space \({{\mathcal{E}}}_N\) as

$${{\mathcal{E}}}_N=\bigcup\limits_{\mathbf{\xi} \in \bar{{{\mathcal{E}}}}_N } {{\mathcal{E}}}_N(\mathbf{\xi})$$with

$$\bar{{{\mathcal{E}}}}_N:=\left\{\left(\xi_k\right)_{k=1}^n \in I_N^n\ /\ \sum_{k=1}^ n \xi_k=N\right\}$$and

$${{\mathcal{E}}}_N(\mathbf{\xi}):=\left\{(x_{i,k})_{1\leq i\leq n,\ k\in E_i}\in I_N^d\ /\ \sum_{1\leq k \leq d_i} x_{i,k} = \xi_i\right\}$$Here, an element ξ k counts the number of processes in the population that are in the fast subset E k . Then, given a constraint \(\xi \in \bar{{{\mathcal{E}}}}_N\), we denote by \({{\mathcal{E}}}_N(\mathbf{\xi})\) the set of all possible configurations. The key point here is that ξ is invariant when one of the underlying processes in the population undergoes a fast transition: ξ changes only when a slow transition happens.

-

Fast sub-processes: For each \(\mathbf{\xi} \in \bar{{{\mathcal{E}}}}_N \) we define a fast sub-process θ ξ which is characterized by its state space \({{\mathcal{E}}}_N(\mathbf{\xi})\) and its transition rates

$$\mu_{k,l}^i:= \epsilon^ {-1} x_{i,k} \alpha_{e_{i,k},e_{i,l}}$$between states x and \(x+\delta_{k,l}^i\) where \(\delta_{k,l}^ i\) is a vector in { − 1, 0, 1}d with a “−1” at position (i, k) and a “+ 1” at position (i, l) and 0 elsewhere. Such a transition means that one of the processes in the population has undergone a jump between the state e i,k ∈ E i and the state e i,l ∈ E i . The rate of such a transition depends upon the value of ξ i which counts the number of processes in E i . An important quantity associated with each fast sub-process θ ξ is its stationary distribution, which can be obtained, after a slight combinatorial work, as a product of multinomial distributions, called Π m distribution:

$$\pi_{\xi}(x):=\prod\limits_{i=1}^n \frac{\xi_i!}{x_{i,1}!..x_{i,d_i}!}\left(\rho_1^i\right)^{x_{i,1}}...\left(\rho_{d_i}^i\right)^{x_{i,d_i}}$$for \(x\in {{\mathcal{E}}}_N\).

-

Slow transitions between aggregated states: Following the coarse-graining formula (16), we are now able construct a slow process \(\bar{U}_N\) on the reduced state space \(\bar{{{\mathcal{E}}}}_N\). The transition between states ξ and ξ′ = ξ + δ k,l (where \(\delta_{k,l}\in\{-1,0,1\}^n\) has a “−1” at position k, a “+ 1” at position l and 0 elsewhere) occurs with rate

$$\bar{\alpha}_{\mathbf{\xi},\mathbf{\xi'}}:= \sum\limits_{x\in {{\mathcal{E}}}_N(\mathbf{\xi})}\sum\limits_{y\in {{\mathcal{E}}}_N(\mathbf{\xi'})} \pi_{\xi}(x) \mu(x,y)$$where \(\mu(x,y):=\displaystyle{\sum\limits_{i\in E_k,j\in E_l}} x_{i,k} \alpha_{e_{i,k},e_{j,l}}\)

-

The averaging of vector field F is made with respect to the Π m quasi-stationary distributions π ξ (x).

Special case In the case where the vector field F depends linearly on the multiscale empirical measure of interest (for instance in the stochastic multistate model MS N,ϵ), the result is more straightforward.

The essence of reduction is to approximate, for small ϵ, time averages of the form:

by ergodic averages

where u denotes a stationary ergodic process with invariant measure ρ and satisfying appropriate mixing conditions.

Thus, in the linear case, reduction only involves time-average of the type

which can be written as a sum of elementary averages of the type

Each of these terms can be approximated (using the general coarse-graining theory) by:

In other words, the time spent by \(X^{\epsilon}_j\) in state e i,k can be approximated by the product of the time spent by \(X^{\epsilon}_j\) in the subspace E i times the stationary distribution \(\rho_k^i\) of the fast-process evolving within E i . For instance, in the eight-state scheme S Na , the time spent in the state m 3 h 1 can be approximated when ϵ is small by the time spent in any of the state m x h 1 for x = 0, 1, 2, 3 multiplied by the quasi-stationary probability associated with the state m 3 , that is \(m_{\infty}(V)^3\).

As in the multiscale model MS N,ϵ, F MS depends linearly on m 3 h 1 , the averaged vector field is F MS evaluated at \(m_{\infty}(V)^3\bar{h}\), which is precisely the drift term obtained in Eq. (8).

6.3 Fluctuations

As the time-scale separation is not perfect in biological models, it is interesting to be able to include a corrective term to account for the error made when considering the reduced model at the limit ϵ = 0. This term is included as a switching diffusion of order \(\sqrt{\epsilon}\) that is added on the equation for V:

with \(\bar{u}\) the reduced process with rates given by the coarse-graining formula (16). The mathematical aspects of this approximation are studied in a separate paper (Pakdaman et al. 2010, submitted), which extends results of Faggionato et al. (2010) and Yin and Zhang (1998). To give the expression of σ f , some further notations are required. In order to keep them as handy as possible, we consider that the population of processes under TSA will be denoted by the generic notation X ϵ. This means that we pretend the population of N processes is just a single process. We have shown below that this is justified since we have exhibited a multiscale structure for the empirical measure as well. So instead of the complicated notations designed for the state space of empirical measures \({{\mathcal{E}}}_N\), we have chosen to work with the generic notations. We introduce the associated sub-processes \(x^{\epsilon}_i\) defined on each subset E i . Let us rescale this sub-processes by putting ϵ = 1 and consider the laws of \(x^{1}_i\):

We then define a quantity that accounts for the discrepancy between the law P V (x, y, t) and the quasi-stationary distribution \(\rho^{(i)}_V(y)\):

The diffusion coefficient \(\sigma_f^2\) is then given by:

where

Computation of \(R_V^{(i)}(x,y)\) involves not only the quasi-stationary distribution, but also the full time-course of the law of the empirical measure.

In the linear case one only needs to do this work for a single process under TSA and not for the empirical measure. Indeed, with the linearity, one obtains an average of N diffusion terms, one for each member of the population, and gathering them gives a single diffusion scaled as \(\frac{1}{\sqrt{N}}\). This is how we have obtained the diffusion correction in Eq. (8).

7 Discussion

In the deterministic case, reduction has a geometric interpretation in the phase space of the dynamical systems corresponding to the neuronal model. The aim of reduction is to derive a system of differential equations with a lower number of variables that is quantitatively close to the original system. The essence of this reduction is that in some sense there are redundant variables in the original model. Geometrically, in the phase space of the system, this translates into the fact that there exists a manifold that is invariant under the flow of the dynamical system and that attracts trajectories. Describing the dynamics on this manifold requires less variables than there are in the original model, yet this lower dimensional dynamical system is totally accurate. In such a case one obtains a perfect reduction of the original model. In practice, it is not always possible to obtain either the invariant manifold or the dynamics on it perfectly. Instead, one constructs an approximate reduced system that is quantitatively close (in some mathematical sense) to the perfect reduced model. The procedures of reduction of HH models offer methods to obtain such approximations.

In the stochastic case, interpretation of reduction is more complicated. On the one hand, one may suggest a sample path interpretation: the trajectories of the original model stay close with high probability to the trajectories of the reduced averaged system. On the other hand, since the law obeys a deterministic equation—possibly infinite-dimensional—such as the master equation, a singular perturbation approach for the law would provide a geometric interpretation in the law space. However, it remains unclear how to relate this geometry with the properties of the trajectories.

In this paper, we have provided a method to reduce stochastic conductance-based neuron models based on a time-scale separation. Based on a stochastic averaging method, the techniques presented here are general, widely applicable and all the computations can be carried out referring to a step-by-step reduction algorithm.

We have applied this reduction method to various stochastic HH models. These applications illustrate in detail the computational aspects of the scheme and the type of information that can be gained from it. In particular, we have derived an analytical expression for the diffusion approximation of the reduced 3D version of the stochastic HH model with multistate Markov scheme. More interestingly, for the two-state HH model, unexpected correction terms have appeared in the effective vector field due to the non-linearity m 3, modifying the bifurcations and providing an insight into the emergence of bimodal ISI distributions due to channel noise. Thanks to reduction, our work has revealed that existence of a dramatic transition in neuronal dynamics and consequently spike generation properties when channel numbers are decreased. Finally, we have shown that this transition strongly affects neuronal coding in ways that had not been described previously in relation with channel noise.

A mathematical analysis of the link between the reduced system and the original one, of the other time-scales in the system and of the scaling between the population size and the time-scale separation clearly deserves to be investigated. In biological terms, such an analysis suggests that it would be interesting to study whether there exists a link between ion channels densities and the time-scales on which they operate. Moreover, the stochastic reduction method is expected to be useful both for the stochastic simulation of neuron models with channel noise and for further analysis of channel noise induced phenomena. From a theoretical perspective, there exists several methods in the theory of noisy dynamical systems, (for instance: stochastic bifurcations,large deviations theory) to investigate the impact of a parameter on the behavior of the system. Our reduction approach provides a new method in this direction, and the singular perturbation approach provides a limiting system where the stochastic finite-size effects can be quantified by a deterministic analysis. Such an approach is expected to provide insights into other stochastic multiscale systems, especially deriving from the kinetic formalism, which are ubiquitous in neuron modeling.

References

Arnold, V. I. (1983). Geometric methods for ordinary differential equations. New York: Springer.

Cecchi, G. A., Sigman, M., Alonso, J. M., Martinez, L., Chialvo, D. R., & Magnasco, M. O. (2000). Noise in neurons is message dependent. Proceedings of the National Academy of Sciences, 97(10), 5557–5561.

Chow, C. C., & White, J. A. (1996). Spontaneous action potentials due to channel fluctuations. Biophysical Journal, 71(6), 3013–3021.

Faggionato, A., Gabrielli, D., & Crivellari M. R. (2010). Averaging and large deviation principles for fully-coupled piecewise deterministic Markov processes and applications to molecular motors. Markov Processes and Related Fields, 16(3), 497–548.

Gillespie, D. T. (1977). Exact stochastic simulation of coupled chemical reactions. The Journal of Physical Chemistry, 81(25), 2340–2361.

Hille, B. (2001). Ion channels of excitable membranes. Massachusettes: Sinauer Sunderland.

Hodgkin, A. L., & Huxley, A. F. (1952). A quantitative description of membrane current and its application to conduction and excitation in nerve. Journal of Physiology, 117(4), 500–544.

Holden, A. V., Muhamad, M. A., & Schierwagen, A. K. (1985). Repolarizing currents and periodic activity in nerve membrane. Journal of Theoretical Neurobiology, 4, 61–71.

Izhikevich, E. M. (2007). Dynamical systems in neuroscience: The geometry of excitability and bursting. Cambridge: MIT Press.

Jung, P., & Shuai, J. W. (2001). Optimal sizes of ion channel clusters. Europhysics Letters, 56, 29–35.

Keener, J. (2009). Invariant manifold reductions for Markovian ion channel dynamics. Journal of Mathematical Biology, 58(3), 447–57.

Kepler, T. B., Abbott, L. F., & Marder, E. (1992). Reduction of conductance-based neuron models. Biological Cybernetics, 66, 381–387.

Mastny, E. A., Haseltine, E. L., & Rawlings, J. B. (2007). Two classes of quasi-steady-state model reductions for stochastic kinetics. The Journal of Chemical Physics, 127, 094106.

Pakdaman, K., Tanabe, S., & Shimokawa, T. (2001). Coherence resonance and discharge time reliability in neurons and neuronal models. Neural Networks, 14(6–7), 895–90.

Pakdaman, K., Thieullen, M., & Wainrib, G. (2010). Fluid limit theorems for stochastic hybrid systems with application to neuron models. Advances in Applied Probability, 42(3), 761–794.

Rinzel, J. (1985). Excitation dynamics: Insights from simplified membrane models. Feredation Proceedings, 44, 2944–2946.

Rowat, P. (2007). Interspike interval statistics in the stochastic Hodgkin–Huxley model: Coexistence of gamma frequency bursts and highly irregular firing. Neural Computation, 19(5), 1215.

Rubin, J., & Wechselberger, M. (2007). Giant squid—hidden canard: The 3d geometry of the Hodgkin Huxley model. Biological Cybernetics, 97, 5–32.

Schmid, G., Goychuk, I., & Hänggi, P. (2001). Stochastic resonance as a collective property of ion channel assemblies. Europhysics Letters, 56, 22–28.

Schmid, G., Goychuk, I., & Hänggi, P. (2003). Channel noise and synchronization in excitable membranes. Physica A: Statistical Mechanics and its Applications, 325(1–2), 165–175.

Schneidmann, E., Freedman, B., & Segev, I. (1998). Ion channel stochasticity may be critical in determining the reliability and precision of spike timing. Neural Computation, 10, 1679–1703.

Shuai, J. W., & Jung, P. (2003). Optimal ion channel clustering for intracellular calcium signaling. Proceedings of the National Academy of Sciences, 100(2), 506–512.

Shuai, J. W., & Jung, P. (2005). Entropically enhanced excitability in small systems. Physical Review Letters, 95(11), 114501.

Skaugen, E., & Walloe, L. (1979). Firing behavior in a stochastic nerve membrane model based upon the Hodgkin–Huxley equations. Acta Physiologica Scandinavica, 107(4), 343–63.

Steinmetz, P. N., Manwani, A., Koch, C., London, M., & Segev, I. (2000). Subthreshold voltage noise due to channel fluctuations in active neuronal membranes. Journal of Computational Neuroscience, 9(16), 133–148.

Suckley, R., & Biktashev, V. N. (2003). Comparison of asymptotics of heart and nerve excitability. Physical Review E, 68, 011902, 1–15.

Takahata, T., Tanabe, S., & Pakdaman, K. (2002). White noise stimulation of the Hodgkin–Huxley model. Biological Cybernetics, 86, 403–417.

Tanabe, S., & Pakdaman, K. (2001). Noise-induced transition in excitable neuron models. Biological Cybernetics 85, 269–280.

Tanabe, S., & Pakdaman, K. (2001). Noise-enhanced neuronal reliability. Physical Review E, 64, 041904.

Tanabe, S., & Pakdaman, K. (2001). Dynamics of moments of Fitz–Hugh–Nagumo neuronal models and stochastic bifurcations. Physical Review E, 63, 031911.

Tanabe, S., Sato, S., & Pakdaman, K. (1999). Response of an ensemble of noisy neuron models to a single input. Physical Review E, 60, 7235–7238.

Tateno, T., & Pakdaman, K. (2004). Random dynamics of the Morris–Lecar neural model. Chaos, 14, 511–530.

Wainrib, G., Thieullen, M., & Pakdaman, K. (2010). Intrinsic variability of latency to first spike. Biological Cybernetics, 103(1), 43–56.

White, J. A., Rubinstein, J. T., & Kay, A. R. (2000). Channel noise in neurons. Trends in Neurosciences, 23(3), 131–137.

Yin, G. G., & Zhang, Q. (1998). Continuous-time Markov chains and applications: A singular perturbation approach. New York: Springer.

Author information

Authors and Affiliations

Corresponding author

Additional information

Action Editor: Alain Destexhe

This work has been supported by the Agence Nationale de la Recherche through the ANR project MANDy “Mathematical Analysis of Neuronal Dynamics” ANR-09-BLAN-0008.

Appendices

Appendix A: Auxiliary functions

The transition rates for the HH model are given by:

Accordingly, τ x (V) and x ∞ (V), for x = m, n, h, are given by: τ x (V) = 1/(α x (V) + β x (V)) and x ∞ (V) = α x (V)/(α x (V) + β x (V)).

Appendix B: Diffusion term in the two-state model

The purpose of this appendix is to give more details about the computation of the diffusion term in the two-state model. The computation is more complicated than in the multi-state model because of the non-linearity m 3. Indeed, in the multi-state case, the vector field F MS is linear with respect to u Na and one only needs to compute the corrective diffusion for a single channel, and then divide it by \(\sqrt{N_{Na}}\), since the average of N Na independent Brownian motions is equal in law to a single Brownian motion divided by \(\sqrt{N_{Na}}\). In the non-linear case (two-state model), one needs to work out the computation directly on the process describing the empirical measure:

-

1.

First, one has to compute the law at time t of the empirical measure, with V fixed, that is the probability \(P_N^V(j,j_0,t)\) of having j open channels at time t starting from a population with j 0 open channels.

Denote N = N m . Starting from a proportion of open m gates \(u_N(0)=\frac{j_0}{N}\) at t = 0, with 0 ≤ j 0 ≤ N, one can show that the proportion of open gates u N (t) at time t follows a bi-binomial distribution:

$$\begin{array}{rll} && P_N^V(j,j_0,t)\\ &&:=\mathbf{P}\left[u_N(t)=\frac{j}{N}|u_N(0)=\frac{j_0}{N}\right]\\ &&\displaystyle{\sum_{x=\max(0,j-N+j_0)}^{\min(j,j_0)}}\mu_{x,j_0}\left(p_0(t)\right)\mu_{j-x,N-j_0}\left(p_1(t)\right) \end{array}$$with \(\mu_{i,j}(p)=C_{j}^i p^{i}(1-p)^{j-i}\) and where p 0(t) and p 1(t) are the solution of

$$ \dot{y}=(1-y)\alpha_m(V)-y\beta_m(V) $$(22)with respective initial conditions p 0(0) = 0 and p 1(0) = 1. Defining

$$ \tau_m:= \alpha_m(V)+\beta_m(V) \mbox{ and } m_{\infty}(V):=\frac{\alpha(V)}{\tau_y(V)} $$(23)the variation of constant gives: p 0(t) = m ∞ (V)\(\left(1-e^ {-t/\tau_m(V)}\right)\) and \(p_1(t)=e^ {-t/\tau_m(V)}+p_0(t)\). If j 0 = 0, then one retrieves the classic binomial distribution with parameters (N,p 0(t)): \(P_N^V(j,0,t)=C_N^j p_0(t)^j (1-p_0(t))^{N-j}\). When t → ∞, one checks that the quasi-stationary distribution

$$ \rho_N^V(j)=\displaystyle{\lim\limits_{t\to \infty}} P_N^V(j,j_0,t) $$does not depend on j 0 and is given by:

$$ \rho_N^V(j)= \mu_{j,N}(m_{\infty}) = C_N^j m_{\infty}(V)^j (1-m_{\infty}(V))^{N-j} $$ -

2.

Then, integrating with respect to time the difference between \(P_N^V(j,i,t)-\rho_N^V(j)\), we can express the quantity R(i,j) required to compute the diffusion term. Introducing K = (1 − m ∞ (V))/ (m ∞ (V)), and making the change of variable \(z=e^{-t/\tau_m(V)}\), the computation boils down to integrals of the form:

$$ \int_0^1 (1 - z)^a (1 + Kz)^b (1 + z/K)^c dz $$Using a formal computation software, R(i, j) can be expressed as a sum involving Appell F1 functions, defined by

$$ F_1(a,b_1,b_2,c;x,y) = \sum\limits_{m,n=0}^\infty \frac{(a)_{m+n} (b_1)_m (b_2)_n} {(c)_{m+n} \,m! \,n!} \,x^m y^n $$with (q) n = q (q + 1) ⋯ (q + n − 1). Then we can write:

$$\begin{array}{rll} R(i,j)&=&\tau_m\sum\limits_{x=\max(0,j-N+i)}^{\min(i,j)} C_i^x C_{N-i}^{j-x}Y_{\infty}^{(N)}(x,i,j)\\ &&\times \left(H_K^{(N)}(x,i,j)F_1^{(N)}(x,i,j)-1\right) \end{array}$$with:

$$\begin{array}{rll} Y_{\infty}^{(N)}(x,i,j)&:=& m_{\infty}^x m_{\infty}^{j-x} (1-m_{\infty})^{i-x}\\ &&\times \ (1-m_{\infty})^{N-i-j+x}\\ H_{K}^{(N)}(x,i,j)&:=&\frac{K^{x-i}(1+K)^{i+j-2x}}{1+2x-N-i-j}\\ F_1^{(N)}(x,i,j)&:=& F_1\left(w,x-i,x-j,w+1,\right.\\ &&\qquad\left.\frac{1}{1+K},\frac{K}{1+K}\right)\\ w&:=&1+2x+N-i-j \end{array}$$ -

3.

The complete expression for the variance is then given by plugging the above expression for R(i, j) into formula (20).

Rights and permissions

About this article

Cite this article

Wainrib, G., Thieullen, M. & Pakdaman, K. Reduction of stochastic conductance-based neuron models with time-scales separation. J Comput Neurosci 32, 327–346 (2012). https://doi.org/10.1007/s10827-011-0355-7

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10827-011-0355-7