Abstract

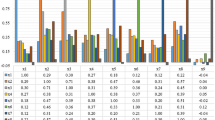

A new quadratic kernel-free non-linear support vector machine, called QSVM, is introduced. The standard SVM optimization problem can be stated as follows: maximize the geometric margin subject to every training point having a functional margin of at least a constant, here equal to one. For a labeled point \((X, y)\) with \(y \in \{-1, +1\}\), the functional margin is \(y(W^T X + b)\), where \(W^T X + b = 0\) is the equation of the hyperplane used for linear separation, and the geometric margin is \(\frac{1}{\|W\|}\). To separate data non-linearly, the standard SVM must be solved in its dual form using the kernel trick. This paper instead uses a quadratic decision function that can separate the data non-linearly. The geometric margin is proved to be the inverse of the norm of the gradient of the decision function, and the functional margin is given by the quadratic function itself. QSVM is shown to fit a quadratic optimization setting that requires neither a dual form nor the kernel trick. QSVM is compared with SVMs using Gaussian and polynomial kernels on databases from the UCI repository.
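The two central claims above can be illustrated with a small sketch. It assumes the quadratic decision function has the form \(f(X) = \frac{1}{2} X^T A X + b^T X + c\) with a symmetric matrix \(A\); this parametrization and all function names below are assumptions for illustration, not taken from the paper. Because \(\nabla f(X) = AX + b\), the ratio \(|f(X)| / \|\nabla f(X)\|\) is a first-order estimate of the distance from \(X\) to the surface \(f(X) = 0\). At a point with functional margin one it becomes \(1/\|\nabla f(X)\|\), and with \(A = 0\) it is exactly the linear \(1/\|W\|\). The sketch also shows that \(f\) is linear in its parameters \((A, b, c)\), so constraints of the form \(y_i f(X_i) \ge 1\) are linear in the unknowns.

```python
import math

# Assumed parametrization (illustrative, not taken from the paper):
#   f(x) = 0.5 * x^T A x + b^T x + c, with A symmetric.

def quad_decision(A, b, c, x):
    """Evaluate the quadratic decision function f(x); sign(f(x)) gives the class."""
    n = len(x)
    quad = sum(x[i] * A[i][j] * x[j] for i in range(n) for j in range(n))
    lin = sum(b[i] * x[i] for i in range(n))
    return 0.5 * quad + lin + c

def gradient(A, b, x):
    """grad f(x) = A x + b; this closed form relies on A being symmetric."""
    n = len(x)
    return [sum(A[i][j] * x[j] for j in range(n)) + b[i] for i in range(n)]

def geometric_margin(A, b, c, x):
    """First-order distance estimate |f(x)| / ||grad f(x)|| from x to the surface f = 0.

    At functional margin |f(x)| = 1 this is 1 / ||grad f(x)||. When A = 0
    (linear f with W = b) it is the exact point-to-hyperplane distance.
    """
    g = gradient(A, b, x)
    norm = math.sqrt(sum(gi * gi for gi in g))
    if norm == 0.0:
        raise ValueError("gradient vanishes at x; margin is undefined")
    return abs(quad_decision(A, b, c, x)) / norm

def quad_features(x):
    """Hypothetical feature map phi(x) with f(x) = theta . phi(x).

    Diagonal terms carry 0.5 * x_i^2; each off-diagonal pair i < j appears
    twice in x^T A x, so 0.5 * 2 * A_ij * x_i * x_j = A_ij * x_i * x_j.
    """
    n = len(x)
    feats = []
    for i in range(n):
        for j in range(i, n):
            feats.append(0.5 * x[i] * x[i] if i == j else x[i] * x[j])
    return feats + list(x) + [1.0]

def params_to_theta(A, b, c):
    """Flatten (upper triangle of A, b, c) in the same order as quad_features."""
    n = len(b)
    upper = [A[i][j] for i in range(n) for j in range(i, n)]
    return upper + list(b) + [c]

if __name__ == "__main__":
    # The unit circle as a decision surface: A = 2I, b = 0, c = -1 gives x1^2 + x2^2 - 1.
    A, b, c = [[2.0, 0.0], [0.0, 2.0]], [0.0, 0.0], -1.0
    for x in ([0.0, 0.0], [2.0, 0.0]):
        label = 1 if quad_decision(A, b, c, x) >= 0 else -1
        print(x, "class", label, "margin estimate", geometric_margin(A, b, c, x))
```

Since \(y_i \, \theta \cdot \phi(X_i) \ge 1\) is linear in \(\theta\), pairing these constraints with a convex quadratic objective in \((A, b)\), for example the sum of \(\|A X_i + b\|^2\) over the training points, would give a standard quadratic program with no dual form or kernel. That particular objective is an assumption here; the paper's exact formulation may differ.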

Cite this article

Dagher, I. Quadratic kernel-free non-linear support vector machine. J Glob Optim 41, 15–30 (2008). https://doi.org/10.1007/s10898-007-9162-0