Abstract

We consider the Spectral Projected Gradient method for solving constrained optimization problems with the objective function in the form of mathematical expectation. It is assumed that the feasible set is convex, closed and easy to project on. The objective function is approximated by a sequence of different Sample Average Approximation functions with different sample sizes. The sample size update is based on two error estimates—SAA error and approximate solution error. The Spectral Projected Gradient method combined with a nonmonotone line search is used. The almost sure convergence results are achieved without imposing explicit sample growth condition. Preliminary numerical results show the efficiency of the proposed method.

Similar content being viewed by others

1 Introduction

The problem that we consider is a constrained optimization problem of the form

where \( \Omega \subset \mathbb {R}^n\) is a convex and compact set, \( \xi : \mathcal {A} \rightarrow \mathbb {R}^m \) is a random vector from a probability space \( \left( \mathcal {A},\mathcal {F},\mathcal {P}\right) \) and \( F(\cdot ,\xi ) \in C^{2}(\Omega _e) \) with \( \Omega \subset \Omega _e \subset \mathbb {R}^n. \) The mathematical expectation that defines the objective function makes this problem difficult asf is rarely available analytically and, even when it is available, it usually includes multiple integrals. Thus, the common approach is to approximate the objective function with Sample Average Approximation, SAA. The quality of approximation depends on the sample size and taking a large sample ensures good matching between the original problem (1) and the approximate problem. There are many possibilities for the sample choice, depending on properties of the underlying random variable \( \xi \) and availability of data, see Byrd et al. [10], Fu [14], Polak and Royset [28], Shapiro et al. [30], Spall [32].

The Sample Average Approximation is defined as

for a given sample set \( {\mathcal N} := \{\xi ^1,\ldots , \xi ^N\} \subset {\mathcal A}. \) In general the quality of approximation depends heavily on the sample size N and taking N as large as computationally feasible is desirable in applications. Ultimately, letting the sample size \( N \rightarrow \infty \) should result in some kind of asymptotic convergence, for example almost sure convergence or convergence in probability. On the other hand large N makes the evaluation of \( f_{\mathcal N} \) and its derivatives expensive. Assuming that the sample size N is chosen appropriately, one can derive bounds on the difference between the solution of (1) and the approximate problem

Several results of this kind are available in Shapiro et al. [30], Spall [32]. The problem (2) is a reasonable approximation of the original one under a set of standard assumptions that we will state precisely later on. In general, one can be interested in solving the SAA problem for some finite, possibly very large N, as well as obtaining asymptotic results, that is, the results that cover the case \( N \rightarrow \infty , \) even if in practical applications one deals with a finite value of N. A naive application of an optimization solver to (2) is very often prohibitively costly if N is large due to the cost of calculating \( f_{{\mathcal N}}(x) \) and its gradient. Thus, there is a vast literature dealing with variable sample scheme.

Two main approaches can be distinguished. In the first approach the objective function \( f_{\mathcal N} \) is replaced with \( f_{{\mathcal N}_k}(x) \) at each iteration k and the iterative procedure is essentially a two step procedure of the following form. Given the current approximation \( x_k \) and the sample size \( N_k, \) one has to find \( s_k \) such that the value of \( f_{{\mathcal N}_k}(x_k + s_k) \) is decreased. After that we set \( x_{k+1} = x_{k} + s_k \) and choose a new sample size \( N_{k+1}. \) The key ingredient of this procedure is the choice of \( N_{k+1}. \) The schedule of sample sizes \( \{N_k\} \) should be defined in such way that either \( N_k = N \) for k large enough or \( N_k \rightarrow \infty \) if one is interested in asymptotic properties. Keeping in mind that \( \min f_{{\mathcal N}_k} \) is just an approximation of the original problem and that the cost of each iteration depends on \( N_k, \) it is rather intuitive to start the optimization procedure with smaller samples and gradually increase the sample size \( N_k \) as the solution is approached. Thus, the most common schedule sequence would be an increasing sequence \( N_0, N_1, \ldots . \) In the case of solving the approximate problem for a finite N one can also consider a (possibly oscillating) scheduling sequence that takes into account the cost of each iteration and the progress made in function decrease. Such procedure results in a more efficient method than the corresponding procedure with strictly increasing schedule sequence, Bastin [2], Bastin et al. [3], Krejić and Krklec [20], Krejić and Krklec Jerinkić [21], Krejić and Martínez [22]. The results presented in Friedlander and Schmidt [13] are also closely related.

Regarding the almost sure convergence and considering the case \( N \rightarrow \infty , \) a strictly increasing scheduling sequence that goes to infinity is first considered in Wardi [33]. An Armijo type line search method is combined with SAA approach. The convergence is proved with upper zero density. An extension of [33] for the unconstrained case is presented in Yan and Mukai [35], where the adaptive precision is proposed, that is, the sequence \( \{N_k\}_{k \in \mathbb {N}} \) is not determined in advance as in [33] but it is adapted during the iterative procedure. Nevertheless the sample size has to satisfy \( N_k \rightarrow \infty . \) The convergence result, again for the unconstrained case, is slightly stronger as the convergence with probability 1 is proved under the set of appropriate assumptions. The more general result that applies to both gradient and subgradient methods is obtained in Shapiro and Wardi [31]. The convergence with probability 1 is proved for the SAA gradient and subgradient methods assuming that the sample size tends to infinity and that the iterative sequence posses some additional properties. In this paper we are proposing a sample scheduling that ensures almost sure convergence of the SPG method for solving (1).

The second approach, often called the Surface Response Method, is again a two step procedure. It consists of a sequence of different SAA problems with different sample sizes that are approximately solved. After solving one SAA problem, the sample size is increased and the following SAA problem is again approximately solved. The main questions which crucially determine the efficiency of this procedure is the number of stages (that is, the number of different SAA problems to be solved) and the precision of each approximate solution. For further details one can see Pasupathy [27], Polak and Royset [28], Royset [29].

In this paper we are considering the constrained problem (1) assuming that the feasible set \( \Omega \) is easy to project on. Typical case would be a box or polyhedron. The Spectral Projected Gradient method (Birgin et al. [7, 8]) is a well known for its efficiency and simplicity. The SPG method is applied to the sequence of different SAA approximate problems (2) coupled with a suitable sample scheduling scheme that yields almost sure convergence. Thus, the principal aims of this paper are: (a) to derive an efficient sample scheduling that will yield an iterative sequence with almost sure convergence to a stationary point of the original problem through SAA approximation with \( N \rightarrow \infty , \) through efficient and computationally feasible optimization procedure for solving (2), and (b) to prove the almost sure convergence of the SPG method with an appropriate sample scheduling. It is important to notice that although the logic behind the sample scheduling is similar to the corresponding one in Krejić and Krklec [20], Krejić and Krklec Jerinkić [21], the conceptual change from a finite sample size in the SAA approximation in [20, 21] to an infinite sample introduces nontrivial changes in the sample scheduling. The same is true for theoretical consideration. Additional changes are due to the difference between unconstrained and constrained problems.

The paper is organized as follows. Some preliminaries are given in Sect. 2. The SPG algorithm and the appropriate scheduling algorithm are stated in Sect. 3, while the convergence results are presented in Sect. 4. Section 5 contains numerical experiments that confirm the theoretical results. Some conclusions are drawn in Sect. 6. The almost sure convergence will be abbreviated with a.s. in the rest of the paper.

2 Preliminaries

We will assume that the samples used for calculating the Sample Average Approximation at each iteration are available and taken cumulatively. So, for two positive integers N and M with \( N<M \) we define the sample sets

and the corresponding approximation of the objective function is

Clearly, \( N \in \mathbb {N} \) defines the sample set \( {\mathcal N} \) and vice versa. We will use both notations in the sequel.

The following set of assumptions makes the problem well defined and allows us to work with the function \( f_{\mathcal N}\) and its gradient.

Assumption A1

The set \( \Omega \subset \mathbb {R}^n\) is compact and convex.

Assumption A2

For any \( \xi \) from \(\left( \mathcal {A},\mathcal {F},\mathcal {P}\right) \) there holds \( F(\cdot ,\xi ) \in C^2(\Omega _e), \) where \( \Omega \subset \Omega _e \subset \mathbb {R}^n\) and \( \Omega _e \) is open and bounded. Furthermore, F and \(\nabla F\) are dominated by an integrable functions and \( E[\nabla F(x,\xi )] = \nabla E[F(x,\xi )] \) holds.

Assumption A3

The sample set \( \{\xi ^1,\xi ^2,\ldots \} \) is i.i.d.

The Assumptions A1–A2 imply that \( F(x,\xi )\) and \( \nabla F(x,\xi )\) are bounded on \(\Omega \) since they are continuous and the feasible set is bounded. The Assumption A2 states that F and \( \nabla F \) are dominated by integrable functions. In other words we assume that there exist functions \( K_1 (\xi ) \) and \( K_2(\xi ) \) such that for \( x \in \Omega \)

and such that \( E(K_1)< \infty , \; E(K_2)< \infty . \) Moreover, the second part of the Assumption A2 together with A3 implies that f is finite valued and continuous on any compact set (Shapiro et al. [30], Theorem 7.48) which implies that f is bounded on \(\Omega \). The same is true for the gradient, (Shapiro et al. [30], Theorem 7.52)

Both \( f_{\mathcal N}(x) \) and \( \nabla f_{\mathcal N}(x) \) are bounded and uniformly continuous on the compact set \( \Omega \) under the stated assumptions. The Assumptions A1–A3 together also imply that the SAA gradients uniformly converge to the true gradient value with probability 1, that is,

Let us now define the following two functions that will be used later on to define the sample update. Roughly speaking, the first one measures the quality of approximation \( f_{\mathcal N} (x)\approx f(x)\). This function is used in the Algorithms and does not necessarily represent the true (theoretically sound) error of the approximation. For example, it can be defined trivially as \(\nu (x,N)=1/N\).

Assumption A4

Assume that \( \nu : \mathbb {R}^n\times \mathbb {N} \rightarrow \mathbb {R}_+ \) is such that for any finite valued N there exists \( \nu _N \) such that

The error function \( \nu (x_k,N_k) \) is used to define the new sample size \( N_{k+1} \) such that the approximation error is well balanced with the decrease of \( f_{{\mathcal N}_k}. \) The decrease is denoted by \( dm_k \) and it approximates the difference \( f_{{\mathcal N}_k}(x_k) - f_{{\mathcal N}_k}(x_{k+1}). \)

Assumption A5

Assume that \( \gamma : \mathbb {N} \rightarrow (0,1) \) is such that \(\gamma \) is increasing function of N and

One obvious possibility is to define \( \gamma (N) = \exp (-1/N). \)

Notation For a given sample set \( {\mathcal N}_k \) and \( x_k \in \mathbb {R}^n\) we denote \( g_k = \nabla f_{{\mathcal N}_k}(x_k). \) The orthogonal projection on \( \Omega \) is denoted by \( P_{\Omega }(\cdot ), \) that is,

where the norm is assumed to be Euclidean.

3 Algorithms

The iterative method we consider is defined by Algorithms 1, 2 below. The main algorithm is Algorithm 1 which defines a new iteration using the spectral projected gradient method with nonmonotone line search, for a given sample size \( N_k. \) The sample size is updated through Algorithm 2. Two sequences, \( \{N_k\} \) and \( \{N_k^{\min }\} \) are defined, with \( N_k \) being the actual sample size and \( N_{k}^{\min } \) the lower bound of the sample size. The nonmonotone line search is defined by a sequence \( \{\varepsilon _k\} \) such that

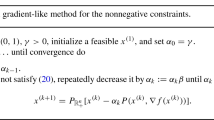

Algorithm 1

Given \( N_0 = N_0^{\min }, x_0 \in \Omega , 0< \alpha _{\min } < \alpha _{\max }, \alpha _0 \in [\alpha _{\min },\alpha _{\max }], \beta , \eta \in (0,1), \{\varepsilon _k\}. \) Set \( k= 0. \)

-

Step 1.

Test for stationarity. If

$$\begin{aligned} \Vert P_{\Omega }(x_k - g_k) - x_k\Vert = 0 \end{aligned}$$increase \( N_k = N_k + 1\) until \( \Vert P_{\Omega }(x_k - g_k) - x_k\Vert \ne 0. \) Set \( N_k^{\min } = N_k. \)

-

Step 2.

Compute the search direction

$$\begin{aligned} p_k = P_{\Omega }(x_k - \alpha _k g_k) - x_k. \end{aligned}$$ -

Step 3.

Find the smallest nonnegative integer j such that \( \lambda _k = \beta ^j \) satisfies

$$\begin{aligned} f_{{\mathcal N}_k}(x_k + \lambda _k p_k) \le f_{{\mathcal N}_k}(x_k) + \eta \lambda _k p_k^Tg_k + \varepsilon _k. \end{aligned}$$Define \(s_{k}= \lambda _k p_k\) and set \( x_{k+1} = x_k + s_k\).

-

Step 4.

Update \( {\mathcal N}_{k+1}\) within Algorithm 2. Set \( {\mathcal I}_k = {\mathcal N}_{k+1} \cap {\mathcal N}_k \) and \( y_k = \nabla f_{{\mathcal I}_k}(x_{k+1}) - \nabla f_{{\mathcal I}_k}(x_{k}). \)

-

Step 5.

Compute \( b_k = s_k^T y_k \) and \( a_k = s_k^T s_k \) and set

$$\begin{aligned} \alpha _{k+1} = \min \{\alpha _{\max },\max \{\alpha _{\min },a_k/b_k\}\}. \end{aligned}$$ -

Step 6.

Set \( k= k+1 \) and go to Step 1.

Before we state Algorithm 2, let us comment on the algorithm above. First, notice that the sequence of iterates \(\{x_{k}\}_{k \in \mathbb {N}}\) remains in the feasible set \(\Omega \). This is a consequence of \(\Omega \) being convex and compact and of definition of a search direction—the projection is performed only once at each iteration and the line search is performed within \(\Omega \).

As we already mentioned, the progress made in each iteration is measured by the decrease measure \( dm_k. \) Let us define

Notice that Step 1 of the previously stated algorithm ensures that \(dm_{k}\) used in Step 4 of Algorithm 2, is strictly positive assuming that there is no infinite loop in Step 1. The possibility of an infinite loop is discussed below Lemma 3.1. In all other cases the strictly positive \( dm_k \) comes from the fact that \(p_k^Tg_k=0\) implies \( \Vert P_{\Omega }(x_k - g_k) - x_k\Vert = 0 \) according to Lemma 3.1 from Birgin et al. [7]. For the sake of completeness we state this important result below.

Lemma 3.1

[7] Define \(g_{t}(x)=P_{\Omega }(x - t \nabla f(x)) - x\). For all \(x \in \Omega \), \(t \in (0,\alpha _{max}]\),

-

(i)

\(\nabla ^{T} f(x) g_{t}(x)\le -\frac{1}{t} \Vert g_{t}(x)\Vert ^2 \le -\frac{1}{\alpha _{max}} \Vert g_{t}(x)\Vert ^2\)

-

(ii)

The vector \(g_{t}(x^{*})\) vanishes if and only if \(x^{*}\) is a stationary point for (1).

Notice that the parameter \(\alpha _{max}\) stated in the previous lemma coincides with the upper bound of the spectral coefficient. Parameters \(\alpha _{min}\) and \(\alpha _{max}\) represent safeguard parameters which ensure that the spectral coefficient remains uniformly bounded away from zero as well as from infinity. One common choice is to set \(\alpha _{min}\) to \(10^{-4},10^{-8}\) or even \(10^{-30}\). On the other hand, the upper bound is usually set on \(10^{4},10^{8}\) or even \(10^{30}\). Now, if we assume \(\alpha _{max}\ge 1\) (which is usually true), this lemma implies that \(x^{*}\) is a stationary point for (1) if

Algorithm 1 implies that either there exists \( x_k \) such that

i.e., Step 1 is performed infinitely many times, or the algorithm generates an infinite sequence \( \{x_k\}. \) If \( x_k \) is such that (6) holds, then (3) implies

and therefore, \( \Vert P(x_k - \nabla f(x_k)) - x_k\Vert = 0 \), that is, \( x_k \) is a stationary point for (1). Given that in actual implementation the algorithm will eventually stop at some finite N, the stationary point \( x_k \) which satisfies (6) will be detected. So, from now on we assume that (6) does not occur.

As \( \varepsilon _k> 0 \), Step 3 necessarily terminates with a finite j for any search direction. So this step is well defined. Nevertheless, the search direction \( p_k \) calculated at Step 3 is descent direction for \( f_{{\mathcal N}_k} \) at \( x_k, \) as stated in Lemma 3.1 above. The additional term \( \varepsilon _k \) allows more freedom in the choice of step length and allows the nonmonotonicity that ensures that the good properties of spectral projected gradient method are preserved, see Birgin et al. [7, 8], Li and Fukushima [26]. It is important to state here that any other nonmonotone rule like the rules considered in [7], Grippo et al. [15, 16], Krejić and Krklec Jerinkić [21], Zhang and Hager [34] could be applied here, but the analysis would be a bit more cumbersome technically than with the rule we employ at Step 3.

The spectral coefficient \(\alpha _{k}\) is calculated using the intersection of two consecutive samples. It is easy to notice that \( I_k = \min \{N_k, N_{k+1}\} \) so both gradient values, \( \nabla f_{{\mathcal I}_k}(x_{k+1}) \) and \( \nabla f_{{\mathcal I}_k}(x_{k}), \) are available and no additional gradient values are needed for the calculation of the spectral coefficient. One could easily state

instead of \( y_k \) defined in Step 4 of the algorithm. In fact, the question of the best sample for calculation of \(y_k \) is still unsolved and there are many discussions in the literature, see Mokhtari and Ribeiro [24], Byrd et al. [9,10,11], Krejić et al. [19]. In the deterministic case \( y_k \) satisfies

As we are dealing with the expectation with respect to \( \xi , \) the variance of \( \xi \) and the corresponding variances of f and its derivatives, play an important role in the last equation. Furthermore, \( y_k \) defines the spectral coefficient \( a_k/b_k \), which is the Rayleigh quotient relative to the average Hessian matrix \( \int _0^1 \nabla ^2 f(x_k + ts_k) dt\), see Birgin et al. [8]. The eigenset of \( \nabla ^2 f_{\mathcal N} \) is clearly influenced by the sample set \( {\mathcal N} \), so the definition of \( y_k \) should reflect this fact as well. The choice we made here is a consequence of empirical experience that yielded a strong preference towards the definition of \( y_k \) stated in Step 4.

Recall that \( dm_k = - \lambda _k p_k^Tg_k \) and \( dm_k > 0. \) The algorithm for the sample size update is as follows.

Algorithm 2

Given \( dm_k, N_{k}, N_{k}^{min}, x_k, x_{k+1}. \)

-

Step 1.

Candidate \( N_k^+. \)

Set \( N = \max \{N_k,N_k^{\min }\} \)

-

Step 1.1

If \( dm_k = \nu (x_{k},N_{k}) \) set \( N_k^+ = N. \)

-

Step 1.2

If \( dm_k > \nu (x_{k},N_{k}) \)

While \( dm_k> \nu (x_k,N) \text{ and } N > N_k^{\min } \) set

$$\begin{aligned} N= N-1. \end{aligned}$$End(While).

Set \( N_k^+ = N. \)

-

Step 1.3

If \( dm_k < \nu (x_{k},N_{k}) \)

While \( dm_k < \nu (x_k,N) \) set

$$\begin{aligned} N= N+1. \end{aligned}$$End(While).

Set \( N_k^+ = N. \)

-

Step 1.1

-

Step 2.

Update of \( N_{k+1}. \)

If \( N_k^+ < N_k \) and

$$\begin{aligned} \rho _k = \left| \frac{f_{{\mathcal N}_k^+}(x_{k}) - f_{{\mathcal N}_k^+}(x_{k+1})}{f_{{\mathcal N}_k}(x_k) - f_{{\mathcal N}_k}(x_{k+1})} - 1\right| \ge \frac{N_k - N_k^+}{N_k}, \end{aligned}$$(7)set \( N_{k+1} = N_k. \) Otherwise set \( N_{k+1} = N_k^+. \)

-

Step 3.

Update of \( N_k^{\min }. \)

-

3.1

If \( N_{k+1} = N_k \) set \( N_{k+1}^{\min } = N_k^{\min }. \)

-

3.2

If \( N_{k+1} \ne N_{k} \) update \( N_k^{\min } \) by the following rule.

If \( N_{k+1} \) has been used in some of the previous iterations and

$$\begin{aligned} \frac{f_{{\mathcal N}_{k+1}}(x_{h(k)}) - f_{{\mathcal N}_{k+1}}(x_{k+1})}{k+1 - h(k)} \le \gamma (N_{k+1}) \nu (x_{k+1},N_{k+1}), \end{aligned}$$(8)where h(k) is the iteration in which we started to use \( N_{k+1} \) for the last time, set \( N_{k+1}^{\min } > N_{k}^{\min }. \)

Otherwise set \( N_{k+1}^{\min } = N_k^{\min }. \)

-

3.1

A few words on Algorithm 2 are due as well. The main objective of the variable sample scheme is to ensure some balance between the computational costs and precision of the Sample Average Approximation. The principal idea is to increase or decrease the sample size according to the progress made in decreasing the objective function. Such approach ensures that we work with low precision whenever possible, saving the computational effort if possible. At the same time the presented scheme ensures that the sample size increases to infinity and allows us to prove the almost sure convergence, as we will demonstrate later on.

The main ingredients of Algorithm 2 are the decrease in the objective function measured by \( dm_k \) and the precision of the sample average approximation measured by \( \nu (x_k,N_k). \) The sample size is increased or decreased in such a way that these two measures trail each other. To achieve a balance between \( dm_k \) and \( \nu (x_k, N) \) we are in fact constructing two sample size sequences, \( N_k \) and \( N_k^{\min }. \) The sample size is defined within Step 1–2. In Step 1 the candidate \( N_k^+ \) is determined to preserve the balance between \( dm_k \) and \( \nu (x_k,N). \) Notice that the candidate \( N_k^+ \) is constructed in such way that provides that the lower bound restriction, \(N_{k}^{+} \ge N_{k}^{min}\) holds. This follows from the fact that the starting trial sample size is \( N = \max \{N_k,N_k^{\min }\} \) and, according to Step 1.2, it is decreased only if the lower bound allows the decrease. If \( N_k^+ < N_k \), that is, if a decrease of the sample size is proposed, we perform an additional check stated in Step 2 to avoid possibly unproductive decreases. The parameter \(\rho _{k}\) is calculated only if we have a possible sample decrease as it measures similarity between the two models, represented by \(f_{{\mathcal N}_k^+}\) and \( f_{{\mathcal N}_k^+},\) through the decrease obtained at the current iteration. If these two model functions are close to each other, the parameter \(\rho _{k}\) is relatively small (close to zero) and we chose to work with the cheaper model function, that is, we set \(N_{k+1}=N_{k}^{+}\). Otherwise, we do not allow the decrease in the sample size since the model functions are too different and taking the smaller sample size may lead us in a wrong direction. In that case, we chose the larger sample size, that is, we chose the objective function estimate which we believe is closer to the final objective f. The quantity on the right hand side of (7) is motivated by our previous numerical testings. Basically, it encourages larger decreases of the sample size since they were usually beneficial for the overall performance of the algorithm. Moreover, notice that \(N_{k+1} \ge N_{k}^{min}\). If \(N_{k+1}=N_{k}^{+}\), this is obvious since we saw that \(N_{k}^{+} \ge N_{k}^{min}\). On the other hand, \(N_{k+1} = N_{k}\), but this may happen only if \(N_{k} > N_{k}^{+}\). Therefore, the lower bound condition is satisfied.

The second sequence \( N_k^{\min } \) is updated in Step 3 and it is clearly nondecreasing. It represents the smallest precision allowed at each stage of the optimization process and its role is to eventually push \( N_k \) towards infinity, even with the oscillations of \( N_k \) that are permitted by the algorithm. Algorithm 2 is essentially inspired by the variable sample scheme for solving the so called SAA problem with finite N, as presented in Krejić and Krklec Jerinkić [20, 21] for unconstrained problems. The first idea of this kind is developed in Bastin [2], Bastin et al. [3, 4] for the trust region approach and SAA methods. Although this Step is crucial for the convergence analysis, the lower bound increase is rarely activated according to our experience. The main idea in Step 3 is to track the progress related to various precision levels, that is, to various sample sizes in our case. The left hand side in the inequality (8) represents the average decrease between the last iteration at which we used the sample size and the new iteration with the same sample size. If this decrease is relatively modest, we assume that the oscillations were not that beneficial and increase the lower bound for the sample size, that is, the minimal allowed precision. The right hand side of the inequality (8) depends on the sample size as we do not want to treat all precision levels equally—we want to be more rigorous when the final objective function is approached. This Algorithm is conceptually based on [20] but, as we are now interested in pushing the sample size to the infinity, the steps are adjusted to allow an infinite increase of the sample size.

4 Convergence theory

The convergence results developed in this section show that the proposed method generates an iterative sequence that converges a.s. towards a solution of (1). Under the assumptions stated in Sect. 2 the first theorem states that the sample size generated by Algorithm 2 goes to infinity. After that, we prove a.s. convergence with an additional, but standard assumption regarding the error of the SAA approximation.

Remark 1

The sequence generated by Algorithm 1 is obviously random and thus all the relevant quantities such as \(N_k\) depend on the particular sample realization \(\omega \). However, the subsequent result shows that the sample size tends to infinity for any given \(\omega \). Thus, the result holds surely and not just almost surely. In order to avoid cumbersome notation, we will omit \(\omega \) in the sequel and write, for example, \(\{N_{k}\}_{k \in \mathbb {N}}\) instead of \(\{N_{k}(\omega )\}_{k \in \mathbb {N}}\) and subsequence \(K_1\) instead of subsequence \(K_1(\omega )\).

Theorem 4.1

Assume that A1–A5 hold. Then \( \lim _{k \rightarrow \infty } N_k = \infty . \)

Proof

First, let us show that the sequence \(\{N_{k}\}_{k \in \mathbb {N}}\) can not become stationary. Assume that there are \(\bar{N}_{0}\) and \(\bar{k}_{0}\) such that

Then, for each \(k \ge \bar{k}_{0}\) we have

by Step 3 of Algorithm 1. Thus

for arbitrary \( m \in \mathbb {N}. \) Recall that the sequence of iterates remains in \(\Omega \) and therefore \( f_{\bar{\mathcal N}_{0}} (x_{\bar{k}_{0}+m})\) is bounded from below for every m. Moreover, as \( 0<\sum _{k=0}^{\infty } \varepsilon _k <\infty \) and by rearranging the previous inequality we conclude that \(\sum _{j=0}^{\infty } dm_{\bar{k}_{0}+j}\) is finite. Therefore, using the fact that \(dm_{k}\) is nonnegative, we obtain

On the other hand, as a consequence of the Assumption A4, for each \(k \ge \bar{k}_{0}\) we have

so there exists \( \bar{k}_{1} > \bar{k}_{0} \) such that

However, Step 1.3 of Algorithm 2 implies that \( N_{\bar{k}_{1}+1} > N_{\bar{k}_{1}}=\bar{N}_{0}, \) which is a contradiction with (9).

Algorithm 2 ensures that \( N_{k+1} \ge N_{k}^{\min }. \) So if \( \lim _{k \rightarrow \infty } N_k^{\min } = \infty \) we obviously have the statement. Thus, in the sequel we consider the opposite case and show that the statement is true in that case as well.

Let us now assume that \( N_k^{\min } = N_{\max } \) for \( k \ge \bar{k}_{2}. \) Notice that the lower bound \( N_k^{\min }\) is nondecreasing and it can be increased only throughout Step 1 of Algorithm 1 or in Step 3.2 of Algorithm 2. Since \( N_k^{\min } \) is assumed to be bounded, i.e., \( N_k^{\min } \le N_{\max } \), Step 1 of Algorithm 1 can happen only a finitely many times. Therefore, without loss of generality we may exclude this scenario. On the other hand, in general, there are two possible outcomes of Step 3 of Algorithm 2, \( N_{k+1}^{\min } = N_k^{\min } \) or \( N_{k+1}^{\min } > N_{k}^{\min }. \) The second outcome is obviously not possible for \( k \ge \bar{k}_{2} \), so we must have \( N_{k+1}^{\min } = N_k^{\min }, k \ge \bar{k}_{2}. \) This further implies that we have one of the following three possibilities for each \( k \ge \bar{k}_{2}.\)

-

M1

\( N_{k+1} = N_k \)

-

M2

\( N_{k+1} \ne N_k \) and \( N_{k+1} \) has not been used before

-

M3

\( N_{k+1} \ne N_k, \)\( N_{k+1} \) has been used before and

$$\begin{aligned} \frac{f_{{\mathcal N}_{k+1}}(x_{h(k)}) - f_{{\mathcal N}_{k+1}}(x_{k+1})}{k+1 - h(k)} \ge \gamma (N_{k+1}) \nu (x_{k+1},N_{k+1}) \end{aligned}$$

Assume that the statement of this theorem is not true so there exists an infinite subsequence of \( \{N_k\}_{k \in \mathbb {N}} \) such that its elements are bounded. Then there must exist a finite sample size \( \bar{N}_{1} \) which is visited infinitely many times, that is, there exists an infinite subsequence \( K_{0} = \{k \ge \bar{k}_{2}: N_{k+1} = \bar{N}_{1}\}, \) for some \( \bar{N}_{1}< \infty . \) Since we proved that the sequence \(\{N_{k}\}\) can not be stationary, there exists an infinite subsequence \(K_{1} \subset K_{0}\) such that M1 does not hold for \(k \in K_{1}\). More precisely, there must exist an infinite subsequence of iterations in which the sample size is changed on \(\bar{N}_{1}\), that is, there exists \( K_1 = \{k \ge \bar{k}_{2}: N_{k} \ne N_{k+1} = \bar{N}_{1}\}\). Moreover, by excluding the first member of the sequence \(K_1\) we obtain an infinite subsequence \(K_2 \subset K_1\) that makes the scenario M2 impossible as well since the sample size \( \bar{N}_{1} \) is obviously used before during the optimization process. Therefore, for every \(k \in K_2\)

Let \(K_3=\{k+1, k \in K_2\}. \) Notice that this corresponds to the subsequence of iterations in which the sample size \( \bar{N}_{1} \) is revisited. Therefore, iterates \(x_{h(k)} \) and \(x_{k+1}\) from the previous inequality must be a neighboring iterates of the sequence \(\{x_{k}\}_{k \in K_{3}}\). Also, notice that \( k+1 - h(k) > 1. \) Denoting \(\{x_{k}\}_{k \in K_{3}}:=\{x_{k_{j}}\}_{j \in \mathbb {N}}\) we obtain that the following holds for every \(j \in \mathbb {N}\)

Given that, according to Assumptions A4–A5,

(10) implies that \( f_{\bar{\mathcal N}_{1}} \) is unbounded on \(\Omega \) which is clearly wrong. Thus the statement is proved. \(\square \)

Let us now proceed to prove the almost sure convergence results for Spectral Projected Gradient method defined in Algorithm 1. Recall that \(g_{k}=\nabla f_{\mathcal N_{k}}(x_{k})\) and \(p_{k}\) is a search direction.

Lemma 4.1

Assume that A1–A5 hold and let \( K \subset \mathbb {N} \) be a subset of iterations such that

Then \( x^* \) is a stationary point for (1) a.s.

Proof

Given that the search directions \( p_k \) converge to zero through K, we have

The sequence of spectral coefficients \( \alpha _k \) is bounded and thus there exists \( K_1 \subset K \) such that \( \lim _{k \in K_1} \alpha _k = \alpha ^* \in [\alpha _{\min },\alpha _{\max }]. \)

As \( N_k \rightarrow \infty \) we have

Thus

Now, the statement follows by Lemma 3.1. \(\square \)

In order to prove the main results, we make an additional assumption.

Assumption A6

Let \(\omega \) be an arbitrary sample path generated by Algorithm 1. Assume that \( e: \mathbb {R}^n\times \mathbb {N} \rightarrow \mathbb {R}_+ \) is a function with the following properties

Notice that (12) is assumed with probability 1 while (11) is without any random elements and thus holds surely. The function e is deterministic and the only property that holds with probability (one) is that it bounds the error that depends on stochastic quantity \(f_{\mathcal N}(x)\). Although this assumption seems restrictive, the results presented in Homem-de-Mello [17] show that such functions exist provided, for example, that the variance of \(F(x,\xi )\) is uniformly bounded on \(\Omega \). This is true for the class of problems where the Gaussian noise is added to some smooth deterministic function to obtain \(F(x,\xi )\), or, more generally, if \(F(x,\xi )=h_1(x)+h_2(x) \xi \) where \(h_1\) and \(h_2\) are smooth functions and the variance of \(\xi \) is bounded. In that case, one possible choice is

where C(x) is related to the variance of \(F(x,\xi )\). It is important to notice that in general, as pointed out by one of the Reviewers, C(x) might be infinite and thus the condition (11) might be violated.

Given that Theorem 4.1 states that \( N_k \) grows to infinity, it follows that the error bound (13) derived in Proposition 3.5 [17] for cumulative samples remains a relevant choice, at least for theoretical purposes. However, the log bound is considered as too conservative from the practical point of view and it is often approximated with a bound of sample variance type (Bastin [2]). More precisely, the bound that is frequently used is \(z \frac{ \hat{\sigma }(x_{k},N_{k})}{ \sqrt{N_k}}\) where z is a suitable quantile and \(\hat{\sigma }^{2}(x_{k},N_{k})\) is a sample variance of F, that is,

We use this bound in implementation in order to get numerical results. We also set \(\nu (x,N)=e(x,N).\)

The main convergence results are given in Theorems 4.2 and 4.3 below. The first theorem claims that there exists an accumulation point which is stationary. In the second theorem we prove a stronger result, that each strictly strong accumulation point is stationary. Both of the results hold a.s. To the best of our knowledge, a stronger result (that each accumulation point is stationary a.s.) can not be attained under this setup. However, according to the analysis presented in this paper, one can achieve the result of every accumulation point being stationary a.s. if the sample size is increased fast enough. We state this result in Theorem 4.4 for completeness.

Theorem 4.2

Assume that A1–A6 hold. For almost every sequence \(\{x_k\}_{k \in \mathbb {N}} \) generated by Algorithm 1, there exists an accumulation point of \(\{x_k\}_{k \in \mathbb {N}} \) which is stationary for (1).

Proof

Let us demonstrate that there exists at least one subsequence of \(\{p_k\}\) which converges to zero.

Suppose that there exists \(p>0\) such that for every \(k \in \mathbb {N}\)

Then, Lemma 3.1 implies the following inequalities

and

Suppose that \(\lambda _{k}\ge \bar{\lambda }>0, \; k \in \mathbb {N}\). In that case

Define

Moreover, given that \(\{x_{k}\}_{k \in \mathbb {N}} \subset \Omega \) and \( N_k \rightarrow \infty \), according to Assumption A6 we have that for all k large enough (more precisely, for \(k \ge \bar{k}_{1}=\bar{k}_{1}(\omega )\) where \(\bar{k}_{1}\) is such that \(N_k \ge N^0 (\omega )\) for every \(k \ge k_1\)) there holds

and (11) implies

Thus, for every \(k \ge \bar{k}_{1}\) we have a.s.

Let \(q\in (0,\eta \bar{d})\) be an arbitrary constant. Then, (18) implies that \(\tilde{e}_{N_{k}}<(\eta \bar{d}-q)/2\) for every k large enough, that is, there exists \(\bar{k}_{2}=\bar{k}_{2}(\omega )\) such that

and for every \(k\ge \bar{k}(\omega )=\max \{\bar{k}_{1},\bar{k}_{2}\}\)

which furthermore implies that a.s.

Letting s tend to infinity we obtain that f is unbounded which is not possible.

Now, suppose that there is a subsequence \(K_{1}(\omega ) \subseteq \{\bar{k}+1,\bar{k}+2,\ldots \}\) such that

Since the initial step size in the line search is 1 at every iteration and the backtracking with parameter \(\beta \) is employed, for every \(k \in K_1\) large enough there must be at least one unsuccessful trial in Step 3 of Algorithm 1. Let us denote by \( \lambda '_k\) the trial preceding the successful trial \( \lambda _k\), that is, let \( \lambda _k=\beta \lambda '_k\). In that case the line search rule implies that for every \( k \in K_1 \) there exists \( \lambda '_k = \lambda _k/\beta \) such that \( \lim _{k \in K_{1}}\lambda '_k = 0 \) and

As \( \varepsilon _k > 0 \) we have

The Mean Value Theorem implies the existence of \( t_k \in (0,1) \) such that

Given that \( \{p_k\}\) and \(\{x_{k}\}\) are bounded, there exists \(K_2 (\omega )\subseteq K_{1}\) such that \( \lim _{k \in K_2} (x_{k},p_{k})=(x^{*},p^{*})\) and

Therefore, taking limits on both sides of (20) we get

The condition \( \eta \in (0,1) \) and (21) together yield

On the other hand, taking limit for \( k \in K_{2} \) in (15) we obtain

which is clearly in contradiction with (22). Therefore, we conclude that (14) is wrong and there exists a subsequence of \(\{p_{k}\}_{k \in \mathbb {N}}\) that converges to zero, that is, there exists \(K_3 (\omega ) \subseteq \mathbb {N}\) such that \( \lim _{k \in K_3} p_{k}=0\). Again, \(\{x_{k}\}\) is bounded and there must exist \(K_4 (\omega ) \subseteq K_3\) such that

Finally, Lemma 4.1 implies that \(\tilde{x}\) is a stationary point for (1) a.s. and the statement follows.\(\square \)

In order to show the stronger result, we state the following definition of strictly strong accumulation point, Yan and Mukai [35].

Definition 4.1

[35] A point \(x^{*}\) is called strictly strong accumulation point of the sequence \(\{x_k\}_{k \in \mathbb {N}} \) if there exists a subsequence \(K\subseteq \mathbb {N}\) and a constant \(b \in \mathbb {N}\) such that \(\lim _{k_i \in K}x_{k_i}=x^{*}\) and \( k_{i+1} - k_i \le b \) for any two consecutive elements \( k_{i}, k_{i+1} \in K. \)

Theorem 4.3

Assume that A1–A6 hold. For almost every sequence \(\{x_k\}_{k \in \mathbb {N}} \) generated by Algorithm 1, every strictly strong accumulation point of the sequence \(\{x_k\}_{k \in \mathbb {N}} \) is stationary for (1).

Proof

Let \(x^{*}\) be an arbitrary strictly strong accumulation point of the sequence \(\{x_k\}_{k \in \mathbb {N}} \). That means that there is a subsequence \(K\subseteq \mathbb {N}\) and a positive constant b such that \(\lim _{k \in K}x_{k}=x^{*}\) and \(k_{i+1}-k_{i} \le b\) for every \(i \in \mathbb {N}\) where \(\{x_k\}_{k \in K} := \{x_{k_{i}}\}_{i \in \mathbb {N}} \).

Suppose that \(dm_{k}\ge \bar{d}>0\) for every \(k \in K\). As shown in the proof of Theorem 4.2, for every \(k \ge \bar{k}(\omega )\) we have

Without loss of generality, we can assume that \(K \subseteq \{\bar{k}+1,\bar{k}+2,\ldots \}\). Define \(s_{i}:=k_{i+1} - k_i\). Then it holds for every \(i \in \mathbb {N}\) that

Since \(dm_{k}\ge 0\) for every k, \(dm_{k_{i}}\ge \bar{d}\) and \(s_{i}\le b\), we obtain that that for every \(i \in \mathbb {N}\)

Now, letting i tend to infinity and using the fact that

we obtain

So, we conclude that a.s. there exists \( K_1 \subseteq K \) such that

Moreover, Lemma 3.1 implies the descent property of \(p_{k}\)

Therefore, if \(\lambda _{k}\ge \bar{\lambda }>0\) for all \(k \in K_1\) we obtain \(\lim _{k \in K_1} p_k = 0\) and the statement follows by Lemma 4.1. Else, suppose that there exists \(K_{2} \subseteq K_{1}\) such that

Using the Mean Value Theorem together with the descent property of the search direction and following the proof of Theorem 4.2, we obtain the existence of \( K_3 \subseteq K_2 \) such that \(\lim _{k \in K_3} p_k = 0,\) which completes the proof. \(\square \)

Remark 2

A few words are due here in order to relate the result of the above theorem with the existing ones. Clearly, the definition of strictly strong accumulation point is not common in the deterministic optimization. However it appears to be necessary in the context of almost sure convergence if one wants to avoid conditions on growth of the sample sizes. The result we obtained here is analogous to the results for unconstrained case presented in Yan and Mukai [35]. Comparing with the results presented in Wardi [33] there is a clear trade-off, either one obtains weaker convergence, in upper mid density, or imposes the assumption of strictly strong accumulation points. Proving the convergence in upper density for the method we consider here seems to be possible although technically demanding. Another possibility would be to assume that \( \lim _{k \rightarrow \infty } \lambda _k p_k = 0 \) as in Shapiro et al. [30]. In that case the corresponding result, convergence w.p.1, follows along the same ideas as in [33]. Given that imposing the growth condition might cause very rapid increase in \( N_k \) and thus make the optimization procedure more expensive we believe that the conditions of Theorem 4.3 represent a good balance between theoretical and practical issues. Nevertheless, we state the following analysis for completeness.

Theorem 4.4

Assume that A1–A6 hold. For almost every sequence \(\{x_k\}_{k \in \mathbb {N}} \) generated by Algorithm 1, every accumulation point of the sequence \(\{x_k\}_{k \in \mathbb {N}} \) is stationary for (1) if the following holds

where \(\tilde{e}_{N_{k}}=\sup _{x\in \Omega } e(x,N_{k})\).

Proof

As in Theorem 4.3 we obtain that (24) holds and thus the following holds for every \(k \ge \bar{k}(\omega )\)

As f is bounded and \(2 \tilde{e}_{N_{j}} + \varepsilon _{j}\) is summable, we obtain that

Let \(x^{*}\) be an arbitrary accumulation point of the sequence \(\{x_k\}_{k \in \mathbb {N}} \), that is let \(K_{0}\) be a set of positive integers such that \(\lim _{k \in K_{0}} x_{k}=x^{*}\). Following the steps from the proof of Theorem 4.2 we conclude that the existence of \(K_{1}\subseteq K_{0}\) such that \(p_{k}\ge p>0\) for every \(k \in K_{1}\) is impossible. Therefore, \(\lim _{k \in K_{0}} p_{k}=0\) a.s. which together with Lemma 4.1 implies that \(x^{*}\) is a stationary point a.s. This completes the proof. \(\square \)

If we consider the bound (13), the Assumption (25) is true if we take \(N_{k}\ge e^{k}\) for instance. So, in order to obtain the stronger convergence result, one can use Algorithm 1 for finitely many iterations and then switch to exponential growth of the sample size. This kind of hybrid approach would combine adaptive sample update and the conservative exponential update which provides better theoretical results. It would be interesting to find out an optimal switching point i.e. an iteration \( k_0 \) or the sample size \( N_{k_0} \) after which the sample size should grow exponentially.

5 Numerical results

In this section we report preliminary numerical results. The test collection consists of 5 academic problems and the M/M/1 problem. The experiments are designed to investigate the efficiency of the variable sample size (VSS) scheme proposed in Algorithm 2 as well as the properties of Spectral Projected Gradient method in stochastic environment. Thus the VSS is compared with four other sample size schemes combined with the SPG method.

The setup for testing is defined as follows.

are satisfied for some k within at most \(10^7\) function evaluations. In other words, \(x_k\) is an approximate stationary point of \(\min _{x \in \Omega } f_{{\mathcal N}_k}(x)\) with the tolerance \( \epsilon _1 \) and the relative/absolute error estimate of an approximation \(f_{{\mathcal N}_k}(x_k) \approx f(x_k)\) is at most \(\epsilon _{2}\). The counting of function evaluations includes counting each gradient \(\nabla F\) evaluation as n evaluations of f. Since the error bound (13) is considered as too conservative for practical implementations (Bastin [2]), we employ the sample variance

and set

as the precision measure. Function \(\gamma \) defined in Assumption A5 is set to \( \gamma (N_{k}) = \exp (-1/N_{k}) \). The sequence \(\{\varepsilon _{k}\}_{k \in \mathbb {N}}\) is defined as

The rest of the parameters needed in Algorithm 1 are \(\beta =0.5\), \(\eta =10^{-4}\), \(\alpha _{min}=10^{-8}\) and \(\alpha _{max}=10^{8}\).

The proposed method terminates either because the number of function evaluations reaches \(10^7\) or because (26) is satisfied for some finite \(N_{k}\) and \(x_{k}\). Either way, it terminates with some finite sample size. Let us denote this sample size by \(N_{max}\). VSS method is compared with four other sample size update schemes referred to as HEUR1, HEUR2, HEUR3 and SAA. HEUR1 uses update \(N_{k+1}=\min \{\left[ 1.1 N_{k}\right] ,N_{max}\}\), HEUR2 takes \(N_{k+1}=\min \{\left[ e^k\right] ,N_{max}\}\), HEUR3 approximately solves the sequence of problems (2) where the sample size \(|\mathcal {N}|\) is increased by \(10\%\) until it reaches \(N_{max}\) and SAA takes \(N_{k}=N_{max}\) for all k. The initial sample size is \( N_0 = 3 \) for all tested problems and \(N_{max}\) heavily depends on the problem and the variance level. In order to make the comparison fair, the sample generated within VSS runs is used for the other tested schemes as well. The same is true for the starting point. We performed 10 independent runs for each tested problem. The question of suitable \( N_{\max } \) is far from simple and there is a number of paper that deals with this issues, for example Bayraksan and Morton [5], Homem-de-Mello and Bayraksan [6]. Given that the principal goal of this paper is not the quality of solution for a finite \( N_{\max } \) but the almost sure convergence for unbounded sample, we adopted this simple rule for \( N_{\max } \) in our tests.

The tested schemes HEUR1 and SAA have been used in our previous work where the sample size was finite and unconstrained optimization problems were considered. HEUR1 was motivated by Friedlander and Schmidt [13], although this is not the only paper that suggests this kind of scheduling sequence. Since the SPG method is linearly convergent, Pasupathy [27] also suggests the \(10\%\) growth of the sample size. However, [27] uses the so called diagonalization where a sequence of optimization problems is solved with the precision \(q_{k}=K/ \sqrt{Nk}\). We implemented this scheme (HEUR3) as well, with \(K=\epsilon _{1} \sqrt{N_{max}}\) to attain the same final precision, but the results were not good since it did not converge within \(10^7\) function evaluations in a vast majority of the tested problems. The remaining scheme HEUR2 was motivated by the result given in Theorem 4.4 and some other related results ([6] for instance) where the minimal growth rate for the sample size is given in order to provide stronger convergence result. Moreover, notice that VSS may exceed the final sample size within the optimization process which gives an advantage to the other tested schemes. Finally, one can always find a heuristic which will perform better on a particular problem than the scheme proposed in this paper. But the main advantage of VSS is its adaptive nature and inner mechanism which works for an arbitrary problem. Tuning the parameters is clearly problem dependent and a generic approach is tested here.

The test examples are defined as

where \(h: \mathbb {R}^{n} \rightarrow \mathbb {R}\). Two levels of variance are tested, \(\sigma ^2=0.1\) and \(\sigma ^2=1\). Regarding the constraints, two cases are considered with respect to the solution—if constraints are active at the solution then we denote the feasible set is denoted by \( \Omega _a, \) and if the constraints are inactive, the feasible set is \( \Omega _{ia}. \) The dimension of all examples is \(n=10\) and the feasible set is n-dimensional box of the form \( [l,u]^n\). Starting points are chosen randomly within a feasible set and the stopping criterion parameters are \(\epsilon _{1}=10^{-2}\) and \(\epsilon _{2}=0.05\).

Functions h are originally taken from Montaz Ali et al. [25]. We list the problems together with active/inactive case constraints, the range of the sample size \(N_{max}\) and the mean values \( \bar{N}_{\max } \) in all the runs.

-

P1

Exponential problem

$$\begin{aligned} F(x,\xi )= & {} e^{-0.5 \Vert \xi x \Vert ^{2}},\\ \Omega _a= & {} [0.3,0.5]^{10}, \; \Omega _{ia}=[-1,1]^{10}, \quad N_{max} \in [3,208], \; \bar{N}_{\max } =97. \end{aligned}$$ -

P2

Griewank problem

$$\begin{aligned} F(x,\xi )= & {} 1+\frac{1}{4000} \Vert \xi x\Vert ^{2}-\prod _{i=1}^{10} \cos \left( \frac{\xi x_{i} }{\sqrt{i}}\right) ,\\ \Omega _a= & {} [100,200]^{10}, \; \Omega _{ia}=[-600,600]^{10}, \quad N_{max} \in [3,25731], \; \bar{N}_{\max } =2800. \end{aligned}$$ -

P3

Neumaier 3 problem

$$\begin{aligned} F(x,\xi )= & {} \sum _{i=1}^{10} ( \xi x_{i}-1)^{2}-\sum _{i=2}^{10}\xi x_{i} \xi x_{i-1},\\ \Omega _a= & {} [0,10]^{10}, \; \Omega _{ia}=[-100,100]^{10}, \quad N_{max} \in [3,3274], \; \bar{N}_{\max } = 1301. \end{aligned}$$ -

P4

Salomon problem

$$\begin{aligned} F(x,\xi )= & {} 1-\cos (2 \pi \Vert \xi x \Vert ^{2})+0.1 \Vert \xi x \Vert ^{2},\\ \Omega _a= & {} [10,50]^{10}, \; \Omega _{ia}=[-100,100]^{10}, \quad N_{max} \in [3,3651], \; \bar{N}_{\max } = 1312. \end{aligned}$$ -

P5

Sinusoidal problem

$$\begin{aligned} F(x,\xi )= & {} -2.5 \prod _{i=1}^{10}\sin ( \xi x_{i}-30)-\prod _{i=1}^{10}\sin (5 ( \xi x_{i}-30)),\\ \Omega _a= & {} [0,2]^{10}, \; \Omega _{ia}=[0,180]^{10}, \quad N_{max} \in [3,1440], \; \bar{N}_{\max } = 205. \end{aligned}$$

M/M/1 queueing problem is often used in stochastic optimization for illustration of real world problems, [1, 18]. This is a parameter estimation problem. It aims to estimate a distribution parameter that minimizes the objective function which takes into account the expected number of customers in a queue and the expected service time given by the mean value of the Exponential distribution. The goal is to minimize the expected time that a client spends in a queue (waiting time and service time) taking into account the (nonzero) costs associated to (nonzero) waiting and service times The solution mainly serves for finding the optimal service time in a sense that it gives an information weather the service time should be improved. In this approach it is important to notice that some balance between the server cost with the above stated objective should exist. This problem can be solved analytically and for that particular reason it makes a good example for evaluating the stochastic procedures. The expected steady-state number of customers in a queue with arrival rate 1 and mean service time x is denoted by L(x). More detailed description of queueing-type problems, as well as other possible approaches can be found in Andradottir [1] or Law [23] for example.

We consider a special two dimensional case (two queues) where the cost induced by the service improvement is addressed within the following objective function

where \(L(x_{i})\) is mathematical expectation of a random variable that follows Geometrical distribution with parameter \(1-x_{i}\), that is,

Therefore, \(L(x_{i})=x_{i}/(1-x_{i})\) and the analytical solution of the problem is known: \(x^{*}=(0.787,0.787)^{T}\) with \(f(x^{*})=26.0764\). Geometrically distributed random variable can be generated with Uniform distribution as

We define function F from (1) by

As suggested in Kao et al. [18], finite differences (Fu [14]) are employed to approximate the gradient. More precisely \(g(x, \xi ) \approx \nabla F(x,\xi )\) with \(g(x, \xi )=(g_{1}(x, \xi ),g_{2}(x, \xi ))^{T}\) and

In order to avoid singularities and obtain closed feasible set, we define \(\Omega =[0+\epsilon _{3}, 1-\epsilon _{3}]^{2}\) and use \(h=10^{-2}\), \(\epsilon _{3}=0.05\). The starting point is \(x_{0}=(0.1,0.1)^{T}\) and the parameters from (26) are \(\epsilon _{1}=10^{-1}\) and \(\epsilon _{2}=10^{-2}\).

The results are mainly presented through performance profile graphs (Dolan and Moré [12]) using the number of function evaluations as the cost function. Roughly speaking, performance profile states the probability that the considered method close enough to the best method among all tested methods, where closeness is measured by \(\alpha \) presented on x-axes. Specifically, \(\alpha =1\) gives us information about the percentage of the tested problems in which the considered method performance was the best one in the considered metrics.

Examples P1–P5. The graphs on the left present profiles for \( \sigma ^2=0.1 \) while the graphs on the right present profiles for \( \sigma ^2=1. \) The pair of graphs on the top represents the profiles for both constraints activity (active and inactive at the solution), the pair in the middle are the profiles for inactive constraints while the bottom pair contains the profiles for the case of active constraints

The results for the problems P1–P5 are presented in Figs. 1 and 2. Only the runs with at least one successful method are included, i.e. \(82\%\) of all the runs performed on these problems. The rest of runs are considered unsuccessful since none of the tested methods converged within \(10^7\) function evaluations. However, the resulting average optimality measure is of order \(10^{-1}\) and the failure is mainly due to budget constraint (\(10^7\) FEVs).

Figure 1 shows that VSS outperforms all other methods if one considers the overall results. It exhibits particularly good behavior for problems with solutions on the boundary of feasible set. The worst performance of VSS is observed for higher variance and inactive constraints as in these cases it is outperformed by the exponential growth sample scheme. Clearly, the behavior of the tested methods heavily depends on the problem—on its structure as well as on the objective function form which determines the variance of F. Heuristic approaches performed well when the solution is inside the feasible set, but the overall results (Fig. 2) show that the usage of VSS is more efficient in terms of number of function evaluations than the other tested schemes.

In Fig. 3 we present the sample scheduling for a randomly chosen run, showing the adaptive nature of the scheduling as the sequence of sample sizes is clearly nonmonotone but eventually growing.

The analytical solution of the M/M/1 queueing problem is known. Figure 4 plots the relative error of the form \((f(x_{k})-f(x^{*}))/f(x^{*})\) against the number of function evaluations FEV. The average values of all 10 runs are shown. The figure shows that VSS is highly competitive with the other tested schemes in the a budget framework. SAA and HEUR3 were too expensive and therefore their graphics are not visible on this figure. All the tested runs were successful for M/M/1 queueing problem. Average sample size \(\bar{N}_{\max }\) for the queuing problem is 3917 and \( \bar{N}_{max} \in [3782, 4108].\) The mean value of the objective function f(x) at the final iteration among these 10 runs is approximately 26.108 for all tested methods and the centered sample variance of \(f(x^*)\) is of order \(10^{-4}\). Therefore, all the methods yield solutions of practically the same quality.

Finally, Fig. 5 presents common performance profile for P1–P5 problems and M/M/1 queueing problem and confirms that VSS remains the most effective.

6 Conclusions

The method we propose and analyze in this paper consists of two components. An efficient sample scheduling update based on the progress achieved in the current iteration with the current SAA approximate function and the precision of SAA approximation, is coupled with the SPG method. A nonmonotone line search is considered as the SPG behaves much better if some nonmonotonicity is allowed. It is assumed that the feasible set is easy to project on and therefore the principal advantages of the SPG method, efficiency and simplicity, yielded a fast and reliable method for solving the constrained problems with the objective function in the form of mathematical expectation. The sample size is pushed to infinity and the almost sure convergence is proved under a set of appropriate assumptions. No growth condition on the sample sizes is assumed what is particularly important from the practical point of view, as the fast increase in the sample size very often yields an expensive method. The set of assumptions is compatible with the corresponding results for the unconstrained case. The assumption of strictly strong accumulation point yields almost sure convergence. Apparently this assumption is necessary if one wants to avoid the growth condition.

References

Andradottir, S.: A scaled stochastic approximation algorithm. Manage. Sci. 42(4), 475–498 (1996)

Bastin, F.: Trust-Region Algorithms for Nonlinear Stochastic Programming and Mixed Logit Models, Ph.D. thesis, University of Namur, Belgium (2004)

Bastin, F., Cirillo, C., Toint, P.L.: An adaptive Monte Carlo algorithm for computing mixed logit estimators. CMS 3(1), 55–79 (2006)

Bastin, F., Cirillo, C., Toint, P.L.: Convergence theory for nonconvex stochastic programming with an application to mixed logit. Math. Program. Ser. B 108, 207–234 (2006)

Bayraksan, G., Morton, D.P.: A sequential sampling procedure for stochastic programming. Oper. Res. Int. J. 59, 898–913 (2011)

Homem-de-Mello, T., Bayraksan, G.: Monte Carlo sampling-based methods for stochastic optimization. Surv. Oper. Res. Manag. Sci. 19, 56–85 (2014)

Birgin, E.G., Martínez, J.M., Raydan, M.: Nonmonotone Spectral Projected Gradients on Convex Sets. SIAM J. Optim. 10, 1196–1211 (2000)

Birgin, E.G., Martínez, J.M., Raydan, M.: Spectral projected gradient methods: review and perspectives. J. Stat. Softw. 60(3), 1–21 (2014)

Byrd, R., Chin, G., Neveitt, W., Nocedal, J.: On the use of stochastic Hessian information in optimization methods for machine learning. SIAM J. Optim. 21(3), 977–995 (2011)

Byrd, R., Chin, G., Neveitt, W., Nocedal, J.: Sample size selection in optimization methods for machine learning. Math. Program. 134(1), 127–155 (2012)

Byrd, R.H., Hansen, S.L., Nocedal, J., Singer, Y.: A stochastic quasi-Newton method for large scale optimization, arxiv.org/abs/1401.7020)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. Ser. A 91, 201–213 (2002)

Friedlander, M.P., Schmidt, M.: Hybrid deterministic-stochastic methods for data fitting. SIAM J. Sci. Comput. 34(3), 1380–1405 (2012)

Fu, M.C.: Gradient estimation, In: Henderson, S.G., Nelson, B.L. (eds.), Handbook in OR & MS 13, pp. 575–616. Elsevier B.V. (2006)

Grippo, L., Lampariello, F., Lucidi, S.: A nonmonotone line search technique for Newton’s method. SIAM J. Numer. Anal. 23(4), 707–716 (1986)

Grippo, L., Lampariello, F., Lucidi, S.: A class of nonmonotone stabilization methods in unconstrained optimization. Numer. Math. 59, 779–805 (1991)

Homem-de-Mello, T.: Variable-sample methods for stochastic optimization. ACM Trans. Model. Comput. Simul. 13(2), 108–133 (2003)

Kao, C., Song, W.T., Chen, S.: A modified quasi-Newton method for optimization in simulation. Int. Trans. Oper. Res. 4(3), 223–233 (1997)

Krejić, N., Lužanin, Z., Ovcin, Z., Stojkovska, I.: Descent direction method with line search for unconstrained optimization in noisy environment. Optim. Methods Softw. 30(6), 1164–1184 (2015)

Krejić, N., Krklec, N.: Line search methods with variable sample size for unconstrained optimization. J. Comput. Appl. Math. 245, 213–231 (2013)

Krejić, N., Jerinkić, N.Krklec: Nonmonotone line search methods with variable sample size. Numer. Algorithms 68, 711–739 (2015)

Krejić, N., Martínez, J.M.: Inexact restoration approach for minimization with inexact evaluation of the objective function. Math. Comput. 85(300), 1775–1791 (2016)

Law, A.M.: Simulation Modeling and Analysis. McGraw-Hill Education, New York (2014)

Mokhtary, A., Ribeiro, A.: Global convergence of online limited memory BFGS. J. Mach. Learn. Res. 16, 3151–3181 (2015)

Ali, M.Montaz, Khompatraporn, C., Zabinsky, Z.B.: A numerical evaluation of several stochastic algorithms on selected continous global optimization test problems. J. Global Optim. 31(4), 635–672 (2005)

Li, D.H., Fukushima, M.: A derivative-free line search and global convergence of Broyden-like method for nonlinear equations. Optim. Methods Softw. 13, 181–201 (2000)

Pasupathy, R.: On choosing parameters in retrospective-approximation algorithms for stochastic root finding and simulation optimization. Oper. Res. 58(4), 889–901 (2010)

Polak, E., Royset, J.O.: Eficient sample sizes in stochastic nonlinear programing. J. Comput. Appl. Math. 217(2), 301–310 (2008)

Royset, J.O.: Optimality functions in stochastic programming. Math. Program. 135(1–2), 293–321 (2012)

Shapiro, A., Dentcheva, D., Ruszczynski, A.: Lectures on stochastic programming: modeling and theory. MPS/SIAM Ser. Optim. 9, 1–413 (2009)

Shapiro, A., Wardi, Y.: Convergence analysis of gradient descent stochastic algorithms. J. Optim. Theory Appl. 91(2), 439–454 (1996)

Spall, J.C.: Introduction to Stochastic Search and Optimization. Wiley, Hoboken (2003)

Wardi, Y.: Stochastic algorithms with Armijo stepsizes for minimization of functions. J. Optim. Theory Appl. 64, 399–417 (1990)

Zhang, H., Hager, W.W.: A nonmonotone line search technique and its application to unconstrained optimization. SIAM J. Optim. 4, 1043–1056 (2004)

Yan, D., Mukai, H.: Optimization algorithm with probabilistic estimation. J. Optim. Theory Appl. 79(2), 345–371 (1993)

Acknowledgements

We are grateful to the associate editor and two anonymous referees whose constructive remarks helped us to improve this paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Research supported by Serbian Ministry of Education, Science and Technological Development, Grant No. 174030.

Rights and permissions

About this article

Cite this article

Krejić, N., Krklec Jerinkić, N. Spectral projected gradient method for stochastic optimization. J Glob Optim 73, 59–81 (2019). https://doi.org/10.1007/s10898-018-0682-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-018-0682-6