Abstract

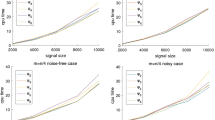

In this paper, we replace the \(\ell _0\) norm with a variation of the generalized Gaussian function, \(\Phi _\alpha (x)\), in sparse signal recovery. We first show that \(\Phi _\alpha (x)\) is a non-convex sparsity-promoting function and establish the equivalence among three minimization models: \((\mathrm{P}_0):\min \limits _{x\in {\mathbb {R}}^n}\Vert x\Vert _0\) subject to \(Ax=b\), \((\mathrm{E}_\alpha ):\min \limits _{x\in {\mathbb {R}}^n}\Phi _\alpha (x)\) subject to \(Ax=b\), and \((\mathrm{E}^{\lambda }_\alpha ):\min \limits _{x\in {\mathbb {R}}^n}\frac{1}{2}\Vert Ax-b\Vert ^{2}_{2}+\lambda \Phi _\alpha (x).\) The equivalence theorems show that \((\mathrm{P}_0)\) can be solved exactly by solving the continuous minimization \((\mathrm{E}_\alpha )\) for suitable values of \(\alpha \), while the latter can in turn be solved through the regularized minimization \((\mathrm{E}^{\lambda }_\alpha )\) under certain conditions. Second, based on the DC algorithm and the iterative soft thresholding algorithm, we give an algorithm for the regularized minimization \((\mathrm{E}^{\lambda }_\alpha )\), called the DCS algorithm. Finally, simulations compare this algorithm with two classical algorithms, the half algorithm and the soft algorithm, and the experimental results show that the DCS algorithm performs well in sparse signal recovery.

References

Aharon, M., Elad, M., Bruckstein, A.: K-SVD: an algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 54(11), 4311–4322 (2006)

An, L.T.H., Tao, P.D.: The DC programming and DCA revisited with DC models of real world nonconvex optimization problems. Ann. Oper. Res. 133(1), 23–46 (2005)

Babacan, S.D., Molina, R., Katsaggelos, A.K.: Bayesian compressive sensing using laplace priors. IEEE Trans. Image Process. 19(1), 53–63 (2010)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imag. Sci. 2(1), 183–202 (2009)

Blumensath, T., Davies, M.E.: Iterative thresholding for sparse approximations. J. Fourier Anal. Appl. 14(5), 629–654 (2008)

Candès, E., Romberg, J., Tao, T.: Stable signal recovery from incomplete and inaccurate measurements. Commun. Pure Appl. Math. 59(8), 1207–1223 (2006)

Candès, E., Rudelson, M., Tao, T., Vershynin, R.: Error correction via linear programming. In: 46th Annual IEEE Symposium on Foundations of Computer Science, pp. 668–681. Pittsburgh, PA (2005)

Candès, E., Tao, T.: Decoding by linear programming. IEEE Trans. Inform. Theory 51(12), 4203–4215 (2005)

Cao, W., Sun, J., Xu, Z.: Fast image deconvolution using closed-form thresholding formulas of \(L_q\ (q=\frac{1}{2},\frac{2}{3})\) regularization. J. Vis. Commun. Image Represent. 24(1), 31–41 (2013)

Carmy, A., Gurfil, P., Kanevsky, D., Ramabhadran, B.: ABCS: approximate Bayesian compressed sensing. IBM Research Report, RC24816 (2009)

Chartrand, R.: Exact reconstruction of sparse signals via nonconvex minimization. IEEE Signal Process. Lett. 14(10), 707–710 (2007)

Davies, M.E., Gribonval, R.: Restricted isometry constants where \(l_p\) sparse recovery can fail for \(0<p<1\). IEEE Trans. Inform. Theory 55(5), 2203–2214 (2009)

Donoho, D.L.: Compressed sensing. IEEE Trans. Inform. Theory 52(4), 1289–1306 (2006)

Donoho, D.L.: De-noising by soft thresholding. IEEE Trans. Inform. Theory 41(3), 613–627 (1995)

Donoho, D.L., Elad, M.: Optimally sparse representation in general (nonorthogonal) dictionaries via \(l_1\) minimization. Proc. Natl. Acad. Sci. USA 100(5), 2197–2202 (2003)

Donoho, D.L., Tanner, J.: Sparse nonnegative solution of underdetermined linear equations by linear programming. PNAS 102(27), 9446–9451 (2005)

Elad, M.: Sparse and Redundant Representations: from Theory to Applications in Signal and Image Processing. Springer, New York (2010)

Elhamifar, E., Vidal, R.: Sparse subspace clustering: algorithm, theory, and applications. IEEE Trans. Pattern Anal. Mach. Intell. 35(11), 2765–2781 (2013)

Glasbey, C.A.: An analysis of histogram-based thresholding algorithm. CVGIP Graph. Models Image Process. 55(6), 532–537 (1993)

Goldstein, T., Osher, S.: The Split Bregman Method for \(\ell _1\)-regularized Problems. SIAM J. Imag. Sci. 2(2), 323–343 (2009)

Horst, R., Thoai, N.V.: DC programming: overview. J. Optim. Theory Appl. 103(1), 1–43 (1999)

Ji, S., Xue, Y., Carin, L.: Bayesian compressive sensing. IEEE Trans. Signal Process. 52(8), 2346–2356 (2008)

Le Thi, H.A., Thi, B.T.N., Le, H.M.: Sparse signal recovery by difference of convex functions algorithms. In: Intelligent Information and Database Systems, vol. 7803, pp. 387–397, Berlin, Germany (2013)

Li, H., Zhang, Q., Cui, A., Peng, J.: Minimization of fraction function penalty in compressed sensing. IEEE Trans. Neural Netw. Learn. Syst. 31(5), 1626–1637 (2020)

Liu, G., Lin, Z., Yan, S.: Robust recovery of subspace structures by low-rank representation. IEEE Trans. Pattern Anal. Mach. Intell. 35(1), 171–184 (2013)

Louizos, C., Welling, M., Kingma, D.: Learning sparse neural networks through \(\ell _0\) Regularization (2017). arXiv preprint arXiv:1712.01312

Moulin, P., Liu, J.: Analysis of multiresolution image denoising schemes using generalized Gaussian and complexity priors. IEEE Trans. Inform. Theory 45(3), 909–919 (1999)

Natarajan, B.: Sparse approximate solutions to linear systems. SIAM J. Comput. 24(2), 227–234 (1995)

Nikolova, M.: Estimées locales fortement homogènes. Comptes Rendus Acad. Sci. Paris 325(1), 665–670 (1997)

Ong, C.S., Hoai An, L.T.: Learning sparse classifiers with difference of convex functions algorithms. Optim. Methods Softw. 28(4), 830–854 (2013)

Scardapane, S., Comminiello, D., Hussain, A., Uncini, A.: Group sparse regularization for deep neural networks. Neurocomputing 241, 81–89 (2017)

Tao, P.D., Hoai An, L.T.: Convex analysis approach to d.c. programming: theory, algorithms and applications. Acta Mathematica Vietnamica 22(1), 289–355 (1997)

Temlyakov, V.N.: Greedy algorithm and \(m\)-term trigonometric approximation. Construct. Approx. 14(4), 569–587 (1998)

Thiao, M.: Approches de la programmation DC et DCA en data mining. Thèse de doctorat à l'INSA-Rouen, France (2011)

Tibshirani, R.: Regression Shrinkage and Selection via the Lasso. J. Roy. Stat. Soc. Ser. B 58(1), 267–288 (1996)

Tropp, J., Gilbert, A.: Signal recovery from random measurements via orthogonal matching pursuit. IEEE Trans. Inform. Theory 53(12), 4655–4666 (2007)

Wen, B., Chen, X., Pong, T.K.: A proximal difference-of-convex algorithm with extrapolation. Comput. Optim. Appl. 69, 1–28 (2018)

Xu, Z., Chang, X., Xu, F., Zhang, H.: \(L_{1/2}\) regularization: a thresholding representation theory and a fast solver. IEEE Trans. Neural Netw. Learn. Syst. 23(7), 1013–1027 (2012)

Yang, J., Zhang, Y.: Alternating direction algorithms for \(\ell _1\) problems in compressive sensing. SIAM J. Sci. Comput. 33(1), 250–278 (2011)

Yin, W., Osher, S., Goldfarb, D., Darbon, J.: Bregman iterative algorithms for \(\ell _1\)-minimization with applications to compressed sensing. SIAM J. Imag. Sci. 1(1), 143–168 (2008)

Acknowledgements

We are grateful to the editor and the referees for their comments, which led to substantial improvements in the manuscript.

Proofs of Theorems and Lemmas

In this appendix, we prove the theorems and lemmas stated in the paper.

It is easy to verify that, for any \(\alpha >0\) and \(\nu \in (0,1]\), the function \(\phi _\alpha (t)=1-e^{-\alpha |t|^\nu }\) is symmetric on \({\mathbb {R}}\), increasing and concave on \([0,+\infty )\), satisfies \(\phi _\alpha (0)=0\), and \(\lim \limits _{\alpha \rightarrow +\infty }\phi _\alpha (t)=1\) for \(t\ne 0\). Therefore, by adjusting the parameter \(\alpha \), \(\Phi _\alpha (x)\) can approximate the \(\ell _0\) norm well. Moreover, \(\phi _\alpha (t)\) satisfies the triangle inequality stated in Lemma 2.
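The properties listed above can be checked numerically. The sketch below assumes the separable form \(\Phi _\alpha (x)=\sum _i\phi _\alpha (x_i)\), which is defined in the main text rather than in this appendix; the test vector and the value \(\nu =0.5\) are illustrative choices, not taken from the paper.

```python
import numpy as np

def phi(t, alpha, nu=0.5):
    """Scalar penalty 1 - exp(-alpha * |t|**nu), applied elementwise."""
    return 1.0 - np.exp(-alpha * np.abs(t) ** nu)

def Phi(x, alpha, nu=0.5):
    """Assumed separable form: sum of phi over the entries of x."""
    return float(np.sum(phi(np.asarray(x, dtype=float), alpha, nu)))

# Illustrative vector with three non-zero entries.
x = np.array([0.0, 1.5, 0.0, -0.2, 3.0])
l0 = int(np.count_nonzero(x))

# Phi_alpha(x) should climb toward the l0 count as alpha grows.
values = {alpha: Phi(x, alpha) for alpha in (1.0, 10.0, 100.0, 1000.0)}
for alpha, value in values.items():
    print(f"alpha={alpha:7.1f}  Phi={value:.6f}  l0={l0}")
```

For small \(\alpha \) the value sits well below the number of non-zero entries, which is why the choice of \(\alpha \) matters in the equivalence results that follow.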

Lemma 2

For any \(\alpha >0\), \(\nu \in (0,1]\) and any real numbers \(x,\ y\), the triangle inequality holds:

\[\phi _\alpha (x+y)\le \phi _\alpha (x)+\phi _\alpha (y).\]

Proof

Because

the triangle inequality holds. \(\square \)
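One way to verify the triangle inequality for \(\phi _\alpha \) is sketched here; it is a standard argument and not necessarily the authors' exact chain. It uses two elementary facts: \((a+b)^\nu \le a^\nu +b^\nu \) for \(a,b\ge 0\) and \(\nu \in (0,1]\), and \(1-uv\le (1-u)+(1-v)\) for \(u,v\in [0,1]\), which is equivalent to \((1-u)(1-v)\ge 0\). With \(u=e^{-\alpha |x|^\nu }\) and \(v=e^{-\alpha |y|^\nu }\),

\[\phi _\alpha (x+y)=1-e^{-\alpha |x+y|^\nu }\le 1-e^{-\alpha (|x|^\nu +|y|^\nu )}=1-uv\le (1-u)+(1-v)=\phi _\alpha (x)+\phi _\alpha (y).\]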

Proof of Theorem 1

Suppose that \(\hat{x}\) is the optimal solution to \((\mathrm{E}_{\alpha })\) with \(\Vert \hat{x}\Vert _{0}=k> m\). Then the \(k\) columns of \(A\) indexed by the support of \(\hat{x}\) are linearly dependent, because \(A\) has only \(m\) rows. Thus, there exists a nonzero vector \(h\in {\mathbb {R}}^n\) whose support is contained in that of \(\hat{x}\) such that \(Ah=0\). Consequently, \(\hat{x}-h\) and \(\hat{x}+h\) are also solutions to \(Ax=b\).

For every \(j\), \(\hat{x}_{j}-h_{j}\), \(\hat{x}_{j}+h_{j}\) and \(\hat{x}_{j}\) have the same sign under the assumption that

Since \(\phi _{\alpha }(t)=1-e^{-\alpha |t|^\nu }\) is strictly concave in \(|t|\), we have

thus

Furthermore, we can get

or

either of which contradicts the fact that \(\hat{x}\) is the optimal solution to \((\mathrm{E}_{\alpha })\). Hence \(\Vert \hat{x}\Vert _{0}\le m\), and the theorem is proved. \(\square \)
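The perturbation argument in this proof can be illustrated numerically. The sketch below builds a feasible point with more than \(m\) non-zero entries, takes a null-space direction \(h\) supported on the same columns, and checks that moving along \(+h\) or \(-h\) lowers the penalty. The random matrix, the parameters \(\alpha =2\), \(\nu =0.5\), and the separable form of \(\Phi _\alpha \) are assumptions made for illustration.

```python
import numpy as np

def Phi(x, alpha=2.0, nu=0.5):
    """Assumed separable penalty: sum of 1 - exp(-alpha * |x_i|**nu)."""
    return float(np.sum(1.0 - np.exp(-alpha * np.abs(x) ** nu)))

rng = np.random.default_rng(0)
m, n, k = 3, 6, 5                      # k > m, so the k columns are dependent
A = rng.standard_normal((m, n))

# A point with k non-zero entries, each of magnitude in [1, 2].
x_hat = np.zeros(n)
x_hat[:k] = rng.uniform(1.0, 2.0, size=k) * rng.choice([-1.0, 1.0], size=k)

# The last right singular vector of the m-by-k submatrix lies in its null space.
_, _, Vt = np.linalg.svd(A[:, :k])
h = np.zeros(n)
h[:k] = Vt[-1]

# Scale h so that x_hat - h, x_hat and x_hat + h share signs entrywise.
h *= 0.5 * np.min(np.abs(x_hat[:k])) / np.max(np.abs(h))

print("||A h||            =", np.linalg.norm(A @ h))
print("Phi(x_hat)         =", Phi(x_hat))
print("min Phi(x_hat +- h) =", min(Phi(x_hat + h), Phi(x_hat - h)))
```

Both perturbed points satisfy the same linear system as `x_hat`, so a point with more than \(m\) non-zero entries cannot be optimal for the penalty.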

Proof of Theorem 2

Let \(x^{*}\) be the optimal solution to \((\mathrm{E}^\lambda _\alpha )\) and \(\Vert x^*\Vert _0=k\). Without loss of generality, we assume

It is obvious that for all \(t\in {\mathbb {R}}\) and \(h\in {\mathbb {R}}^{n}\),

which implies that

If \(\mathrm{supp}(h)\subseteq \mathrm{supp}(x^{*})\), then for all \(t\in {\mathbb {R}}\),

Incorporating the equality (A2) into the inequality (A1), we have

Dividing both sides of the inequality above by \(t>0\) and letting \(t\rightarrow 0\) yields

The above inequality also holds for \(-h\), which leads to

Then, incorporating the equality (A3) into the inequality (A1) yields that, for all \(t\in {\mathbb {R}}\),

Dividing both sides of the inequality (A4) by \(t^2\) and letting \(t\rightarrow 0\) yields

Denote the first k columns of A by B. According to the inequality above, for any \(y\ne 0\) with \(y\in {\mathbb {R}}^k\),

where \(\hat{y}=(y_1,\ldots ,y_k,0,\ldots ,0)^T\). This implies that the matrix \(B^TB\) is positive definite, and hence the \(k\) columns of \(B\) are linearly independent. Since these columns lie in \({\mathbb {R}}^m\), it follows that \(\Vert x^*\Vert _0=k\le m\). \(\square \)

Before proving the equivalence between \((\mathrm P_0)\) and the constrained minimization problems \((\mathrm{E}_\alpha )\) (Theorems 3 and 4), we need to introduce two constants and Lemma 3.

We denote by \(\Delta \) the set of solutions to \(Ax=b\) with \(\Vert x\Vert _0\le m\); the cardinality of \(\Delta \) is finite. In fact, for any \(x\in \Delta \), because \(A_{m\times n}\) has full row rank, there exists a full-rank square submatrix \(B_{m\times m}\) of \(A_{m\times n}\) such that \(Bx=b\). Since \(A_{m\times n}\) has at most \(\binom{n}{m}\) full-rank square submatrices \(B_{m\times m}\), the set \(\Delta \) has at most \(\binom{n}{m}\) elements. Furthermore, we define two constants \(q(A,b)\) and \(Q(A,b)\) as follows

Because \(\Delta \) has at most \(\binom{n}{m}\) elements, the set \(\{z_i\mid z\in \Delta ,z_i\not =0, 1\le i\le n\}\) has at most \(m\times \binom{n}{m}\) elements, all of them non-zero, which implies that the constants \(q(A,b)\) and \(Q(A,b)\) are finite and positive.
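For a small system the counting argument can be made concrete by enumerating every choice of \(m\) columns. In the sketch below, the helper name `basic_solutions`, the example matrix, and the reading of \(q(A,b)\) and \(Q(A,b)\) as the smallest and largest non-zero entry magnitudes over \(\Delta \) are all assumptions, since the defining display (A6) is not reproduced here.

```python
import itertools
import math
import numpy as np

def basic_solutions(A, b, tol=1e-9):
    """Solve B z = b for every nonsingular m-by-m column submatrix B of A."""
    m, n = A.shape
    found = set()
    for cols in itertools.combinations(range(n), m):
        B = A[:, list(cols)]
        if abs(np.linalg.det(B)) < tol:
            continue                      # skip singular column choices
        x = np.zeros(n)
        x[list(cols)] = np.linalg.solve(B, b)
        # Round so that numerically identical solutions are merged;
        # adding 0.0 turns any -0.0 into 0.0.
        found.add(tuple(np.round(x, 9) + 0.0))
    return [np.array(z) for z in sorted(found)]

# Illustrative 2-by-4 system (hypothetical data).
A = np.array([[1.0, 0.0, 1.0, 1.0],
              [0.0, 1.0, 1.0, 2.0]])
b = np.array([2.0, 2.0])

delta = basic_solutions(A, b)
magnitudes = np.concatenate([np.abs(z[z != 0]) for z in delta])
q, Q = magnitudes.min(), magnitudes.max()   # assumed meaning of q(A,b), Q(A,b)

print("number of candidates:", len(delta), "<=", math.comb(4, 2))
print("q =", q, " Q =", Q)
```

The enumeration only covers solutions supported on linearly independent columns, which is the case the counting argument relies on.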

Lemma 3

If there is a constant \(0<p<1\) such that, for any \(x\in (0,p)\),

where \(t_1\ge t_2\ge \cdots \ge t_N>0, s_1\ge s_2\ge \cdots \ge s_N>0\), then \(t_1=s_1, t_2=s_2, \ldots , t_N=s_N\).

Proof

Since

for any \(x\in (0,p)\), dividing both sides of the equality (A7) by \(x^{t_N}\), we have

Letting \(x\rightarrow 0\), the limit of the left-hand side of equality (A8) exists because \(t_i-t_N\ge 0\ (i=1,2,\ldots ,N-1)\), which implies that the limit of the right-hand side of equality (A8) also exists. Hence, \(s_N\ge t_N\). Similarly, dividing both sides of the equality (A7) by \(x^{s_N}\), we have \(s_N\le t_N\), which implies that \(s_N=t_N\) and

Repeating the process above, we have \(t_1=s_1, t_2=s_2, \ldots , t_N=s_N\), as claimed. \(\square \)

Proof of Theorem 3

Let \(x^{\alpha }\) be the optimal solution to \((\mathrm{E}_{\alpha })\) and \(x^{0}\) the optimal solution to \((\mathrm P_0)\). It suffices to show that \(\Vert x^{\alpha }\Vert _{0}=\Vert x^0\Vert _{0}\) and \(\Phi _{\alpha }(x^0)=\Phi _{\alpha }(x^\alpha )\). Firstly, we have

Since \(x^{0}\) and \(x^{\alpha }\) are the optimal solutions to \((\mathrm P_0)\) and \((\mathrm{E}_{\alpha })\), respectively, the first equality and the third inequality hold. The second inequality is true by the definition of \(\Phi _\alpha (x)\), and the last one holds by the definition of q(A, b) in (A6).

Moreover, for arbitrary \(\alpha \) with

the following inequality is true

Combining inequality (A9) and (A11) yields that

which implies that

Since \(\Vert x^{\alpha }\Vert _{0}\) is an integer, we get \(\Vert x^{\alpha }\Vert _{0}=\Vert x^0\Vert _{0}\) if (A10) holds.

Secondly, if \(\Phi _{\alpha }(x^0)>\Phi _{\alpha }(x^\alpha )\), then

where the third inequality is true by the definition of \(Q(A,b),\ q(A,b)\) in (A6) and \(\Vert x^{0}\Vert _0\le \Vert x^{\alpha }\Vert _0\). Dividing both sides of the relation above by \(\Vert x^{\alpha }\Vert _0\), we have

Denote by \(u(\alpha )\) the right side of (A12). It is easy to verify that \(u(\alpha )\rightarrow 0\ (\alpha \rightarrow +\infty )\) and that \(u(\alpha )\) is monotonically decreasing when

Hence, there exists \(\alpha _2\) such that, whenever \(\alpha >\alpha _2\),

which is a contradiction with (A12). That is, \(\Phi _{\alpha }(x^0)=\Phi _{\alpha }(x^\alpha )\) if \(\alpha >\alpha _2\).

Denote by \(\alpha _1\) the right side of (A10). Taking \(\alpha ^{*}=\max \{\alpha _1,\ \alpha _2\}\), we can get the conclusion that, whenever \(\alpha >\alpha ^{*}\), \((\mathrm P_0)\) and \((\mathrm{E}_\alpha )\) are equivalent. The proof is completed. \(\square \)
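The role of the threshold \(\alpha ^{*}\) can be seen on a toy system in which a dense solution with small entries competes with a sparse solution with one large entry. The sketch below brute-forces both problems over the finite candidate set; the matrix, the value \(\nu =0.5\), the helper names, and the separable form of \(\Phi _\alpha \) are illustrative assumptions.

```python
import itertools
import numpy as np

def Phi(x, alpha, nu=0.5):
    """Assumed separable penalty: sum of 1 - exp(-alpha * |x_i|**nu)."""
    return float(np.sum(1.0 - np.exp(-alpha * np.abs(x) ** nu)))

def sparse_candidates(A, b, tol=1e-9):
    """Solutions of A x = b supported on m linearly independent columns."""
    m, n = A.shape
    found = set()
    for cols in itertools.combinations(range(n), m):
        B = A[:, list(cols)]
        if abs(np.linalg.det(B)) < tol:
            continue
        x = np.zeros(n)
        x[list(cols)] = np.linalg.solve(B, b)
        found.add(tuple(np.round(x, 9) + 0.0))
    return [np.array(z) for z in sorted(found)]

# Candidates here are (1, 1, 0) and (0, 0, 10); the second is the sparsest.
A = np.array([[1.0, 0.0, 0.1],
              [0.0, 1.0, 0.1]])
b = np.array([1.0, 1.0])
delta = sparse_candidates(A, b)

x0 = min(delta, key=np.count_nonzero)          # brute-force l0 minimizer

def minimizer(alpha):
    """Brute-force minimizer of Phi_alpha over the candidate set."""
    return min(delta, key=lambda z: Phi(z, alpha))

for alpha in (0.1, 1.0, 5.0, 50.0):
    x_alpha = minimizer(alpha)
    print(f"alpha={alpha:5.1f}  minimizer={x_alpha}  l0={np.count_nonzero(x_alpha)}")
```

For the smallest \(\alpha \) the dense candidate has the lower penalty, while for larger \(\alpha \) the penalty minimizer coincides with the sparsest solution, in line with the statement that equivalence holds once \(\alpha \) exceeds a threshold.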

Proof of Theorem 4

Let \(\{\alpha _i\mid i=1,2,\ldots \}\) be an increasing infinite sequence with \(\alpha _1=1\) and \(\lim \limits _{i\rightarrow +\infty }\alpha _i=+\infty \). For each \(\alpha _i\), by Theorem 1, the optimal solution \(\hat{x}^i\) to \((\mathrm{E}_{\alpha _i})\) belongs to \(\Delta \). Since \(\Delta \) is a finite set, at least one element, denoted \(\hat{x}\), solves \((\mathrm{E}_{\alpha _i})\) for an infinite subsequence \(\{\alpha _{i_k}\mid k=1,2,\ldots \}\) of \(\{\alpha _i\}\). Denote by \(\hat{\alpha }\) the smallest element \(\alpha _{i_1}\) of this subsequence. In the following, we show that \((\mathrm P_0)\) and \((\mathrm{E}_{\hat{\alpha }})\) are equivalent.

First, \(\hat{x}\) is the optimal solution to \((\mathrm P_0)\). In fact, for arbitrary \(\alpha _{i_{k}}\ge \alpha _{i_1}\), we have

Letting \(k\rightarrow +\infty \), we have

that is to say, \(\hat{x}\) is the optimal solution to \((\mathrm P_0)\).

Second, for any \(k\), \(x^{0}\), the optimal solution to \((\mathrm P_0)\), is also the optimal solution to \((\mathrm{E}_{\alpha _{i_k}})\). In fact, by Theorem 3, there exists \(k_0\) such that \(x^{0}\) is the optimal solution to \((\mathrm{E}_{\alpha _{i_k}})\) whenever \(k\ge k_0\), and \(\Vert x^{0}\Vert _{0}=\Vert x^{\alpha _{i_k}}\Vert _{0}\). Set \(\Vert x^{0}\Vert _{0}=N\). Then, for any \(k\ge k_0\),

which leads to

Without loss of generality, we assume that \(\mid x^{0}_1\mid \ge \mid x^{0}_2\mid \ge \cdots \ge \mid x^{0}_N\mid \) and \(\mid \hat{x}_1\mid \ge \mid \hat{x}_2\mid \ge \cdots \ge \mid \hat{x}_N\mid \). By Lemma 3, we have \(\mid x^{0}_1\mid =\mid \hat{x}_1\mid , \mid x^{0}_2\mid =\mid \hat{x}_2\mid ,\ldots ,\mid x^{0}_N\mid =\mid \hat{x}_N\mid \), which implies that for any \(k\), \(x^{0}\) is the optimal solution to \((\mathrm{E}_{\alpha _{i_k}})\). The proof is completed. \(\square \)

Proof of Lemma 1

(1) Let \(x^{*}\) be the optimal solution to \((\mathrm{E}^\lambda _\alpha )\). Then, by the definition of an optimal solution, we have

Thus

which implies that

Furthermore, if \(\lambda >\Vert b\Vert ^{2}_{2}\), then (1) holds.

Because the function \(r(t)=t^{\nu -1}e^{-\alpha t^\nu }\ (\nu <1,\alpha >0)\) is strictly decreasing on \(t>0\), for any \(i\in \mathrm{supp}(x^*)\),

This together with inequality (1) gives

Moreover, replacing \(h\) in equality (A3) with the standard basis vector \(e_i\) for every \(i\in \mathrm{supp}(x^*)\), we have

Combining this with the inequality (A14), the inequality (2) holds.

(2) Suppose that \(x^{*}\ne 0\) is the optimal solution to \((\mathrm E^\lambda _\alpha )\) and \(\Vert x^*\Vert _0=k\). By equality (A15), we have

Multiplying both sides of the equality above by \(x^{*T}\) yields that

Since \(A^{T}A\) is positive semidefinite, we have

Equivalently,

On the other hand, using the fact that the function \(h(\lambda )\) is strictly increasing, we have

when

For any \(i\in supp(x^*)\), combining this inequality with the inequality (A14),

holds. Consequently,

which leads to

which contradicts inequality (A16). This proves the claim. \(\square \)

Proof of Theorem 5

Let \(x^{\lambda }\) and \(x^{\alpha }\) be the optimal solutions to \((\mathrm{E}^{\lambda }_\alpha )\) and \((\mathrm{E}_{\alpha })\), respectively. To prove the conclusion, it suffices to show that \(Ax^{\lambda }=b\). In fact, if \(Ax^{\lambda }=b\), then we have

and

which implies that \((\mathrm{E}^{\lambda }_\alpha )\) and \((\mathrm{E}_\alpha )\) are equivalent.

Suppose that \(Ax^{\lambda }=b^{\lambda }\ne b\). Let \(y^{\alpha }\) be the optimal solution to

then \(A(x^\lambda +y^\alpha )=b\). By Theorem 1, the column submatrix \(A^*\) of \(A\) consisting of the columns indexed by \(\mathrm{supp}(y^\alpha )\) has full column rank and \(\Vert y^\alpha \Vert _0=k\le m\). Besides, by the definition of \(\sigma _{\min }\), we have

where \(\hat{y}^\alpha \in {\mathbb {R}}^k\) is composed of the non-zero elements of \(y^\alpha \). By the matrix norm inequality, we have

in which the last inequality holds by the inequality (2).

Furthermore, as \(\lambda \Phi _\alpha (y^\alpha )\le \lambda m\), it is true that

Combining it with (6) and (A17), we get

which implies that

Therefore, we obtain

Since \(x^{\lambda }\) and \(x^{\alpha }\) are the optimal solutions to \((\mathrm{E}^{\lambda }_\alpha )\) and \((\mathrm{E}_{\alpha })\), respectively, the first and the last inequality hold. The second equality holds because \(A(x^\lambda +y^\alpha )=b\). The third inequality is true by Lemma 2, and the fourth inequality holds by (A18). This is a contradiction and hence \(Ax^\lambda =b\), as claimed. \(\square \)

Proof of Theorem 6

We define the objective function of (8) as

and its surrogate function is

where z is an additional variable, \(\mu \) is a positive parameter. Then we have the following optimization problem

For a fixed \(z\in {\mathbb {R}}^n\), as each component \(x_i\) is decoupled, we can minimize function \(l_\mu (x,z)\) with respect to each \(x_i\) individually. Based on the equality (A20), the objective function of this component-wise minimization is

By [14], the minimizer \(x^*=(x^*_1,\ldots ,x^*_n)\) of this component-wise problem is

where \(S_{\lambda \mu }(t)=\mathrm{sgn}(t)\max \{0,\mid t\mid -\lambda \mu \}\) for any \(t\in {\mathbb {R}}\).

Moreover, because of the condition \(0<\mu \le \Vert A\Vert ^2\), we have

which implies that \(x^*\) is a local minimizer of \(l_\mu (x,x^*)\) as long as \(x^*\) is the optimal solution to (8).

Taking \(z=x^*\) in the equality (A22), we have

as claimed. \(\square \)
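The soft thresholding operator \(S_{\lambda \mu }\) used in this proof is easy to state in code. The sketch below also embeds it in a generic proximal difference-of-convex iteration for the special case \(\nu =1\), where \(\lambda \phi _\alpha (t)=\lambda \alpha |t|-\lambda (\alpha |t|-1+e^{-\alpha |t|})\) splits into an \(\ell _1\) term minus a smooth convex term. This is an illustration under stated assumptions and not the paper's DCS algorithm, whose update rule is not reproduced in this appendix; the step size \(\mu =1/\Vert A\Vert _2^2\), the function names, and the test data are hypothetical choices.

```python
import numpy as np

def soft_threshold(t, tau):
    """S_tau(t) = sgn(t) * max(0, |t| - tau), applied elementwise."""
    return np.sign(t) * np.maximum(np.abs(t) - tau, 0.0)

def objective(A, b, x, lam, alpha):
    """0.5 * ||Ax - b||^2 + lam * sum(1 - exp(-alpha * |x_i|)), i.e. nu = 1."""
    return 0.5 * np.sum((A @ x - b) ** 2) + lam * np.sum(1.0 - np.exp(-alpha * np.abs(x)))

def proximal_dc(A, b, lam, alpha, n_iter=200):
    """Generic proximal DC iteration (assumed scheme, nu = 1 only)."""
    mu = 1.0 / np.linalg.norm(A, 2) ** 2       # assumed step size 1 / ||A||_2^2
    x = np.zeros(A.shape[1])
    history = [objective(A, b, x, lam, alpha)]
    for _ in range(n_iter):
        grad_fit = A.T @ (A @ x - b)
        # Gradient of the subtracted convex part lam * (alpha|t| - 1 + exp(-alpha|t|)).
        grad_concave = lam * alpha * np.sign(x) * (1.0 - np.exp(-alpha * np.abs(x)))
        x = soft_threshold(x - mu * (grad_fit - grad_concave), mu * lam * alpha)
        history.append(objective(A, b, x, lam, alpha))
    return x, np.array(history)

# Hypothetical test problem: 8 measurements of a 2-sparse vector in R^20.
rng = np.random.default_rng(0)
A = rng.standard_normal((8, 20))
x_true = np.zeros(20)
x_true[[3, 11]] = [2.0, -1.5]
b = A @ x_true

x_est, history = proximal_dc(A, b, lam=0.05, alpha=2.0)
print("objective: first =", history[0], " last =", history[-1])
```

With this step size each iteration cannot increase the objective, which is the property the surrogate argument in the proof is built around. For \(\nu <1\) the slope of \(\phi _\alpha \) at zero is unbounded, so this particular splitting does not apply.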

Cite this article

Li, H., Zhang, Q., Lin, S. et al. Sparse signal recovery via generalized gaussian function. J Glob Optim 83, 783–801 (2022). https://doi.org/10.1007/s10898-022-01126-2

Keywords

- Sparse signal recovery

- \(\ell _{0}\) minimization

- Generalized Gaussian function

- Regularization minimization

- The DCS algorithm