Abstract

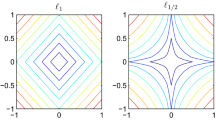

We study analytical and numerical properties of the \(L_1-L_2\) minimization problem for sparse representation of a signal over a highly coherent dictionary. Though the \(L_1-L_2\) metric is non-convex, it is Lipschitz continuous. The difference of convex algorithm (DCA) is readily applicable for computing the sparse representation coefficients, and \(L_1\) minimization appears as its initialization step. We further integrate DCA with a non-standard simulated annealing methodology to approximate globally sparse solutions, and find that non-Gaussian random perturbations are more effective than standard Gaussian perturbations for improving the sparsity of solutions. In numerical experiments, we conduct an extensive comparison among sparse penalties such as \(L_0\), \(L_1\), and \(L_p\) for \(p\in (0,1)\), based on data from three specific applications where highly coherent dictionaries arise: an over-sampled discrete cosine basis, differential optical absorption spectroscopy, and image denoising. We find numerically that \(L_1-L_2\) minimization consistently produces better results than \(L_1\) minimization, especially when the sensing matrix is ill-conditioned. In addition, the DCA method outperforms many existing algorithms for other nonconvex metrics.
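To make the DCA iteration concrete, here is a minimal sketch; it is not the authors' implementation. It assumes the unconstrained form \(\min_x \tfrac{1}{2}\|Ax-b\|_2^2 + \lambda(\|x\|_1 - \|x\|_2)\), which the abstract does not specify. Each outer step linearizes the concave term \(-\lambda\|x\|_2\) at the current iterate and solves the remaining convex \(L_1\)-type subproblem. The inner solver is plain ISTA (proximal gradient), swapped in for brevity. The function names and the values of `lam`, `n_outer`, and `n_iter` are hypothetical choices made for illustration.

```python
import numpy as np

def soft_threshold(v, t):
    # Proximal operator of t * ||.||_1
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def l1_subproblem(A, b, lam, q, x0, n_iter=500):
    # ISTA for: min_x 0.5*||Ax - b||^2 + lam*||x||_1 - <q, x>
    # (assumed inner solver; any convex solver could be used instead)
    step = 1.0 / np.linalg.norm(A, 2) ** 2  # 1 / Lipschitz constant of the smooth part
    x = x0.copy()
    for _ in range(n_iter):
        grad = A.T @ (A @ x - b) - q
        x = soft_threshold(x - step * grad, step * lam)
    return x

def dca_l1_minus_l2(A, b, lam, n_outer=10):
    # Starting from x = 0, the linear term vanishes, so the first
    # outer step is an ordinary L1-regularized least-squares problem.
    x = np.zeros(A.shape[1])
    for _ in range(n_outer):
        nrm = np.linalg.norm(x)
        # Subgradient of lam*||x||_2 at the current iterate (0 is valid at x = 0)
        q = lam * x / nrm if nrm > 0 else np.zeros_like(x)
        x = l1_subproblem(A, b, lam, q, x)
    return x

def objective(A, b, lam, x):
    # 0.5*||Ax - b||^2 + lam*(||x||_1 - ||x||_2)
    return 0.5 * np.sum((A @ x - b) ** 2) + lam * (np.abs(x).sum() - np.linalg.norm(x))
```

Because the linearized subproblem majorizes the \(L_1-L_2\) objective and ISTA is warm-started at the current iterate, the objective does not increase from one outer step to the next, so the result after several steps is no worse in objective value than the initial \(L_1\) solution.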

Additional information

The work was partially supported by NSF grants DMS-0928427 and DMS-1222507.

Cite this article

Lou, Y., Yin, P., He, Q. et al. Computing Sparse Representation in a Highly Coherent Dictionary Based on Difference of \(L_1\) and \(L_2\). J Sci Comput 64, 178–196 (2015). https://doi.org/10.1007/s10915-014-9930-1

DOI: https://doi.org/10.1007/s10915-014-9930-1