Abstract

We study the problem of constructing sparse, fast mean-reverting portfolios. The problem is motivated by convergence trading and is formulated as a generalized eigenvalue problem with a cardinality constraint (d’Aspremont in Quant Finance 11(3):351–364, 2011). As a proxy for the mean reversion coefficient we use the direct Ornstein–Uhlenbeck estimator, which can be applied to both stationary and nonstationary data. In addition, we introduce three methods to enforce sparsity of the solutions: one uses the ratio of the \(l_1\) and \(l_2\) norms, and the other two use the \(l_1\) norm. We analyze various formulations of the resulting non-convex optimization problems and develop efficient algorithms that solve them for portfolios with hundreds of assets. Adopting a simple convergence trading strategy, we test the performance of our sparse mean-reverting portfolios on both synthetic and historical market data. In particular, the \(l_1\) regularization method, combined with a quadratic program formulation and a difference-of-convex-functions and least angle regression treatment, gives fast and robust performance on large out-of-sample data sets.

References

Barekat, F., Yin, K., Caflisch, R.E., Osher, S.J., Lai, R., Ozolins, V.: Compressed Wannier modes found from an \(L_1\) regularized energy functional. arXiv preprint arXiv:1403.6883 (2014)

Box, G.E., Tiao, G.C.: A canonical analysis of multiple time series. Biometrika 64(2), 355–365 (1977)

Box, M., Davies, D., Swann, W.H., Australia, I.: Non-linear Optimization Techniques, vol. 5. Oliver & Boyd, Edinburgh (1969)

Chow, S.-N., Yang, T.-S., Zhou, H.: Global optimizations by intermittent diffusion. National Science Council Tunghai University Endowment Fund for Academic Advancement Mathematics Research Promotion Center, p. 121 (2009)

Cuturi, M., d’Aspremont, A.: Mean reversion with a variance threshold. In: Proceedings of the 30th International Conference on Machine Learning (ICML-13), pp. 271–279 (2013)

d’Aspremont, A.: Identifying small mean-reverting portfolios. Quant. Finance 11(3), 351–364 (2011)

d’Aspremont, A., El Ghaoui, L., Jordan, M.I., Lanckriet, G.R.: A direct formulation for sparse PCA using semidefinite programming. SIAM Rev. 49(3), 434–448 (2007)

Efron, B., Hastie, T., Johnstone, I., Tibshirani, R., et al.: Least angle regression. Ann. Stat. 32(2), 407–499 (2004)

Engle, R.F., Granger, C.W.: Co-integration and error correction: representation, estimation, and testing. Econometrica 55(2), 251–276 (1987)

Esser, E., Lou, Y., Xin, J.: A method for finding structured sparse solutions to nonnegative least squares problems with applications. SIAM J. Imaging Sci. 6(4), 2010–2046 (2013)

Fogarasi, N., Levendovszky, J.: Improved parameter estimation and simple trading algorithm for sparse, mean reverting portfolios. In: Annales Universitatis Scientiarum Budapestinensis, Sectio Computatorica, vol. 37, pp. 121–144 (2012)

Horst, R., Thoai, N.V.: DC programming: overview. J. Optim. Theory Appl. 103(1), 1–43 (1999)

Hotelling, H.: Relations between two sets of variates. Biometrika 28(3–4), 321–377 (1936)

Hoyer, P.O.: Non-negative matrix factorization with sparseness constraints. J. Mach. Learn. Res. 5, 1457–1469 (2004)

Hu, Y., Long, H.: Least squares estimator for Ornstein–Uhlenbeck processes driven by \(\alpha \)-stable motions. Stoch. Process. Appl. 119(8), 2465–2480 (2009)

Ji, H., Li, J., Shen, Z., Wang, K.: Image deconvolution using a characterization of sharp images in wavelet domain. Appl. Comput. Harmonic Anal. 32(2), 295–304 (2012)

Jolliffe, I.T., Trendafilov, N.T., Uddin, M.: A modified principal component technique based on the lasso. J. Comput. Gr. Stat. 12(3), 531–547 (2003)

Journée, M., Nesterov, Y., Richtárik, P., Sepulchre, R.: Generalized power method for sparse principal component analysis. J. Mach. Learn. Res. 11, 517–553 (2010)

Krishnan, D., Tay, T., Fergus, R.: Blind deconvolution using a normalized sparsity measure. In: 2011 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 233–240. IEEE (2011)

Lai, R., Osher, S.: A splitting method for orthogonality constrained problems. J. Sci. Comput. 58(2), 431–449 (2014)

Le Thi, H., Huynh, V., Pham Dinh, T.: Convergence analysis of DC algorithm for DC programming with subanalytic data. Annals of Operations Research, Technical Report, LMI, INSA-Rouen (2009)

Le Thi, H.A., Tao, P.D.: Solving a class of linearly constrained indefinite quadratic problems by DC algorithms. J. Glob. Optim. 11(3), 253–285 (1997)

Natarajan, B.K.: Sparse approximate solutions to linear systems. SIAM J. Comput. 24(2), 227–234 (1995)

Ngo, T.T., Bellalij, M., Saad, Y.: The trace ratio optimization problem. SIAM Rev. 54(3), 545–569 (2012)

Ozoliņš, V., Lai, R., Caflisch, R., Osher, S.: Compressed modes for variational problems in mathematics and physics. Proc. Natl. Acad. Sci. 110(46), 18368–18373 (2013)

Ozoliņš, V., Lai, R., Caflisch, R., Osher, S.: Compressed plane waves yield a compactly supported multiresolution basis for the Laplace operator. Proc. Natl. Acad. Sci. 111(5), 1691–1696 (2014)

Richtárik, P., Takáč, M., Ahipaşaoğlu, S.D.: Alternating maximization: unifying framework for 8 sparse PCA formulations and efficient parallel codes. arXiv preprint arXiv:1212.4137 (2012)

Sriperumbudur, B.K., Torres, D.A., Lanckriet, G.R.: Sparse eigen methods by DC programming. In: Proceedings of the 24th International Conference on Machine Learning, pp. 831–838. ACM (2007)

Tao, P.D., Le Thi, H.A.: Convex analysis approach to DC programming: theory, algorithms and applications. Acta Math. Vietnam. 22(1), 289–355 (1997)

Tao, P.D., Le Thi, H.A.: A DC optimization algorithm for solving the trust-region subproblem. SIAM J. Optim. 8(2), 476–505 (1998)

Tibshirani, R.: Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B (Methodol.) 58, 267–288 (1996)

Yin, P., Esser, E., Xin, J.: Ratio and difference of \(l_1\) and \(l_2\) norms and sparse representation with coherent dictionaries. Commun. Inf. Syst. 14(2), 87–109 (2014)

Yu, J.: Bias in the estimation of the mean reversion parameter in continuous time models. J. Econom. 169(1), 114–122 (2012)

Zou, H., Hastie, T., Tibshirani, R.: Sparse principal component analysis. J. Comput. Gr. Stat. 15(2), 265–286 (2006)

Acknowledgements

We would like to thank Dr. Wuan Luo for bringing reference [6] to our attention and for helpful communication.

Additional information

Dedicated to the memory of our good friend and colleague Ernie Esser.

This work was partially supported by NSF Grants DMS-1222507, DMS-1522383, and IIS-1632935.

Appendices

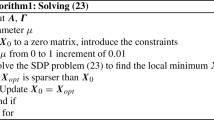

Appendix A: Semi-definite Relaxation Method Derived From \(l_1/l_2\)

Setting \(X = xx^T\), problem (12) is equivalent to

where \(|X|\) denotes the entrywise absolute value of \(X\).

Then after a change of variables:

and dropping the rank constraint, the previous problem can be written as a semidefinite programming problem:

If we set \(\mathrm {Card}(x) = k = m^2\), this is exactly the semidefinite relaxation in [6].
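The correspondence \(k = m^2\) can be checked numerically. The sketch below assumes (problem (12) is not reproduced in this appendix) that the sparsity constraint bounds the ratio \(\Vert x\Vert _1/\Vert x\Vert _2\) by \(m\). Under the lifting \(X = xx^T\), the sum of the entries of \(|X|\) equals \(\Vert x\Vert _1^2\) and \(\mathrm {Tr}(X)\) equals \(\Vert x\Vert _2^2\), and the Cauchy–Schwarz inequality gives \(\Vert x\Vert _1^2 \le \mathrm {Card}(x)\,\Vert x\Vert _2^2\). The variable names are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)
n, k = 10, 3

# Build a k-sparse vector: only k of the n entries are nonzero.
x = np.zeros(n)
support = rng.choice(n, size=k, replace=False)
x[support] = rng.standard_normal(k)

X = np.outer(x, x)          # lifted variable X = x x^T

l1_sq = np.abs(X).sum()     # sum of entries of |X|, equals ||x||_1^2
l2_sq = np.trace(X)         # Tr(X), equals ||x||_2^2
card = np.count_nonzero(x)  # Card(x)

# Squared l1/l2 ratio, bounded above by Card(x) by Cauchy-Schwarz.
ratio_sq = l1_sq / l2_sq
print(card, ratio_sq)
```

A bound of the form \(\Vert x\Vert _1/\Vert x\Vert _2 \le m\) therefore becomes a linear constraint in \(X\) with constant \(m^2\), which is where a cardinality target \(k\) would enter.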

Appendix B: Estimation of the Matrices A and B

For the estimates below, we assume that each column of the data matrix \(S\) represents an asset and has mean 0. The matrix \(S\) has size \(l\times n\), so we have \(l\) observations of \(n\) assets.

We define \(S_c\) and \(S_f\) in the following way:

where \(S_{ti}\) is the value at time t of the ith asset.
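As an illustration, the sketch below assumes the usual lagged convention: \(S_c\) ("current") holds rows \(1,\dots ,l-1\) of \(S\) and \(S_f\) ("forward") holds rows \(2,\dots ,l\), so that row \(t\) of \(S_f\) is the observation one step after row \(t\) of \(S_c\). This convention and the helper name `lagged_split` are assumptions made for the example, not taken from the text.

```python
import numpy as np

def lagged_split(S):
    """Split an l-by-n data matrix into lagged blocks.

    Assumed convention (hypothetical helper):
      S_c = rows 1..l-1 of S (current values)
      S_f = rows 2..l   of S (values one step ahead)
    """
    S = np.asarray(S, dtype=float)
    return S[:-1, :], S[1:, :]

# Toy 4-by-3 matrix used only to show the shapes; it is not demeaned.
S = np.arange(12, dtype=float).reshape(4, 3)
S_c, S_f = lagged_split(S)
print(S_c.shape, S_f.shape)
```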

Appendix B.1: Predictability

In [11], the authors discuss several methods for estimating \(\beta \) and \(\Gamma \) in the VAR(1) model (4). In most cases, the number of observations \(l\) exceeds the number of assets \(n\). In this case, and under the assumptions above, we can use the following estimates:

Therefore, the matrices in problem (7) can be estimated as:
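One common way to form such estimates is sketched below. It is an illustration under stated assumptions, not necessarily the exact formulas used here: the VAR(1) model is taken in the row form \(S_t = S_{t-1}\beta + Z_t\), \(\beta \) is estimated by ordinary least squares of \(S_f\) on \(S_c\), \(\Gamma \) is the sample covariance \(S^TS/l\), and the predictability quotient is taken as \(x^T\beta ^T\Gamma \beta x / x^T\Gamma x\), so that \(A = \beta ^T\Gamma \beta \) and \(B = \Gamma \). The function name and the simulated data are hypothetical.

```python
import numpy as np

def predictability_matrices(S):
    """Estimate (A, B) for a predictability quotient x^T A x / x^T B x.

    Assumptions (not taken from the text): least-squares VAR(1) fit,
    A = beta^T Gamma beta, B = Gamma, with Gamma the sample covariance.
    """
    S = np.asarray(S, dtype=float)
    S = S - S.mean(axis=0)            # enforce the zero-mean assumption
    S_c, S_f = S[:-1], S[1:]          # lagged blocks (assumed convention)
    # Least-squares solution of S_c @ beta ~= S_f (requires l > n).
    beta, *_ = np.linalg.lstsq(S_c, S_f, rcond=None)
    Gamma = S.T @ S / S.shape[0]
    A = beta.T @ Gamma @ beta
    B = Gamma
    return A, B

# Simulated stationary data: n independent AR(1) series.
rng = np.random.default_rng(0)
l, n = 200, 4
S = np.zeros((l, n))
for t in range(1, l):
    S[t] = 0.5 * S[t - 1] + rng.standard_normal(n)

A, B = predictability_matrices(S)
x = np.ones(n)
nu = (x @ A @ x) / (x @ B @ x)        # predictability of the equal-weight portfolio
print(nu)
```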

Appendix B.2: Direct OU Estimator

Using a method similar to that of Appendix B.1, we estimate the matrices A and B as follows:
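A minimal sketch of one possible construction follows. It assumes the standard discrete least-squares estimate of the Ornstein–Uhlenbeck rate for a portfolio value \(P_t = S_t x\), namely \(\hat{\theta }\,\Delta t = -\sum _t P_t (P_{t+1}-P_t) / \sum _t P_t^2\), which in matrix form is \(-x^T A x / x^T B x\) with \(A\) the symmetric part of \(S_c^T(S_f - S_c)\) and \(B = S_c^TS_c\). The sign and scaling conventions, the function names, and the simulated data are assumptions of this example and may differ from those used in the paper.

```python
import numpy as np

def direct_ou_matrices(S):
    """Build (A, B) so that -x^T A x / x^T B x is the least-squares
    OU rate estimate (times dt) for the portfolio S @ x.

    Assumes S is already zero-mean; conventions are illustrative.
    """
    S = np.asarray(S, dtype=float)
    S_c, S_f = S[:-1], S[1:]          # lagged blocks (assumed convention)
    M = S_c.T @ (S_f - S_c)
    A = 0.5 * (M + M.T)               # symmetrize; x^T M x == x^T A x
    B = S_c.T @ S_c
    return A, B

def ou_rate(x, A, B, dt=1.0):
    """Estimated mean-reversion rate of the portfolio with weights x."""
    return -(x @ A @ x) / (x @ B @ x) / dt

# Simulated data: independent AR(1) series with coefficient phi,
# whose discrete mean-reversion rate per step is about 1 - phi.
rng = np.random.default_rng(1)
l, n, phi = 5000, 3, 0.8
S = np.zeros((l, n))
for t in range(1, l):
    S[t] = phi * S[t - 1] + rng.standard_normal(n)

A, B = direct_ou_matrices(S)
x = np.array([1.0, 0.0, 0.0])         # portfolio holding only the first asset
rate = ou_rate(x, A, B)
print(rate)
```

Because the quotient only involves one-step increments and squared levels, it can be evaluated on any sample path without first fitting a VAR model.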

Cite this article

Long, X., Solna, K. & Xin, J. Three \(l_1\) Based Nonconvex Methods in Constructing Sparse Mean Reverting Portfolios. J Sci Comput 75, 1156–1186 (2018). https://doi.org/10.1007/s10915-017-0578-5

DOI: https://doi.org/10.1007/s10915-017-0578-5