Abstract

The surgical Apgar score predicts major 30-day postoperative complications using data assessed at the end of surgery. We hypothesized that evaluating the surgical Apgar score continuously during surgery may identify patients at high risk for postoperative complications. We retrospectively identified general, vascular, and general oncology patients at Vanderbilt University Medical Center. Logistic regression methods were used to construct a series of predictive models in order to continuously estimate the risk of major postoperative complications, and to alert care providers during surgery should the risk exceed a given threshold. Area under the receiver operating characteristic curve (AUROC) was used to evaluate the discriminative ability of a model utilizing a continuously measured surgical Apgar score relative to models that use only preoperative clinical factors or continuously monitored individual constituents of the surgical Apgar score (i.e. heart rate, blood pressure, and blood loss). AUROC estimates were validated internally using a bootstrap method. 4,728 patients were included. Combining the ASA PS classification with continuously measured surgical Apgar score demonstrated improved discriminative ability (AUROC 0.80) in the pooled cohort compared to ASA (0.73) and the surgical Apgar score alone (0.74). To optimize the tradeoff between inadequate and excessive alerting with future real-time notifications, we recommend a threshold probability of 0.24. Continuous assessment of the surgical Apgar score is predictive for major postoperative complications. In the future, real-time notifications might allow for detection and mitigation of changes in a patient’s accumulating risk of complications during a surgical procedure.

Introduction

Perioperative measurement of the risk of postoperative complications is important in guiding medical decision making. Understanding when a patient’s risk profile changes during a surgical procedure is an important goal that might guide more timely interventions and triage decisions and enhance communication among clinicians. Despite rapid technological advances, the state of the art in perioperative risk measurement and in real-time notification of dynamic changes in operative risk is still quite limited [1].

Since early identification of high-risk patients and appropriate intervention aimed at improving patient outcome can reduce the length of hospital stay [2], morbidity, and mortality [3–5], multiple risk scores have been designed to identify vulnerable patient populations pre- and post-operatively [3–6]. However, most of these risk scores are based on complicated algorithms and are not easily applied [6].

In 2007 Gawande et al. developed the surgical Apgar score (sAs), a ten-point scoring system based on lowest intraoperative mean arterial blood pressure (MAP), lowest heart rate (HR), and estimated blood loss (EBL) during surgery [3] (Table 1). This score can be easily assessed at the conclusion of the surgery and has proven to be applicable in most surgical subspecialties [7–11]. The sAs predicts an individual patient’s risk for major postoperative complications as defined by the National Surgical Quality Improvement Program (NSQIP) [12] and death within 30 days following surgery [3, 8]. It is also possible to monitor sAs trends throughout a case. Real-time assessment of the sAs, together with notification systems that apprise clinicians of rapid changes in a patient’s sAs, may give providers an objective tool to support their decision making. Given the rise in adoption of perioperative information management systems [13, 14], we hypothesized that a model based on continuous sAs monitoring may be used intraoperatively to identify patients at high risk of postoperative complications.

Methods

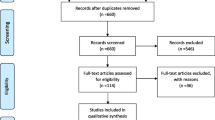

The Vanderbilt University Human Research Protection Program, Nashville, TN, approved the study. We conducted a retrospective evaluation of patients who had general, vascular, or general oncology surgery at Vanderbilt University Medical Center between January 1, 2009 and December 31, 2011. Intraoperative data were extracted from the Vanderbilt Medical Center’s perioperative data warehouse.

Patients ≥18 years of age who underwent surgery under general anesthesia and had completed electronic anesthesia records were included in the study. Patients who received care in our off-campus surgical centers, non-operative cases (i.e., bronchoscopy cases, dental procedures, procedures in the intensive care unit, and gastrointestinal, radiological, and electrophysiology cases), and all organ donors were excluded. We also excluded patients with only a single documented blood loss recording at the end of the case when that recording was greater than 100 mL. This allowed us to avoid sudden artificial changes in sAs trends at the end of a case in patients whose blood loss was not documented as it occurred throughout the procedure.

The following variables were then extracted from Vanderbilt’s perioperative data warehouse using Microsoft SQL Server technology (Microsoft Corporation, Redmond, WA): patient demographics, surgery date and starting time, length of the surgical procedure, type of primary surgical procedure, indication (emergency or elective procedure), American Society of Anesthesiologists Physical Status Classification (ASA classification), and hospital discharge date. All values for HR, MAP, and EBL were extracted from the database as time-stamped data.

The sAs was calculated as previously described [3] each time new information (i.e., vital signs or EBL) was documented in the record (typically every 30–60 s). The initial set of vital signs, defined as the patient’s baseline, was captured once the patient reached sufficient anesthetic depth to start the surgery. HR values outside the range of 15 to 200 beats per minute and MAP values outside the range of 25 to 180 mmHg were interpreted as artifact and discarded. On average, EBL was recorded at 15-min intervals during standard surgical procedures without extensive blood loss.
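The running calculation described above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the point cut-offs are those of the published Gawande scoring [3] and should be checked against Table 1, the record layout `(minute, hr, map, ebl)` is hypothetical, EBL is assumed to be documented as a cumulative total in mL, and the special handling of pathologic bradyarrhythmia in the original score is omitted.

```python
HR_VALID = (15, 200)    # beats per minute; values outside are treated as artifact
MAP_VALID = (25, 180)   # mmHg; values outside are treated as artifact


def ebl_points(ebl_ml):
    """Points for estimated blood loss (assumed cumulative, in mL)."""
    if ebl_ml > 1000:
        return 0
    if ebl_ml > 600:
        return 1
    if ebl_ml > 100:
        return 2
    return 3


def map_points(lowest_map):
    """Points for the lowest mean arterial pressure seen so far."""
    if lowest_map < 40:
        return 0
    if lowest_map < 55:
        return 1
    if lowest_map < 70:
        return 2
    return 3


def hr_points(lowest_hr):
    """Points for the lowest heart rate seen so far."""
    if lowest_hr > 85:
        return 0
    if lowest_hr > 75:
        return 1
    if lowest_hr > 65:
        return 2
    if lowest_hr > 55:
        return 3
    return 4


def running_sas(records):
    """Return [(minute, score)], recomputed each time a record arrives.

    records: iterable of (minute, hr, map, ebl); any of hr/map/ebl may be None.
    """
    lowest_hr = lowest_map = None
    ebl = 0.0
    scores = []
    for minute, hr, mean_ap, ebl_now in records:
        if hr is not None and HR_VALID[0] <= hr <= HR_VALID[1]:
            lowest_hr = hr if lowest_hr is None else min(lowest_hr, hr)
        if mean_ap is not None and MAP_VALID[0] <= mean_ap <= MAP_VALID[1]:
            lowest_map = mean_ap if lowest_map is None else min(lowest_map, mean_ap)
        if ebl_now is not None:
            ebl = max(ebl, ebl_now)
        # A score is only defined once both vital signs have a valid value.
        if lowest_hr is not None and lowest_map is not None:
            score = hr_points(lowest_hr) + map_points(lowest_map) + ebl_points(ebl)
            scores.append((minute, score))
    return scores
```

Because the score depends on running minima and cumulative blood loss, it can stay flat or fall during a case but never rise.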

Our primary endpoint was death within 30 days of surgery or the occurrence of major complications displayed in Table 2 based on the NSQIP registry, which collects peri- and postoperative data from various institutions for a comparative analysis of complication rates and surgical outcomes [15, 16].

Statistical analysis

Pairwise analyses were performed to evaluate the associations between demographic and operative patient characteristics and major postoperative complications within 30 days of surgery. Logistic regression was used to assess the association of end-of-case sAs with investigated outcomes. P-values less than 0.05 were considered statistically significant.

Logistic regression models were used to intraoperatively update the risk of major complications, conditional on preoperative and continuously monitored clinical factors, including HR, MAP, EBL, sAs, and derived factors. Logistic regression was implemented by assigning each patient’s outcome to every corresponding intraoperative record. At each intraoperative time-point, all continuously monitored factors were additionally summarized using two derived factors: the largest drop from baseline to the most current measurement, and a measure that we denote “insult.” Insult represents the cumulative drop in a continuously monitored factor from baseline to the current measurement multiplied by time (Fig. 1). Once the value is below baseline, the contribution to insult is positive. When the current value of a continuously monitored factor is greater than or equal to the baseline value, the contribution to insult is zero. The magnitude of insult may grow over the course of a procedure, but not shrink.

Fig. 1 Illustration of an example heart rate (bpm) over procedure time (min) and the positive, cumulative contribution that every drop in heart rate below the baseline value makes to HR insult. Baseline is defined as the first vital sign captured once a patient reaches sufficient anesthetic depth to start the surgery. Shaded areas mark heart rate values below baseline during the procedure. The second graph illustrates how drops in heart rate cumulatively contribute to HR insult and thereby account for the depicted rise in HR insult over time.
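The two derived factors can be sketched as follows. This is an illustrative reading of the definition, not the authors' code: the function name is hypothetical, the first value is taken as baseline, and the area below baseline is accumulated with a simple right-endpoint rectangle rule, which is an assumption because the exact integration scheme is not stated.

```python
def insult_and_largest_drop(times_min, values):
    """Return [(time, insult, largest_drop)] for one monitored factor.

    times_min: measurement times in minutes, increasing.
    values:    measurements (e.g., heart rate); values[0] is the baseline.
    """
    baseline = values[0]
    insult = 0.0
    largest_drop = 0.0
    trajectory = []
    for i, value in enumerate(values):
        # Only values below baseline contribute; at or above baseline adds zero.
        drop = max(0.0, baseline - value)
        largest_drop = max(largest_drop, drop)
        if i > 0:
            dt = times_min[i] - times_min[i - 1]
            insult += drop * dt  # drop multiplied by elapsed time
        trajectory.append((times_min[i], insult, largest_drop))
    return trajectory
```

Since each increment is non-negative, insult computed this way can grow over the procedure but cannot shrink, which matches the property described in the text.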

Eight different models were constructed for each of the three surgical services, as described in Table 3. These models are denoted as follows: ‘ASA,’ ‘sAs,’ ‘sAs and ASA,’ ‘HR,’ ‘HR and ASA,’ ‘MAP,’ ‘MAP and ASA,’ and ‘HR, MAP, and ASA.’ Interactions between the current value of each continuously measured factor and each of its two associated derived factors were also considered.

Each risk model was used to evaluate a protocol for raising notifications intraoperatively. Based on the risk estimate at each intraoperative record, and for a sequence of threshold probabilities, we noted the procedure time at which the first notification would have been raised. Procedures where no notification was given were also noted. Box and whisker plots are used to display the times of first alert at various threshold probabilities. Since the ‘ASA’ model utilizes only preoperative information, an alert may only arise at the beginning of the surgical case. In contrast, the ‘sAs and ASA’ model may activate an alert at any time during the procedure.
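The notification protocol can be illustrated with a short sketch. It assumes a per-patient series of model risk estimates is already available; the function names and the example values are hypothetical, and an alert is taken to fire the first time the estimate strictly exceeds the threshold.

```python
def first_alert_time(times_min, risks, threshold):
    """Return the first time the risk estimate exceeds threshold, else None."""
    for t, risk in zip(times_min, risks):
        if risk > threshold:
            return t
    return None


def first_alerts_by_threshold(times_min, risks, thresholds):
    """Map each threshold probability to its first alert time (or None)."""
    return {thr: first_alert_time(times_min, risks, thr) for thr in thresholds}


# Hypothetical risk trajectory for one procedure.
times = [0, 30, 60, 90]
risks = [0.10, 0.20, 0.30, 0.45]
alerts = first_alerts_by_threshold(times, risks, [0.15, 0.24, 0.50])
```

In this example the lowest threshold alerts at 30 min, the 0.24 threshold at 60 min, and the highest threshold never alerts, which is the pattern the box and whisker plots summarize across patients.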

The discriminative value of notification protocols was assessed for each model and surgical service by constructing receiver operating characteristic (ROC) curves. Area under the ROC curve (AUROC) values were compared among models in a pairwise manner by constructing a 95 % confidence interval for the ratio of two AUROC values. The AUROC estimate in the general surgery cohort was internally validated using a bootstrap validation technique [17]. In addition to these summaries of model discriminative value, calibration curves associated with the ‘sAs and ASA’ model are presented for each surgery service at procedure times 0, 60, and 120 min.
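As an illustration of comparing two models by the ratio of their AUROCs, the sketch below computes AUROC as the probability that a case with a complication receives a higher score than a case without one, and forms a percentile bootstrap interval for the ratio. It is a sketch under stated assumptions, not the procedure used in the study: the resampling unit (one score per patient), the percentile method, and all function names are assumptions.

```python
import random


def auroc(scores, outcomes):
    """AUROC via pairwise comparison of positives and negatives (ties count 0.5)."""
    pos = [s for s, y in zip(scores, outcomes) if y == 1]
    neg = [s for s, y in zip(scores, outcomes) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative outcome")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def bootstrap_auroc_ratio_ci(scores_a, scores_b, outcomes, n_boot=1000, seed=0):
    """Percentile 95 % interval for AUROC(model A) / AUROC(model B)."""
    rng = random.Random(seed)
    n = len(outcomes)
    ratios = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        y = [outcomes[i] for i in idx]
        if len(set(y)) < 2:
            continue  # resample lacks one outcome class; AUROC undefined
        auc_b = auroc([scores_b[i] for i in idx], y)
        if auc_b == 0:
            continue  # ratio undefined
        auc_a = auroc([scores_a[i] for i in idx], y)
        ratios.append(auc_a / auc_b)
    if not ratios:
        raise ValueError("no usable bootstrap resamples")
    ratios.sort()
    lower = ratios[int(0.025 * len(ratios))]
    upper = ratios[min(len(ratios) - 1, int(0.975 * len(ratios)))]
    return lower, upper
```

An interval for the ratio that lies entirely above 1 would suggest that model A discriminates better than model B in the resampled population.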

All statistical analyses were computed using SAS 9.3 statistical software package (SAS Institute, Cary, NC) and R version 3.0.3 (Vienna, Austria).

Results

We obtained complete electronic intraoperative data on 4,728 patients who fulfilled our inclusion criteria: 1,924 general surgery patients, 1,795 general oncology patients, and 1,009 vascular surgery patients. Of 243,057 available patients, 238,329 were excluded for the reasons listed in Table 4. Demographic and intraoperative characteristics of the study population are displayed in Table 5. The incidence of major complications within 30 days of surgery was 16.11 % (95 % CI, 15.08–17.20), corresponding to a total of 762 patients. Major complications included 71 deaths (1.50 %, 95 % CI, 1.17–1.89) within 30 days of surgery. Mean age of the study population was 55 years, with patients suffering from major complications being on average 4 years older than patients without complications (p < 0.001). Increased patient age, higher ASA classification, and longer duration of the surgical procedure were associated with a statistically significant increase in adverse events (p < 0.001). The lowest intraoperative HR was significantly higher (62 versus 56, p < 0.001) and the lowest MAP was significantly lower (49 versus 51, p < 0.001) in patients with complications compared to patients without complications. Patients with EBL exceeding 800 mL were significantly more likely to suffer from adverse events (p < 0.001), as were patients with a lower sAs (p < 0.001). For every unit decrease in the sAs, the univariate odds of having a major postoperative complication increased by 62 % (OR 1.62; 95 % confidence interval [CI], 1.59–1.65; p < 0.001).
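As a worked illustration of the reported odds ratio, and assuming the univariate logistic model treats the sAs as a linear term on the log-odds scale (an assumption, since the model specification is not given here), a decrease of $k$ points multiplies the odds of a major complication by $1.62^{k}$:

```latex
\[
\mathrm{OR}_k = 1.62^{\,k}, \qquad
\mathrm{OR}_2 = 1.62^{2} \approx 2.62, \qquad
\mathrm{OR}_3 = 1.62^{3} \approx 4.25 .
\]
```

These figures describe odds rather than probabilities, so they should not be read as a 2.6-fold or 4.3-fold increase in absolute risk.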

The association between major postoperative complications and various ranges of sAs is illustrated in Table 6. Among 402 patients with a score of 9 or 10, only 32 patients (8.0 %, 95 % CI, 5.51–11.05) suffered from major complications. In comparison, among 443 patients with a score of ≤ 4, 206 patients (46.5 %, 95 % CI, 41.78–51.27) experienced adverse events postoperatively. Patients who had end-of-case sAs between 0 and 4 had almost a five-times increased risk (Relative Risk [RR] 4.8, 95 % CI, 4.1–5.6; p < 0.001) of suffering from major postoperative complications compared to patients with a sAs between 7 and 8. On the other hand, patients with an end-sAs of 9 or 10 had a mildly decreased risk (RR 0.9, 95 % CI, 0.6–1.3; p < 0.001) of experiencing major complications compared to patients with a score of 7–8.

Out of 4,728 patients, 762 (16.11 %) experienced one or more adverse events. The five most frequent major complications in our study population were ventilator use for more than 48 h (27.86 %), wound disruption (15.03 %), deep or organ-space surgical site infection (12.58 %), renal failure (10.13 %), and sepsis (8.25 %).

Table 7 summarizes the discriminative value of each model using the AUROC, with 95 % bootstrap confidence intervals. In general surgery, the AUROC for the ‘ASA’ model is 0.69 compared to 0.71 for the ‘sAs’ model. The ‘HR and ASA’ and the ‘HR, MAP, and ASA’ models demonstrated a slightly better predictive ability than the ‘sAs’ model, each with an AUROC of 0.72. Nevertheless, the ‘sAs and ASA’ model results in a better predictive ability, with an AUROC of 0.74. The bootstrap validated estimate of AUROC for the ‘sAs and ASA’ model in general surgery cases was 0.74, indicating very little ‘optimism’ due to model overfitting (Table 7).

In vascular surgery, the AUROCs for the ‘ASA’ and ‘sAs’ models are similar, with an AUROC of 0.73 and 0.72, respectively. In this specialty, too, the combined ‘sAs and ASA’ model exhibits a better predictive ability, with an AUROC of 0.80 (95 % CI, 0.78–0.81). In general oncology the ‘ASA’ model predicts complications slightly more accurately (AUROC 0.71) than the ‘sAs’ model (AUROC 0.70), ‘HR’ model (AUROC 0.70), and the ‘MAP’ model (AUROC 0.59). Among all three subspecialties investigated in the study, the discriminative value of the ‘sAs and ASA’ model is the strongest in vascular surgery with an AUROC of 0.80.

When all subspecialties were merged, the predictive ability of the ‘sAs’ model (AUROC 0.74) was slightly better than that of the ‘ASA’ model (AUROC 0.73). The ‘sAs and ASA’ model (AUROC 0.80) remained superior to the ‘HR’ model (AUROC 0.66), the ‘HR and ASA’ model (AUROC 0.75), the ‘MAP’ model (AUROC 0.60), the ‘MAP and ASA’ model (AUROC 0.72), and the ‘HR, MAP, and ASA’ model (AUROC 0.73). Calibration curves associated with the ‘sAs and ASA’ model for each surgical service at procedure times 0, 60, and 120 min (Fig. 2) indicate acceptable calibration. Table 8 describes the pairwise comparison of the eight risk models, using ratios (expressed as percentages) of the corresponding AUROCs.
In particular, the AUROC for the combined ‘sAs and ASA’ model was improved by 7.9 % (95 % CI, 6.3–9.6) relative to the ‘ASA’ model in general surgery cases, 9.1 % (95 % CI, 7.1–11.0) in vascular surgery, 10.1 % (95 % CI, 6.4–13.5) in oncology surgery, and 8.5 % (95 % CI, 7.8–9.4) in the combined cohort. The AUROC for the combined ‘sAs and ASA’ model, relative to the ‘sAs’ model, was improved by 4.5 % (95 % CI, 3.6–5.5) in general surgery, 11.3 % (95 % CI, 9.4–13.7) in vascular surgery, 12.0 % (95 % CI, 7.9–17.2) in oncology surgery, and 7.2 % (95 % CI, 6.8–7.8) in the pooled cohort. In summary, in all three specialties and in the pooled cohort, combining ASA classification with sAs trend analysis showed a superior discriminative value in comparison to either risk score alone (Table 8).

Figure 3 displays box and whisker plots for the times to first hypothetical intraoperative notification, at increasing threshold probabilities. A notification criterion based exclusively on the ASA classification would have raised a notification either at the very beginning of the procedure or not at all since the ASA classification utilizes only preoperative information. In contrast, utilizing continuously monitored intraoperative factors in addition to ASA classification enables notification at any time during the surgical procedure. We believe that this feature is largely responsible for gains in sensitivity and specificity. Outliers in Fig. 3 may be attributed to a paucity of patients who had a high probability of experiencing adverse events. Although threshold levels for notification should be optimized and validated in a prospective manner, our findings indicate that a threshold probability of 0.24 would exhibit acceptable specificity (0.85) and sensitivity (0.53) while maximizing clinical utility and avoiding premature activation.
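The trade-off at a given threshold can be made concrete with a small sketch. It assumes a patient-level summary in which a patient counts as alerted if the highest intraoperative risk estimate exceeds the threshold; that summary, the function name, and the example values are assumptions for illustration and may differ from how sensitivity and specificity were computed in the study.

```python
def sensitivity_specificity(max_risks, outcomes, threshold):
    """Patient-level sensitivity and specificity of an alert at threshold.

    max_risks: highest risk estimate reached during each procedure.
    outcomes:  1 if the patient had a major complication, else 0.
    """
    tp = fn = tn = fp = 0
    for risk, outcome in zip(max_risks, outcomes):
        alerted = risk > threshold
        if outcome == 1:
            if alerted:
                tp += 1
            else:
                fn += 1
        else:
            if alerted:
                fp += 1
            else:
                tn += 1
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need patients with and without complications")
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical cohort of four patients evaluated at the 0.24 threshold.
sens, spec = sensitivity_specificity([0.10, 0.30, 0.20, 0.30], [0, 1, 1, 0], 0.24)
```

Raising the threshold generally trades sensitivity for specificity, which is why the threshold would need prospective tuning before clinical use.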

Fig. 3 Box and whisker plots for the times to first hypothetical intraoperative notification, at increasing threshold probabilities, for each surgical service and the pooled cohort. At high threshold probabilities, alerts are raised later during the surgical procedure. Alerts are activated earlier in the case when the threshold probability is set low, since with this low tolerance for postoperative complications the threshold is exceeded more readily.

Discussion

In this study we found that continuous sAs measurement can provide useful information about acute changes in a patient’s status and his/her risk for postoperative complications in general, vascular, and general oncology surgery. General surgery and vascular surgery were chosen for our study since the sAs had originally been validated in these subspecialties. General oncology was chosen due to the large sample size of this patient cohort.

We have shown that a continuously monitored sAs and its associated derived factors improve on the discriminative value of ASA classification alone for postoperative complications. Combining the ASA classification with the sAs improves predictive ability in all three surgical specialties, with the highest AUROC of 0.80 in vascular surgery. Even when all three subspecialties were pooled, the predictive ability was comparable to that of each subspecialty examined separately. By combining the ASA classification with the sAs, the patient’s preoperative condition was paired with his/her intraoperative performance. This allows clinicians to provide patients with a realistic estimate of the postoperative course. Lastly, we identified various time-points throughout the procedure at which the first notification would be raised for a sequence of threshold probabilities.

Our findings were consistent with previous studies, as we observed that the sAs could predict a patient’s risk of suffering from major postoperative complications within 30 days following surgery [3, 7, 18].

Our results were consistent with findings by Hyder et al. [19], who assessed the predictive ability of the sAs computed on a continuous basis throughout a surgical procedure or at sampling intervals ranging from five to ten minutes. Hyder et al. determined that more frequent assessment of the sAs improved its predictive ability, yet greater sampling intervals in their study population enhanced the specificity of the sAs, allowing for better model discrimination [19]. We extended previous studies by establishing a notification model based on risk estimates that would alert clinicians to a change in patient condition warranting medical attention. In selecting an appropriate threshold for raising alerts, the contribution of artifacts may be limited. In our study we found that a threshold probability of 0.24 would exhibit acceptable sensitivity and specificity. However, the utility and the interpretation of this threshold probability need to be validated in a prospective study.

Our study is limited by the following factors. First, this is a single center study conducted at a major academic institution and is restricted to an adult patient population undergoing general, vascular, or general oncology surgery under general anesthesia. Considering that our hospital draws patients from a wide geographic radius, postoperative complications of some patients who sought postoperative care elsewhere would not have been captured in the study if these patients were lost to follow-up. However, 95.2 % of all patients included in the study had a follow-up visit at our hospital. Furthermore, the assessment of EBL has been criticized as imprecise and may present a major limitation to the sAs [3, 7]. However, the original authors argue that the blood loss categories used to calculate the score are wide enough to make sufficiently accurate assessment of intraoperative blood loss possible [3]. Moreover, the manual assessment of EBL, routinely updated every 15 min, is a limitation of our study, as the real-time score assessment relies on EBL data that may be outdated. Another limitation of our study is the subjectivity and interrater variability in the allocation of the ASA classification among different physicians and the inaccuracy of clinical interpretation [20, 21]. Lastly, although extreme values in HR and MAP were excluded, artifactual measurements within these limits might be present.

A variety of measures and intraoperative variables are used to assess a patient’s condition during a surgical case, providing clinicians with an overabundance of perioperative data elements [22, 23]. Owing to this large amount of information, real-time notifications have been introduced to analyze data elements, enable trend detection, and alert clinicians to abnormal values or a patient’s deteriorating condition [22]. Real-time notifications draw the clinicians’ attention to acute changes that may warrant timely intervention to improve patient outcome [24, 25]. Providing real-time notifications in anesthesia has proven to reduce hospital costs, improve patient care, and prevent postoperative complications [22, 26]. Real-time notifications about changes in a patient’s sAs trend will motivate providers to better allocate resources driven by the patient’s tailored, acute physiology.

In a future prospective study we intend to investigate the utility of providing the perioperative team with a real-time display of the patient’s sAs. With such a study we hope to determine how continuous assessment of the sAs over the course of a surgical case will affect clinical decision-making, patient care, and postoperative patient outcomes. Real-time display of sAs trends might lead to a new approach to anesthetic and operative management [27, 28], with earlier medical interventions aimed at stabilizing the patient intraoperatively and reducing perioperative morbidity and mortality. This approach to integrating real-time data capture and analysis with currently available alert mechanisms should ultimately facilitate a greater impact of our medical systems [29].

References

Lawrence, J. P., Advances and new insights in monitoring. Thorac. Surg. Clin. 15(1):55–70, 2005. doi:10.1016/j.thorsurg.2004.09.002.

Ivanov, J., Borger, M. A., Rao, V., and David, T. E., The Toronto Risk Score for adverse events following cardiac surgery. Can. J. Cardiol. 22(3):221–227, 2006.

Gawande, A. A., Kwaan, M. R., Regenbogen, S. E., Lipsitz, S. A., and Zinner, M. J., An Apgar score for surgery. J. Am. Coll. Surg. 204(2):201–208, 2007. doi:10.1016/j.jamcollsurg.2006.11.011.

Dalton, J. E., Kurz, A., Turan, A., Mascha, E. J., Sessler, D. I., and Saager, L., Development and validation of a risk quantification index for 30-day postoperative mortality and morbidity in noncardiac surgical patients. Anesthesiology 114(6):1336–1344, 2011. doi:10.1097/ALN.0b013e318219d5f9.

Sessler, D. I., Sigl, J. C., Manberg, P. J., Kelley, S. D., Schubert, A., and Chamoun, N. G., Broadly applicable risk stratification system for predicting duration of hospitalization and mortality. Anesthesiology 113(5):1026–1037, 2010. doi:10.1097/ALN.0b013e3181f79a8d.

Barnett, S., and Moonesinghe, S. R., Clinical risk scores to guide perioperative management. Postgrad. Med. J. 87(1030):535–541, 2011. doi:10.1136/pgmj.2010.107169.

Regenbogen, S. E., Ehrenfeld, J. M., Lipsitz, S. R., Greenberg, C. C., Hutter, M. M., and Gawande, A. A., Utility of the surgical apgar score: validation in 4119 patients. Arch. Surg. 144(1):30–36, 2009. doi:10.1001/archsurg.2008.504. discussion 37.

Reynolds, P. Q., Sanders, N. W., Schildcrout, J. S., Mercaldo, N. D., and St Jacques, P. J., Expansion of the surgical Apgar score across all surgical subspecialties as a means to predict postoperative mortality. Anesthesiology 114(6):1305–1312, 2011. doi:10.1097/ALN.0b013e318219d734.

Haynes, A. B., Regenbogen, S. E., Weiser, T. G., Lipsitz, S. R., Dziekan, G., Berry, W. R., and Gawande, A. A., Surgical outcome measurement for a global patient population: validation of the Surgical Apgar Score in 8 countries. Surgery 149(4):519–524, 2011. doi:10.1016/j.surg.2010.10.019.

Prasad, S. M., Ferreria, M., Berry, A. M., Lipsitz, S. R., Richie, J. P., Gawande, A. A., and Hu, J. C., Surgical apgar outcome score: perioperative risk assessment for radical cystectomy. J. Urol. 181(3):1046–1052, 2009. doi:10.1016/j.juro.2008.10.165. discussion 1052–1043.

Zighelboim, I., Kizer, N., Taylor, N. P., Case, A. S., Gao, F., Thaker, P. H., Rader, J. S., Massad, L. S., Mutch, D. G., and Powell, M. A., “Surgical Apgar Score” predicts postoperative complications after cytoreduction for advanced ovarian cancer. Gynecol. Oncol. 116(3):370–373, 2010. doi:10.1016/j.ygyno.2009.11.031.

Khuri, S. F., Daley, J., Henderson, W., Barbour, G., Lowry, P., Irvin, G., Gibbs, J., Grover, F., Hammermeister, K., Stremple, J. F., et al., The national veterans administration surgical risk study: risk adjustment for the comparative assessment of the quality of surgical care. J. Am. Coll. Surg. 180(5):519–531, 1995.

Simpao, A. F., Ahumada, L. M., Galvez, J. A., and Rehman, M. A., A review of analytics and clinical informatics in health care. J. Med. Syst. 38(4):45, 2014. doi:10.1007/s10916-014-0045-x.

Stol, I. S., Ehrenfeld, J. M., and Epstein, R. H., Technology diffusion of anesthesia information management systems into academic anesthesia departments in the United States. Anesth. Analg. 118(3):644–650, 2014. doi:10.1213/ANE.0000000000000055.

Khuri, S. F., Daley, J., Henderson, W., Hur, K., Demakis, J., Aust, J. B., Chong, V., Fabri, P. J., Gibbs, J. O., Grover, F., Hammermeister, K., Irvin, G., 3rd, McDonald, G., Passaro, E., Jr., Phillips, L., Scamman, F., Spencer, J., and Stremple, J. F., The Department of Veterans Affairs’ NSQIP: the first national, validated, outcome-based, risk-adjusted, and peer-controlled program for the measurement and enhancement of the quality of surgical care. National VA Surgical Quality Improvement Program. Ann. Surg. 228(4):491–507, 1998.

Khuri, S. F., The NSQIP: a new frontier in surgery. Surgery 138(5):837–843, 2005. doi:10.1016/j.surg.2005.08.016.

Harrell, F. E., Jr., Lee, K. L., and Mark, D. B., Multivariable prognostic models: issues in developing models, evaluating assumptions and adequacy, and measuring and reducing errors. Stat. Med. 15(4):361–387, 1996. doi:10.1002/(SICI)1097-0258(19960229)15:4<361::AID-SIM168>3.0.CO;2-4.

Regenbogen, S. E., Bordeianou, L., Hutter, M. M., and Gawande, A. A., The intraoperative Surgical Apgar Score predicts postdischarge complications after colon and rectal resection. Surgery 148(3):559–566, 2010. doi:10.1016/j.surg.2010.01.015.

Hyder, J. A., Kor, D. J., Cima, R. R., and Subramanian, A., How to improve the performance of intraoperative risk models: an example with vital signs using the surgical apgar score. Anesth. Analg. 117(6):1338–1346, 2013. doi:10.1213/ANE.0b013e3182a46d6d.

Aronson, W. L., McAuliffe, M. S., and Miller, K., Variability in the American society of anesthesiologists physical status classification scale. AANA J. 71(4):265–274, 2003.

Sankar, A., Johnson, S. R., Beattie, W. S., Tait, G., and Wijeysundera, D. N., Reliability of the American Society of Anesthesiologists physical status scale in clinical practice. Br. J. Anaesth. 113(3):424–432, 2014. doi:10.1093/bja/aeu100.

Wanderer, J. P., Sandberg, W. S., and Ehrenfeld, J. M., Real-time alerts and reminders using information systems. Anesthesiol. Clin. 29(3):389–396, 2011. doi:10.1016/j.anclin.2011.05.003.

Nouei, M. T., Kamyad, A. V., Sarzaeem, M., and Ghazalbash, S., Developing a genetic fuzzy system for risk assessment of mortality after cardiac surgery. J. Med. Syst. 38(10):102, 2014. doi:10.1007/s10916-014-0102-5.

Radhakrishna, K., Waghmare, A., Ekstrand, M., Raj, T., Selvam, S., Sreerama, S. M., and Sampath, S., Real-time feedback for improving compliance to hand sanitization among healthcare workers in an open layout ICU using radiofrequency identification. J. Med. Syst. 39(6):68, 2015. doi:10.1007/s10916-015-0251-1.

Marchand-Maillet, F., Debes, C., Garnier, F., Dufeu, N., Sciard, D., and Beaussier, M., Accuracy of patient’s turnover time prediction using RFID technology in an academic ambulatory surgery center. J. Med. Syst. 39(2):12, 2015. doi:10.1007/s10916-015-0192-8.

Nair, B. G., Newman, S. F., Peterson, G. N., Wu, W. Y., and Schwid, H. A., Feedback mechanisms including real-time electronic alerts to achieve near 100% timely prophylactic antibiotic administration in surgical cases. Anesth. Analg. 111(5):1293–1300, 2010. doi:10.1213/ANE.0b013e3181f46d89.

Gabriel, R. A., Gimlich, R., Ehrenfeld, J. M., and Urman, R. D., Operating room metrics score card-creating a prototype for individualized feedback. J. Med. Syst. 38(11):144, 2014. doi:10.1007/s10916-014-0144-8.

Malapero, R. J., Gabriel, R. A., Gimlich, R., Ehrenfeld, J. M., Philip, B. K., Bates, D. W., and Urman, R. D., An anesthesia medication cost scorecard--concepts for individualized feedback. J. Med. Syst. 39(5):48, 2015. doi:10.1007/s10916-015-0226-2.

Ehrenfeld, J. M., The current and future needs of our medical systems. J. Med. Syst. 39(2):16, 2015. doi:10.1007/s10916-015-0212-8.

Additional information

This article is part of the Topical Collection on Systems-Level Quality Improvement

Cite this article

Jering, M.Z., Marolen, K.N., Shotwell, M.S. et al. Combining the ASA Physical Classification System and Continuous Intraoperative Surgical Apgar Score Measurement in Predicting Postoperative Risk. J Med Syst 39, 147 (2015). https://doi.org/10.1007/s10916-015-0332-1

DOI: https://doi.org/10.1007/s10916-015-0332-1