Abstract

Various natural and man-made disasters as well as major political events (like riots) have increased the importance of understanding geographic failures and how correlated failures impact networks. Since mission critical networks are overlaid as virtual networks over a physical network infrastructure, forming multilayer networks, there is an increasing need for methods to analyze multilayer networks for geographic vulnerabilities. In this paper, we present a novel impact-based resilience metric. The metric, called Network Impact Resilience, borrows ideas from performability to combine network impact with state probability. The idea is that the highest impact to the mission of a network should drive its resilience metric. Furthermore, we present a state space analysis method that analyzes multilayer networks for geographic vulnerabilities. To demonstrate the methods, the inability to provision a given number of upper layer services is used as the criterion for network failure. Mapping techniques for multilayer network states are presented. Simplified geographic state mapping techniques that reduce enumeration costs are also presented and tested. Finally, these techniques are tested on networks of varying sizes.

1 Introduction

Mission critical networks, such as those used for air traffic control [1], emergency response, power grid monitoring, and other national infrastructure, are increasingly being implemented on shared infrastructures using multilayer network architectures. That is, mission critical networks are provided over underlying network resources. While this increases the flexibility of providing such services over the underlying network infrastructure, it also increases the risk to the mission critical networks.

Disasters and other geographically correlated events present significant challenges for network designers for several reasons. Mission critical user requirements are typically more important during these types of network challenges. This has been documented during several well known events like Hurricane Katrina, the Tohoku Earthquake and the Indian Ocean Tsunami. How do we evaluate and protect mission critical networks against geographic challenges? Multilayer networks warrant additional concern since geographic events can affect nodes and infrastructure in multiple layers of the network simultaneously (see Fig. 1) leading to challenges in determining network performance.

Work related to geographic vulnerabilities in networks frequently focuses on one aspect of the problem like topology considerations or graph theoretic network measures. The users (especially mission critical users) and the impact that a geographic event will have on those users is rarely considered. To address these challenges, we take a look at the network design and evaluation process as it relates to geographic failures in mission critical networks. We offer the following as solutions to these challenges:

- A novel impact driven, probability based resilience evaluation tool referred to as the Network Impact Resilience (NIR) metric
- A self-pruning, flexible state based method, referred to as the Self-Pruning Network State Generation (SP-NSG) algorithm, to analyze multilayer networks for geographic vulnerabilities based on user requirements
- A workflow for network design and analysis to support disaster communications

State based analysis offers tremendous flexibility in the types of network testing that are both possible and demonstrated in this work. Many other methods tend to be tied to a particular network measure.

The Self-Pruning Network State Generation algorithm (SP-NSG) uses the idea of pruning the state space during the execution of the algorithm to reduce the states that need to be investigated, which drastically reduces computation time. One of the benefits is that it allows an effective method to identify geographic vulnerabilities in networks. Even with intelligent state space pruning methods, the analysis can become intractable for large networks. To maintain tractability in large networks, we also present a K-means clustering method. It relies on the idea of reducing the number of nodes for purposes of the state based analysis using clustering. In addition to improving tractability, we propose a method to analyze multilayer networks that simultaneously maps geographic failures onto multiple layers and analyzes the performance.

We demonstrate the accuracy of the SP-NSG with K-means clustering using simulation and observe our approach to be accurate more than 99 % of the time. Additionally, the performance of the SP-NSG algorithm is reported. With proper constraints applied to the analysis, we were able to complete state based analysis techniques on very large multilayer networks that were previously intractable.

The rest of the paper is organized as follows. In Sect. 2, we discuss the process to evaluate networks for disaster impact. This is followed by a discussion of related work in Sect. 3. We then present the Network Impact Resilience metric in Sect. 4. The SP-NSG method for the single-layer network is presented in Sect. 5, followed by its extension to the multilayer network in Sect. 6. We describe the evaluation methodology in Sect. 7, while the results are presented in Sect. 8. Finally, we present discussion and conclusions in Sects. 9 and 10.

2 Disaster Planning: Impact Based Approach

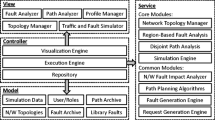

One of the challenges facing network planners is the occurrence of disasters and how to serve the needs of emergency responders and other mission critical users during and following disasters. The Federal Aviation Administration (FAA) System Wide Information Management (SWIM) network is an example of a mission critical multilayer network [1]. To address this problem, we take a look at the process to create a network that considers the impact of disasters. Without considering specific disaster scenarios in the context of the mission critical requirements, it is difficult to model the disaster and the impacts immediately following the disaster. We propose a process that incorporates disaster modeling into the design process and factors the vulnerabilities back into the design as shown in Fig. 2.

The Topology Design component involves the topology selection. Frequently, the topology node locations are based on physical or cost-based constraints. Links may be added based on node connectivity derived from user requirements. Augmentation algorithms based on link protection, like Shared Risk Link Groups (SRLGs), or based on graph theoretic network measures are commonly used here.

The Provisioning component typically allocates link sizes, creates higher layer topologies, and provisions the network resources to meet user requirements. Capacity based SRLGs, virtual topologies, and many other protection algorithms that involve network capacity planning are used. Integer Linear Programs (ILP) and other optimization algorithms and heuristics are commonly used to provision resources along diverse paths or in using a variety of protection schemes. It is not uncommon for Topology Design and Provisioning to be considered concurrently.

Frequently, the Evaluation component is not included, on the assumption that the Topology Design and Provisioning are adequate for disaster planning purposes. Since Topology Design and Provisioning tend to be more concerned with the day to day operation of the network, the design tends to focus on common failure modes (like random link or node failures). When the Evaluation component is included, techniques like graph metrics or connectivity/capacity based approaches are typical but do not necessarily consider the specific mission critical user demands. Our contention is that without disaster evaluation that considers mission critical user requirements, extremely unlikely events like natural disasters will eventually occur and have catastrophic consequences during disaster response and recovery. The NIR and SP-NSG are proposed for the Evaluation component. The NIR using the SP-NSG produces two outputs. The first output is a metric that gives an indication of the disaster resilience of the network being evaluated. The second output is a list of specific failure modes or geographic vulnerabilities found.

3 Related Work

Network resilience analysis is a varied and well studied research area. This work is a cross section of several research areas including geographically correlated failures, multiple layer networks, and network resilience metrics. We did not find existing research that generates a comprehensive set of geographic vulnerabilities in networks or resilience metrics that evaluate those vulnerabilities based on specific mission critical user requirements in multilayer networks.

For comprehensive surveys on the subject of network survivability to disasters please refer to the work by Sterbenz et al. [2] and Habib et al. [3].

3.1 Geographic Vulnerabilities in Networks

Much of the work related to finding geographic vulnerabilities in networks focuses on reachability of network components (or connectivity) or degradation of network capacity during a given geographically correlated failure [4–8]. There is also a significant amount of work that focuses on graph theoretic approaches that is discussed in Sect. 3.3.

Banerjee et al. [4] presented an algorithm that uses intersections of regions around nodes and links to create a complete set of regions that can be used to analyze region based failures in networks. Li et al. [5] expand on the work in [4] by considering multiple regions and link capacity.

Rahnamay-Naeini et al. [6] generate link failures with a geographically correlated stochastic model known as the Strauss point process. They then compute the average two-terminal connectivity and all-terminal connectivity using Monte Carlo simulation. Sterbenz et al. pioneered the concept of geographic network challenges using simulation as described in [47]. Our work is not stochastic in nature; rather, it produces all possible failure modes. Neumayer and Modiano [7] calculate probabilities of geographically correlated failures in the presence of randomly placed line segments that can intersect network links. Recently, Saito [8] used probabilistic geometric models to determine geographic vulnerabilities in networks. In [9, 10], Agarwal et al. create hippodromes around network components based on an attack radius. They were able to use geographically correlated events to estimate the probability of failure of these components using average two-terminal reliability and capacity degradations.

3.2 Multilayer Network Survivability

A user requirement can be protected using end-to-end protection or link (or node) level protection. These protection techniques can be implemented at the optical layer (wave protection or SRLGs), the hybrid data link/network layer [Multiprotocol Label Switching (MPLS) or Generalized MPLS], the network layer (IP rerouting), or higher layers using techniques like virtual network embedding (VNE) or virtual topologies. Coordination between layer protection is typically based on technology reroute times with tradeoffs, as described in [11, 12].

Survivability of multilayer networks has been addressed before [13–16, 47], but not significantly from the perspective of mission critical networks or for large geographically correlated events except by simulation methods.

State based approaches include the multilayer model of Oikonomou, Sinha, and Doverspike [17], which examines the most probable states first to minimize the number of states analyzed. In our work, we utilize and extend the algorithms in [18] that use state space pruning instead of most probable states. In [19], Lee and Modiano propose a method of choosing diverse routing in multilayer networks using the Max-Flow Min-Cut theorem. In [20], Pacharintanakul and Tipper develop a multilayer mapping approach that uses lower layer mappings to provide diverse paths for upper layer connections and use mathematical programming to assign capacity.

Early work in disjoint path selection with Shared Risk Link Groups (SRLG) was completed by Oki et al. [21]. The concept of a probabilistic SRLG (P-SRLG), used to find probabilities of dual path failure in the presence of correlated link failures, was proposed by Lee and Modiano in [22]. In [23], Esmaeili et al. created multidomain Routing and Wavelength Assignment (RWA) solutions to improve lightpath reliability during correlated failures using drivers like risk minimization or traffic engineering. In [24], this was generalized to include protect paths.

In [25], Yu et al. proposed a Mixed-Integer Linear Program (MILP) and heuristics to map virtual infrastructure (VI) onto a substrate network so that the VI could survive certain regional failures. Also extending Virtual Network Embedding (VNE) concepts, Rahman and Boutaba [26] added survivability in order to maintain virtual networks during link failures. In [47], Sterbenz et al. discuss in detail an extensive framework that describes mapping techniques from the physical layer to the application layer for the purposes of resilience modelling. They then use these techniques to generate NS-3 code that allows the simulation of a wide variety of attacks including geographically correlated attacks.

3.3 Network Resilience Metrics

Network resilience metrics have different meanings based on the context. When referencing applications like web services, metrics like performability are considered [27, 28]. When referencing topologies and network structure, it is more likely to see graph theoretical or reachability metrics.

In [27, 28], performability is described as the probability that a network will perform at a given network measure. Since the NIR has a basis in performability, it is described in detail in Sect. 4. The difference between the NIR and standard performability is that highly probable events tend to dominate performability and thus the design of the network. With mission critical networks, we are primarily interested in survivability and resilience rather than performability alone. To address this concern, we propose the concept of network impact versus network measure.

Much of the work in network reliability using state enumeration techniques was by Colbourn and Ball in [29, 30]. This work was extended by Li and Silvester [31] to include most probable state algorithms to calculate bounds for network reliability. Jarvis and Shier [32], as well as Dotson and Gobien [33], presented methods based on the most probable states. Gomes et al. [34] extended these ideas to multimode network models. In 1979, Dotson and Gobien [33] created methods to calculate 2-terminal reliability based on De Morgan’s law. The method works by using failure modes to reduce the state space. Our methods utilize and extend these ideas by using failed and infeasible network states to reduce the state space.

Graph theoretic metrics including node degree, algebraic connectivity, betweenness, clustering coefficient, and more recently weighted spectral distribution can be used to evaluate geographic vulnerabilities in networks. Work that compares these metrics includes Bigdeli et al. [35]; Long et al. [36]; and Çetinkaya et al. [37]. A complete description of the more recent weighted spectral distribution can be found in [38] and also in [37]. Of interest for geographic vulnerabilities is the clustering coefficient metric that focuses on the connectivity of a node’s neighbors described in works by Sterbenz et al. [39] and Ahn et al. [40]. One of the challenges of most of these metrics is that in general they evaluate a topology for a specific metric and not an entire network with traffic demands of varying design and importance. The ability to evaluate weighted graphs improves the situation. By itself, this information is not sufficient to help find vulnerable regions in networks considering different demand structures without a brute force type of analysis.

In [41], Garbin and Shortle articulated the concept of a resilience curve, where a network performance measure like network bandwidth is contrasted to a failed percentage of network assets. The intuitive effect is useful as the concavity of the curve is indicative of the resilience. The resilience measure can then be calculated by summing the area under the curve or simply a level of damage necessary to reduce the network measure below a given threshold. A similar approach is used by Manzano et al. [42]. They analyzed a network measure (referred to as a QoS parameter) versus the number of failed components. They were able to compare their approach against many graph metrics and network resilience metrics. In [43], Menth et al. measured resilience by calculating a complementary cumulative distribution function (CCDF) and network availability based on the network load and a set of relevant failure modes.

The NIR that is presented in this work was originally presented in [18]. We extend that work here with a novel method to create impact curves. The method presented here normalizes the impact curves, which allows the metric to be used more broadly when comparing networks to each other for resilience purposes. The previous work on the NIR was only presented theoretically. Here, we apply the NIR to three multilayer network topologies.

The SP-NSG presented in this work is an evolution from our earlier work [18, 44, 45]. The state based analysis techniques used to find geographic vulnerabilities presented in those works are extended here in several ways. First, a new technique for testing multilayer capacitated networks is described and tested. Second, we simulated the SP-NSG allowing for an extensive evaluation of the performance and accuracy of the algorithm. Third, a new 28 node topology was tested and evaluated using the SP-NSG.

4 Network Impact Resilience (NIR)

The inspiration for the NIR is rooted in performability, which is one of the metrics used to evaluate mission critical networks. Performability uses the probability of network events and the network performance associated with each event to calculate an average network performance [27]. If N is the number of network elements, \(S_i\) is the system state, \(X[S_i]\) is the network measure at state \(S_i\), and \(P[S_i]\) is the probability of network state \(S_i\), then performability is given by the probability-weighted sum of the network measure over all \(2^N\) states:

\[\text{Performability} = \sum_{i=0}^{2^N-1} X[S_i]\,P[S_i] \qquad (1)\]

Calculating a given network measure such as dropped connections or available bandwidth for network states is well understood; these measures are used to calculate several resilience metrics and performability metrics. However, relating network measure to the mission of the network is considerably more challenging. To effectively do this, two pieces of information are required.

1. The minimum network measure required to support the mission of the network \((X_{\min })\)
2. An understanding of the relationship between the network mission and network measure

How to determine these factors is complex and requires knowledge of the network mission. Modeling and simulation of catastrophic events can be used to determine this information. To define network impact, we start with a review of the terms network state and network measure.

Definition 1

Network state. The network state \(S_i\) is defined as \([s_1 s_2 \cdots s_N]\) where \(s_i\) is ‘0’ if the network component i is up and \(s_i\) is ‘1’ if the network component i is down.

Clearly, this creates \(2^N\) unique network states. Each network state may also have a unique effect on the performance of the network. Typically, the performance of a network with a particular performance indicator is called the network measure.

Definition 2

Network measure. The network measure, \(X[S_i]\), is defined as the performance of a network using a given metric at network state \(S_i\).
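Definitions 1 and 2 can be made concrete with a small sketch. It enumerates all \(2^N\) states for a three-component network and evaluates a toy network measure. The helper names, the per-component failure probabilities, and the assumption that components fail independently are illustrative only; geographically correlated failures would not satisfy the independence assumption.

```python
from itertools import product

def enumerate_states(n):
    """Return every network state as a tuple of 0 (up) / 1 (down) flags."""
    return list(product((0, 1), repeat=n))

def state_probability(state, p_down):
    """Probability of a state, ASSUMING components fail independently."""
    prob = 1.0
    for s, p in zip(state, p_down):
        prob *= p if s == 1 else (1.0 - p)
    return prob

def fraction_up(state):
    """Toy network measure X[S_i]: fraction of components still up."""
    return state.count(0) / len(state)

states = enumerate_states(3)              # 2^3 = 8 states
p_down = [0.1, 0.2, 0.05]                 # hypothetical failure probabilities
total = sum(state_probability(s, p_down) for s in states)  # should sum to 1
```

Any measure that maps a state to a value between 0 and 1 (for example, the fraction of demands met) could be substituted for `fraction_up`.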

From (1), the network measure \(X[S_i]\) is related to the ability of the network to meet the demands placed on it. Typically \(X[S_i]\) ranges from 0 (i.e., \(0\,\%\) of demands met) to 1 (i.e., \(100\,\%\) of demands met). Returning to the question of whether the network is able to meet its mission, we consider the following:

Definition 3

Minimum network measure. The minimum network measure, \(X_{\min }\), is defined as the network measure below which the network can no longer serve its mission.

Definition 4

Network impact. The network impact, \(Y[S_i]\), is defined as an indication of the network’s inability to perform its mission in a given network state \(S_i\).

Using this definition, \(Y[S_i]\) ranges from 0 to 1. When \(Y[S_i]\) is 0, the network is meeting its mission fully and there is no negative impact to the mission. When \(Y[S_i]\) is 1, which by definition means \(X[S_i]=X_{\min }\), the impact in state \(S_i\) has caused the complete failure of the network to meet the mission. \(X_{\min }\) is strongly application dependent. This work uses \(X_{\min }\) values between 30 and 50 %.

Consider the situation where the network is under-provisioned. In ideal conditions, the mission of the network may still be met. But, a failure in the network may decrease the available bandwidth to the point that severe congestion occurs and the mission cannot be met due to increased latency or dropped traffic. This situation would not be considered resilient. In the opposite situation, the network may be over-provisioned to the point that many failures may occur and available bandwidth can decrease significantly before an impact to the mission is noted, providing considerably more resilience. An impact curve can be used to visualize the relationship between the network measure and the mission of the network:

Definition 5

Impact curve. The impact curve is the function created by \(Y[S_i]\) when it is sorted from minimum impact to maximum impact over the possible state space \(S_i\).

To provide a possible mathematical interpretation for \(Y[S_i]\), we begin by incorporating the minimum network measure, \(X_f[S_i]\):

It is then straightforward to define the following normalized network impact function:

Figure 3a shows an example where \(X[S_i]\) is a simple decreasing linear function over all network states. \(Y[S_i]\) increases linearly from 0 impact to 1 impact at the point where \(X_{\min }\) is reached.

To provide the ability to fit the relationship between the network mission and network measure, we add an impact parameter, \(\alpha\):

Note that since the minimum impact is 0 and maximum impact is 1, the impact parameter does not affect the impact at the endpoints. It will also be demonstrated that as \(X_{\min }\) is decreased, the ability to improve network resilience increases.

Figure 3b shows the effect that the impact parameter \(\alpha\) has on the impact curves. As \(\alpha\) increases, the impact is reduced for a larger proportion of the network state space, so the resilience of the network increases. The implication is that a network that can withstand multiple service failures before becoming unable to perform its mission would have a high \(\alpha\) value.
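The behaviour described for the impact curve can be illustrated with a short sketch. The functional form used here (flooring the measure at \(X_{\min }\), normalizing linearly, then raising to the power \(\alpha\)) is an assumption chosen to match the properties stated in the text: impact 0 when all demands are met, impact 1 once \(X_{\min }\) is reached, endpoints unaffected by \(\alpha\), and lower impact for larger \(\alpha\). The function name and this exact form are not taken from the paper.

```python
def network_impact(x, x_min, alpha=1.0):
    """Map a network measure x in [0, 1] to an impact Y in [0, 1].

    ASSUMED form: Y = ((1 - max(x, x_min)) / (1 - x_min)) ** alpha
    """
    if not 0.0 <= x_min < 1.0:
        raise ValueError("x_min must lie in [0, 1)")
    x_f = max(x, x_min)                  # floor the measure at x_min
    base = (1.0 - x_f) / (1.0 - x_min)   # 0 at x = 1, 1 at x <= x_min
    return base ** alpha                 # alpha > 1 lowers mid-range impact

linear = network_impact(0.7, 0.4)            # halfway between 1.0 and x_min
curved = network_impact(0.7, 0.4, alpha=2)   # same state, larger alpha
```

With \(X_{\min } = 0.4\), a measure of 0.7 sits halfway between full service and mission failure, so the linear impact is about 0.5, while \(\alpha = 2\) reduces it to about 0.25.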

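Finally, the Network Impact Resilience is defined as the probability-weighted sum of the network impact over all states:

\[{\mathcal {I}} = \sum_{i=0}^{2^N-1} Y[S_i]\,P[S_i] \qquad (5)\]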

Like performability (1), the NIR (5) uses state probability to determine average performance. By using impact instead of network measure, the NIR is better at predicting the occurrence of high impact events. When \({\mathcal {I}}=0\), there is no impact over the state space and would indicate that a network is impervious to failure. When \({\mathcal {I}}=1.0\), it would indicate that a network cannot perform its mission. The usage of the NIR would be to compare network architectures that serve the same demands and mission. If the NIR is calculated for two networks with the same demand and mission, the lower NIR would indicate the more resilient network. This will be demonstrated in the results section.
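As a minimal sketch of how the two averages differ, both can be computed by brute force for a very small network. The independence of component failures, the toy measure (fraction of components up), the assumed impact form (linear below full service, saturating at \(X_{\min }\), raised to \(\alpha\)), and all names are illustrative assumptions rather than the method used in this work.

```python
from itertools import product

def nir_and_performability(p_down, measure, x_min, alpha=1.0):
    """Return (performability, NIR) by full enumeration of 2^N states.

    ASSUMES independent component failures with probabilities p_down.
    """
    perf = 0.0
    nir = 0.0
    for state in product((0, 1), repeat=len(p_down)):
        prob = 1.0
        for s, p in zip(state, p_down):
            prob *= p if s else 1.0 - p
        x = measure(state)                                    # X[S_i]
        y = ((1.0 - max(x, x_min)) / (1.0 - x_min)) ** alpha  # Y[S_i], assumed form
        perf += x * prob
        nir += y * prob
    return perf, nir

def frac_up(state):
    """Toy measure: fraction of components still up."""
    return state.count(0) / len(state)

perf, nir = nir_and_performability([0.1] * 4, frac_up, x_min=0.5)
_, nir_a2 = nir_and_performability([0.1] * 4, frac_up, x_min=0.5, alpha=2.0)
```

For this four-component example the performability is 0.9, while the impact-based average is roughly 0.198 for \(\alpha = 1\) and roughly 0.125 for \(\alpha = 2\), showing how states that reach \(X_{\min }\) contribute their full weight to the impact-based value.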

One of the challenges with the NIR, as well as with performability, is calculating the network measure or the network impact for enough samples to accurately represent the probability of that impact or measure. Being able to represent the probability curve with a small number of samples is necessary to calculate the NIR in a tractable manner. We use 3 to 6 samples in the most sensitive area of the probability curve and then use a piecewise approximation to construct the probabilities needed for the NIR.

5 Self-Pruning Network State Generation

The self-pruning network state generation (SP-NSG) algorithm was proposed in [18, 44]. It borrows concepts from an algorithm developed by Dotson and Gobien [33] to generate states as the algorithm progresses. We first present an overview of SP-NSG for single-layer networks before discussing it in the context of multilayer networks in Sect. 6. The algorithm creates a lexicographic ordering of states based on De Morgan’s law, where ‘0’ denotes a working or up network component and ‘1’ denotes a down network component:

As stated previously, we focus on nodal failures but our work could be extended to link failures as well. To present the algorithm, we define the following terms:

Definition 6

Geographic vulnerability: A Geographic Vulnerability is the geographic area of a network that (if attacked) can cause the network to fail to perform its mission. Significant impact may be related to the level of reachability between nodes or the ability to provision a percentage of demands across the network.

Definition 7

Geospatial event: A Geospatial Event is an event that can cause a geographic vulnerability.

Definition 8

Threat radius: The threat radius is the physical radius of a geospatial event that is used to define the geographic vulnerability.

Definition 9

Feasible mode: Based on the threat radius (and other filters), Feasible Mode implies that this Network State is possible.

Definition 10

Network test: Test procedure \(X(S_i)\) that evaluates the performance of a network at a given Network State \(S_i\) with a Success or Fail outcome based on the ability of the network to be able to perform its mission.

For a given Network State with a failed Network Test, the intersection of circles with radii equal to the Threat Radius surrounding each down node forms the Geographic Vulnerability. The following assumptions are used to develop SP-NSG.

Assumption 1

If a network state \(S_i\) with \(m\, (\ge 1)\) nodes, \(k_1, \ldots , k_m\), down causes the network to fail to perform its mission, i.e., \(X(S_i) < {\hat{T}}\) (where \(\hat{T}\) is the failed threshold of \(X_{\min }\)), then any \(S_j\) that contains the same down nodes will result in \(X(S_j) \le X(S_i)\). Therefore, state \(S_j\) does not need to be examined since it would also cause the network to fail its mission.

Assumption 2

If a network state \(S_i\) with \(m\, (\ge 1)\) nodes, \(k_1, \ldots , k_m\), is not a feasible network state, then any \(S_j\) that contains the same down nodes will also not be feasible. Therefore, state \(S_j\) does not need to be examined.

An example of Assumption 2, when geographically correlated failures are being analyzed, is a failure state whose down nodes are separated by a large distance; such a state does not need to be analyzed. We use De Morgan’s Law (6) to reduce the search space necessary to calculate reliability [33]. As we can see from Fig. 4, State 1 can be used to generate States 6 to 9 by incrementally changing to down the status of each node that comes after the last down node in State 1. This follows De Morgan’s Law (6) by dividing the state space into two sections: the section represented by the previously successful test (in this case State 1), denoted P, and the section that is untested (in this case States 6–9), denoted \(\overline{P}\).

We first test the state \(S_0 = [0 \; 0 \; 0 \cdots 0]\). If it is successful (which it should be), we use De Morgan’s law to add the complement of \(S_0\) to a list of candidate states, which includes \(S_1 = [1 \; 0 \; 0 \cdots 0]\), \(S_2 = [0 \; 1 \; 0 \cdots 0]\), and so on. Each candidate is checked both for feasibility (whether a geographic event could affect that particular set of nodes) and for whether it contains a previously failed state. The candidates that are feasible and that do not contain previously unsuccessful failure modes are added to the queue to test. The next state is popped from the queue and tested, and the process is repeated. If the test is unsuccessful, that state is noted. In the absence of infeasible or unsuccessful failure modes, a lexicographic ordering is produced where the failure states are ordered by the number of failed nodes in that state as shown in Fig. 4. The SP-NSG is described in Algorithm 1 and operates as shown in Fig. 5.
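The procedure described above can be sketched as a breadth-first search with pruning. This is a minimal illustration under stated assumptions, not a transcription of Algorithm 1: states are represented as sets of down-node indices, `is_feasible` and `network_test` are hypothetical callbacks, and the containment check is repeated when a state is dequeued because a failure mode of the same size as its parent may be found after the state was queued.

```python
from collections import deque

def sp_nsg(n, is_feasible, network_test):
    """Generate and test states breadth-first, pruning as failures are found.

    is_feasible(down) -> bool  : hypothetical feasibility filter (Assumption 2)
    network_test(down) -> bool : hypothetical test, True if the mission is met
    Returns (failure_modes, number_of_states_tested).
    """
    failure_modes = []
    tested = 0
    queue = deque([()])                    # S_0: no node is down
    while queue:
        down = queue.popleft()
        dset = frozenset(down)
        if any(f <= dset for f in failure_modes):
            continue                       # covered by a failure found meanwhile
        tested += 1
        if not network_test(dset):
            failure_modes.append(dset)     # Assumption 1: do not expand further
            continue
        # Children: take one more node down, only after the last down node,
        # so that each subset of nodes is generated at most once.
        start = down[-1] + 1 if down else 0
        for k in range(start, n):
            child = down + (k,)
            cset = frozenset(child)
            if is_feasible(cset) and not any(f <= cset for f in failure_modes):
                queue.append(child)
    return failure_modes, tested

# Toy example: four nodes on a line; a threat of radius 1 can only take down
# nodes at most 2 apart; the mission fails when nodes 1 and 2 are both down.
pos = [0, 1, 2, 10]
feasible = lambda d: all(abs(pos[a] - pos[b]) <= 2 for a in d for b in d)
mission_ok = lambda d: not {1, 2} <= d
modes, tested = sp_nsg(4, feasible, mission_ok)
```

In this toy case only 8 of the 16 possible states are tested, and the single failure mode found is the pair of nodes 1 and 2.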

5.1 Network Tests

In the step Network Test in Algorithm 1, we consider two different tests to determine whether the network is performing its mission critical function under a failed state. These tests are (1) reachability of a network in terms of connectivity (“connectivity test”), and (2) provisioning of demands for a capacitated network (“capacitated network test”).

For the connectivity test, we consider the set of demands to determine if each source is connected to each destination. The metric, \(M_C(S_i)\), for state \(S_i\) is defined as the ratio of successful connections over total connections. If the metric is below a certain threshold for a particular failed state, then we say that the mission critical network does not meet its requirement.
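A connectivity test of this kind might be sketched as follows. The function name, the data layout (node list, undirected link list, source and destination pairs), and the ring example are assumptions made for illustration.

```python
from collections import deque

def connectivity_metric(nodes, links, demands, down):
    """M_C: fraction of (source, destination) pairs still connected."""
    up = set(nodes) - set(down)
    adj = {v: set() for v in up}
    for a, b in links:
        if a in up and b in up:            # links at a failed node are lost
            adj[a].add(b)
            adj[b].add(a)

    def reachable(src):
        """Set of surviving nodes reachable from src (breadth-first search)."""
        seen = {src}
        frontier = deque([src])
        while frontier:
            v = frontier.popleft()
            for w in adj[v]:
                if w not in seen:
                    seen.add(w)
                    frontier.append(w)
        return seen

    ok = 0
    for s, t in demands:
        if s in up and t in up and t in reachable(s):
            ok += 1
    return ok / len(demands)

# Hypothetical four-node ring with three source/destination pairs.
ring = [(1, 2), (2, 3), (3, 4), (4, 1)]
pairs = [(1, 3), (2, 4), (1, 2)]
m_c = connectivity_metric([1, 2, 3, 4], ring, pairs, down={2})
```

With node 2 down, only the pair (1, 3) remains connected, so the metric is 1/3; comparing it with a chosen threshold gives the Success or Fail outcome of the network test.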

For the capacitated network test, we first provision all user demands on the network with no failures, assigning capacity to each link as needed by the demands. We consider the metric, \(M_D(S_i)\), to be the ratio of the demands provisioned as successful at state \(S_i\) compared to the total traffic demands provisioned initially. If the metric is below a certain threshold for a particular failed state, then we say that the mission critical network does not meet its requirement. It should be noted that additional capacity can be added via a redundancy factor. This is shown in the multilayer provisioning algorithm, Algorithm 3.
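One simple way to approximate the capacitated network test is sketched below. It assumes that baseline link capacities are already known, that demands are re-provisioned one at a time on a fewest-hop path with sufficient residual capacity, and that the metric counts demands rather than traffic volume. These choices, and all names, are illustrative assumptions and not the provisioning method of Algorithm 3.

```python
from collections import deque, defaultdict

def capacitated_metric(capacity, demands, down):
    """M_D: share of demands that can still be provisioned with `down` failed.

    capacity: {(a, b): units} baseline capacities of undirected links
    demands:  list of (source, target, amount)
    """
    residual = {}
    adj = defaultdict(set)
    for (a, b), c in capacity.items():
        if a in down or b in down:
            continue                       # links at a failed node are lost
        residual[frozenset((a, b))] = c
        adj[a].add(b)
        adj[b].add(a)

    def find_path(src, dst, amount):
        """Fewest-hop path using only links with enough residual capacity."""
        prev = {src: None}
        frontier = deque([src])
        while frontier:
            v = frontier.popleft()
            if v == dst:
                path = []
                while v is not None:
                    path.append(v)
                    v = prev[v]
                return path[::-1]
            for w in sorted(adj[v]):
                if w not in prev and residual[frozenset((v, w))] >= amount:
                    prev[w] = v
                    frontier.append(w)
        return None

    served = 0
    for s, t, amount in demands:
        if s in down or t in down:
            continue                       # an endpoint has failed
        path = find_path(s, t, amount)
        if path is None:
            continue
        for a, b in zip(path, path[1:]):
            residual[frozenset((a, b))] -= amount   # reserve capacity
        served += 1
    return served / len(demands)

# Hypothetical ring: two units via node 2, one spare unit via node 4.
caps = {(1, 2): 2, (2, 3): 2, (1, 4): 1, (4, 3): 1}
dems = [(1, 3, 1), (1, 3, 1)]
m_d = capacitated_metric(caps, dems, down={2})
```

With node 2 down, only one of the two demands fits on the spare path through node 4, so the metric drops to 0.5.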

5.2 Feasible Modes

In addition to using failure modes to reduce state space, another important method to reduce state space is by using network state feasibility. If a particular network state \((S_i)\) is not feasible, then by Assumption 2, any states that contain the same down nodes as \(S_i\) would also not be feasible. The main test for feasibility used here is maximum geographic distance, which is the maximum distance that any two down nodes may be located from each other. When planning for a disaster with a maximum radius of d kilometers, that disaster would not be able to simultaneously destroy two nodes that are separated by more than 2d kilometers. Therefore, a failure mode containing those two nodes is assumed to not be feasible under that disaster scenario. Other feasibility filters that could be used to limit state space include the maximum number of simultaneously failed nodes during a random failure analysis.
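The maximum geographic distance filter can be sketched as a pairwise check. The sketch assumes planar node coordinates in kilometers and Euclidean distance; real node locations given as latitude and longitude would need a great-circle distance instead. The function and variable names are hypothetical.

```python
from itertools import combinations
from math import hypot

def is_feasible(down, coords, d_km):
    """True if no two down nodes are more than 2 * d_km apart.

    coords maps a node to ASSUMED planar (x, y) coordinates in kilometers.
    """
    return all(
        hypot(coords[a][0] - coords[b][0], coords[a][1] - coords[b][1]) <= 2 * d_km
        for a, b in combinations(down, 2)
    )

# Hypothetical node locations.
coords = {"A": (0, 0), "B": (30, 40), "C": (300, 0)}
near = is_feasible({"A", "B"}, coords, d_km=25)   # 50 km apart, limit 50 km
far = is_feasible({"A", "C"}, coords, d_km=25)    # 300 km apart
```

Note that the pairwise rule is a necessary condition only: three nodes that are pairwise within 2d of each other may still not fit inside a single circle of radius d, so this filter can admit some states that a stricter geometric test would reject.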

6 Multilayer SP-NSG Model

We first define our multilayer network model and then describe the Multilayer SP-NSG algorithm.

6.1 Multilayer Network Model

The network model being used includes a lower layer undirected graph denoted as \(G_L(V_L,E_L)\) shown in Fig. 6. The upper layer network is modeled as an undirected graph denoted as \(G_U(V_U,E_U)\). Customer demands are assumed to be given to the upper layer network. The i-th demand is the tuple \(\langle s_U,t_U,D_U\rangle\) that indicates the demand from the upper layer source \((s_U)\), upper layer destination \((t_U)\), and the amount of demand \((D_U)\). The demand \(\langle s_U,t_U,D_U\rangle\) is then provisioned across \(G_U\).

After all of the demands are provisioned, \(E_U\) contains the link capacity required at the upper layer. \(E_U\), with the updated capacity requirements at the upper layer, is passed to the lower layer network \(G_L\) for provisioning. \(G_L\) is provisioned with the upper layer link capacity requirements, providing a baseline capacity. For example, in Fig. 6, two demands are sent to the upper layer network as \(\langle 2,3,1\rangle\) and \(\langle 1,3,1\rangle\). These are mapped onto \(G_U\) and the subsequent requirements are mapped onto \(G_L\). With shortest path routing, this leaves nodes 1–2 with a bandwidth of 1, nodes 2–5 with a bandwidth of 2, and nodes 5–4 with a bandwidth of 2.
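The two-stage provisioning can be sketched with fewest-hop routing on each layer. The topology below is hypothetical: it is not taken from Fig. 6, but was chosen so that the two demands \(\langle 2,3,1\rangle\) and \(\langle 1,3,1\rangle\) produce the lower layer bandwidths quoted above. The node mapping (upper nodes 1, 2, 3 placed at lower nodes 1, 2, 4) and all function names are assumptions.

```python
from collections import deque, defaultdict

def shortest_path(adj, src, dst):
    """Breadth-first (fewest hops) path from src to dst as a list of nodes."""
    prev = {src: None}
    frontier = deque([src])
    while frontier:
        v = frontier.popleft()
        if v == dst:
            path = []
            while v is not None:
                path.append(v)
                v = prev[v]
            return path[::-1]
        for w in sorted(adj[v]):
            if w not in prev:
                prev[w] = v
                frontier.append(w)
    return None

def provision(edges, demands):
    """Route each (s, t, amount) on a shortest path; return capacity per link."""
    adj = defaultdict(set)
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    cap = defaultdict(int)
    for s, t, amount in demands:
        path = shortest_path(adj, s, t)
        if path is None:
            raise ValueError(f"no path for demand {(s, t, amount)}")
        for a, b in zip(path, path[1:]):
            cap[tuple(sorted((a, b)))] += amount
    return dict(cap)

# Hypothetical two-layer topology (NOT reproduced from Fig. 6).
upper_edges = [(1, 2), (2, 3)]
lower_edges = [(1, 2), (2, 5), (5, 4), (1, 3), (3, 4)]
upper_to_lower = {1: 1, 2: 2, 3: 4}        # upper node -> co-located lower node

# Stage 1: customer demands set the upper layer link capacities.
upper_cap = provision(upper_edges, [(2, 3, 1), (1, 3, 1)])
# Stage 2: each upper layer link becomes a demand on the lower layer.
lower_demands = [(upper_to_lower[a], upper_to_lower[b], c)
                 for (a, b), c in upper_cap.items()]
lower_cap = provision(lower_edges, lower_demands)
```

Under these assumptions the upper layer link 2–3 carries both demands, and the lower layer ends up with capacity 1 on link 1–2 and capacity 2 on links 2–5 and 5–4 (stored under the sorted key `(4, 5)`).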

6.2 Multilayer SP-NSG

In Algorithm 1, the step Reconfigure Network(\(S_i\)) assumes that the links and nodes are based on the network state \(S_i\). To reconfigure in the case of the multilayer network, we need to generate \(G_U\) and \(G_L\). In order to generate new links (\(E_L, E_U\)), surviving nodes (\(V_L, V_U\)) must be generated. If we assume that the upper layer nodes will always share a location with a lower layer node, the state space for both layers can always be defined with the lower layer state space \(({\mathbf {S}}_i = {\mathbf {S}}_{L,i})\). Then a simple transformation is required to convert to the upper layer state space. This is completed with the use of an \(n \times m\) transformation matrix \({\mathbf {L}}_{LU}\) where n is the number of lower layer nodes and m is the number of upper layer nodes. If a mapping exists between the lower and upper layer, \(L_{LU} (l,u) = 1\), where l is the lower layer node number and u is the upper layer node number. All other matrix locations are 0. Converting the network state i from a lower layer to an upper one is a simple transformation as shown below.
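A minimal sketch of such a transformation follows. It assumes that a network state is a 0/1 row vector in which 1 marks a down node, and that the upper layer state is obtained by multiplying the lower layer state by \({\mathbf {L}}_{LU}\); both the convention and the function name `to_upper_state` are assumptions for illustration. The matrix is a made-up 4 by 2 example, not the one for Fig. 6.

```python
def to_upper_state(lower_state, L_LU):
    """Map a lower-layer state (length n) to an upper-layer state (length m).

    L_LU is an n x m 0/1 matrix with L_LU[l][u] == 1 when upper node u
    is located at lower node l. The result is clipped to 0/1.
    """
    n = len(lower_state)
    m = len(L_LU[0])
    return [
        min(1, sum(lower_state[l] * L_LU[l][u] for l in range(n)))
        for u in range(m)
    ]

# Hypothetical layout: upper node 0 sits at lower node 0, upper node 1 at lower node 3.
L_LU = [
    [1, 0],
    [0, 0],
    [0, 0],
    [0, 1],
]

S_L = [0, 1, 0, 1]  # lower nodes 1 and 3 are down (1 = down, by assumption)
print(to_upper_state(S_L, L_LU))  # upper node 1 is down because lower node 3 is down
```

Because only the lower layer state is enumerated, the upper layer state never needs its own state space; it is always derived.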

To illustrate the point, the network shown in Fig. 6 would have a transformation matrix as shown below.

The algorithm for ReconfigureNetwork(\(S_i\)) for multilayer networks is shown in Algorithm 2.

6.3 Multilayer Network Test

The step TestNetwork in Algorithm 1 for single-layer networks considered either the connectivity test or the capacitated network test as discussed in Sect. 5.1.

We now describe a more sophisticated approach to perform the network test for multilayer networks that uses ideas similar to the single layer capacitated network test. In this two-stage approach, shown in Algorithms 3 and 4, we first provision the demands onto the upper layer network by adding capacity to the links \(E_U\) in the upper layer network. These links and their capacities then serve as demands on the lower layer network \(D_{L}\). These demands are provisioned onto the lower layer network, creating a set of links with baseline capacities. Those capacities in the lower layer network can be augmented with a redundancy factor R as shown in Algorithm 3.

For the network test using a network state \(S_i\), the upper layer links \(E_U\) are provisioned on the augmented lower layer network after it has been reconfigured from \(S_i\). The links that are provisioned successfully provide the links for the reconfigured upper layer network. The demands are then provisioned on the reconfigured upper layer network. The proportion of successfully provisioned demands provides the metric as shown in Algorithm 4.

It should be noted that during the network test, links from the upper layer network may be provisioned on the lower layer network using longer paths because previously available nodes are down. This can result in more resources being allocated to the longer links, which could deny those resources to the shorter links. In Algorithm 4, we address this with an augmentation that searches the old list of paths generated for the links. If that path and capacity still exist, then that link is provisioned immediately. If the path and capacity do not exist, the provisioning of that link is delayed until all of the links have been processed for the first time.
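The two-pass ordering can be sketched as follows. This is an illustrative reading of the idea, not a transcription of Algorithm 4: the function names, the dictionary-based data layout, and the capacity-aware breadth-first search are all assumptions.

```python
from collections import deque

def key(a, b):
    """Canonical key for an undirected lower-layer link."""
    return (a, b) if a <= b else (b, a)

def path_fits(path, need, residual, down):
    """True if no node on the path is down and every hop has enough spare capacity."""
    return (not any(n in down for n in path)
            and all(residual.get(key(a, b), 0) >= need for a, b in zip(path, path[1:])))

def find_path(residual, src, dst, need, down):
    """BFS over up nodes, using only links whose spare capacity covers `need`."""
    if src in down or dst in down:
        return None
    prev, queue = {src: None}, deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for (a, b), cap in residual.items():
            if cap >= need and node in (a, b):
                nxt = b if node == a else a
                if nxt not in prev and nxt not in down:
                    prev[nxt] = node
                    queue.append(nxt)
    return None

def provision_links(upper_links, old_paths, capacity, down):
    """upper_links: {name: (src, dst, need)}. Returns {name: path} for links that fit."""
    residual, placed, deferred = dict(capacity), {}, []

    def take(name, path, need):
        for a, b in zip(path, path[1:]):
            residual[key(a, b)] -= need
        placed[name] = path

    # First pass: keep every link whose old path and capacity still exist.
    for name, (src, dst, need) in upper_links.items():
        old = old_paths.get(name)
        if old and path_fits(old, need, residual, down):
            take(name, old, need)
        else:
            deferred.append(name)
    # Second pass: reroute the delayed links on whatever capacity is left.
    for name in deferred:
        src, dst, need = upper_links[name]
        path = find_path(residual, src, dst, need, down)
        if path:
            take(name, path, need)
    return placed

# Hypothetical example: node 2 is down, so link X loses its old path.
capacity = {key(1, 2): 1, key(2, 3): 1, key(1, 4): 1, key(4, 3): 1}
upper_links = {"X": (1, 3, 1), "Y": (4, 3, 1)}
old_paths = {"X": [1, 2, 3], "Y": [4, 3]}
result = provision_links(upper_links, old_paths, capacity, down={2})
print(result)
```

In this example link Y keeps its short path even though X is listed first; X is deferred and then finds no spare capacity on link 4–3, so only Y is provisioned.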

6.4 Improvements to SP-NSG for Geographic Vulnerabilities in Large Networks

One of the issues with multilayer networks is that the network test typically has high computational costs, especially for large networks. The basic implementation of SP-NSG has a cost that is associated with the possible number of states \(2^{N_r}\) given a maximum of \(N_r\) nodes in a particular threat radius r. However, as we know from [18], the number of states analyzed is still typically a very small fraction of the maximum number of states. We consider the following assumption to improve on SP-NSG for large networks:

Assumption 3

If two nodes are located in close proximity to each other and one node fails due to a geographic event, then the other node will also fail.

Assumption 3 raises a few questions. First, should probabilistic failures be considered? This is a question that affects the entire SP-NSG algorithm. Following the mission-critical thread of this work, when looking for geographic vulnerabilities in networks, we are less interested in the likely outcome than in the potential outcome if mitigating steps are not taken. To that end, Assumption 3 seems to apply. Furthermore, we are interested in the worst case, not the average case.

With Assumption 3, we propose that a node could represent multiple nodes that are located in close proximity to each other. The set of nodes used in the state generation algorithm can be reduced and mapping can be established to both the upper and lower set of nodes/links. We acknowledge that this is an approximation when the node location that is considered in the state generation is not exactly the node location in the network. However, we build on the premise that if the distance between the new node location and the mapped node is relatively small, the outcome is likely to be the same. The advantage of this approach is that the network nodes (in either layer) that are no longer included in the state generation algorithm are still used via mapping. This preserves the network connectivity and structure at those layers. To select new node locations, we use the K-means clustering algorithm in areas of the network that are dense as described in Algorithm 5, which is used to create a new set of nodes \(V_A\).
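To make the clustering step concrete, the sketch below runs a plain Lloyd-style K-means on 2-D node coordinates and then builds a 0/1 membership matrix from cluster nodes to original nodes. It is a simplified stand-in and not Algorithm 5: it clusters all nodes rather than only dense areas, it seeds the centroids deterministically with the first k points, and the names `kmeans` and `L_AL` are assumptions.

```python
import math

def kmeans(points, k, iters=50):
    """Plain Lloyd's k-means on 2-D points; returns (centroids, labels)."""
    centroids = [points[i] for i in range(k)]  # deterministic seed: first k points
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new.append((sum(x for x, _ in members) / len(members),
                            sum(y for _, y in members) / len(members)))
            else:
                new.append(centroids[c])  # keep an empty cluster where it was
        if new == centroids:
            break
        centroids = new
    return centroids, labels

# Hypothetical node locations: two tight groups of three nodes each.
points = [(0, 0), (10, 10), (0, 1), (1, 0), (10, 11), (11, 10)]
centroids, labels = kmeans(points, k=2)

# Membership matrix: L_AL[a][l] == 1 when original node l is represented by cluster node a.
L_AL = [[1 if lab == a else 0 for lab in labels] for a in range(2)]
print(centroids)
print(L_AL)
```

The centroids play the role of the new node set \(V_A\) in state generation, while the membership matrix lets a state over the centroids be expanded back to the original nodes.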

Once \(V_A\) is known, the mapping can be generated in a manner similar to the previous section that allows the network states generated from \(V_A\) to be mapped onto \(V_L\) and \(V_U\) as follows:

Note that \(S_{U,i}\) is still created from (7).

One final optimization that was used was to store the shortest path results during the initial (all working) network test. If a new network state does not affect any of the nodes in the original shortest path, the original results would still hold and a new shortest path calculation does not need to be run. In a large network, we found that this leads to substantial improvements.
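The reuse rule amounts to a set intersection between the stored path and the down nodes. The following sketch shows one way to express it; the function name, the cache layout, and the `recompute` callback are hypothetical and are not part of the original tool.

```python
def shortest_path_with_cache(cache, link, down_nodes, recompute):
    """Reuse the all-working path for `link` unless a down node lies on it.

    Returns (path, reused) where `reused` tells whether the cached path was kept.
    """
    path = cache.get(link)
    if path is not None and not set(path) & set(down_nodes):
        return path, True  # no down node touches the stored path
    return recompute(link, down_nodes), False

calls = []

def fake_recompute(link, down_nodes):
    """Stand-in for a real shortest path search; only records that it was called."""
    calls.append(link)
    return None

# Hypothetical cache built during the initial (all working) network test.
cache = {("u1", "u2"): [1, 2, 5]}

print(shortest_path_with_cache(cache, ("u1", "u2"), {3}, fake_recompute))
print(shortest_path_with_cache(cache, ("u1", "u2"), {2}, fake_recompute))
print(len(calls))
```

Only the second call, where node 2 on the stored path is down, triggers a new search.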

6.5 Complexity of Approach

Without clustering, the complexity of our approach is \(F(N)*N*2^{N_r}\) where \(N_r\) is the maximum number of nodes in a threat radius, N is the total number of nodes, and F(N) is the complexity of computing the network measure. When clustering is incorporated, the complexity is reduced to \(F(N)*N*2^k\), where k is the new maximum number of nodes in a threat radius after clustering. Clearly, if k can be limited to a relatively small number (\(k \ll n\)), then the complexity is significantly reduced.

Now consider the complexity of F(N). For the multilayer case, a breadth-first shortest path calculation (BFS) is used for every link in the upper layer network across the lower layer network, along with a breadth-first shortest path calculation for every user demand across the upper layer network. Therefore, \(F(N) = |E_U| (|N_L| + |E_L|) + D (|N_U| + |E_U|)\) for a total of D demands in the upper layer network. If \(|N_L| \gg |N_U|\), this simplifies to \(F(N) = |E_U| (|N_L| + |E_L|)\).
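As a rough numerical illustration of these expressions, the snippet below evaluates the network test cost and the reduction in the state term when clustering lowers the exponent. All input sizes are made-up values for the example, and the function names are assumptions.

```python
def network_test_cost(e_u, n_l, e_l, d, n_u):
    """F(N) = |E_U| (|N_L| + |E_L|) + D (|N_U| + |E_U|), counted in BFS steps."""
    return e_u * (n_l + e_l) + d * (n_u + e_u)

def state_term_reduction(n_r, k):
    """Ratio 2^{N_r} / 2^k gained when clustering caps nodes per threat radius at k."""
    return 2 ** (n_r - k)

# Hypothetical sizes, not measurements from the test networks.
print(network_test_cost(e_u=10, n_l=100, e_l=150, d=20, n_u=5))  # 10*250 + 20*15
print(state_term_reduction(n_r=20, k=9))                         # 2**11
```

The exponential term dominates, which is why limiting k matters more than speeding up the network test itself.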

7 Evaluation Methodology

To evaluate the methods proposed here, we consider three multilayer networks representing a small, medium, and large network. The small network is a Gabriel topology with 16 nodes in the lower layer network and 6 (N1, N6, N12, N1, N5, and N14) nodes in the upper layer network. As shown in Fig. 7, we consider three different upper layer topologies: the ring topology (Topology-1), semi-mesh topology (Topology-2), and star topology (Topology-3) with N13 as the hub.

The medium network is the Nobel-EU topology from the Survivable Network Design Library (SNDlib 1.0) [46] with 28 nodes in the lower layer network and 10 nodes in the upper layer network. Again, three different upper layer topologies are tested: the semi-mesh topology (Topology 1), semi-star topology (Topology 2), and ring topology (Topology 3); see Fig. 8. This multilayer network example is referred to as the EU network.

The large network is the AT&T Layer 1 topology generated by Sterbenz et al. [47] and available at [48]. This is a large physical layer network with 383 nodes. There are two different upper layer topologies that are tested: the dense topology (Topology-1) and regular topology (Topology-2); see Fig. 9. This multilayer network example is referred to as the ATTL1 network.

All upper layer demands are simply one unit of bandwidth for each demand, and the demand structure is always from all upper layer nodes to all other upper layer nodes. These networks are analyzed using a network test that is appropriate for the topology. For example, if one node is down in the upper layer network of the Gabriel network, 10 demands automatically go out of service. If the network test required 70 % of the demands, then one node down would cause the network to fail. So for the Gabriel networks, 40 % was selected as the threshold to satisfy mission critical requirements. For the EU network, 50 % was selected and for the ATTL1 network 70 % was selected.
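The arithmetic behind the threshold choice can be checked directly. The sketch below assumes ordered all-to-all demands, so n upper layer nodes give n(n-1) demands, and it computes the best-case surviving fraction when some upper layer nodes are down; the function name is an assumption.

```python
def surviving_fraction(num_upper_nodes, num_down):
    """Best-case fraction of all-to-all demands whose endpoints are both still up."""
    total = num_upper_nodes * (num_upper_nodes - 1)
    up = num_upper_nodes - num_down
    return up * (up - 1) / total

# Gabriel network: 6 upper layer nodes, hence 30 ordered demands.
frac = surviving_fraction(6, 1)
lost = round(30 * (1 - frac))
print(lost)          # demands lost with one upper layer node down
print(frac >= 0.70)  # would a 70 % network test pass?
print(frac >= 0.40)  # would the selected 40 % network test pass?
```

With one node down at most 20 of 30 demands (about 67 %) can survive, so a 70 % test fails on any single upper layer node failure while the 40 % test still discriminates between states.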

A set of 3000 random network attacks was also created to test the accuracy of the algorithms. The network test is run based on the random location of the attack and subsequent down nodes within the given threat radius of the attack location. They are shown with the output of the SP-NSG algorithm. There are several parameters that can be varied to conduct the analysis. These include the number of nodes allowed in a cluster (which are varied from 4 to 16), the size of the threat radius (which is topology dependent), redundancy factor (which is varied from 1 to 3), and network test parameter. Several statistics are collected with each operation including run time, states tested, states failed, vulnerable areas of the network, and ratio of vulnerable area to total area.
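A random attack of this kind can be sketched as a random center point plus a radius test. The code below is an illustrative Python approximation and not the C++ generator used in this work: the uniform sampling over the bounding box of the nodes, the fixed seed, and all names and coordinates are assumptions.

```python
import math
import random

def random_attack(coords, radius, rng):
    """Pick a uniform random center in the node bounding box; return it with the down nodes."""
    xs = [x for x, _ in coords.values()]
    ys = [y for _, y in coords.values()]
    center = (rng.uniform(min(xs), max(xs)), rng.uniform(min(ys), max(ys)))
    down = {n for n, p in coords.items() if math.dist(center, p) <= radius}
    return center, down

# Hypothetical node coordinates.
coords = {"N1": (0.0, 0.0), "N2": (20.0, 5.0), "N3": (40.0, 30.0), "N4": (10.0, 25.0)}

rng = random.Random(0)  # fixed seed so the attack set is repeatable
attacks = [random_attack(coords, 10.0, rng) for _ in range(3000)]
print(len(attacks))
```

Each resulting set of down nodes would then be passed to the network test and compared against the vulnerable area predicted by SP-NSG.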

8 Results

The analysis tool that implements the SP-NSG algorithm was built and compiled using GCC C++ on an MS Windows 7 Personal Computer with an Intel I7 processor and 8 GB of RAM. The graphic output and network/configuration interfaces use an XML format. Python 3.2 was used to generate the output graphics. The random attack generator was also built and compiled using GCC C++. The XML network formats used here are very similar to those used by [46] in the Survivable Network Design Library.

For all three networks, the SP-NSG algorithm predicts the geographic vulnerability. The red ‘o’ sign denotes a random attack that did sufficient damage to cause the network measure to be less than \(X_{\min }\), causing the mission to fail. The gray ‘+’ sign denotes a random attack that caused a network measure greater than \(X_{\min }\), so the mission did not fail. The shaded area should correlate with the red marker locations.

8.1 Gabriel Network

From Fig. 10a–e, we illustrate a few important points. First, as the threat radius grows, the vulnerable area grows. This is intuitive since with the increase in the threat radius, more nodes are affected by a single event causing more severe impacts. Figure 10b shows that as the threat radius grows from 10 to 15, vulnerabilities show up between nodes N5 and N14, and between nodes N1, N12, and N13. The latter failure mode illustrates the interaction between the upper layer and lower layer since N13 is only a lower layer node and nodes N1 and N12 are present in both layer topologies.

Fig. 10 Gabriel network. Lower layer network is shown with solid lines. Upper layer network is shown with dotted lines. a Gabriel network, L2 network #1, threat radius = 10, redundancy factor = 1, network test = 40 %, b Gabriel network, L2 network #1, threat radius = 12.5, redundancy factor = 1, network test = 40 %, c Gabriel network, L2 network #1, threat radius = 15, redundancy factor = 1, network test = 40 %, d Gabriel network, L2 network #2, threat radius = 10, redundancy factor = 1, network test = 40 %, e Gabriel network, L2 network #3, threat radius = 10, redundancy factor = 1, network test = 40 %

An important point is that the effects of different upper layer topologies are not always predictable. The Gabriel #2 topology had vulnerabilities that the Gabriel #1 topology did not. This is because when the upper layer network was more flexible, more direct routes were available between nodes and they tended to utilize the same nodes (N1 and N14) while entirely ignoring other longer routes. This was determined by analyzing the flows after provisioning. This suggests that extra steps would be needed to ensure that the flexibility of the upper layer network does not make the entire network more vulnerable. The star topology performed as expected with a vulnerability at the center of the star. Table 1 shows the information that was collected on the Gabriel network. Computation time (sec.) and errors are discussed later in the paper.

The NIR metric shown in Table 2 reflects the vulnerabilities shown in Table 1 and in Fig. 10. The Gabriel #1 topology has the fewest vulnerabilities with a threat radius of 15. To compute the NIR, the minimum network measure \(X_{\min }\) was swept at intervals of 10 % from 40 to 90 %, approximating the probability curve. It is noteworthy that as \(\alpha\) grew to 16, the differences between the topologies became more apparent. This is because the #1 topology had very few vulnerabilities when X(S) was less than 50 %. Figure 11 shows the impact curve and the approximated probability distribution for Gabriel #1 and #3 topologies. With topology #3, it is apparent that the higher probability of network failure at a lower network measure drives the NIR up for that topology.

8.2 EU Network

For the figures associated with the EU network, we use the same notation used with the Gabriel network. In a similar manner to the Gabriel network, Fig. 12a–e illustrates that as the threat radius grew, the vulnerable area grew. Perhaps the most noteworthy point is that when the upper layer network was denser, the network was more vulnerable. We saw this same effect with the Gabriel network. Also notable is that the loop topology was as resilient to geographic events as any other topology. Table 3 shows the information that was collected on the EU network. Computation time and errors are discussed later in the paper.

Fig. 12 EU network. Lower layer network is shown with solid lines. Upper layer network is shown with dotted lines. a EU network, L2 network #1, threat radius = 2.5, redundancy factor = 1, network test = 50 %, b EU network, L2 network #1, threat radius = 3.75, redundancy factor = 1, network test = 50 %, c EU network, L2 network #1, threat radius = 5.0, redundancy factor = 1, network test = 50 %, d EU network, L2 network #2, threat radius = 3.75, redundancy factor = 1, network test = 50 %, e EU network, L2 network #3, threat radius = 3.75, redundancy factor = 1, network test = 50 %

The NIR metric shown in Table 4 reflects the vulnerabilities shown in Table 3. Note that the NIR favors different alternatives for different \(\alpha\) values. With \(\alpha = 1\), the NIR indicates that the EU #2 topology is more resilient. At \(\alpha = 16\), the EU #1 and #3 topologies are more resilient. The reason for this non-intuitive result is that all three upper topologies perform similarly when the concern is equal for the loss of 10–90 % of the demands. However, when the loss of 60 % of the demands is weighted more heavily \((\alpha = 16)\), the loop and partial mesh topologies (\(\#1\) and \(\#3\)) are the clear favorites. The intuition for the results seems to hold.

8.3 ATTL1 Network

Figure 13a–c show that the area of vulnerability seems to follow a line from the northeast to the southwest U.S. During the initial multilayer provisioning process, only two flows were created across the network in the western part of the country. One path was near the vulnerabilities marked and the other path was through the middle part of the country, entirely ignoring the path on the northern part of the topology. Even with redundancy augmentation, capacity was not added as no additional initial capacity was needed.

Fig. 13 ATTL1 network. Lower layer network is shown with solid lines. Upper layer network is shown with dotted lines. a ATTL1 network, L2 topology #1, threat radius = 1.0, redundancy factor = 1, network test = 70 %, b ATTL1 network, L2 topology #1, threat radius = 1.5, redundancy factor = 1, network test = 70 %, c ATTL1 network, L2 topology #1, threat radius = 2.0, redundancy factor = 1, network test = 70 %, d ATTL1 network, L2 topology #2, threat radius = 1.5, redundancy factor = 1, network test = 70 %, e ATTL1 network, L2 topology #2, threat radius = 2.0, redundancy factor = 1, network test = 70 %

The provisioning algorithms (used in this work) use a shortest path algorithm to find paths. It may be required to investigate other methods to generate paths in order to encourage more path diversity. Interestingly, the large network tended to follow traditional assumptions, with sparse upper layer networks faring worse than more dense upper layer networks.

Figure 14 shows the results of the clustering algorithm. The dark lines are a tree with the new clustered location at the base of the tree. The nodes that are clustered are at the leaves of the tree. When the threat radius is large (in this example it is 2.0) and the cluster factor is high (9), considerable clustering occurs.

The NIR also agrees with Table 5 and Fig. 13d, which shows the most resilient topology to be topology 1.

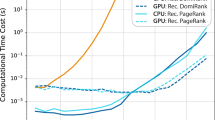

8.4 Performance of the Algorithm

In Fig. 15a, the effect that the threat radius has on processing time is shown normalized against the processing time at the largest threat radius. It is intuitive for the Gabriel and EU networks that the processing time rises as the threat radius increases. For the ATTL1 network, the processing time is relatively flat. This is because the complexity is controlled by the clustering algorithm. The plot of the clustering factor versus processing time is included to demonstrate this. In Fig. 15b, we see the vulnerable area versus the threat radius normalized against the total area in the network. The vulnerable area increases as would be expected when the threat radius is larger.

One of the concerns about using clustering to reduce the number of states to be analyzed was the accuracy of the predicted vulnerability. In the Gabriel and EU networks (without clustering), we did not observe any errors. In the ATTL1 network, the effect of clustering on accuracy is shown in Table 5. As the number of clusters is reduced, errors do rise. However, even with 4 clusters the percentage of errors was still less than 1 % of 3000 random attacks.

9 Discussion

During the SP-NSG process, one of the important benefits of state space pruning is that it can be used to find geographic vulnerabilities in networks. This benefit is exploited in this work. The NIR has the primary benefit that it can be used with different network tests, which allows the NIR to be configured specifically to the mission of the network and its applications (Table 6).

However, for large networks, the vulnerability analysis can still become intractable. To maintain tractability in large networks, we also present a K-means clustering method. It relies on the idea of using clustering to reduce the number of nodes for the purposes of the state based analysis. Matrix transformation is used both for the K-means clustering approach and for the multilayer testing methods that map geographic failures simultaneously onto multiple layers.

Small (16 node), medium (28 node), and large (383 node) networks with several different upper layer topologies are used to demonstrate our approach. Various threat radii were tested both for performance and for impact on vulnerability. Typical results were seen, with increased areas of vulnerability for larger threat radii. The performance was also reduced with larger threat radii. The notable exception is when other techniques that manage the growth in complexity, such as clustering, are used. Maximum simultaneous node failures could also be used to manage complexity in a similar way.

Attacks were simulated to test the accuracy of the SP-NSG with clustering. Various clustering factors are tested for performance and accuracy. As the clustering factor is reduced, the number of nodes reduced to a single node is increased. This decreases accuracy but increases performance. Nine nodes in a threat radius seemed to provide good performance gains while maintaining reasonable accuracy. Different upper layer topologies were also tested. The selection of the topology had dramatic and sometimes unpredictable results. Loop topologies generally perform well with geographic attacks. Mesh topologies do not always perform as expected. This is related to vulnerabilities created by the initial provisioning algorithm.

The NIR was also demonstrated on the three test networks. It consistently followed our intuition on the prediction of resilient networks. We found success using the NIR with a high \(\alpha\) value to predict resiliency. It would be interesting to learn more about the application of this metric with \(\alpha = 1\) (or even less than one) and possibly its relationship with availability or reliability.

With respect to disaster planning, one factor that is not covered in this work is the communication of mission critical requirement information between the users and the network. In [49], extended Service Level Agreements (SLAs) are proposed for this purpose.

10 Conclusions

In this work, we discuss the network design process as it relates to disaster planning and present a novel network resilience metric (NIR) and a reduced state based method to analyze multilayer networks for geographic vulnerabilities known as the Self-Pruning Network State Generation (SP-NSG) algorithm. The SP-NSG uses the idea of pruning the state space during the execution of the algorithm to reduce the number of network states to test.

The overall results demonstrate that it is possible to use state based techniques and the NIR to efficiently analyze large multilayer networks for specific types of failure modes like geographically correlated failures. In the future, network testing for multilayer networks needs to be expanded. With different objectives for various mission critical networks, innovative network testing should be created to address the different objectives. In addition, most network tests of multilayer networks are not efficient for large node sets or large demand sets. More efficient multilayer network testing would also be beneficial.

References

Standley, J., Brown, V., Comitz, P., Schoolfield, J.: SWIM segment 2 deployment and utilization in NextGen R&D programs. In: Integrated Communications, Navigation and Surveillance Conference (ICNS), 2012, pp. G8-1–G8-5 (2012)

Sterbenz, J.P.G., Hutchison, D., Çetinkaya, E.K., Jabbar, A., Rohrer, J.P., Schöller, M., Smith, P.: Resilience and survivability in communication networks: strategies, principles, and survey of disciplines. Comput. Netw. 54(8), 1245–1265 (2010)

Habib, M.F., Tornatore, M., Dikbiyik, F., Mukherjee, B.: Disaster survivability in optical communication networks. Comput. Commun. 36(6), 630–644 (2013)

Banerjee, S., Shirazipourazad, S., Sen, A.: Design and analysis of networks with large components in presence of region-based faults. In: 2011 IEEE International Conference on Communications (ICC), pp. 1–6. IEEE (2011)

Li, R., Wang, X., Jiang, X.: Network survivability against region failure. In: 2011 IEEE International Conference on Signal Processing, Communications and Computing (ICSPCC), pp. 1–6. IEEE (2011)

Rahnamay-Naeini, M., Pezoa, J.E., Azar, G., Ghani, N., Hayat, M.M.: Modeling stochastic correlated failures and their effects on network reliability. In: 2011 Proceedings of 20th International Conference on Computer Communications and Networks (ICCCN), pp. 1–6. IEEE (2011)

Neumayer, S., Modiano, E.: Network reliability with geographically correlated failures. In: 2010 Proceedings IEEE INFOCOM, pp. 1–9. IEEE (2010)

Saito, H.: Analysis of geometric disaster evaluation model for physical networks. IEEE/ACM Trans. Netw. 23(6), 1777–1789 (2015)

Agarwal, P.K., Efrat, A., Ganjugunte, S.K., Hay, D., Sankararaman, S., Zussman, G.: Network vulnerability to single, multiple, and probabilistic physical attacks. In: Military Communications Conference, 2010—MILCOM 2010, pp. 1824–1829. IEEE (2010)

Agarwal, P.K., Efrat, A., Ganjugunte, S.K., Hay, D., Sankararaman, S., Zussman, G.: The resilience of wdm networks to probabilistic geographical failures. IEEE/ACM Trans. Netw.: TON 21(5), 1525–1538 (2013)

Fumagalli, A., Valcarenghi, L.: Ip restoration vs. wdm protection: is there an optimal choice? IEEE Netw. 14(6), 34–41 (2000)

Cholda, P., Tapolcai, J., Cinkler, T., Wajda, K., Jajszczyk, A.: Quality of resilience as a network reliability characterization tool. IEEE Netw. 23(2), 11–19 (2009)

Medhi, D.: A unified approach to network survivability for teletraffic networks: models, algorithms and analysis. IEEE Trans. Commun. 42, 534–548 (1994)

Medhi, D., Khurana, R.: Optimization and performance of network restoration schemes for wide-area teletraffic networks. J. Netw. Syst. Manag. 3, 265–294 (1995)

Pióro, M., Medhi, D.: Routing, Flow, and Capacity Design in Communication and Computer Networks. Elsevier, Amsterdam (2004)

Vasseur, J.-P., Pickavet, M., Demeester, P.: Network Recovery: Protection and Restoration of Optical, SONET-SDH, IP, and MPLS. Elsevier, Amsterdam (2004)

Oikonomou, K.N., Sinha, R.K., Doverspike, R.D.: Multi-layer network performance and reliability analysis. Int. J. Interdiscip. Telecommun. Netw.: IJITN 1(3), 1–30 (2009)

Gardner, M.T., Beard, C., Medhi, D.: Using network measure to reduce state space enumeration in resilient networks. In: 2013 9th International Conference on the Design of Reliable Communication Networks (DRCN), pp. 250–257. IEEE (2013)

Lee, K., Modiano, E., Lee, H.-W.: Cross-layer survivability in wdm-based networks. IEEE/ACM Trans. Netw.: TON 19(4), 1000–1013 (2011)

Pacharintanakul, P., Tipper, D.: Crosslayer survivable mapping in overlay-ip-wdm networks. In: 7th International Workshop on Design of Reliable Communication Networks, 2009. DRCN 2009, pp. 168–174. IEEE (2009)

Oki, E., Matsuura, N., Shiomoto, K., Yamanaka, N.: A disjoint path selection scheme with shared risk link groups in gmpls networks. IEEE Commun. Lett. 6(9), 406–408 (2002)

Lee, H.-W., Modiano, E., Lee, K.: Diverse routing in networks with probabilistic failures. IEEE/ACM Trans. Netw. 18(6), 1895–1907 (2010)

Esmaeili, M., Peng, M., Khan, S., Finochietto, J., Jin, Y., Ghani, N.: Multi-domain dwdm network provisioning for correlated failures. In: Optical Fiber Communication Conference and Exposition (OFC/NFOEC), 2011 and the National Fiber Optic Engineers Conference, pp. 1–3. IEEE (2011)

Diaz, O., Feng, X., Min-Allah, N., Khodeir, M., Peng, M., Khan, S., Ghani, N.: Network survivability for multiple probabilistic failures. IEEE Commun. Lett. 16(8), 1320–1323 (2012)

Yu, H., Qiao, C., Anand, V., Liu, X., Di, H., Sun, G.: Survivable virtual infrastructure mapping in a federated computing and networking system under single regional failures. In: 2010 IEEE Global Telecommunications Conference (GLOBECOM 2010), pp. 1–6 (2010)

Rahman, M.R., Boutaba, R.: SVNE: survivable virtual network embedding algorithms for network virtualization. IEEE Trans. Netw. Serv. Manag. 10(2), 105–118 (2013)

Medhi, D.: Network Reliability and Fault-Tolerance. Wiley Encyclopedia of Electrical and Electronics Engineering, Hoboken (1999)

Menascé, D.A., Almeida, V.: Capacity Planning for Web Services: Metrics, Models, and Methods. Prentice Hall PTR, Upper Saddle River (2001)

Colbourn, C.J.: Reliability issues in telecommunications network planning. In: Sansò, B., Soriano, P. (eds.) Telecommunications Network Planning, pp. 135–146. Springer (1999)

Ball, M.O., Colbourn, C.J., Provan, J.S.: Network reliability. Handb. Oper. Res. Manag. Sci. 7, 673–762 (1995)

Li, V.O.K., Silvester, J.A.: Performance analysis of networks with unreliable components. IEEE Trans. Commun. 32(10), 1105–1110 (1984)

Jarvis, J.P., Shier, D.R.: An improved algorithm for approximating the performance of stochastic flow networks. INFORMS J. Comput. 8(4), 355–360 (1996)

Dotson, W., Gobien, J.: A new analysis technique for probabilistic graphs. IEEE Trans. Circuits Syst. 26(10), 855–865 (1979)

Gomes, T., Craveirinha, J., Martins, L.: An efficient algorithm for sequential generation of failure states in a network with multi-mode components. Reliab. Eng. Syst Saf. 77(2), 111–119 (2002)

Bigdeli, A., Tizghadam, A., Leon-Garcia, A.: Comparison of network criticality, algebraic connectivity, and other graph metrics. In: Proceedings of the 1st Annual Workshop on Simplifying Complex Network for Practitioners, pp. 4. ACM (2009)

Long, X., Tipper, D., Gomes, T.: Measuring the survivability of networks to geographic correlated failures. Opt. Switch. Netw. 14, 117–133 (2014)

Çetinkaya, E.K., Alenazi, M.J.F., Cheng, Y., Peck, A.M., Sterbenz, J.P.G.: A comparative analysis of geometric graph models for modelling backbone networks. Opt. Switch. Netw. 14(Part 2), 95–106 (2014) (Special Issue on (RNDM) 2013)

Fay, D., Haddadi, H., Thomason, A., Moore, A.W., Mortier, R., Jamakovic, A., Uhlig, S., Rio, M.: Weighted spectral distribution for internet topology analysis: theory and applications. IEEE/ACM Trans. Netw. 18(1), 164–176 (2010)

Sterbenz, J.P.G., Cetinkaya, E.K., Hameed, M.A., Jabbar, A., Rohrer, J.P.: Modelling and analysis of network resilience. In: 2011 Third International Conference on Communication Systems and Networks (COMSNETS), pp. 1–10. IEEE (2011)

Ahn, Y.-Y., Han, S., Kwak, H., Moon, S., Jeong, H.: Analysis of topological characteristics of huge online social networking services. In: Proceedings of the 16th International Conference on World Wide Web, pp. 835–844. ACM (2007)

Garbin, D., Shortle, J.: Critical thinking: moving from infrastructure protection to infrastructure resiliency. Critical Infrastructure Protection Program Discussion Paper Series (2007)

Manzano, M., Calle, E., Torres-Padrosa, V., Segovia, J., Harle, D.: Endurance: a new robustness measure for complex networks under multiple failure scenarios. Comput. Netw. 57(17), 3641–3653 (2013)

Menth, M., Duelli, M., Martin, R., Milbrandt, J.: Resilience analysis of packet-switched communication networks. IEEE/ACM Trans. Netw.: TON 17(6), 1950–1963 (2009)

Gardner, M.T., Beard, C.: Evaluating geographic vulnerabilities in networks. In: 2011 IEEE International Workshop Technical Committee on Communications Quality and Reliability (CQR), pp. 1–6. IEEE (2011)

Gardner, M.T., May, R., Beard, C., Medhi, D.: Finding geographic vulnerabilities in multilayer networks using reduced network state enumeration. In: 2015 11th International Conference on the Design of Reliable Communication Networks (DRCN), pp. 49–56. IEEE (2015)

Orlowski, S., Wessäly, R., Pióro, M., Tomaszewski, A.: SNDlib 1.0-survivable network design library. Networks 55(3), 276–286 (2010)

Sterbenz, J.P.G., Cetinkaya, E.K., Hameed, M.A., Jabbar, A., Qian, S., Rohrer, J.P.: Evaluation of network resilience, survivability, and disruption tolerance: analysis, topology generation, simulation, and experimentation. Telecommun. Syst. 52(2), 705–736 (2013)

Sterbenz, J.P.G., Rohrer, J.P., Cetinkaya, E.K., Alenazi, M.J.F., Cosner, A., Rolfe, J.: KU-Topview Network Topology Tool, The University of Kansas. http://www.ittc.ku.edu/resilinets/maps/, [Online; accessed 30-October-2013] (2010)

Gardner, M.T., Cheng, Y., May, R., Beard, C., Sterbenz, J., Medhi, D.: Creating network resilience against disasters using service level agreements. In: 2016 12th International Conference on the Design of Reliable Communication Networks (DRCN’2016), Paris, France. IEEE (2016)

Acknowledgments

This research is supported in part by the National Science Foundation under Grant No. CNS-1217736 and by the Federal Aviation Administration under Cooperative Agreement No. 11-G-0182.

Cite this article

Gardner, M.T., May, R., Beard, C. et al. Determining Geographic Vulnerabilities Using a Novel Impact Based Resilience Metric. J Netw Syst Manage 24, 711–745 (2016). https://doi.org/10.1007/s10922-016-9383-y

DOI: https://doi.org/10.1007/s10922-016-9383-y