Abstract

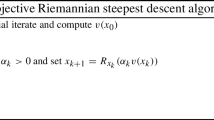

In this paper, we present an inexact version of the steepest descent method with Armijo’s rule for multicriteria optimization on Riemannian manifolds proposed by Bento et al. (J. Optim. Theory Appl., 154: 88–107, 2012). Under mild assumptions on the multicriteria function, we prove that each accumulation point (if any) satisfies first-order necessary conditions for Pareto optimality. Moreover, assuming that the multicriteria function is quasiconvex and the Riemannian manifold has nonnegative curvature, we show full convergence of any sequence generated by the method to a Pareto critical point; that is, the whole sequence, not merely a subsequence, converges.
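To illustrate the mechanics only, here is a minimal Python sketch of one standard variant: the exact (not inexact) multicriteria steepest descent step of Fliege and Svaiter, carried over to manifolds as in Bento et al. (2012), with Armijo backtracking along geodesics of the unit sphere S², a manifold of positive (hence nonnegative) curvature. Everything concrete here is our assumption and is not taken from the paper: the two hypothetical test objectives f1(x) = x1 and f2(x) = x2, the helper names, the parameters `beta`, `tol` and `max_iter`, and the cap of 60 backtracking steps. The direction subproblem is solved in closed form for two objectives, whereas the paper's inexact method only requires an approximate solution; a vanishing direction v(x) = 0 is used as the (approximate) Pareto criticality test. The starting point must lie on the sphere.

```python
import math

# Hypothetical test problem (our assumption, not from the paper):
# minimize F(x) = (x[0], x[1]) over the unit sphere S^2 in R^3.
def objectives(x):
    return [x[0], x[1]]

def euclidean_gradients(x):
    # Gradients of the linear objectives are constant.
    return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def riemannian_gradient(x, g):
    # Project a Euclidean gradient onto the tangent space T_x S^2 = {v : <v, x> = 0}.
    s = dot(g, x)
    return [gi - s * xi for gi, xi in zip(g, x)]

def descent_direction(g1, g2):
    # Exact solution of min_v max_i <g_i, v> + |v|^2 / 2 for two objectives:
    # v = -w, where w is the minimum-norm point of the segment [g1, g2].
    diff = [a - b for a, b in zip(g1, g2)]
    denom = dot(diff, diff)
    lam = 0.5 if denom == 0.0 else min(1.0, max(0.0, -dot(g2, diff) / denom))
    return [-(lam * a + (1.0 - lam) * b) for a, b in zip(g1, g2)]

def sphere_exp(x, v):
    # Exponential map of S^2: follow the great circle leaving x with velocity v.
    nv = math.sqrt(dot(v, v))
    if nv == 0.0:
        return list(x)
    y = [math.cos(nv) * xi + math.sin(nv) * vi / nv for xi, vi in zip(x, v)]
    ny = math.sqrt(dot(y, y))  # renormalize to absorb floating-point drift
    return [yi / ny for yi in y]

def multicriteria_steepest_descent(x, beta=1e-4, tol=1e-6, max_iter=500):
    for _ in range(max_iter):
        g = [riemannian_gradient(x, ge) for ge in euclidean_gradients(x)]
        v = descent_direction(g[0], g[1])
        if math.sqrt(dot(v, v)) <= tol:
            break  # v(x) is (numerically) zero: x is approximately Pareto critical
        fx = objectives(x)
        slopes = [dot(gi, v) for gi in g]  # each slope is negative when v != 0
        t = 1.0
        for _ in range(60):  # Armijo backtracking over t = 1, 1/2, 1/4, ...
            y = sphere_exp(x, [t * vi for vi in v])
            # Accept t only if every objective decreases sufficiently.
            if all(fy <= f + beta * t * s
                   for fy, f, s in zip(objectives(y), fx, slopes)):
                break
            t *= 0.5
        else:
            break  # no acceptable step size found; stop
        x = y
    return x

if __name__ == "__main__":
    x_star = multicriteria_steepest_descent([0.0, 0.0, 1.0])
    print(x_star)  # close to [-1/sqrt(2), -1/sqrt(2), 0]
```

Starting from the north pole, the iterates stay (up to rounding) in the plane x1 = x2 and approach (-1/√2, -1/√2, 0), where the average of the two Riemannian gradients vanishes. This toy run only shows how one iteration combines the direction subproblem, the exponential map and the Armijo test; it is not meant to illustrate the full-convergence theorem, whose quasiconvexity hypothesis we have not checked for these test functions.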

References

Jahn, J.: Vector Optimization. Springer, Berlin (2011)

Graña Drummond, L.M., Maculan, N., Svaiter, B.F.: On the choice of parameters for the weighting method in vector optimization. Math. Program. 111, 201–216 (2008)

Graña Drummond, L.M., Svaiter, B.F.: A steepest descent method for vector optimization. J. Comput. Appl. Math. 175(2), 395–414 (2005)

Graña Drummond, L.M., Iusem, A.N.: A projected gradient method for vector optimization problems. Comput. Optim. Appl. 28(1), 5–29 (2004)

Fliege, J., Svaiter, B.F.: Steepest descent methods for multicriteria optimization. Math. Methods Oper. Res. 51(3), 479–494 (2000)

Fukuda, E.H., Graña Drummond, L.M.: On the convergence of the projected gradient method for vector optimization. Optimization 60, 1009–1021 (2011)

Fukuda, E.H., Graña Drummond, L.M.: Inexact projected gradient method for vector optimization. Comput. Optim. Appl. 54(3), 473–493 (2013)

Bento, G.C., Ferreira, O.P., Oliveira, P.R.: Unconstrained steepest descent method for multicriteria optimization on Riemannian manifolds. J. Optim. Theory Appl. 154, 88–107 (2012)

Bello Cruz, J.Y., Lucambio Pérez, L.R., Melo, J.G.: Convergence of the projected gradient method for quasiconvex multiobjective optimization. Nonlinear Anal. 74, 5268–5273 (2011)

Takayama, A.: Mathematical Economics. Cambridge Univ. Press, Cambridge (1997)

Gromicho, J.: Quasiconvex Optimization and Location Theory. Kluwer Academic Publishers, Dordrecht (1998)

Bonnel, H., Iusem, A.N., Svaiter, B.F.: Proximal methods in vector optimization. SIAM J. Optim. 15(4), 953–970 (2005)

Fliege, J., Graña Drummond, L.M., Svaiter, B.F.: Newton’s method for multiobjective optimization. SIAM J. Optim. 20(2), 602–626 (2009)

Ceng, L.C., Mordukhovich, B.S., Yao, J.C.: Hybrid approximate proximal method with auxiliary variational inequality for vector optimization. J. Optim. Theory Appl. 146, 267–303 (2010)

Udriste, C.: Convex Functions and Optimization Algorithms on Riemannian Manifolds. Mathematics and Its Applications, vol. 297. Kluwer Academic Publishers, Dordrecht (1994)

Rapcsák, T.: Smooth Nonlinear Optimization in ℝⁿ. Kluwer Academic Publishers, Dordrecht (1997)

Absil, P.-A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton (2008)

Wang, J.H., López, G., Martín-Márquez, V., Li, C.: Monotone and accretive vector fields on Riemannian manifolds. J. Optim. Theory Appl. 146, 691–708 (2010)

Bento, G.C., Ferreira, O.P., Oliveira, P.R.: Local convergence of the proximal point method for a special class of nonconvex functions on Hadamard manifolds. Nonlinear Anal. 73, 564–572 (2010)

Li, C., Mordukhovich, B.S., Wang, J.H., Yao, J.C.: Weak sharp minima on Riemannian manifolds. SIAM J. Optim. 21, 15–23 (2011)

Cruz Neto, J.X., Ferreira, O.P., Lucambio Pérez, L.R., Németh, S.Z.: Convex- and monotone-transformable mathematical programming problems and a proximal-like point method. J. Glob. Optim. 35, 53–69 (2006)

Bento, G.C., Melo, J.G.: A subgradient method for convex feasibility on Riemannian manifolds. J. Optim. Theory Appl. 152, 773–785 (2012)

Cruz Neto, J.X., de Lima, L.L., Oliveira, P.R.: Geodesic algorithms in Riemannian geometry. Balk. J. Geom. Appl. 3(2), 89–100 (1998)

do Carmo, M.P.: Riemannian Geometry. Birkhäuser, Boston (1992)

Papa Quiroz, E.A., Quispe, E.M., Oliveira, P.R.: Steepest descent method with a generalized Armijo search for quasiconvex functions on Riemannian manifolds. J. Math. Anal. Appl. 341(1), 467–477 (2008)

do Carmo, M.P.: Differential Geometry of Curves and Surfaces. Prentice Hall, New Jersey (1976)

Yamaguchi, T.: Locally geodesically quasiconvex functions on complete Riemannian manifolds. Trans. Am. Math. Soc. 298(1), 307–330 (1986)

Rapcsák, T.: Sectional curvature in nonlinear optimization. J. Glob. Optim. 40(1–3), 375–388 (2008)

Bento, G.C., Cruz Neto, J.X., Oliveira, P.R.: Convergence of inexact descent methods for nonconvex optimization on Riemannian manifolds (2011). arXiv:1103.4828

Acknowledgements

The authors are grateful to the anonymous referees, whose suggestions helped us improve the presentation of this paper. The first author was partially supported by CNPq Grant 471815/2012-8, Project CAPES-MES-CUBA 226/2012, PROCAD-nf-UFG/UnB/IMPA, and FAPEG/CNPq. The second author was partially supported by CNPq Grant 301625-2008 and PRONEX-Optimization (FAPERJ/CNPq).

Additional information

Communicated by Alfredo Iusem.

About this article

Cite this article

Bento, G.C., da Cruz Neto, J.X. & Santos, P.S.M. An Inexact Steepest Descent Method for Multicriteria Optimization on Riemannian Manifolds. J Optim Theory Appl 159, 108–124 (2013). https://doi.org/10.1007/s10957-013-0305-9

DOI: https://doi.org/10.1007/s10957-013-0305-9