Abstract

This paper proposes a modified version of the spectral conjugate gradient algorithm of Jian, Chen, Jiang, Zeng and Yin. New spectral parameters are introduced, based on the modified secant condition and quasi-Newton directions. The resulting search direction satisfies the sufficient descent property independently of the line search, and the new method is proved to be globally convergent for general nonlinear functions under some standard assumptions. Numerical experiments indicate promising behavior of the new algorithm, especially on large-scale problems.
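
For orientation, the terms used in the abstract can be written out in the notation that is standard for spectral conjugate gradient methods. This is only a sketch of the general framework: the symbols $\theta_k$, $\beta_k$, $\alpha_k$ and $c$ are assumed here for illustration, and the specific spectral parameters proposed in the paper are not stated in the abstract and are not reproduced.

$$
\begin{aligned}
&\min_{x \in \mathbb{R}^n} f(x), \qquad g_k = \nabla f(x_k), \qquad x_{k+1} = x_k + \alpha_k d_k, \\
&d_0 = -g_0, \qquad d_k = -\theta_k g_k + \beta_k d_{k-1} \quad (k \ge 1), \\
&g_k^{T} d_k \le -c\,\|g_k\|^2 \quad \text{for all } k \ge 0 \text{ and some constant } c > 0 .
\end{aligned}
$$

Here $\alpha_k$ is the step length from the line search, $\theta_k$ is the spectral parameter and $\beta_k$ is the conjugate gradient parameter. The last inequality is the sufficient descent property; "independent of the line search" means that it holds for whatever step length $\alpha_k$ is chosen.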

References

Hestenes, M.R., Stiefel, E.: Methods of conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand. 49(6), 409–436 (1952)

Fletcher, R., Reeves, C.: Function minimization by conjugate gradients. Comput. J. 7(2), 149–154 (1964)

Polak, E., Ribière, G.: Note sur la convergence des méthodes de directions conjuguées. Rev. Fr. Inf. Rech. Oper. 16, 35–43 (1969)

Polyak, B.T.: The conjugate gradient method in extreme problems. USSR Comput. Math. Math. Phys. 9, 94–112 (1969)

Dai, Y.H., Liao, L.Z.: New conjugacy conditions and related nonlinear conjugate gradient methods. Appl. Math. Optim. 43(1), 87–101 (2001)

Fletcher, R.: Practical Methods of Optimization. Unconstrained Optimization, vol. 1. Wiley, New York (1987)

Dai, Y.H., Yuan, Y.: A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10(1), 177–182 (1999)

Hager, W.W., Zhang, H.: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16(1), 170–192 (2005)

Dai, Y., Kou, C.: A nonlinear conjugate gradient algorithm with an optimal property and an improved Wolfe line search. SIAM J. Optim. 23(1), 296–320 (2013)

Yu, G.H., Guan, L.T., Chen, W.F.: Spectral conjugate gradient methods with sufficient descent property for large-scale unconstrained optimization. Optim. Methods Softw. 23(2), 275–293 (2008)

Jian, J., Chen, Q., Jiang, X., Zeng, Y., Yin, J.: A new spectral conjugate gradient method for large-scale unconstrained optimization. Optim. Methods Softw. 32(3), 503–515 (2017)

Amini, K., Faramarzi, P., Pirfalah, N.: A modified Hestenes–Stiefel conjugate gradient method with an optimal property. Optim. Methods Softw. (2018). https://doi.org/10.1080/10556788.2018.1457150

Dong, X.L., Han, D., Dai, Zh, Li, L., Zhu, J.: An accelerated three-term conjugate gradient method with sufficient descent condition and conjugacy condition. J. Optim. Theory Appl. (2018). https://doi.org/10.1007/s10957-018-1377-3

Liu, H., Liu, Z.: An efficient Barzilai–Borwein conjugate gradient method for unconstrained optimization. J. Optim. Theory Appl. (2018). https://doi.org/10.1007/s10957-018-1393-3

Barzilai, J., Borwein, J.M.: Two-point step size gradient methods. IMA J. Numer. Anal. 8(1), 141–148 (1988)

Raydan, M.: The Barzilai and Borwein gradient method for the large scale unconstrained minimization problem. SIAM J. Optim. 7, 26–33 (1997)

Birgin, E.G., Martínez, J.M.: A spectral conjugate gradient method for unconstrained optimization. Appl. Math. Optim. 43, 117–128 (2001)

Perry, A.: A modified conjugate gradient algorithm. Oper. Res. 26, 1073–1078 (1978)

Andrei, N.: A scaled BFGS preconditioned conjugate gradient algorithm for unconstrained optimization. Appl. Math. Lett. 20, 645–650 (2007)

Andrei, N.: Another hybrid conjugate gradient algorithm for unconstrained optimization. Numer. Algorithms 47, 143–156 (2008)

Andrei, N.: Accelerated scaled memoryless BFGS preconditioned conjugate gradient algorithm for unconstrained optimization. Eur. J. Oper. Res. 204(3), 410–420 (2010)

Andrei, N.: New accelerated conjugate gradient algorithms as a modification of Dai–Yuan's computational scheme for unconstrained optimization. J. Comput. Appl. Math. 234, 3397–3410 (2010)

Babaie-Kafaki, S., Mahdavi-Amiri, N.: Two modified hybrid conjugate gradient methods based on a hybrid secant equation. Math. Model. Anal. 18(1), 32–52 (2013)

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, New York (2000)

Hager, W.W., Zhang, H.: A survey of nonlinear conjugate gradient methods. Pac. J. Optim. 2(1), 335–358 (2006)

Zoutendijk, G.: Nonlinear programming, computational methods. In: Abadie, J. (ed.) Integer and Nonlinear Programming, pp. 37–86. North-Holland Publishing Company, Amsterdam (1970)

Powell, M.J.D.: Non-convex minimization calculations and the conjugate gradient method. In: Griffiths, D.F. (ed.) Numerical Analysis, Lecture Notes in Mathematics 1066, pp. 122–141. Springer, Berlin (1984)

Al-Baali, M.: Descent property and global convergence of the Fletcher–Reeves method with inexact line search. IMA J. Numer. Anal. 5(1), 121–124 (1985)

Fatemi, M.: An optimal parameter for Dai–Liao family of conjugate gradient methods. J. Optim. Theory Appl. 169, 587–605 (2016)

Zheng, Y., Zheng, B.: Two new Dai–Liao-type conjugate gradient methods for unconstrained optimization problems. J. Optim. Theory Appl. 175, 502–509 (2017)

Andrei, N.: A Dai–Liao conjugate gradient algorithm with clustering of eigenvalues. Numer. Algorithms 77, 1273–1282 (2018)

Aminifard, Z., Babaie-Kafaki, S.: An optimal parameter choice for the Dai–Liao family of conjugate gradient methods by avoiding a direction of the maximum magnification by the search direction matrix. 4OR Q. J. Oper. Res. (2018). https://doi.org/10.1007/s10288-018-0387-1

Li, D.H., Fukushima, M.: A modified BFGS method and its global convergence in non-convex minimization. J. Comput. Appl. Math. 129(1–2), 15–35 (2001)

Zhou, W., Zhang, L.: A nonlinear conjugate gradient method based on the MBFGS secant condition. Optim. Methods Softw. 21(5), 707–714 (2006)

Gilbert, J.C., Nocedal, J.: Global convergence properties of conjugate gradient methods for optimization. SIAM J. Optim. 2(1), 21–42 (1992)

Andrei, N.: An unconstrained optimization test functions collection. Adv. Model. Optim. 10(1), 147–161 (2008)

Bongartz, I., Conn, A.R., Gould, N.I.M., Toint, Ph.L.: CUTE: Constrained and unconstrained testing environments. ACM Trans. Math. Softw. 21, 123–160 (1995)

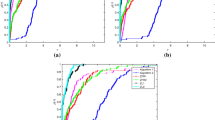

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201–213 (2002)

Acknowledgements

The authors are grateful to the anonymous referees and editor for suggestions and comments during the preparation of the paper.

Additional information

Alexandre Cabot.

About this article

Cite this article

Faramarzi, P., Amini, K. A Modified Spectral Conjugate Gradient Method with Global Convergence. J Optim Theory Appl 182, 667–690 (2019). https://doi.org/10.1007/s10957-019-01527-6

DOI: https://doi.org/10.1007/s10957-019-01527-6

Keywords

- Global convergence

- Sufficient descent property

- Unconstrained optimization

- Spectral conjugate gradient

- Modified secant condition