Abstract

We study a new technique to check the existence of feasible points for mixed-integer nonlinear optimization problems that satisfy a structural requirement called granularity. For granular optimization problems, we show how rounding the optimal points of certain purely continuous optimization problems can lead to feasible points of the original mixed-integer nonlinear problem. To this end, we generalize results for the mixed-integer linear case from Neumann et al. (Comput Optim Appl 72:309–337, 2019). We study some additional issues caused by nonlinearity and show how to overcome them by extending the standard granularity concept to an advanced version, which we call pseudo-granularity. In a computational study on instances from a standard test library, we demonstrate that pseudo-granularity can be expected in many nonlinear applications from practice, and that its explicit use can be beneficial.


References

Neumann, C., Stein, O., Sudermann-Merx, N.: A feasible rounding approach for mixed-integer optimization problems. Comput. Optim. Appl. 72, 309–337 (2019)

Danna, E., Rothberg, E., Le Pape, C.: Exploring relaxation induced neighborhoods to improve MIP solutions. Math. Program. 102, 71–90 (2005)

Papadimitriou, C.H., Steiglitz, K.: Combinatorial Optimization. Dover Publications, Mineola (1998)

Achterberg, T., Berthold, T.: Improving the feasibility pump. Discrete Optim. 4, 77–86 (2007)

Fischetti, M., Glover, F., Lodi, A.: The feasibility pump. Math. Program. 104, 91–104 (2005)

Fischetti, M., Salvagnin, D.: Feasibility pump 2.0. Math. Program. Comput. 1, 201–222 (2009)

Berthold, T., Gleixner, A.M.: Undercover: a primal MINLP heuristic exploring a largest sub-MIP. Math. Program. 144, 315–346 (2014)

Berthold, T.: RENS—the optimal rounding. Math. Program. Comput. 6, 33–54 (2014)

Bonami, P., Gonçalves, J.P.M.: Heuristics for convex mixed integer nonlinear programs. Comput. Optim. Appl. 51, 729–747 (2012)

Belotti, P., Kirches, C., Leyffer, S., Linderoth, J., Luedtke, J., Mahajan, A.: Mixed-integer nonlinear optimization. Acta Numer. 22, 1–131 (2013)

Stein, O.: Error bounds for mixed integer linear optimization problems. Math. Program. 156, 101–123 (2016)

Stein, O.: Error bounds for mixed integer nonlinear optimization problems. Optim. Lett. 10, 1153–1168 (2016)

Conforti, M., Cornuéjols, G., Zambelli, G.: Integer Programming. Springer, Cham (2014)

Grunewald, F., Segal, D.: How to Solve a Quadratic Equation in Integers. Mathematical Proceedings of the Cambridge Philosophical Society, vol. 89. Cambridge University Press, Cambridge (1981)

Siegel, C.L.: Zur Theorie der quadratischen Formen. Nachr. Akad. Wiss. Göttingen, Math.-Phys. Klasse, 21–46 (1972)

Bussieck, M.R., Drud, A.S., Meeraus, A.: MINLPLib—a collection of test models for mixed-integer nonlinear programming. INFORMS J. Comput. 15, 114–119 (2003)

Hart, W.E., Watson, J.-P., Woodruff, D.L.: Pyomo: modeling and solving mathematical programs in Python. Math. Program. Comput. 3, 219–260 (2011)

Wächter, A., Biegler, L.T.: On the implementation of an interior-point filter line-search algorithm for large-scale nonlinear programming. Math. Program. 106, 25–58 (2006)

COIN-OR. https://www.coin-or.org

MINLPLib. http://www.minlplib.org/instances.html

Bonami, P., Biegler, L.T., Conn, A.R., Cornuéjols, G., Grossmann, I.E., Laird, C.D., Lee, J., Lodi, A., Margot, F., Sawaya, N., Wächter, A.: An algorithmic framework for convex mixed integer nonlinear programs. Discrete Optim. 5, 186–204 (2008)

Bonami, P., Lee, J.: Bonmin users’ manual. Technical report, September (2009)

Hansen, E.: Global Optimization Using Interval Analysis. Marcel Dekker, New York (1992)

Neumaier, A.: Interval Methods for Systems of Equations. Cambridge University Press, Cambridge (1990)

Rockafellar, R.T.: Convex Analysis. Princeton University Press, Princeton (1970)

Fukushima, M., Pang, J.-S.: Some feasibility issues in mathematical programs with equilibrium constraints. SIAM J. Optim. 8, 673–681 (1998)

Audet, C., Hansen, P., Jaumard, B., Savard, G.: Links between linear bilevel and mixed 0–1 programming problems. J. Optim. Theory Appl. 93, 273–300 (1997)

Fortuny-Amat, J., McCarl, B.: A representation and economic interpretation of a two-level programming problem. J. Oper. Res. Soc. 32, 783–792 (1981)

Neumann, C., Stein, O., Sudermann-Merx, N.: Bounds on the objective value of feasible roundings (forthcoming)

Acknowledgements

The authors are grateful to the anonymous referees and the editor for their precise and substantial remarks on earlier versions of this manuscript.

Additional information

Communicated by Paul I. Barton.

Appendix

Computation of Lipschitz Constants

In this appendix, we identify some problem classes which are suitable for the computation of the necessary Lipschitz constants in Assumption 3.1. From the mean value theorem, the Hölder inequality and the Weierstrass theorem it is well known that for fixed \(x\in \mathrm{pr}_xD\) with a nonempty and compact set \(D(x)\) and a continuously differentiable (in y) function \(g_i(x,\cdot )\), \(i\in I\), the value

\[\max _{y\in D(x)}\Vert \nabla _y g_i(x,y)\Vert _1 \qquad (10)\]

is a Lipschitz constant for \(g_i(x,\cdot )\) on \(D(x)\) with respect to the \(\ell _\infty \)-norm.

To achieve uniform Lipschitz constants, let us define the ‘worst case’ Lipschitz constants among those in (10) with respect to \(x\in \mathrm{pr}_xD\),

\[L^i_\infty := \sup _{x\in \mathrm{pr}_xD}\ \max _{y\in D(x)}\Vert \nabla _y g_i(x,y)\Vert _1,\quad i\in I.\]

If we assume D to be a polytope, then the supremum is attained, and this yields

\[L^i_\infty = \max _{(x,y)\in D}\Vert \nabla _y g_i(x,y)\Vert _1. \qquad (11)\]

Note that \(L^i_\infty \) is preferable over the Lipschitz constant

\[\max _{(x,y)\in D}\Vert \nabla g_i(x,y)\Vert _1\]

for \(g_i\) on D, since it yields a larger set \(T^-\). This shows that Assumption 3.1 is weaker than a general Lipschitz condition and illustrates our main motivation to require Lipschitz conditions only on the fibers \(\{x\}\times D(x)\).
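As a small worked illustration of the difference between a Lipschitz constant on the fibers and one on all of D, consider the function \(g(x,y)=10x+y\) on \(D=[0,1]^2\). Both the function and the set are chosen here purely for illustration and are not taken from the article.

```latex
\begin{align*}
\nabla_y g(x,y) &= 1, &
\max_{(x,y)\in D}\Vert \nabla_y g(x,y)\Vert_1 &= 1,\\
\nabla g(x,y) &= (10,1)^\intercal, &
\max_{(x,y)\in D}\Vert \nabla g(x,y)\Vert_1 &= 11.
\end{align*}
```

In this toy case the constant on the fibers is smaller by a factor of 11, because the strong dependence of g on the continuous variable x does not enter it.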

Example A.1

If D is a polytope and for some \(i\in I\) the entries of the gradient \(\nabla _y g_i\) are factorable functions, then techniques from interval arithmetic may be employed to compute \(L^i_\infty \) as a guaranteed upper bound for \(\max _{(x,y)\in D}\Vert \nabla _y g_i(x,y)\Vert _1\) (cf., e.g., [23, 24]). Again, since smaller Lipschitz constants lead to larger sets \(T^-\), tight upper bounds are beneficial for the consistency of \(T^-\). \(\square \)
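The interval-arithmetic idea of Example A.1 can be sketched in a few lines of Python. This is only a minimal illustration under assumptions: the function \(g(x,y)=x\,y_1y_2\), the box D and all helper names (`imul`, `iabs_upper`, `lipschitz_upper_bound`) are hypothetical and chosen for this sketch, and the code ignores outward rounding, which a rigorous interval library would handle.

```python
from typing import Tuple

Interval = Tuple[float, float]  # (lower bound, upper bound)


def imul(a: Interval, b: Interval) -> Interval:
    """Interval product: the range of s * t for s in a and t in b."""
    products = (a[0] * b[0], a[0] * b[1], a[1] * b[0], a[1] * b[1])
    return (min(products), max(products))


def iabs_upper(a: Interval) -> float:
    """Upper bound for |s| over all s in the interval a."""
    return max(abs(a[0]), abs(a[1]))


def lipschitz_upper_bound(x: Interval, y1: Interval, y2: Interval) -> float:
    """Upper bound for max ||grad_y g||_1 over a box, for the
    illustrative (assumed) function g(x, y) = x * y1 * y2."""
    # grad_y g(x, y) = (x * y2, x * y1); enclose each entry by an interval.
    d1 = imul(x, y2)
    d2 = imul(x, y1)
    # The l1-norm is the sum of the absolute values of the entries.
    return iabs_upper(d1) + iabs_upper(d2)


# Hypothetical box D = [0, 2] x [-1, 3] x [-2, 1].
bound = lipschitz_upper_bound((0.0, 2.0), (-1.0, 3.0), (-2.0, 1.0))
print(bound)
```

For this particular function the interval bound happens to coincide with the true maximum; in general, interval arithmetic only guarantees an upper bound, which may overestimate.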

Example A.2

If for some \(i\in I\) we have \(g_i(x,y)=F_i(x)+\beta _i^\intercal y\), then (11) boils down to \(L^{i}_\infty = \Vert \beta _i\Vert _1\). This actually explains the inclusion ‘\(\supseteq \)’ in (2). \(\square \)

Example A.3

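If D is a polytope and for some \(i \in I\) the function \(g_i\) is quadratic with

\[\nabla _y g_i(x,y) = Q_yy + Q_xx + \beta ,\]

then

\[L^i_\infty = \max _{(x,y)\in D}\Vert Q_yy + Q_xx + \beta \Vert _1\]

may be computed by the vertex theorem of convex maximization [25, Corollary 32.3.4] as

\[L^i_\infty = \max _{(x,y)\in {{\,\mathrm{vert}\,}}D}\Vert Q_yy + Q_xx + \beta \Vert _1,\]

where \({{\,\mathrm{vert}\,}}D\) denotes the vertex set of D. Alternatively, we may obtain \(L^i_\infty \) as the optimal value of the linear program with complementarity constraints [26]

\[\max _{x,y,u,v}\ e^\intercal (u+v)\quad \text{s.t.}\quad Q_yy + Q_xx + \beta = u-v,\quad u^\intercal v = 0,\quad u,v\ge 0,\quad (x,y)\in D.\]

As a third possibility, \(L_\infty ^i\) can be computed as the optimal value of a mixed-integer linear optimization problem. In fact, modeling the complementarity constraint \(u^\intercal v = 0\) in the above LPCC by a big-M reformulation [27, 28] results in the problem

\[\max _{x,y,u,v,z}\ e^\intercal (u+v)\quad \text{s.t.}\quad Q_yy + Q_xx + \beta = u-v,\quad 0\le u\le {{\,\mathrm{diag}\,}}(M_u)\,z,\quad 0\le v\le {{\,\mathrm{diag}\,}}(M_v)\,(e-z),\quad z\in \{0,1\}^m,\quad (x,y)\in D,\]

with \(M_u,M_v \in \mathbb R^m\) large enough, where \({{\,\mathrm{diag}\,}}(M_u)\) and \({{\,\mathrm{diag}\,}}(M_v)\) denote the corresponding diagonal matrices, and e stands for the all ones vector.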

Notice that, in order to obtain a tight LP relaxation, we propose not one single big-M constant, but different big-M’s for each variable \(u_i\), \(v_i\), \(i=1,\dots , m\). We stress that good values for the entries of \(M_{u}\) and \(M_v\) can be computed explicitly from the problem data. Indeed, with \((q_x)_k^\intercal \) and \((q_y)_k^\intercal \) denoting row k of the matrices \(Q_x\) and \(Q_y\), respectively, we may set the kth entries of \(M_u\) and \(M_v\) to

\[(M_u)_k = \max _{(x,y)\in D}\bigl ((q_y)_k^\intercal y + (q_x)_k^\intercal x + \beta _k\bigr ),\qquad (M_v)_k = -\min _{(x,y)\in D}\bigl ((q_y)_k^\intercal y + (q_x)_k^\intercal x + \beta _k\bigr ), \qquad (12)\]

which comes at the cost of solving 2m LPs. The validity of these bounds for u and v immediately follows from the equality constraints \(Q_yy+ Q_xx + \beta = u-v\) together with the (remodeled) complementarity constraints.

As our numerical study in Sect. 7 reveals, separable quadratic constraints \(g_i\) constitute a relevant special case of this setting. Since they satisfy \(Q_x = 0\), explicit knowledge of box constraints for y, that is \(y \in [y^\ell , y^u]\) with \(y^\ell ,y^u \in \mathbb R^m\), allows us to compute valid (albeit possibly coarser) values for \((M_u)_k\) and \((M_v)_k\) as

\[(M_u)_k = \beta _k + \sum _{j=1}^m \max \bigl \{(q_y)_{kj}\,y^\ell _j,\ (q_y)_{kj}\,y^u_j\bigr \},\qquad (M_v)_k = -\beta _k - \sum _{j=1}^m \min \bigl \{(q_y)_{kj}\,y^\ell _j,\ (q_y)_{kj}\,y^u_j\bigr \}, \qquad (13)\]

where \((q_y)_{kj}\) denotes the entry at row k and column j of \(Q_y\). Note that these bounds are valid due to our previous construction and \(\mathrm{pr}_y D\subseteq [y^\ell ,y^u]\). In our computational study, we indeed use (12) and (13) to quickly compute \(M_{u}\) and \(M_v\).
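The box-based computation of big-M values for the case \(Q_x = 0\) can be sketched as follows. The function name `big_m_from_box`, the data, and the clipping of the results at zero are assumptions made for this illustration; they are not taken from the article. Each row of \(Q_yy+\beta \) is bounded from above and below termwise over the box \([y^\ell ,y^u]\).

```python
from typing import List, Sequence, Tuple


def big_m_from_box(
    Qy: Sequence[Sequence[float]],
    beta: Sequence[float],
    y_lo: Sequence[float],
    y_up: Sequence[float],
) -> Tuple[List[float], List[float]]:
    """Bounds M_u, M_v for u - v = Qy @ y + beta with y in [y_lo, y_up]."""
    M_u: List[float] = []
    M_v: List[float] = []
    for row, b in zip(Qy, beta):
        # Largest and smallest value of row @ y + b over the box,
        # obtained termwise since the box is a product of intervals.
        hi = b + sum(max(q * l, q * u) for q, l, u in zip(row, y_lo, y_up))
        lo = b + sum(min(q * l, q * u) for q, l, u in zip(row, y_lo, y_up))
        # Assumption of this sketch: clip at zero, since u, v >= 0 anyway.
        M_u.append(max(hi, 0.0))
        M_v.append(max(-lo, 0.0))
    return M_u, M_v


# Hypothetical data: two y-variables with y1 in [-1, 2] and y2 in [0, 4].
Qy = [[2.0, 0.0], [0.0, -1.0]]
beta = [1.0, -3.0]
M_u, M_v = big_m_from_box(Qy, beta, y_lo=[-1.0, 0.0], y_up=[2.0, 4.0])
print(M_u, M_v)
```

In this toy instance the second row is always negative on the box, so its bound for u is zero and the corresponding u-variable is fixed to zero in the big-M model.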

We remark that none of the three auxiliary problems above for the computation of \(L_\infty ^i\) is efficiently solvable in the sense that we may obtain an optimal value in polynomial time. However, our computational study reveals that the auxiliary MILP is quickly solvable for many practical applications. \(\square \)
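For very small instances in which D is a box, the vertex formula of Example A.3 can be evaluated by brute force. The following sketch enumerates all vertices of a box and returns the largest \(\ell _1\)-norm of \(Q_yy+Q_xx+\beta \). The function name and the data are hypothetical, and the restriction to boxes is an assumption of this sketch. The number of vertices grows exponentially with the dimension, which is in line with the remark on complexity above.

```python
from itertools import product
from typing import Sequence, Tuple

Box = Sequence[Tuple[float, float]]  # one (lower, upper) pair per variable


def lipschitz_constant_over_box_vertices(
    Qy: Sequence[Sequence[float]],
    Qx: Sequence[Sequence[float]],
    beta: Sequence[float],
    x_box: Box,
    y_box: Box,
) -> float:
    """Maximum of ||Qy @ y + Qx @ x + beta||_1 over all vertices of the box."""
    n = len(x_box)
    best = 0.0
    # Each vertex picks the lower or the upper bound in every coordinate.
    for vertex in product(*list(x_box), *list(y_box)):
        x, y = vertex[:n], vertex[n:]
        norm1 = 0.0
        for qy_row, qx_row, b in zip(Qy, Qx, beta):
            w = b
            w += sum(q * yj for q, yj in zip(qy_row, y))
            w += sum(q * xj for q, xj in zip(qx_row, x))
            norm1 += abs(w)
        best = max(best, norm1)
    return best


# Hypothetical data: x in [0, 1], y1 in [-1, 2], y2 in [0, 4].
L_inf = lipschitz_constant_over_box_vertices(
    Qy=[[2.0, 0.0], [0.0, -1.0]],
    Qx=[[1.0], [0.0]],
    beta=[1.0, -3.0],
    x_box=[(0.0, 1.0)],
    y_box=[(-1.0, 2.0), (0.0, 4.0)],
)
print(L_inf)
```

The enumeration is valid because the \(\ell _1\)-norm of an affine function is convex, so its maximum over a polytope is attained at a vertex.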

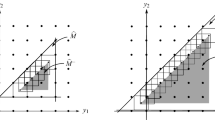

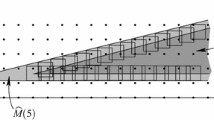

Objective Value of the Generated Feasible Point

Due to rounding effects as well as due to the necessary modifications on the transition from M to \({\widetilde{T}}_\rho ^-\), the point generated by FRA-SOR can of course not be expected to be optimal for MINLP. In fact, already for a two-dimensional purely integer linear problem with two inequality constraints and the inner parallel set \({\widehat{M}}^-=T^-\), Fig. 4 illustrates that the point generated by FRA-SOR may be far from optimal. Modifying the illustrated situation by making the angle between the two constraints more acute results in examples which move the constructed point arbitrarily far away from an optimal point.

To evaluate how ‘good’ a point generated by FRA-SOR is, one may compare its objective value with the optimal value \({\widehat{v}}\) of the continuously relaxed problem \(\widehat{\hbox {MINLP}}\). Since \({\widehat{v}}\) does not exceed the optimal value v of MINLP, which in turn does not exceed the objective value of any feasible point, the deviation of this objective value from v is nonnegative and bounded above by its difference to \({\widehat{v}}\). Note that in this bound we propose to use the optimal value \({\widehat{v}}\) of f over \({\widehat{M}}\) without any enlargement constructions, rather than the optimal value of f over \({\widehat{M}}_\rho \), since this leads to a tighter bound.

In contrast to such an a-posteriori bound on the objective value, from a structural point of view one may also be interested in a-priori bounds, which only depend on the problem data of MINLP. For FRA-SOR, the derivation of such bounds is indeed possible and further discussed in [29].
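The a-posteriori bound described above amounts to a single subtraction once the objective value of the rounded point and the optimal value of the continuous relaxation are available. The sketch below assumes a minimization problem; the function name, the tolerance parameter and the numbers are hypothetical and serve only as an illustration.

```python
def a_posteriori_gap(f_rounded: float, v_hat: float, tol: float = 1e-9) -> float:
    """Upper bound on f_rounded - v, where v is the (unknown) optimal value
    of the MINLP and v_hat is the optimal value of its continuous relaxation.

    Assumes minimization, so that v_hat <= v <= f_rounded.
    """
    if f_rounded < v_hat - tol:
        # A feasible point cannot be better than the relaxation bound.
        raise ValueError("objective value lies below the relaxation bound")
    return max(f_rounded - v_hat, 0.0)


# Hypothetical values: rounded point with objective 12.5, relaxation value 10.0.
gap = a_posteriori_gap(12.5, 10.0)
print(gap)
```

A gap of zero certifies that the generated point is optimal, while a large gap may stem either from a poor point or from a weak relaxation.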

Cite this article

Neumann, C., Stein, O. & Sudermann-Merx, N. Granularity in Nonlinear Mixed-Integer Optimization. J Optim Theory Appl 184, 433–465 (2020). https://doi.org/10.1007/s10957-019-01591-y
