Abstract

We further develop the mathematical theory of causal interventions, extending earlier results of Korb, Twardy, Handfield, and Oppy (2005) and Spirtes, Glymour, and Scheines (2000). Some of the skepticism surrounding causal discovery has concerned the fact that observational data alone can radically underdetermine the best explanatory causal model, with the true causal model appearing inferior to a simpler, faithful model (cf. Cartwright, 2001). Our results show that experimental data, together with some plausible assumptions, can reduce the space of viable explanatory causal models to one.

Notes

Technically speaking, this is called a d-connecting path. We will provide the formal definition in the next section.

We will assume that our interventions have some positive probability of affecting the target variable, of course; but then via our plausibility assumptions below we shall assume the same for every parent variable.

Note that although considering the fully augmented model implies a consideration of a joint prior probability over the doubled variable space, we do not require joint interventions, but only independent interventions (as an inspection of the proof in the Appendix will reveal); nor do we restrict the particular prior distribution over any intervention variable, except to say that they must not be extreme distributions.

The terminology is changed somewhat to fit our current usage. For example, what we call statistical indistinguishability Spirtes et al. (2000) call strong statistical indistinguishability (which, except for a minimality condition, is the same thing); also, they do not write of augmenting models, but discuss “rigid indistinguishability”, which amounts to the same thing. SGS also write of probability distributions being faithful to models, etc., whereas, since the probabilities are furnished by reality and the models aim to represent them, we find their usage backwards.

In SGS terminology, this is an application of the idea of rigid distinguishability to models which are weakly indistinguishable, that is, having some probability distribution which they can both represent.

In saying this we ignore non-linear causal relationships, which can result in unfaithful isolated causal paths. In general, such things cannot be ignored; however, they do not pose any special difficulties for causal discovery (Korb et al., 2005).

To be sure, since we are limiting ourselves to Wright’s path models, this already implies causal sufficiency, as well as that the error terms are independently and positively distributed.

For those unprepared to acknowledge the dagginess of reality, we note explicitly that we are here introducing a technical language with a scope limited to the confines of this paper (as with implausibility).

Strictly speaking, the “English” version is in the language of probabilistic dependency, whereas d-separation is in the language of graph theory. The English rendition follows, however, given the standard assumption of the Markov property below (Definition 6).

By augmentation space we mean the space of models over the joint variables of the augmented model. This will include models which are not themselves augmented models, such as Chickering extensions of augmented models.

And, indeed, this unique model is discoverable in \(O(n^2)\) independence tests, in consequence of the proof of Theorem 3.

The problem of underdetermination of theory by data is that for any finite data set, there are normally many empirically distinct models that can fit it equally well. Further data may eliminate some competing models, but usually not all of them. So how can we be claiming to eliminate all of them here? The answer is partly that our model space is restricted: in particular, we do not allow models that include new, latent variables. But it is also partly that experimental data have great power. First, experiments can be designed to gather the right sort of data: as we showed, the right interventions can test the competing predictions of all the candidate models. Second, experiments can be designed to have the right sort of relationship to the system under investigation: genuinely independent and precisely targeted interventions mean that implausible models can be eliminated. Under these conditions the problem of underdetermination can be solved completely.

Positive means here that all path coefficients are non-zero; in the language of §3, the models are Type I plausible.

We thank a referee for noting this.

References

Cartwright, N. (2001). What is wrong with Bayes nets? The Monist, 84, 242–264.

Chickering, D. M. (1995). A transformational characterization of equivalent Bayesian network structures. In P. Besnard & S. Hanks (Eds.), 11th Conference on Uncertainty in AI (pp. 87–98). San Francisco.

Hesslow, G. (1976). Discussion: Two notes on the probabilistic approach to causality. Philosophy of Science, 43, 290–292.

Humphreys, P., & Freedman, D. (1996). The grand leap. The British Journal for the Philosophy of Science, 47, 113–118.

Korb, K. B., Hope, L. R., Nicholson, A. E., & Axnick, K. (2004). Varieties of causal intervention. In Pacific Rim International Conference on AI’04, pp. 322–331.

Korb, K. B., Twardy, C., Handfield, T., & Oppy, G. (2005). Causal reasoning with causal models. Technical Report Monash University.

Pearl, J. (2000). Causality: Models, reasoning and inference. New York: Cambridge University Press.

Spirtes, P., Glymour, C., & Scheines, R. (1993). Causation, prediction and search. Springer Verlag.

Spirtes, P., Glymour, C., & Scheines, R. (2000). Causation, prediction and search (2nd ed.). MIT Press.

Steel, D. (2004, August). Biological redundancy and the faithfulness condition. In Causality, uncertainty and ignorance: Third international summer school, Univ of Konstanz, Germany.

Verma, T. S., & Pearl, J. (1990). Equivalence and synthesis of causal models. In Proceedings of the sixth conference on uncertainty in AI, pp. 220–227. Morgan Kaufmann.

Woodward, J. (2003). Making things happen. Oxford.

Wright, S. (1934). The method of path coefficients. Annals of Mathematical Statistics, 5(3), 161–215.

Acknowledgements

We are grateful for a Monash Research Fund grant which helped to support this work. We thank Charles Twardy, Lucas Hope, Rodney O’Donnell and anonymous referees for helpful comments on this paper.

Appendix

Here we prove Theorem 3 for linear recursive path models with imperfect interventions. The proof assumes familiarity with path modeling and Bayesian networks.

Theorem 3

(Distinguishability under intervention) For any \(M_1(\theta_1)\), \(M_2(\theta_2)\) (which model the same space and are positive; see Footnote 13) such that \(M_1\neq M_2\), if \(P_{M_1(\theta_1)} = P_{M_2(\theta_2)}\), then under interventions \(P_{M^{\prime}_1(\theta_1)}\neq P_{M^{\prime}_2(\theta_2)}.\)

Proof

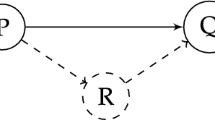

Since \(M_1\) and \(M_2\) differ structurally, for some pair of nodes X and Y one contains \(X\rightarrow Y\) while the other contains \(X\leftarrow Y\) or else X:Y (i.e., they are not directly connected). In either case, we can find a probabilistic dependency induced by one model that must fail relative to the other. To fix ideas, let us suppose the former holds for \(M_1\) and the latter for \(M_2\).

Case 1

\(M_2\) contains X:Y. Let the set of variables Z be the set of all variables in \(M^{\prime}_1\) (equivalently, \(M^{\prime}_2\)) except for \(\{X,I_Y,I_X\}\) (and so \(Y\in {\bf Z}\)). Then \(r_{I_{Y} X\cdot {\bf Z}}\neq 0\) in \(M^{\prime}_1(\theta_1)\) and \(r_{I_{Y} X\cdot {\bf Z}} = 0\) in \(M^{\prime}_2(\theta_2)\). This follows from Wright’s theory of path models, given that \(p_{YI_Y}p_{YX}\neq 0\) in \(M^{\prime}_1(\theta_1)\) and \(p_{YI_Y}p_{YX} = 0\) in \(M^{\prime}_2(\theta_2)\). (Given Z, there is only one d-connecting path between X and \(I_Y\) in \(M_1\) and none in \(M_2\).)

Case 2

\(M_2\) contains \(X\leftarrow Y\). Let the set of variables Z be the set of all variables in \(M^{\prime}_1\) except for \(\{X,I_{Y},I_{X}\}\) (and so \(Y\in {\bf Z}\)). Then \(r_{I_Y X\cdot {\bf Z}}\neq 0\) in \(M^{\prime}_1(\theta_1)\) and \(r_{I_Y X\cdot {\bf Z}}= 0\) in \(M^{\prime}_2(\theta_2)\). This follows from Wright’s theory of path models, given that \(p_{YI_Y}p_{YX}\neq 0\) in \(M^{\prime}_1(\theta_1)\). (As for Case 1, given Z, there is only one d-connecting path between X and \(I_Y\) in \(M_1\) and none in \(M_2\).) Similarly, letting Z be the set of all variables in \(M^{\prime}_1\) except for \(\{Y,I_Y,I_X\}\) (and so \(X\in{\bf Z}\)), \(r_{I_X Y\cdot {\bf Z}}\neq 0\) in \(M^{\prime}_2(\theta_2)\) and \(r_{I_X Y\cdot {\bf Z}} = 0\) in \(M^{\prime}_1(\theta_1)\). □
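The two cases can be checked numerically on the smallest possible example. The following is only an illustrative sketch, not part of the proof: it assumes two observed variables X and Y, intervention variables \(I_X\) and \(I_Y\) acting as extra parents (imperfect interventions), unit-variance independent errors, and arbitrarily chosen path coefficients (0.8 and 0.5). The helper names `implied_covariance` and `partial_corr` are hypothetical. The parameters are not tuned to make the observational distributions identical; the point is only the pattern of zero and non-zero partial correlations.

```python
import numpy as np

# Indices of the variables in the augmented models (illustrative naming).
X, Y, I_X, I_Y = range(4)

def implied_covariance(edges):
    """Population covariance of a linear recursive model v = B v + e.

    `edges` maps (parent, child) -> path coefficient. Errors are assumed
    independent with unit variance (an assumption made for this sketch).
    """
    B = np.zeros((4, 4))
    for (parent, child), coef in edges.items():
        B[child, parent] = coef
    A = np.linalg.inv(np.eye(4) - B)
    return A @ A.T

def partial_corr(cov, i, j, given):
    """Partial correlation of variables i and j given the variables in `given`."""
    idx = [i, j] + list(given)
    prec = np.linalg.inv(cov[np.ix_(idx, idx)])
    return -prec[0, 1] / np.sqrt(prec[0, 0] * prec[1, 1])

# Each intervention variable is an additional parent of its target.
interventions = {(I_X, X): 0.5, (I_Y, Y): 0.5}

m1 = implied_covariance({(X, Y): 0.8, **interventions})        # X -> Y
m2_case1 = implied_covariance(dict(interventions))             # X : Y
m2_case2 = implied_covariance({(Y, X): 0.8, **interventions})  # X <- Y

# Z = {Y}: every variable except X, I_Y and I_X.
r_m1 = partial_corr(m1, I_Y, X, [Y])
r_m2_case1 = partial_corr(m2_case1, I_Y, X, [Y])
r_m2_case2 = partial_corr(m2_case2, I_Y, X, [Y])

# Second half of Case 2, with Z = {X}.
s_m1 = partial_corr(m1, I_X, Y, [X])
s_m2_case2 = partial_corr(m2_case2, I_X, Y, [X])

print(r_m1, r_m2_case1, r_m2_case2, s_m1, s_m2_case2)
```

In this sketch `r_m1` and `s_m2_case2` are non-zero, while the other three values vanish up to floating-point error, matching the pattern of dependencies used in Cases 1 and 2.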

This implies, given perfect data, that distinguishability between any two distinct models of size n can be achieved with n independent interventions and \(O(n^2)\) conditional independence tests. Thus, the computational cost of finding such empirical distinctions is modest (see Footnote 14).
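One way to read the \(O(n^2)\) bound is as a loop over ordered pairs of variables, applying the test from the proof once per pair. The sketch below is an illustration under stated assumptions rather than the authors' procedure: "perfect data" is modelled by the exact population covariance of a linear recursive model with unit-variance errors, each variable \(V_k\) has one intervention parent \(I_k\) with an arbitrary coefficient of 0.5, and the function names and the tolerance `tol` are hypothetical.

```python
import numpy as np
from itertools import permutations

def augmented_covariance(B, strength=0.5):
    """Covariance of [V_0..V_{n-1}, I_0..I_{n-1}], with I_k -> V_k added.

    B[child, parent] holds the path coefficients of the unaugmented model.
    Unit-variance independent errors are assumed for this sketch.
    """
    n = B.shape[0]
    full = np.zeros((2 * n, 2 * n))
    full[:n, :n] = B
    full[:n, n:] = strength * np.eye(n)
    A = np.linalg.inv(np.eye(2 * n) - full)
    return A @ A.T

def partial_corr(cov, i, j, given):
    """Partial correlation of variables i and j given the variables in `given`."""
    idx = [i, j] + list(given)
    prec = np.linalg.inv(cov[np.ix_(idx, idx)])
    return -prec[0, 1] / np.sqrt(prec[0, 0] * prec[1, 1])

def recover_edges(cov, n, tol=1e-9):
    """Declare x -> y whenever I_y and x are dependent given Z.

    Z is every variable except x, I_x and I_y, as in the proof.
    Returns the recovered edge set and the number of tests performed.
    """
    edges, tests = set(), 0
    for x, y in permutations(range(n), 2):
        z = [v for v in range(2 * n) if v not in (x, n + x, n + y)]
        tests += 1
        if abs(partial_corr(cov, n + y, x, z)) > tol:
            edges.add((x, y))
    return edges, tests

# Illustrative three-variable model: V0 -> V1, V1 -> V2, V0 -> V2.
B = np.zeros((3, 3))
B[1, 0] = 0.8
B[2, 1] = 0.6
B[2, 0] = -0.4

edges, tests = recover_edges(augmented_covariance(B), n=3)
print(sorted(edges), tests)
```

The loop performs n(n-1) tests, one per ordered pair. With finite samples the exact-zero check would have to be replaced by a statistical test, which this sketch does not attempt.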

Cite this article

Korb, K.B., Nyberg, E. The power of intervention. Minds & Machines 16, 289–302 (2006). https://doi.org/10.1007/s11023-006-9040-4

DOI: https://doi.org/10.1007/s11023-006-9040-4